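Nginx Load Balancing

1. Nginx load balancing

nginx is commonly used as a reverse proxy in front of backend servers. This makes it easy to separate static from dynamic content and to balance load across backends, which can substantially increase the capacity of the service.

With static/dynamic separation, nginx serves static resources directly from its own published path while acting as a reverse proxy, so those requests never have to reach the backend servers.

Note, however, that the static files on the proxy must stay consistent with the application on the backends. You can keep them in sync automatically with Rsync, or use shared storage such as NFS or MFS.

The HTTP proxy module has many features; the most commonly used directives are proxy_pass and proxy_cache.

If you use proxy_cache and need to purge the cache for a specific URL, you have to integrate the third-party ngx_cache_purge module. It must be compiled in when nginx is built, for example: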

./configure --add-module=../ngx_cache_purge-1.0 ......

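nginx implements simple load balancing through the upstream module. An upstream block must be defined inside the http block.

An upstream block defines a list of servers. The default method is round-robin. If requests from the same visitor should always be handled by the same backend server, you can enable ip_hash, for example: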

upstream idfsoft.com {

ip_hash;

server 127.0.0.1:9080 weight=5;

server 127.0.0.1:8080 weight=5;

server 127.0.0.1:1111;

}

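Note: ip_hash chooses the backend by hashing the client address (for IPv4, the first three octets), and a client's IP can change, for example with dynamic IPs, proxies, or VPNs. If the selected server is unavailable, the request is passed to another server. So ip_hash cannot fully guarantee that the same client is always handled by the same server.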
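
To make the idea of a hashing key concrete, here is a minimal shell sketch of mapping a client to a backend by the first three octets of its address. It is an illustration only: the function names `ip_hash_key` and `pick_backend` are hypothetical, and `cksum` is a stand-in that does not reproduce nginx's internal hash function.

```shell
#!/usr/bin/env bash
# Illustration only: cksum is a stand-in, NOT nginx's real hash function.

# Print the hashing key for an IPv4 address: its first three octets.
ip_hash_key() {
  local ip=$1
  echo "${ip%.*}"
}

# Map a client IP to one of the given backends (hypothetical helper).
pick_backend() {
  local ip=$1; shift
  local -a backends=("$@")
  local sum
  sum=$(printf '%s' "$(ip_hash_key "$ip")" | cksum | cut -d' ' -f1)
  echo "${backends[sum % ${#backends[@]}]}"
}

# Two clients in the same /24 share a key, so they get the same backend.
pick_backend 192.168.169.10  192.168.169.142 192.168.169.145
pick_backend 192.168.169.200 192.168.169.142 192.168.169.145
```

Because only the first three octets feed the key, every client behind the same /24 network lands on the same backend, which is worth keeping in mind when many users share one address range.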

After defining the upstream, add the following to the server block:

server {

location / {

proxy_pass http://idfsoft.com;

}

}

1.1 Configuring nginx load balancing

Next, a simple experiment with nginx load balancing and reverse proxying.

Environment

| OS | IP | Role | Service to install |
|---|---|---|---|
| centos8 | 192.168.169.140 | Nginx load balancer | nginx |
| centos8 | 192.168.169.145 | Web server | apache |
| centos8 | 192.168.169.142 | Web server | nginx |

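The Nginx load balancer is installed from source. The two web servers have Nginx and Apache installed with yum, one on each.

For details on installing nginx from source, see the article "Nginx".

Installing with yum needs no further explanation.

1.1.1 Change the default home page on the web servers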

//Nginx

[root@web-nginx ~]# echo "Nginx" > /usr/share/nginx/html/index.html

[root@web-nginx ~]# systemctl restart nginx.service

//Apache

[root@web-apache ~]# echo "Apache" > /var/www/html/index.html

[root@web-apache ~]# systemctl restart httpd.service

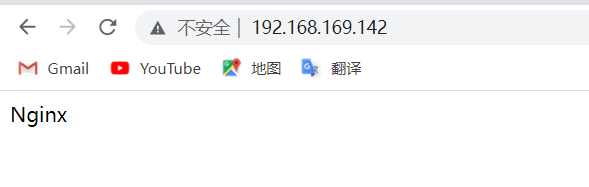

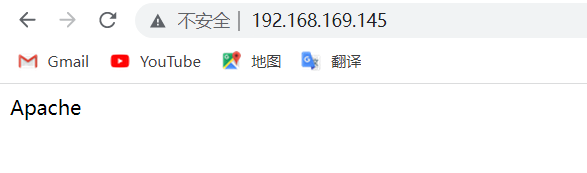

Check that the sites on both web servers are reachable.

1.1.2 Enable nginx load balancing and reverse proxying

[root@nginx ~]# vim /usr/local/nginx/conf/nginx.conf

upstream webserver {

server 192.168.169.142;

server 192.168.169.145;

}

//Add the following inside the server block

location / {

proxy_pass http://webserver;

}

[root@nginx ~]# systemctl reload nginx.service
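
Before reloading, it can help to validate the edited file first, so that a typo does not break the reload. The following is a minimal sketch, assuming the source-install prefix /usr/local/nginx used in this article. The `safe_reload` function and the `NGINX_BIN` variable are hypothetical names introduced for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reload nginx only if the configuration test passes.
# NGINX_BIN is an assumed variable; adjust the path to your installation.
safe_reload() {
  local nginx_bin=${NGINX_BIN:-/usr/local/nginx/sbin/nginx}
  # `nginx -t` parses the configuration and reports syntax errors
  if "$nginx_bin" -t; then
    systemctl reload nginx.service
  else
    echo "nginx configuration test failed; not reloading" >&2
    return 1
  fi
}
```

Calling `safe_reload` in place of the plain reload leaves the running configuration untouched whenever the test fails.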

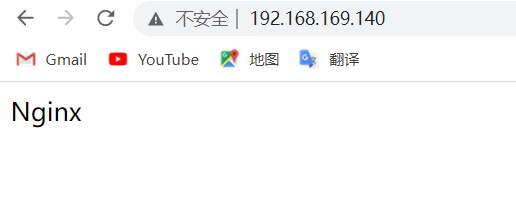

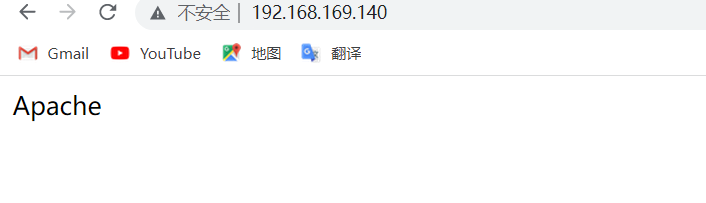

Verify

Enter the IP address of the nginx load balancer in a browser

Refresh the page
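
The same check can be scripted instead of refreshing a browser. This is a minimal sketch: `tally_responses` is a hypothetical helper that requests a URL several times and counts the distinct response bodies, and the `FETCH` override is an assumption added so the function can be tried without a live server.

```shell
#!/usr/bin/env bash
# Hypothetical helper: request URL N times and count each distinct response.
# FETCH defaults to `curl -s`; it can be overridden for dry runs.
tally_responses() {
  local url=$1 count=$2 i
  for (( i = 0; i < count; i++ )); do
    ${FETCH:-curl -s} "$url"
  done | sort | uniq -c
}

# Example (against the load balancer from this article):
#   tally_responses http://192.168.169.140 10
```

With the default round-robin method, the two backends should appear with roughly equal counts.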

//Edit the nginx configuration file on the load balancer and make the following changes

upstream webserver {

server 192.168.169.142 weight=3;

server 192.168.169.145;

}

[root@nginx ~]# systemctl reload nginx.service

//Verify the effect again

[root@nginx ~]# curl http://192.168.169.140

Nginx

[root@nginx ~]# curl http://192.168.169.140

Nginx

[root@nginx ~]# curl http://192.168.169.140

Nginx

[root@nginx ~]# curl http://192.168.169.140

Apache

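//The result shows 3 responses from nginx and 1 from apache. This is because weight=3 was added to the nginx host's entry in the configuration file. Put simply, that server receives about three requests for every one sent to a server with the default weight of 1 (the three requests are not necessarily consecutive). When a cluster includes older or lower-spec servers, you can give them a lower weight than the others to reduce their load.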
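
The 3:1 split can be reproduced with a small simulation. The sketch below implements a simplified smooth weighted round-robin, the general kind of algorithm nginx uses for weighted upstreams. It is not nginx's actual code, and the function name `swrr` and the `name:weight` argument format are assumptions made for this example.

```shell
#!/usr/bin/env bash
# Simplified smooth weighted round-robin (illustration, not nginx's code).
# Usage: swrr ROUNDS name:weight [name:weight ...]
swrr() {
  local rounds=$1; shift
  local -a names=() weights=() current=()
  local spec total=0 i best
  for spec in "$@"; do
    names+=("${spec%%:*}")
    weights+=("${spec##*:}")
    current+=(0)
    total=$(( total + ${spec##*:} ))
  done
  while (( rounds-- > 0 )); do
    best=0
    for i in "${!names[@]}"; do
      # every server gains its weight each round
      current[i]=$(( current[i] + weights[i] ))
      (( current[i] > current[best] )) && best=$i
    done
    # the chosen server pays back the total weight
    current[best]=$(( current[best] - total ))
    echo "${names[best]}"
  done
}

swrr 4 nginx:3 apache:1   # prints nginx, nginx, apache, nginx (one per line)
```

The order inside one cycle can differ from the curl transcript above; what matters is that three of every four picks go to the server with weight=3.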

//Modify the load balancer's configuration file again

upstream webserver {

ip_hash;

server 192.168.169.142 weight=3;

server 192.168.169.145;

}

[root@nginx ~]# systemctl reload nginx.service

//Verify

[root@nginx ~]# curl http://192.168.169.140

Nginx

[root@nginx ~]# curl http://192.168.169.140

Nginx

[root@nginx ~]# curl http://192.168.169.140

Nginx

[root@nginx ~]# curl http://192.168.169.140

Nginx

[root@nginx ~]# curl http://192.168.169.140

Nginx

[root@nginx ~]# curl http://192.168.169.140

Nginx

[root@nginx ~]# curl http://192.168.169.140

Nginx

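//No matter how many times you request, the response is always Nginx. This is due to ip_hash: once a client has been mapped to a backend, that same server keeps answering it, so every request reaches nginx. As mentioned earlier, this is not an absolute guarantee: if the client's IP changes or that server becomes unavailable, the client may be sent to a different server.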

2. Making the nginx load balancer highly available with Keepalived

Environment

This experiment builds on the previous one.

| OS | Role | Services to install | IP |
|---|---|---|---|
| centos8 | nginx load balancer, Keepalived-master | nginx, keepalived | 192.168.169.139 |
| centos8 | nginx load balancer, Keepalived-backup | nginx, keepalived | 192.168.169.140 |
| centos8 | Web server | nginx | 192.168.169.142 |
| centos8 | Web server | apache | 192.168.169.145 |

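The VIP in this experiment is 192.168.169.250.

Hosts 140, 142, and 145 were already set up in the previous environment, so we only need to install nginx and keepalived on 139, and keepalived on 140.

For installing nginx from source, see the article "Nginx".

//Edit the nginx configuration file on host 140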

upstream webserver {

server 192.168.169.142;

server 192.168.169.145;

}

//Copy the nginx configuration file from host 140 to host 139

[root@master ~]# scp root@192.168.169.140:/usr/local/nginx/conf/nginx.conf /usr/local/nginx/conf/nginx.conf

//Restart nginx

[root@master conf]# systemctl restart nginx.service

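//Note: nginx does not need to be running on the Keepalived backup node. If it is running, access through the VIP may fail later. For now it can be left running temporarily to verify that nginx load balancing works on both nodes.

//Verify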

[root@master ~]# curl http://192.168.169.139

Nginx

[root@master ~]# curl http://192.168.169.139

Apache

[root@backup ~]# curl http://192.168.169.140

Nginx

[root@backup ~]# curl http://192.168.169.140

Apache

2.1 Install Keepalived

Keepalived-master

[root@master ~]# dnf -y install keepalived

Keepalived-backup

[root@backup ~]# dnf -y install keepalived

2.2 Configure Keepalived

Keepalived-master

[root@master ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb01

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass zzd123...

}

virtual_ipaddress {

192.168.169.250

}

}

virtual_server 192.168.169.250 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.169.139 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.169.140 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@master ~]# systemctl enable --now keepalived.service

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

Keepalived-backup

[root@backup ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb02

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass zzd123...

}

virtual_ipaddress {

192.168.169.250

}

}

virtual_server 192.168.169.250 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.169.139 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.169.140 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@backup ~]# systemctl enable --now keepalived.service

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

[root@backup ~]# systemctl disable --now nginx.service

Removed /etc/systemd/system/multi-user.target.wants/nginx.service.

2.3 Check which host holds the VIP

Keepalived-master

[root@master ~]# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f1:77:ce brd ff:ff:ff:ff:ff:ff

inet 192.168.169.139/24 brd 192.168.169.255 scope global dynamic noprefixroute ens33

valid_lft 1729sec preferred_lft 1729sec

inet 192.168.169.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::ac0:aa7e:f1b9:248e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Keepalived-backup

[root@backup ~]# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:06:43:16 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.140/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe06:4316/64 scope link

valid_lft forever preferred_lft forever

The output shows that the VIP is on the master host, 139. This is expected: the master has the higher priority in the Keepalived configuration (100 versus 90), so it takes the VIP.
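
When this check needs to be scripted, the `ip` output can be tested for the VIP directly. The following is a minimal sketch. `has_vip` is a hypothetical helper that reads `ip addr` style output on standard input and succeeds if the address is listed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given IPv4 address appears as an
# `inet` entry in `ip addr` output read from standard input.
has_vip() {
  local vip=$1
  # -F matches the text literally, so the dots are not regex wildcards
  grep -Fq "inet ${vip}/"
}

# Example on a node from this article:
#   ip addr show ens33 | has_vip 192.168.169.250 && echo "this node holds the VIP"
```

Matching on `inet <address>/` avoids false positives from addresses that merely share a prefix with the VIP.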

Verify the result

Access the VIP in a browser

Refresh the page

Simulate a failure of the master node by stopping the Keepalived service and nginx

[root@master ~]# systemctl stop keepalived.service

[root@master ~]# systemctl stop nginx.service

Check whether the VIP has appeared on the backup node

[root@backup ~]# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:06:43:16 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.140/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.169.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe06:4316/64 scope link

valid_lft forever preferred_lft forever

Start nginx on the backup node

[root@backup ~]# systemctl start nginx.service

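Access the VIP again

Refresh the page

As you can see, even if one of the nginx load balancers goes down, access is not affected. This is the high-availability setup for nginx load balancing.

Start Keepalived on the master node again, start nginx there, and stop nginx on the backup node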

[root@master ~]# systemctl start keepalived.service

[root@master ~]# systemctl start nginx.service

[root@backup ~]# systemctl stop nginx.service

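//Check whether the VIP has returned to the master node. Preemption is enabled by default, so once Keepalived on the master node recovers, it takes back the master role.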

[root@master ~]# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f1:77:ce brd ff:ff:ff:ff:ff:ff

inet 192.168.169.139/24 brd 192.168.169.255 scope global dynamic noprefixroute ens33

valid_lft 1642sec preferred_lft 1642sec

inet 192.168.169.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::ac0:aa7e:f1b9:248e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

2.4 Write scripts to monitor the state of Keepalived and nginx

Write the scripts on the master node

[root@master ~]# mkdir /scripts

[root@master ~]# cd /scripts/

[root@master scripts]# touch check_nginx.sh

[root@master scripts]# chmod +x check_nginx.sh

[root@master scripts]# vim check_nginx.sh

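#!/bin/bash

# Count running nginx processes, ignoring the grep commands and this script itself

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

# If nginx is not running, stop keepalived so that the VIP is released

if [ "$nginx_status" -lt 1 ];then

systemctl stop keepalived

fi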
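
To see what the pipeline in this script counts, the same filters can be run against sample `ps -ef` output. The sketch below is for illustration only: `count_nginx_procs` is a hypothetical wrapper, and the process lines are made up.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the filters used in check_nginx.sh.
# stdin: `ps -ef` style lines; $1: script name to exclude from the count.
# Note: \b (word boundary) is a GNU grep extension.
count_nginx_procs() {
  grep -Ev "grep|$1" | grep -c '\bnginx\b'
}

# Made-up sample: one master, one worker, plus the grep and script lines
# that the first filter is meant to drop.
sample='root   1001    1 0 10:00 ? 00:00:00 nginx: master process /usr/local/nginx/sbin/nginx
nginx  1002 1001 0 10:00 ? 00:00:00 nginx: worker process
root   1100  900 0 10:01 pts/0 00:00:00 grep nginx
root   1101  900 0 10:01 pts/0 00:00:00 /bin/bash /scripts/check_nginx.sh'

printf '%s\n' "$sample" | count_nginx_procs check_nginx.sh   # prints 2
```

The first filter matters because both the `grep nginx` command and the script's own name contain the string being searched for.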

[root@master scripts]# touch notify.sh

[root@master scripts]# chmod +x notify.sh

[root@master scripts]# vim notify.sh

#!/bin/bash

VIP=$2

case "$1" in

master)

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

if [ $nginx_status -lt 1 ];then

systemctl start nginx

fi

;;

backup)

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

if [ $nginx_status -gt 0 ];then

systemctl stop nginx

fi

;;

*)

echo "Usage:$0 master|backup VIP"

;;

esac

Write the script on the backup node

[root@backup ~]# mkdir /scripts

[root@backup ~]# cd /scripts/

[root@backup scripts]# touch notify.sh

[root@backup scripts]# chmod +x notify.sh

[root@backup scripts]# vim notify.sh

#!/bin/bash

VIP=$2

case "$1" in

master)

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

if [ $nginx_status -lt 1 ];then

systemctl start nginx

fi

;;

backup)

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

if [ $nginx_status -gt 0 ];then

systemctl stop nginx

fi

;;

*)

echo "Usage:$0 master|backup VIP"

;;

esac

2.5 Add the monitoring scripts to the Keepalived configuration

Keepalived-master

//Edit the /etc/keepalived/keepalived.conf file

! Configuration File for keepalived

global_defs {

router_id lb01

}

vrrp_script nginx_check { # add these five lines

script "/scripts/check_nginx.sh"

interval 1

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass zzd123...

}

virtual_ipaddress {

192.168.169.250

}

track_script { # add these four lines (through notify_master)

nginx_check

}

notify_master "/scripts/notify.sh master"

}

virtual_server 192.168.169.250 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.169.139 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.169.140 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

//Restart Keepalived

[root@master ~]# systemctl restart keepalived.service

Keepalived-backup

//Edit the /etc/keepalived/keepalived.conf file

! Configuration File for keepalived

global_defs {

router_id lb02

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass zzd123...

}

virtual_ipaddress {

192.168.169.250

}

notify_master "/scripts/notify.sh master" # add these two lines

notify_backup "/scripts/notify.sh backup"

}

virtual_server 192.168.169.250 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.169.139 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.169.140 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

//Restart Keepalived

[root@backup ~]# systemctl restart keepalived.service

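With the scripts and configuration above, when nginx on the master node goes down, Keepalived is stopped automatically and the VIP is released. When Keepalived on the backup node becomes master, it starts nginx automatically; when it switches from master back to backup, it stops nginx automatically. On the master node, the notify script starts nginx only when the node takes the master role.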
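
One detail about `weight -20` is worth spelling out. In Keepalived, a negative weight on a `vrrp_script` lowers the instance priority while the script exits non-zero. The check script in this article stops Keepalived instead of signalling failure through its exit code, so failover here comes from the stop rather than from the weight. The arithmetic of the weight-based approach can be sketched as follows. `effective_priority` is a hypothetical helper for illustration, not a Keepalived command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute the priority Keepalived would advertise.
# Usage: effective_priority BASE WEIGHT SCRIPT_EXIT_CODE
effective_priority() {
  local base=$1 weight=$2 script_rc=$3
  if (( weight < 0 && script_rc != 0 )); then
    # negative weight: priority drops while the check fails
    echo $(( base + weight ))
  elif (( weight > 0 && script_rc == 0 )); then
    # positive weight: priority rises while the check succeeds
    echo $(( base + weight ))
  else
    echo "$base"
  fi
}

effective_priority 100 -20 1   # prints 80, below the backup's priority of 90
```

With the priorities used here (100 on the master, 90 on the backup), a failing check with `weight -20` would be enough for the backup to win the election, provided the script actually returns a non-zero exit code.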

2.6 Verification

When everything on the master node is working normally

//Check the IP addresses: the VIP is present

[root@master ~]# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f1:77:ce brd ff:ff:ff:ff:ff:ff

inet 192.168.169.139/24 brd 192.168.169.255 scope global dynamic noprefixroute ens33

valid_lft 1629sec preferred_lft 1629sec

inet 192.168.169.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::ac0:aa7e:f1b9:248e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

//Check the listening ports: nginx is running normally

[root@master ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

//Access the VIP

[root@master ~]# curl http://192.168.169.250

Nginx

[root@master ~]# curl http://192.168.169.250

Apache

Simulate a failure of the nginx service on the master node

[root@master ~]# systemctl stop nginx.service

//Check the Keepalived status on the master node: Keepalived has been stopped

[root@master ~]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: inactive (dead) since Mon 2022-10-17 22:23:03 CST; 25s ago

Process: 37092 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 37094 (code=exited, status=0/SUCCESS)

//Check the IP addresses again: the VIP is gone as well

[root@master ~]# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f1:77:ce brd ff:ff:ff:ff:ff:ff

inet 192.168.169.139/24 brd 192.168.169.255 scope global dynamic noprefixroute ens33

valid_lft 1467sec preferred_lft 1467sec

inet6 fe80::ac0:aa7e:f1b9:248e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

//Switch to the backup node and check its IP addresses: the VIP has moved here, which means the backup node has become the master

[root@backup ~]# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:06:43:16 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.140/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.169.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe06:4316/64 scope link

valid_lft forever preferred_lft forever

//Check the listening ports: nginx has been started automatically

[root@backup ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:111 0.0.0.0:*

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:111 [::]:*

LISTEN 0 128 [::]:22 [::]:*

//Access the VIP: it responds normally

[root@backup ~]# curl http://192.168.169.250

Nginx

[root@backup ~]# curl http://192.168.169.250

Apache

Start nginx and Keepalived on the master node

[root@master ~]# systemctl start nginx.service

[root@master ~]# systemctl start keepalived.service

//Check the listening ports on the backup node: nginx has been stopped automatically

[root@backup ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:111 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:111 [::]:*

LISTEN 0 128 [::]:22 [::]:*

//Check the IP addresses: the VIP is no longer here; it has moved to the master node

[root@backup ~]# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:06:43:16 brd ff:ff:ff:ff:ff:ff

inet 192.168.169.140/24 brd 192.168.169.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe06:4316/64 scope link

valid_lft forever preferred_lft forever

//Check the IP addresses on the master node

[root@master ~]# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f1:77:ce brd ff:ff:ff:ff:ff:ff

inet 192.168.169.139/24 brd 192.168.169.255 scope global dynamic noprefixroute ens33

valid_lft 1050sec preferred_lft 1050sec

inet 192.168.169.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::ac0:aa7e:f1b9:248e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

//Access the VIP

[root@master ~]# curl http://192.168.169.250

Nginx

[root@master ~]# curl http://192.168.169.250

Apache
