Convolutional Neural Network (CNN) with Keras

1 Introduction

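This article uses MNIST handwritten-digit classification as an example to show how to build a CNN model with one-dimensional and two-dimensional convolutions. For a description of the MNIST dataset, see "Implementing MNIST Classification with TensorFlow". The experiments mainly use the Conv1D, Conv2D, MaxPooling1D and MaxPooling2D layers, whose parameters are described below: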

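(1) Conv1D

Conv1D(filters, kernel_size, strides=1, padding='valid', dilation_rate=1, activation=None)

- filters: number of convolution kernels, i.e. the number of output channels

- kernel_size: length of the convolution window along the step axis

- strides: how far the window moves at each step along the sequence

- padding: how the end of the input is handled when it does not fill a whole window. 'valid' adds no padding, so incomplete windows are dropped; 'same' zero-pads the input (evenly on both ends, with any extra zero at the end) so that the output length is ceil(input_length / strides)

- dilation_rate: dilation (atrous) rate, i.e. the spacing between the input elements read by adjacent kernel taps; 1 gives an ordinary convolution

- activation: activation function, e.g. 'sigmoid', 'tanh' or 'relu'; the default None applies no activation

Note: when this layer is the first layer of the model, pass the input_shape argument. For example, input_shape=(10, 128) describes a time series of 10 time steps with 128 features per step.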
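The following pure-Python sketch makes kernel_size, strides, padding and dilation_rate concrete for one input channel and one filter, with no bias and no activation. It is an illustration, not Keras's implementation. The function names are hypothetical. The 'same' rule (split the zeros evenly, any extra one on the right) is assumed to mirror TensorFlow's behavior. Like Keras, it computes a cross-correlation, so the kernel is not flipped.

```python
import math


def conv1d_output_length(n, kernel_size, strides=1, padding="valid", dilation_rate=1):
    """Output length of a Keras-style 1D convolution over n input steps (sketch)."""
    span = (kernel_size - 1) * dilation_rate + 1  # input elements covered by the dilated kernel
    if padding == "valid":
        return max((n - span) // strides + 1, 0)  # only windows that fit completely
    if padding == "same":
        return math.ceil(n / strides)  # zero padding keeps ceil(n / strides) outputs
    raise ValueError("padding must be 'valid' or 'same'")


def conv1d(x, w, strides=1, padding="valid", dilation_rate=1):
    """Single-channel, single-filter 1D cross-correlation (no bias, no activation)."""
    k, n = len(w), len(x)
    span = (k - 1) * dilation_rate + 1
    out_len = conv1d_output_length(n, k, strides, padding, dilation_rate)
    if padding == "same":
        # Total zeros needed so the last window fits; split evenly, extra one on the right
        total = max((out_len - 1) * strides + span - n, 0)
        left = total // 2
        x = [0] * left + list(x) + [0] * (total - left)
    out = []
    for i in range(out_len):
        start = i * strides
        # Adjacent kernel taps read inputs that are dilation_rate positions apart
        out.append(sum(w[j] * x[start + j * dilation_rate] for j in range(k)))
    return out


x = [1, 2, 3, 4, 5, 6, 7]
w = [1, 0, -1]
print(conv1d(x, w))                                 # [-2, -2, -2, -2, -2]
print(conv1d(x, w, dilation_rate=2))                # [-4, -4, -4]
print(conv1d(x, w, strides=2, padding="same"))      # [-2, -2, -2, 6]
print(conv1d_output_length(28, 5, padding="same"))  # 28
```

With dilation_rate=2 the same three weights span five inputs, so the receptive field grows without adding parameters. The last 'same' output (6) comes from a window that overlaps the zero padding.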

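(2) Conv2D

Conv2D(filters, kernel_size, strides=(1, 1), padding='valid', dilation_rate=(1, 1), activation=None)

- filters: number of convolution kernels, i.e. the number of output channels

- kernel_size: height and width of the convolution window

- strides: how far the window moves at each step in the vertical and horizontal directions

- padding: 'valid' adds no padding, so windows that do not fit at the bottom or right edge are dropped; 'same' zero-pads each spatial dimension so that its output size is ceil(input_size / stride)

- dilation_rate: dilation (atrous) rate in the vertical and horizontal directions, i.e. the spacing between the input elements read by adjacent kernel taps

- activation: activation function, e.g. 'sigmoid', 'tanh' or 'relu'; the default None applies no activation

Note: when this layer is the first layer of the model, pass the input_shape argument. For example, input_shape=(128, 128, 3) describes a 128×128 RGB color image (in the default channels_last layout).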
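Each Conv2D filter has kernel_height × kernel_width × input_channels weights plus one bias. Each spatial dimension follows the same length rule as in the 1D case. The sketch below encodes these rules in plain Python. The helper names are hypothetical and the default channels_last layout is assumed. The examples use the 128×128 RGB input from the note above.

```python
import math


def conv2d_output_shape(height, width, filters, kernel_size, strides=(1, 1),
                        padding="valid", dilation_rate=(1, 1)):
    """(rows, cols, channels) produced by a Keras-style Conv2D, channels_last layout (sketch)."""
    dims = []
    for n, k, s, d in zip((height, width), kernel_size, strides, dilation_rate):
        span = (k - 1) * d + 1  # rows or columns covered by the dilated kernel
        if padding == "same":
            dims.append(math.ceil(n / s))
        elif padding == "valid":
            dims.append(max((n - span) // s + 1, 0))
        else:
            raise ValueError("padding must be 'valid' or 'same'")
    return (dims[0], dims[1], filters)


def conv2d_param_count(in_channels, filters, kernel_size, use_bias=True):
    """Each filter has kh * kw * in_channels weights, plus one bias per filter."""
    kh, kw = kernel_size
    return kh * kw * in_channels * filters + (filters if use_bias else 0)


print(conv2d_output_shape(128, 128, 32, (3, 3)))                        # (126, 126, 32)
print(conv2d_output_shape(128, 128, 32, (3, 3), padding="same"))        # (128, 128, 32)
print(conv2d_output_shape(128, 128, 32, (3, 3), strides=(2, 2)))        # (63, 63, 32)
print(conv2d_output_shape(128, 128, 32, (3, 3), dilation_rate=(2, 2)))  # (124, 124, 32)
print(conv2d_param_count(3, 32, (3, 3)))                                # 896
```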

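(3) MaxPooling1D

MaxPooling1D(pool_size=2, strides=None, padding='valid')

- pool_size: length of the pooling window

- strides: how far the window moves at each step; the default None means strides = pool_size, so windows do not overlap

- padding: 'valid' adds no padding, so an incomplete window at the end is dropped; 'same' pads the input so that the output length is ceil(input_length / strides)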
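A pure-Python sketch of MaxPooling1D on one channel shows the effect of the default strides=None and of the two padding modes. The helper name is hypothetical. It treats padded positions as absent, so they never win the max. This is assumed to match what TensorFlow does for 'same' max pooling.

```python
import math


def max_pool1d(x, pool_size=2, strides=None, padding="valid"):
    """Keras-style 1D max pooling over a single channel (sketch)."""
    strides = pool_size if strides is None else strides  # default: non-overlapping windows
    n = len(x)
    if padding == "valid":
        out_len, left = max((n - pool_size) // strides + 1, 0), 0
    elif padding == "same":
        out_len = math.ceil(n / strides)
        total = max((out_len - 1) * strides + pool_size - n, 0)
        left = total // 2
    else:
        raise ValueError("padding must be 'valid' or 'same'")
    out = []
    for i in range(out_len):
        start = i * strides - left
        # Padded positions fall outside 0..n-1 and are simply skipped
        window = [x[j] for j in range(start, start + pool_size) if 0 <= j < n]
        out.append(max(window))
    return out


x = [1, 3, 2, 5, 4, 7, 6]
print(max_pool1d(x))                  # [3, 5, 7]  (the trailing 6 is dropped)
print(max_pool1d(x, padding="same"))  # [3, 5, 7, 6]
print(max_pool1d(x, strides=1))       # [3, 3, 5, 5, 7, 7]
```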

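(4) MaxPooling2D

MaxPooling2D(pool_size=(2, 2), strides=None, padding='valid')

- pool_size: height and width of the pooling window

- strides: how far the window moves at each step in the vertical and horizontal directions; the default None means strides = pool_size

- padding: 'valid' adds no padding, so incomplete windows at the bottom or right edge are dropped; 'same' pads each spatial dimension so that its output size is ceil(input_size / stride)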
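The 2D case applies the same idea along both rows and columns. Here is a minimal sketch for 'valid' padding on a single-channel image stored as a list of rows. The helper name and the 4×4 example image are hypothetical.

```python
def max_pool2d(img, pool_size=(2, 2), strides=None):
    """'valid'-padding 2D max pooling over a single-channel image given as a list of rows."""
    ph, pw = pool_size
    sh, sw = pool_size if strides is None else strides  # default: non-overlapping windows
    out_h = max((len(img) - ph) // sh + 1, 0)
    out_w = max((len(img[0]) - pw) // sw + 1, 0)
    return [
        [max(img[r * sh + i][c * sw + j] for i in range(ph) for j in range(pw))
         for c in range(out_w)]
        for r in range(out_h)
    ]


img = [
    [1, 2, 0, 1],
    [3, 4, 1, 0],
    [0, 1, 5, 6],
    [2, 1, 7, 8],
]
print(max_pool2d(img))                  # [[4, 1], [2, 8]]
print(max_pool2d(img, strides=(1, 1)))  # [[4, 4, 1], [4, 5, 6], [2, 7, 8]]
```

With the default strides, each 2×2 block is reduced to its largest value, which halves both height and width.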

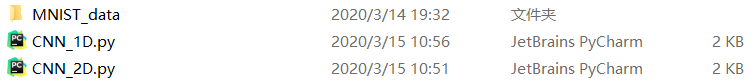

笔者工作空间如下:

Code resources: see "Implementing MNIST Classification with 1D and 2D Convolutions".

2 One-Dimensional Convolution

CNN_1D.py

```python
# Requires TensorFlow 1.x: the tensorflow.examples.tutorials module was removed in TensorFlow 2.x
from tensorflow.examples.tutorials.mnist import input_data
from keras.models import Sequential
from keras.layers import Conv1D, MaxPooling1D, Flatten, Dense

# Load the data
def read_data(path):
    mnist = input_data.read_data_sets(path, one_hot=True)
    # Each image becomes a sequence of 28 time steps (rows) with 28 features (columns)
    train_x, train_y = mnist.train.images.reshape(-1, 28, 28), mnist.train.labels
    valid_x, valid_y = mnist.validation.images.reshape(-1, 28, 28), mnist.validation.labels
    test_x, test_y = mnist.test.images.reshape(-1, 28, 28), mnist.test.labels
    return train_x, train_y, valid_x, valid_y, test_x, test_y

# Sequential model
def CNN_1D(train_x, train_y, valid_x, valid_y, test_x, test_y):
    # Build the model
    model = Sequential()
    model.add(Conv1D(input_shape=(28, 28), filters=16, kernel_size=5, padding='same', activation='relu'))
    model.add(MaxPooling1D(pool_size=2, padding='same'))  # max pooling
    model.add(Conv1D(filters=32, kernel_size=3, padding='same', activation='relu'))
    model.add(MaxPooling1D(pool_size=2, padding='same'))  # max pooling
    model.add(Flatten())  # flatten
    model.add(Dense(10, activation='softmax'))
    # Inspect the network structure
    model.summary()
    # Compile the model
    model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
    # Train the model (epochs replaces the deprecated Keras 1 argument nb_epoch)
    model.fit(train_x, train_y, batch_size=500, epochs=20, verbose=2, validation_data=(valid_x, valid_y))
    # Evaluate the model on the test set
    pre = model.evaluate(test_x, test_y, batch_size=500, verbose=2)
    print('test_loss:', pre[0], '- test_acc:', pre[1])

train_x, train_y, valid_x, valid_y, test_x, test_y = read_data('MNIST_data')
CNN_1D(train_x, train_y, valid_x, valid_y, test_x, test_y)
```
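In CNN_1D each image is reshaped to (28, 28) and read as a sequence of 28 time steps (the pixel rows), each with 28 features (the pixel columns). The kernels therefore slide only down the rows and always cover the full width. The sketch below mimics that reshape for one flattened image, using hypothetical helper names and pure Python. It also traces the (steps, channels) shape through the layers under the 'same'-padding rules from Section 1. The result should agree with the Output Shape column reported by model.summary().

```python
import math


def flat_to_sequence(flat, steps=28, features=28):
    """One image's counterpart of reshape(-1, 28, 28): 784 pixels -> 28 rows of 28."""
    assert len(flat) == steps * features
    return [flat[r * features:(r + 1) * features] for r in range(steps)]


def trace_cnn_1d(steps=28, features=28):
    """Follow (steps, channels) through the CNN_1D layers, all with padding='same'."""
    layers = [("Conv1D", 16), ("MaxPooling1D", 2), ("Conv1D", 32), ("MaxPooling1D", 2)]
    length, channels = steps, features
    shapes = []
    for name, value in layers:
        if name == "Conv1D":
            channels = value  # stride 1 + 'same': length kept, channels = filters
        else:
            length = math.ceil(length / value)  # pool_size 2 + 'same': ceil(length / 2)
        shapes.append((length, channels))
    return shapes, length * channels  # second value: size of the Flatten output


seq = flat_to_sequence(list(range(784)))
print(len(seq), len(seq[0]))  # 28 28
print(trace_cnn_1d())         # ([(28, 16), (14, 16), (14, 32), (7, 32)], 224)
```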

Output shape of each layer:

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv1d_1 (Conv1D)            (None, 28, 16)            2256
_________________________________________________________________
max_pooling1d_1 (MaxPooling1 (None, 14, 16)            0
_________________________________________________________________
conv1d_2 (Conv1D)            (None, 14, 32)            1568
_________________________________________________________________
max_pooling1d_2 (MaxPooling1 (None, 7, 32)             0
_________________________________________________________________
flatten_2 (Flatten)          (None, 224)               0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                2250
=================================================================
Total params: 6,074
Trainable params: 6,074
Non-trainable params: 0
```
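The Param # column can be checked by hand. A Conv1D layer has kernel_size × input_channels weights per filter plus one bias per filter. In the first layer the 28 pixel columns act as the input channels. A Dense layer has one weight per input-output pair plus one bias per unit. The helper names below are hypothetical.

```python
def conv1d_params(kernel_size, in_channels, filters):
    """kernel_size * in_channels weights per filter, plus one bias per filter."""
    return kernel_size * in_channels * filters + filters


def dense_params(in_features, units):
    """One weight per input-output pair, plus one bias per unit."""
    return in_features * units + units


params = {
    "conv1d_1": conv1d_params(5, 28, 16),  # 28 pixel columns act as input channels
    "conv1d_2": conv1d_params(3, 16, 32),
    "dense_2": dense_params(7 * 32, 10),   # Flatten output: 7 steps x 32 channels = 224
}
print(params)                # {'conv1d_1': 2256, 'conv1d_2': 1568, 'dense_2': 2250}
print(sum(params.values()))  # 6074
```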

Training results:

```
Epoch 18/20
 - 1s - loss: 0.0659 - acc: 0.9803 - val_loss: 0.0654 - val_acc: 0.9806
Epoch 19/20
 - 1s - loss: 0.0627 - acc: 0.9809 - val_loss: 0.0638 - val_acc: 0.9834
Epoch 20/20
 - 1s - loss: 0.0601 - acc: 0.9819 - val_loss: 0.0645 - val_acc: 0.9828
test_loss: 0.06519819456152617 - test_acc: 0.9790999978780747
```

3 Two-Dimensional Convolution

CNN_2D.py

```python
# Requires TensorFlow 1.x: the tensorflow.examples.tutorials module was removed in TensorFlow 2.x
from tensorflow.examples.tutorials.mnist import input_data
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

# Load the data
def read_data(path):
    mnist = input_data.read_data_sets(path, one_hot=True)
    # Each image becomes a 28x28 grid with a single (grayscale) channel
    train_x, train_y = mnist.train.images.reshape(-1, 28, 28, 1), mnist.train.labels
    valid_x, valid_y = mnist.validation.images.reshape(-1, 28, 28, 1), mnist.validation.labels
    test_x, test_y = mnist.test.images.reshape(-1, 28, 28, 1), mnist.test.labels
    return train_x, train_y, valid_x, valid_y, test_x, test_y

# Sequential model
def CNN_2D(train_x, train_y, valid_x, valid_y, test_x, test_y):
    # Build the model
    model = Sequential()
    model.add(Conv2D(input_shape=(28, 28, 1), filters=16, kernel_size=(5, 5), padding='same', activation='relu'))
    model.add(MaxPooling2D(pool_size=(2, 2), padding='same'))  # max pooling
    model.add(Conv2D(filters=32, kernel_size=(3, 3), padding='same', activation='relu'))
    model.add(MaxPooling2D(pool_size=(2, 2), padding='same'))  # max pooling
    model.add(Flatten())  # flatten
    model.add(Dense(10, activation='softmax'))
    # Inspect the network structure
    model.summary()
    # Compile the model
    model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
    # Train the model (epochs replaces the deprecated Keras 1 argument nb_epoch)
    model.fit(train_x, train_y, batch_size=500, epochs=20, verbose=2, validation_data=(valid_x, valid_y))
    # Evaluate the model on the test set
    pre = model.evaluate(test_x, test_y, batch_size=500, verbose=2)
    print('test_loss:', pre[0], '- test_acc:', pre[1])

train_x, train_y, valid_x, valid_y, test_x, test_y = read_data('MNIST_data')
CNN_2D(train_x, train_y, valid_x, valid_y, test_x, test_y)
```
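Under the default channels_last layout, Conv2D expects each sample as (height, width, channels). The grayscale MNIST images therefore get an explicit channel axis of size 1 via reshape(-1, 28, 28, 1). Each pooling layer then halves both spatial dimensions. The sketch below mimics that reshape for one image with nested lists and traces the (rows, cols, channels) shape through the layers. It uses pure Python and hypothetical helper names. The trace should agree with the Output Shape column reported by model.summary().

```python
import math


def flat_to_image(flat, height=28, width=28, channels=1):
    """One image's counterpart of reshape(-1, 28, 28, 1), as nested lists [row][col][channel]."""
    assert len(flat) == height * width * channels
    return [
        [flat[(r * width + c) * channels:(r * width + c + 1) * channels] for c in range(width)]
        for r in range(height)
    ]


def trace_cnn_2d(height=28, width=28, channels=1):
    """Follow (rows, cols, channels) through the CNN_2D layers, all with padding='same'."""
    layers = [("Conv2D", 16), ("MaxPooling2D", 2), ("Conv2D", 32), ("MaxPooling2D", 2)]
    shapes = []
    for name, value in layers:
        if name == "Conv2D":
            channels = value  # stride 1 + 'same': spatial size kept, channels = filters
        else:
            height, width = math.ceil(height / value), math.ceil(width / value)
        shapes.append((height, width, channels))
    return shapes, height * width * channels  # second value: size of the Flatten output


img = flat_to_image(list(range(784)))
print(len(img), len(img[0]), img[1][2])  # 28 28 [30]
print(trace_cnn_2d())  # ([(28, 28, 16), (14, 14, 16), (14, 14, 32), (7, 7, 32)], 1568)
```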

Output shape of each layer:

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 28, 28, 16)        416
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 14, 14, 16)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 14, 14, 32)        4640
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 7, 7, 32)          0
_________________________________________________________________
flatten_1 (Flatten)          (None, 1568)              0
_________________________________________________________________
dense_1 (Dense)              (None, 10)                15690
=================================================================
Total params: 20,746
Trainable params: 20,746
Non-trainable params: 0
```
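The same hand check works for the 2D model. A Conv2D layer has kernel_height × kernel_width × input_channels weights per filter plus one bias. The Dense layer now receives 7 × 7 × 32 = 1568 inputs. The helper names below are hypothetical.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """kh * kw * in_channels weights per filter, plus one bias per filter."""
    kh, kw = kernel_size
    return kh * kw * in_channels * filters + filters


def dense_params(in_features, units):
    """One weight per input-output pair, plus one bias per unit."""
    return in_features * units + units


params = {
    "conv2d_1": conv2d_params((5, 5), 1, 16),  # one grayscale input channel
    "conv2d_2": conv2d_params((3, 3), 16, 32),
    "dense_1": dense_params(7 * 7 * 32, 10),   # Flatten output: 1568 values
}
print(params)                # {'conv2d_1': 416, 'conv2d_2': 4640, 'dense_1': 15690}
print(sum(params.values()))  # 20746
```

Compared with the 1D model's 6,074 parameters, most of the increase sits in the final Dense layer. This is because Flatten now produces 1,568 values instead of 224.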

Training results:

```
Epoch 18/20
 - 11s - loss: 0.0290 - acc: 0.9911 - val_loss: 0.0480 - val_acc: 0.9872
Epoch 19/20
 - 11s - loss: 0.0284 - acc: 0.9913 - val_loss: 0.0475 - val_acc: 0.9860
Epoch 20/20
 - 11s - loss: 0.0258 - acc: 0.9921 - val_loss: 0.0453 - val_acc: 0.9874
test_loss: 0.038486057263799014 - test_acc: 0.9874000072479248
```

4 Supplement

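The average pooling layers take the same parameters as the corresponding max pooling layers, but output the mean of each window instead of its maximum.

(1) AveragePooling1D

AveragePooling1D(pool_size=2, strides=None, padding='valid')

(2) AveragePooling2D

AveragePooling2D(pool_size=(2, 2), strides=None, padding='valid')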
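For comparison with max pooling, here is a minimal pure-Python sketch of average pooling with 'valid' padding. It runs on the same toy inputs as the max pooling sketches. The helper names are hypothetical, and strides=None defaults to pool_size as in Keras.

```python
def avg_pool1d(x, pool_size=2, strides=None):
    """'valid'-padding 1D average pooling over a single channel."""
    strides = pool_size if strides is None else strides
    out_len = max((len(x) - pool_size) // strides + 1, 0)
    return [sum(x[i * strides:i * strides + pool_size]) / pool_size for i in range(out_len)]


def avg_pool2d(img, pool_size=(2, 2), strides=None):
    """'valid'-padding 2D average pooling over a single-channel image (list of rows)."""
    ph, pw = pool_size
    sh, sw = pool_size if strides is None else strides
    out_h = max((len(img) - ph) // sh + 1, 0)
    out_w = max((len(img[0]) - pw) // sw + 1, 0)
    return [
        [sum(img[r * sh + i][c * sw + j] for i in range(ph) for j in range(pw)) / (ph * pw)
         for c in range(out_w)]
        for r in range(out_h)
    ]


print(avg_pool1d([1, 3, 2, 5, 4, 7, 6]))  # [2.0, 3.5, 5.5]
img = [[1, 2, 0, 1], [3, 4, 1, 0], [0, 1, 5, 6], [2, 1, 7, 8]]
print(avg_pool2d(img))                    # [[2.5, 0.5], [1.0, 6.5]]
```

Max pooling keeps only the strongest response in each window. Average pooling smooths over all of the values in the window.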

Disclaimer: this article is reposted from "Convolutional Neural Network (CNN) with Keras".

浙公网安备 33010602011771号