python_scrapy_crawler

Python, getting started with the Scrapy framework, XPath parsing, file storage, and JSON storage.

The project also covers crawling detail pages.

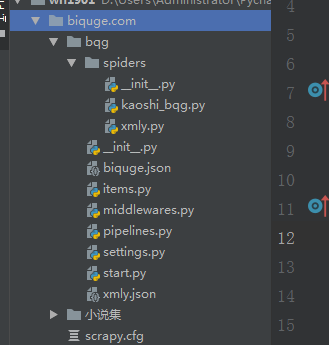

Directory structure:

kaoshi_bqg.py

    import scrapy
    from scrapy.spiders import Rule
    from scrapy.linkextractors import LinkExtractor

    from ..items import BookBQGItem


    class KaoshiBqgSpider(scrapy.Spider):
        name = 'kaoshi_bqg'
        allowed_domains = ['biquge5200.cc']
        start_urls = ['https://www.biquge5200.cc/xuanhuanxiaoshuo/']

        # Note: `rules` is only processed by CrawlSpider. This class extends
        # scrapy.Spider and defines its own parse(), so the rules are not used.
        rules = (
            # Rule matching the article list
            Rule(LinkExtractor(allow=r'https://www.biquge5200.cc/xuanhuanxiaoshuo/'), follow=True),
            # Rule matching article detail pages (bounded quantifiers are written {m,n})
            Rule(LinkExtractor(allow=r'.+/[0-9]{1,3}_[0-9]{2,6}/'), callback='parse_item', follow=False),
        )

        # Novel titles
        def parse(self, response):
            a_list = response.xpath('//*[@id="newscontent"]/div[1]/ul//li//span[1]/a')
            for li in a_list:
                name = li.xpath(".//text()").get()
                detail_url = li.xpath(".//@href").get()
                # Pass the title on to the next callback through meta
                yield scrapy.Request(url=detail_url, callback=self.parse_book, meta={'info': name})

        # All chapter names of a single book
        def parse_book(self, response):
            name = response.meta.get('info')
            list_a = response.xpath('//*[@id="list"]/dl/dd[position()>20]//a')
            for li in list_a:
                chapter = li.xpath(".//text()").get()
                url = li.xpath(".//@href").get()
                yield scrapy.Request(url=url, callback=self.parse_content, meta={'info': (name, chapter)})

        # Content of each chapter
        def parse_content(self, response):
            name, chapter = response.meta.get('info')
            # getall() returns a list of strings, one per text node
            content = response.xpath('//*[@id="content"]//p/text()').getall()
            item = BookBQGItem(name=name, chapter=chapter, content=content)
            yield item
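The detail-page rule in this spider depends on a bounded quantifier. In Python's `re` syntax a bounded repeat is written `{m,n}`; a hyphenated form such as `{1-3}` is not a quantifier and is matched as literal text. The small check below illustrates the difference. The URL is made up for the example, in the shape the pattern appears to target, and was not taken from the site.

```python
import re

# Hyphenated braces: treated as the literal characters "{1-3}" and "{2-6}".
HYPHEN_PATTERN = re.compile(r'.+/[0-9]{1-3}_[0-9]{2-6}/')
# Comma form: one to three digits, an underscore, then two to six digits.
COMMA_PATTERN = re.compile(r'.+/[0-9]{1,3}_[0-9]{2,6}/')

# Hypothetical detail-page URL, used only for illustration.
SAMPLE_URL = 'https://www.biquge5200.cc/52_52542/'


def matches(pattern, url):
    """Return True if the pattern is found anywhere in the URL."""
    return pattern.search(url) is not None


if __name__ == '__main__':
    print('hyphen form matches:', matches(HYPHEN_PATTERN, SAMPLE_URL))
    print('comma form matches: ', matches(COMMA_PATTERN, SAMPLE_URL))
```
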

xmly.py

    # -*- coding: utf-8 -*-
    import scrapy

    from ..items import BookXMLYItem, BookChapterItem


    class XmlySpider(scrapy.Spider):
        name = 'xmly'
        allowed_domains = ['ximalaya.com']
        start_urls = ['https://www.ximalaya.com/youshengshu/wenxue/']

        def parse(self, response):
            div_details = response.xpath('//*[@id="root"]/main/section/div/div/div[3]/div[1]/div/div[2]/ul/li/div')
            # details = div_details[::3]
            for details in div_details:
                # The book id is the second-to-last piece of the href when split on '/'
                book_id = details.xpath('./div/a/@href').get().split('/')[-2]
                book_name = details.xpath('./a[1]/@title').get()
                book_author = details.xpath('./a[2]/text()').get()  # author
                book_url = details.xpath('./div/a/@href').get()
                url = 'https://www.ximalaya.com' + book_url
                # print(book_id, book_name, book_author, url)
                item = BookXMLYItem(book_id=book_id, book_name=book_name, book_author=book_author, book_url=url)
                yield item
                # Request the detail page and carry book_id along in meta
                yield scrapy.Request(url=url, callback=self.parse_details, meta={'info': book_id})

        def parse_details(self, response):
            book_id = response.meta.get('info')
            div_details = response.xpath('//*[@id="anchor_sound_list"]/div[2]/ul/li/div[2]')
            for details in div_details:
                chapter_id = details.xpath('./a/@href').get().split('/')[-1]
                chapter_name = details.xpath('./a/text()').get()
                chapter_url = details.xpath('./a/@href').get()
                url = 'https://www.ximalaya.com' + chapter_url
                item = BookChapterItem(book_id=book_id, chapter_id=chapter_id, chapter_name=chapter_name, chapter_url=url)
                yield item
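The ids in `xmly.py` are taken from the `href` by splitting on `/` and picking a fixed index, which depends on whether the path ends with a slash and raises if `.get()` returns `None`. As a rough sketch of a more forgiving variant, the helper below drops empty segments first. The helper name `path_segment` and the sample hrefs are assumptions made for illustration; the real hrefs on the site may look different.

```python
from urllib.parse import urljoin

BASE_URL = 'https://www.ximalaya.com'


def path_segment(href, index):
    """Return the index-th non-empty path segment of href, or None if unavailable."""
    if not href:
        return None
    parts = [part for part in href.split('/') if part]
    try:
        return parts[index]
    except IndexError:
        return None


if __name__ == '__main__':
    # Hypothetical hrefs, one with and one without a trailing slash.
    book_href = '/youshengshu/12345/'
    chapter_href = '/youshengshu/12345/67890'
    print(path_segment(book_href, -1))
    print(path_segment(chapter_href, -1))
    # urljoin builds the absolute URL from the site root and a relative href.
    print(urljoin(BASE_URL, book_href))
```

Because empty segments are removed, the last segment is index `-1` in both cases, and a missing link yields `None` rather than an exception.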

items.py

    import scrapy


    # Biquge fields
    class BookBQGItem(scrapy.Item):
        name = scrapy.Field()
        chapter = scrapy.Field()
        content = scrapy.Field()


    # Ximalaya fields
    class BookXMLYItem(scrapy.Item):
        book_name = scrapy.Field()
        book_id = scrapy.Field()
        book_url = scrapy.Field()
        book_author = scrapy.Field()


    # Ximalaya detail (chapter) fields
    class BookChapterItem(scrapy.Item):
        book_id = scrapy.Field()
        chapter_id = scrapy.Field()
        chapter_name = scrapy.Field()
        chapter_url = scrapy.Field()

pipelines.py

    from scrapy.exporters import JsonLinesItemExporter
    import os


    class BqgPipeline(object):
        def process_item(self, item, spider):
            xs = '小说集'  # root directory name ("novel collection")
            name = item['name']
            xs_path = os.path.join(os.path.dirname(os.path.dirname(__file__)), xs)
            fiction_path = os.path.join(xs_path, name)
            # print(os.path.dirname(__file__))  D:/Users/Administrator/PycharmProjects/wh1901/biquge.com
            # print(os.path.dirname(os.path.dirname(__file__)))  D:/Users/Administrator/PycharmProjects/wh1901
            # Create the root directory and the per-book directory if they do not exist
            os.makedirs(fiction_path, exist_ok=True)
            chapter = item['chapter']
            content = item['content']
            # The spider collects content with getall(), which returns a list of strings
            if isinstance(content, (list, tuple)):
                content = '\n'.join(content)
            # Create <chapter>.txt inside the book directory
            file_path = os.path.join(fiction_path, chapter) + '.txt'
            with open(file_path, 'w', encoding='utf-8') as fp:
                fp.write(content + '\n')
            print('Saved')
            # Return the item so any later pipeline still receives it
            return item


    # class XmlyPipeline(object):
    #     def __init__(self):
    #         self.fp = open("xmly.json", 'wb')
    #         # JsonLinesItemExporter writes one JSON object per line
    #         self.exporter = JsonLinesItemExporter(self.fp, ensure_ascii=False)
    #
    #     def process_item(self, item, spider):
    #         self.exporter.export_item(item)
    #         return item
    #
    #     # Scrapy calls close_spider(self, spider) when the spider finishes
    #     def close_spider(self, spider):
    #         self.fp.close()
    #         print("Crawl finished")
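The `content` field reaching `BqgPipeline` comes from `.getall()` in the spider, which returns a list of strings, so it has to be joined into one string before it is written to the file. The sketch below isolates the directory and file handling outside Scrapy so it can be tried on its own. The function name `save_chapter` and the sample values are hypothetical, and a temporary directory stands in for the project folder.

```python
import os
import tempfile


def save_chapter(root_dir, book_name, chapter, paragraphs):
    """Write one chapter to <root_dir>/<book_name>/<chapter>.txt and return its path."""
    book_dir = os.path.join(root_dir, book_name)
    # makedirs creates missing parent directories and does nothing if they exist
    os.makedirs(book_dir, exist_ok=True)
    file_path = os.path.join(book_dir, chapter + '.txt')
    with open(file_path, 'w', encoding='utf-8') as fp:
        # Join the list of paragraphs into a single newline-separated string
        fp.write('\n'.join(paragraphs) + '\n')
    return file_path


if __name__ == '__main__':
    with tempfile.TemporaryDirectory() as tmp:
        path = save_chapter(tmp, 'demo_book', 'chapter_1', ['first paragraph', 'second paragraph'])
        with open(path, encoding='utf-8') as fp:
            print(fp.read())
```

Chapter titles scraped from a page can contain characters that are not valid in file names on some systems; this sketch does not handle that case.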

starts.py

    from scrapy import cmdline

    cmdline.execute("scrapy crawl kaoshi_bqg".split())
    # cmdline.execute("scrapy crawl xmly".split())

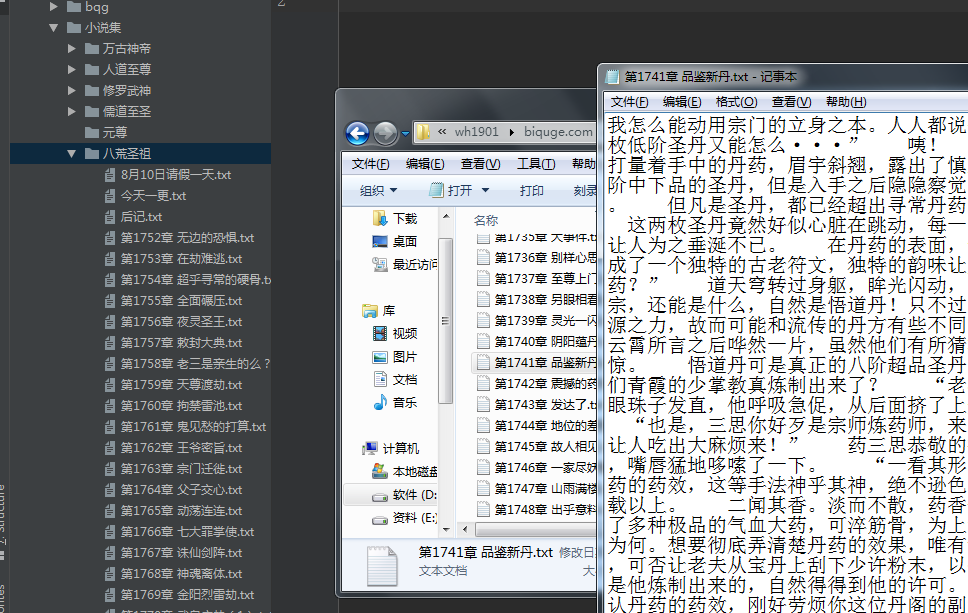

Next, the scraped data.

Novels

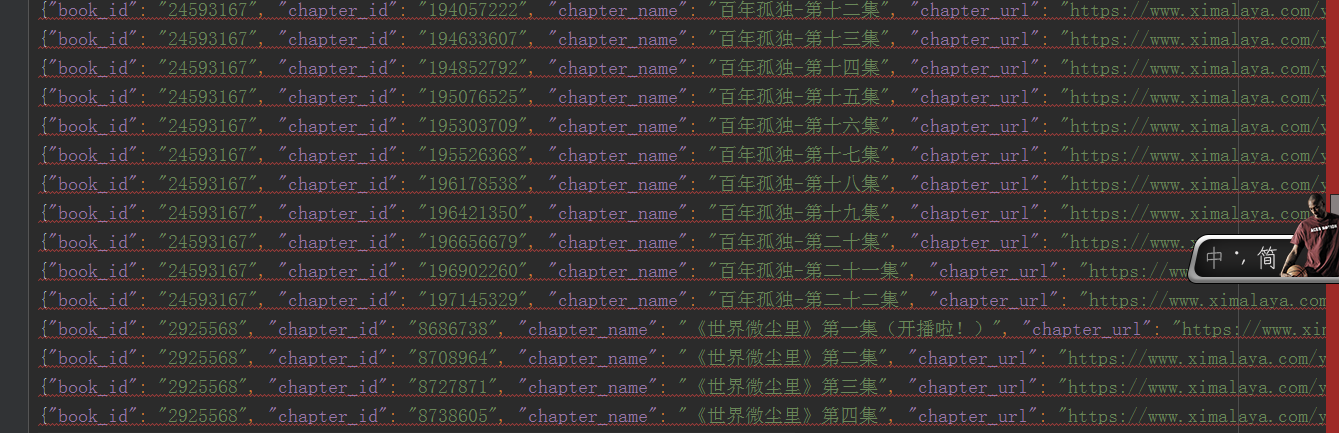

xmly.json
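The commented-out `XmlyPipeline` uses `JsonLinesItemExporter`, which writes one JSON object per line rather than a single JSON array, so the resulting file is read back line by line. The sketch below mimics that layout with the standard `json` module instead of the Scrapy exporter. The helper names and the record values are invented for illustration; only the field names follow `BookXMLYItem`.

```python
import io
import json


def dump_json_lines(records, fp):
    """Write each record as one JSON object per line."""
    for record in records:
        # ensure_ascii=False keeps non-ASCII text readable instead of \u escapes
        fp.write(json.dumps(record, ensure_ascii=False) + '\n')


def load_json_lines(fp):
    """Parse a JSON Lines stream back into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in fp if line.strip()]


if __name__ == '__main__':
    # Hypothetical records, not real scraped data.
    records = [
        {'book_id': '1', 'book_name': '示例一'},
        {'book_id': '2', 'book_name': '示例二'},
    ]
    buffer = io.StringIO()
    dump_json_lines(records, buffer)
    buffer.seek(0)
    print(load_json_lines(buffer))
```
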

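- A note on a small problem I ran into while crawling:

- When crawling the detail pages, I did not know at first how to get the detail page URL, or how to carry over the fields already collected on the previous page.

In other words, I had not properly understood the arguments of the `Request` that is yielded to fetch the detail page.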