Data Plane Envoy, Part 4: Envoy Dynamic Configuration

I. Introduction to Envoy dynamic configuration

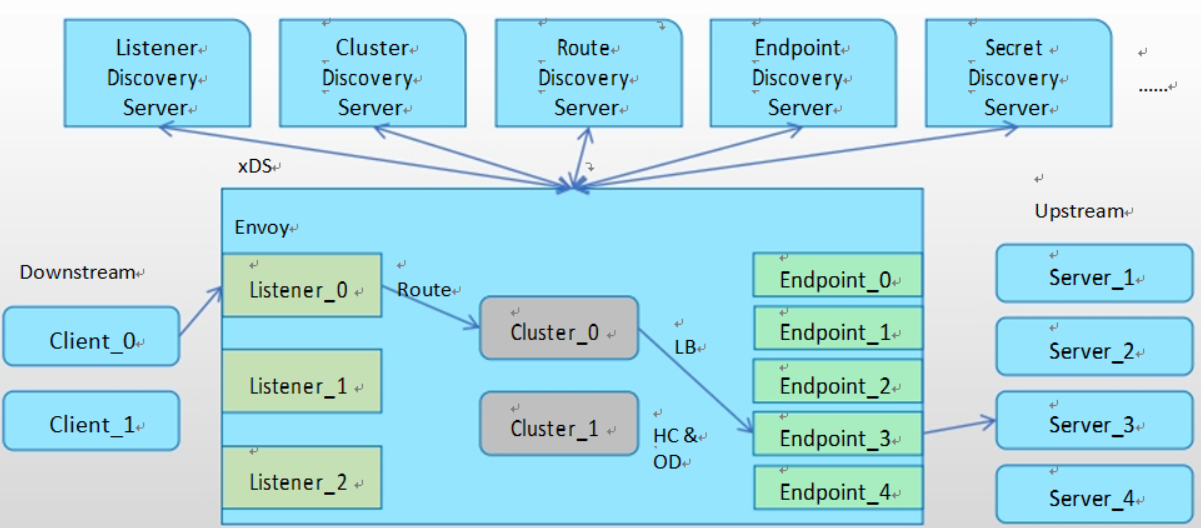

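Dynamic resources are the mechanism by which Envoy discovers the configuration it needs through the xDS protocol. The configuration is stored on a host called the Management Server and exposed through the xDS API. Below is the basic skeleton of a purely dynamic configuration.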

    {
      "lds_config": "{...}",
      "cds_config": "{...}",
      "ads_config": "{...}"
    }

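The xDS API gives Envoy a mechanism for configuring resources dynamically; it is also known as the Data Plane API. Resources can be discovered in the following ways: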

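1) Filesystem-based discovery: specify a filesystem path to watch.
2) Discovery by querying one or more Management Servers: Envoy sends a DiscoveryRequest message and expects the server to answer with a DiscoveryResponse message.
   (1) gRPC service: start a gRPC stream.
   (2) REST service: poll a REST-JSON URL.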

v3 xDS supports the following resource types:

    envoy.config.listener.v3.Listener
    envoy.config.route.v3.RouteConfiguration
    envoy.config.route.v3.ScopedRouteConfiguration
    envoy.config.route.v3.VirtualHost
    envoy.config.cluster.v3.Cluster
    envoy.config.endpoint.v3.ClusterLoadAssignment
    envoy.extensions.transport_sockets.tls.v3.Secret
    envoy.service.runtime.v3.Runtime
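On the wire, each of these resource types is identified by a type URL, which is the message name prefixed with type.googleapis.com/ (the same form used in the "@type" fields of the file-based examples later in this article). The following Python sketch shows that mapping. The names XDS_RESOURCE_TYPES and type_url are illustrative and not part of Envoy; the keys are the discovery service abbreviations introduced in the next section.

```python
# Illustrative mapping (hypothetical names) from discovery service
# abbreviation to the v3 resource message it serves.
XDS_RESOURCE_TYPES = {
    "LDS": "envoy.config.listener.v3.Listener",
    "RDS": "envoy.config.route.v3.RouteConfiguration",
    "SRDS": "envoy.config.route.v3.ScopedRouteConfiguration",
    "VHDS": "envoy.config.route.v3.VirtualHost",
    "CDS": "envoy.config.cluster.v3.Cluster",
    "EDS": "envoy.config.endpoint.v3.ClusterLoadAssignment",
    "SDS": "envoy.extensions.transport_sockets.tls.v3.Secret",
    "RTDS": "envoy.service.runtime.v3.Runtime",
}


def type_url(service: str) -> str:
    """Return the type URL carried in DiscoveryRequest/DiscoveryResponse."""
    return "type.googleapis.com/" + XDS_RESOURCE_TYPES[service.upper()]


if __name__ == "__main__":
    for name in XDS_RESOURCE_TYPES:
        print(name, type_url(name))
```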

II. Introduction to the xDS API

The xDS APIs are served to Envoy by a back-end management server. They include LDS, CDS, RDS, SRDS (Scoped Route), VHDS (Virtual Host), EDS, SDS and RTDS (Runtime).

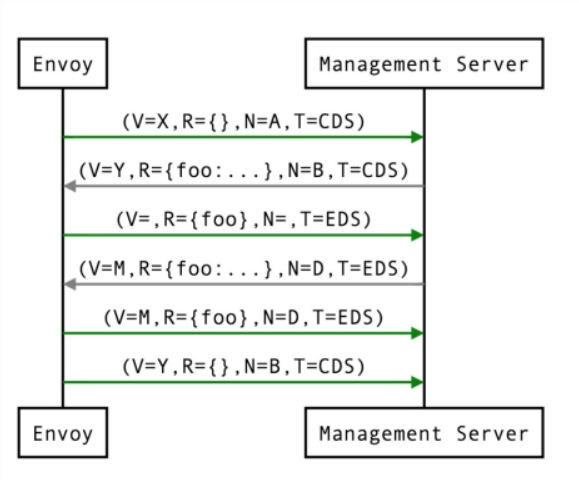

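1) All of these APIs are eventually consistent and do not affect one another.
2) Some higher-level operations (for example, an A/B deployment of a service) must be ordered to prevent traffic from being dropped. When a single management server provides several APIs, the Aggregated Discovery Service (ADS) API is therefore also needed. ADS lets all the other APIs be marshalled over a single bidirectional gRPC stream from a single management server, which makes deterministic ordering of operations possible.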
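The ordering concern can be illustrated with a small sketch. It assumes the make-before-break sequence described in the xDS protocol documentation (clusters first, then their endpoints, then listeners, then routes); the names PUSH_ORDER and order_updates are hypothetical and belong to no real control plane.

```python
# Assumed push order on a single ADS stream: dependencies before dependents.
PUSH_ORDER = ["CDS", "EDS", "LDS", "RDS"]


def order_updates(pending):
    """Sort (service, resource_name) pairs so dependencies are pushed first.

    sorted() is stable, so updates of the same service keep their
    original relative order.
    """
    rank = {service: index for index, service in enumerate(PUSH_ORDER)}
    return sorted(pending, key=lambda update: rank[update[0]])


if __name__ == "__main__":
    pending = [
        ("RDS", "local_route"),
        ("LDS", "listener_http"),
        ("EDS", "webcluster"),
        ("CDS", "webcluster"),
    ]
    for service, name in order_updates(pending):
        print(service, name)
```

Pushing in this order means a route never references a cluster that Envoy has not yet received, which is the traffic-drop case that ADS is meant to avoid.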

Each xDS API, ADS included, also supports an incremental (delta) transport mechanism.

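1) The configuration on the Management Server has to be tailored to different Envoy instances.
2) When an Envoy instance requests configuration, it must report information about itself in the request:
   (1) for example id, cluster, metadata and locality;
   (2) this information is defined in the Bootstrap configuration file.

It is set in the dedicated top-level "node {…}" section: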

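    node:
      id: …                       # Opaque node identifier for the Envoy node.
      cluster: …                  # Name of the local service cluster in which Envoy is running.
      metadata: {…}               # Opaque metadata extending the node identifier; Envoy passes it straight to the management server.
      locality:                   # Locality describing where the Envoy instance is running.
        region: …
        zone: …
        sub_zone: …
      user_agent_name: …          # Free-form string identifying the entity requesting config, e.g. "envoy" or "grpc".
      user_agent_version: …       # Free-form string identifying the version of that entity, e.g. "1.12.2", "abcd1234" or "SpecialEnvoyBuild".
      user_agent_build_version:   # Structured version of the entity requesting config.
        version: …
        metadata: {…}
      extensions: [ ]             # Extensions supported by the node, with their versions.
      client_features: [ ]
      listening_addresses: [ ]    # Known listening ports on the node; a generic hint the management server can use to filter the listeners it returns.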
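To show where the node section ends up, here is a sketch of a DiscoveryRequest body built as a plain Python dictionary. The field names (version_info, node, resource_names, type_url, response_nonce) follow the DiscoveryRequest message; the helper name build_discovery_request and the sample zone value are assumptions made for illustration.

```python
import json


def build_discovery_request(node, type_url, resource_names=(),
                            version_info="", response_nonce=""):
    """Assemble a DiscoveryRequest as a JSON-serialisable dict (sketch only)."""
    return {
        "version_info": version_info,      # empty on the first request
        "node": node,                      # identity taken from the bootstrap "node" section
        "resource_names": list(resource_names),
        "type_url": type_url,
        "response_nonce": response_nonce,  # empty until a response has been received
    }


if __name__ == "__main__":
    node = {
        "id": "envoy_front_proxy",
        "cluster": "webcluster",
        "locality": {"zone": "zone-a"},    # hypothetical value
    }
    request = build_discovery_request(
        node,
        "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment",
        resource_names=["webcluster"],
    )
    print(json.dumps(request, indent=2))
```

A management server can key its answer on node.id or node.cluster, which is how one server hands different configuration to different Envoy instances.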

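IV. API flow

1. For a typical HTTP routing scenario, the core resource types that the xDS Management Server must configure for its clients (Envoy instances) are Listener, RouteConfiguration, Cluster and ClusterLoadAssignment. Each Listener resource can reference one or more RouteConfiguration resources, each RouteConfiguration can point to one or more Cluster resources, and each Cluster resource can point to one or more ClusterLoadAssignment resources.

3. Non-proxy clients such as gRPC may, at startup, request only the Listener resources they are interested in, then load the RouteConfiguration resources those Listeners reference, then the Cluster resources those RouteConfigurations point to, and finally the ClusterLoadAssignment resources those Clusters depend on. In this scenario the Listener resource is the "root" of the client's entire configuration tree.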
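The dependency chain can be sketched as a walk over a toy in-memory model. The dictionaries below are a deliberately simplified stand-in for real xDS resources, and every name in them (LISTENERS, ROUTES, CLUSTERS, resolve) is hypothetical; the resource names reuse those from the examples in this article.

```python
# Simplified stand-ins for xDS resources: each entry only records what it points to.
LISTENERS = {"listener_http": {"route_config": "local_route"}}
ROUTES = {"local_route": {"clusters": ["webcluster"]}}
CLUSTERS = {"webcluster": {"eds_service_name": "webcluster"}}


def resolve(listener_name):
    """Return the resources to load, in order, starting from a root Listener."""
    order = [("Listener", listener_name)]
    route_name = LISTENERS[listener_name]["route_config"]
    order.append(("RouteConfiguration", route_name))
    for cluster_name in ROUTES[route_name]["clusters"]:
        order.append(("Cluster", cluster_name))
        service_name = CLUSTERS[cluster_name]["eds_service_name"]
        order.append(("ClusterLoadAssignment", service_name))
    return order


if __name__ == "__main__":
    for kind, name in resolve("listener_http"):
        print(kind, name)
```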

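V. Ways to configure Envoy

Envoy's architecture supports very flexible configuration: simple deployments can use a fully static configuration, while more complex deployments can add dynamic configuration mechanisms step by step.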

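1. Fully static: the user supplies the listeners, filter chains, clusters and HTTP routes (HTTP proxy scenario). Upstream endpoints can only be discovered through DNS, and reloading the configuration requires the built-in hot restart.
2. EDS only: the endpoint discovery provided by EDS avoids the limits of DNS (such as the maximum number of records in a response).
3. EDS and CDS: CDS lets Envoy add, update and remove upstream clusters gracefully, so Envoy does not need to know every upstream cluster at initial configuration time.
4. EDS, CDS and RDS: route configuration is discovered dynamically. Used together with EDS and CDS, RDS lets users build complex routing topologies (traffic shifting, blue/green deployment and so on).
5. EDS, CDS, RDS and LDS: listener configuration, including the embedded filter chains, is discovered dynamically. With these four discovery services enabled, a hot restart of Envoy is almost never needed, except for rarer configuration changes, certificate rotation or an upgrade of the Envoy binary.
6. EDS, CDS, RDS, LDS and SDS: listener secrets are discovered dynamically, namely certificates, private keys and TLS session ticket keys, together with the configuration of the certificate validation logic (trusted root certificates, revocation and so on).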

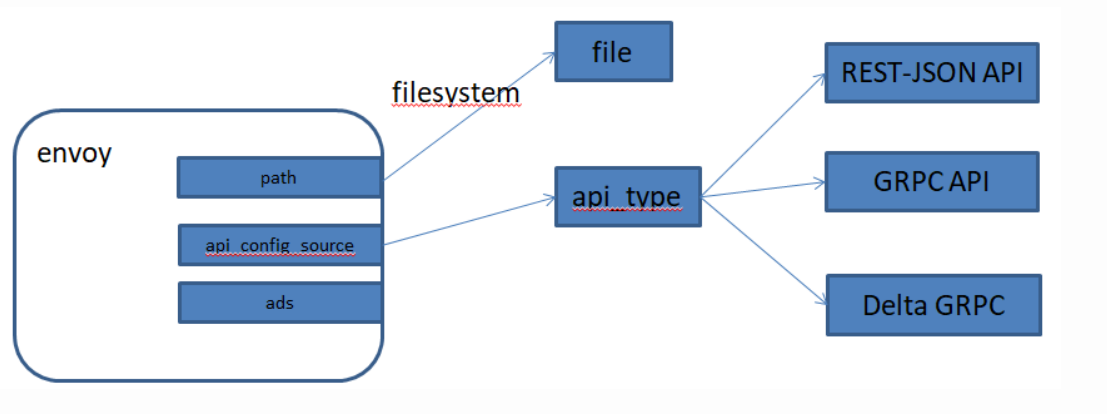

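1. A configuration source (ConfigSource) specifies where resource configuration data comes from. It supplies configuration for resources such as Listener, Cluster, Route, Endpoint, Secret and VirtualHost.

2. At present, a configuration source in Envoy must be exactly one of path, api_config_source or ads.

3. The data for api_config_source and ads comes from the xDS API server, that is, the Management Server.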

VII. Filesystem-based subscription

The simplest way to give Envoy dynamic configuration is to place it at a file path named explicitly in a ConfigSource.

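1) Envoy uses inotify (kqueue on Mac OS X) to watch the file for changes and parses the DiscoveryResponse message in the file when it is updated.
2) Binary protobuf, JSON, YAML and proto text are all supported formats for the DiscoveryResponse.

Notes

1) Apart from statistics counters and logs, there is no mechanism for ACKing or NACKing updates in a filesystem subscription.
2) If a configuration update is rejected, the last valid configuration for that xDS API continues to apply.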
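Envoy's file watcher reacts to a file being moved into place rather than to an in-place edit, which is why the lab later in this article runs mv after changing a file. A minimal Python sketch of publishing an update that way follows. The helper name publish_discovery_response and the example path are hypothetical, and the sketch writes JSON, so the target file should use a suffix that Envoy parses as JSON.

```python
import json
import os
import tempfile


def publish_discovery_response(path, response):
    """Write `response` to a temp file beside `path`, then rename it over `path`.

    The rename is atomic on POSIX filesystems, so a watcher never sees a
    half-written file and observes the update as a move.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as tmp:
        json.dump(response, tmp, indent=2)
    os.replace(tmp_path, path)


if __name__ == "__main__":
    # Hypothetical target; in the lab the watched directory is /etc/envoy/eds.conf.d/.
    target = os.path.join(tempfile.mkdtemp(), "eds.json")
    publish_discovery_response(target, {"version_info": "1", "resources": []})
    print(open(target).read())
```

The temporary file is created in the same directory as the target because a rename across filesystems is not atomic.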

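Taking EDS as an example: the Cluster is defined statically, while its Endpoints are discovered dynamically through EDS.

Cluster definition format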

    # Format of the endpoint configuration inside a Cluster
    clusters:
    - name: ...
      eds_cluster_config:
        service_name:
        eds_config:
          path: ...   # ConfigSource; exactly one of path, api_config_source or ads

Cluster configuration

    # A fully static Cluster definition looks like this
    clusters:
    - name: webcluster
      connect_timeout: 0.25s
      type: STATIC   # static cluster
      lb_policy: ROUND_ROBIN
      load_assignment:
        cluster_name: webcluster
        endpoints:
        - lb_endpoints:
          - endpoint:
              address:
                socket_address:
                  address: 172.31.11.11
                  port_value: 8080

    # The same kind of cluster using EDS
    clusters:
    - name: targetCluster
      connect_timeout: 0.25s
      lb_policy: ROUND_ROBIN
      type: EDS   # EDS cluster
      eds_cluster_config:
        service_name: webcluster
        eds_config:
          path: '/etc/envoy/eds.yaml'   # file path to subscribe to

Note: if the file suffix is conf, the resources must be defined in JSON; if the suffix is yaml, they must be defined in YAML. In addition, with dynamic configuration every Envoy instance needs a unique id.

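1) The file /etc/envoy/eds.yaml holds the response in DiscoveryResponse format. For example, the configuration below applies when an upstream server at 172.31.11.11 is available to serve requests.

2) The response must be written in the format that matches the file suffix: YAML for the eds.yaml file used here, JSON for a file with the conf suffix.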

    resources:
    - "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
      cluster_name: webcluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 172.31.11.11
                port_value: 8080

Next, edit the file and add 172.31.11.12 to the list of back-end endpoints to simulate a configuration change:

        - endpoint:
            address:
              socket_address:
                address: 172.31.11.12
                port_value: 8080
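The same change can be generated rather than edited by hand. The sketch below builds the DiscoveryResponse structure shown above for any list of endpoint addresses; the function name eds_response and its parameters are illustrative only.

```python
import json

CLA_TYPE_URL = "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment"


def eds_response(cluster_name, hosts, port=8080, version_info=""):
    """Build a file-based EDS DiscoveryResponse with one endpoint per host."""
    lb_endpoints = [
        {"endpoint": {"address": {"socket_address": {"address": host, "port_value": port}}}}
        for host in hosts
    ]
    response = {
        "resources": [
            {
                "@type": CLA_TYPE_URL,
                "cluster_name": cluster_name,
                "endpoints": [{"lb_endpoints": lb_endpoints}],
            }
        ]
    }
    if version_info:
        response["version_info"] = version_info
    return response


if __name__ == "__main__":
    updated = eds_response("webcluster", ["172.31.11.11", "172.31.11.12"], version_info="2")
    print(json.dumps(updated, indent=2))
```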

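2. Almost fully dynamic Envoy configuration based on LDS and CDS

1) The Listener definitions are stored in one file in the standard DiscoveryResponse format.

2) The Cluster definitions are stored in another file, likewise in the standard DiscoveryResponse format.

The Envoy Bootstrap configuration below shows how the two files are referenced: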

    node:
      id: envoy_front_proxy
      cluster: MageEdu_Cluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      lds_config:
        path: /etc/envoy/conf.d/lds.yaml
      cds_config:
        path: /etc/envoy/conf.d/cds.yaml

An example LDS configuration in DiscoveryResponse format:

    resources:
    - "@type": type.googleapis.com/envoy.config.listener.v3.Listener
      name: listener_http
      address:
        socket_address: { address: 0.0.0.0, port_value: 80 }
      filter_chains:
      - filters:
        - name: envoy.http_connection_manager
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
            stat_prefix: ingress_http
            route_config:
              name: local_route
              virtual_hosts:
              - name: local_service
                domains: ["*"]
                routes:
                - match:
                    prefix: "/"
                  route:
                    cluster: webcluster
            http_filters:
            - name: envoy.filters.http.router

An example CDS configuration in DiscoveryResponse format:

    resources:
    - "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
      name: webcluster
      connect_timeout: 1s
      type: STRICT_DNS
      load_assignment:
        cluster_name: webcluster
        endpoints:
        - lb_endpoints:
          - endpoint:
              address:
                socket_address:
                  address: webserver01
                  port_value: 8080
          - endpoint:
              address:
                socket_address:
                  address: webserver02
                  port_value: 8080

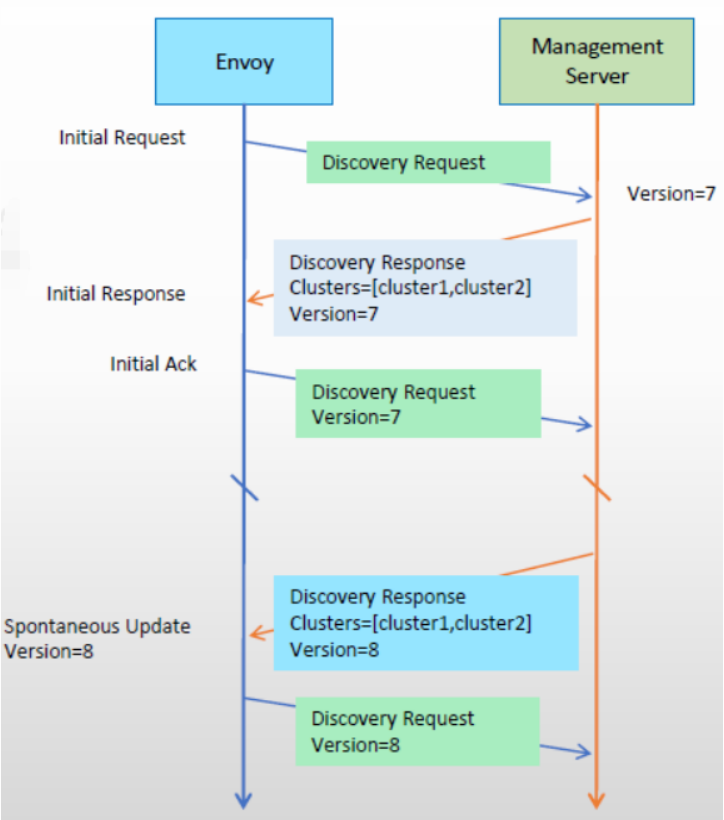

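1. Introduction to gRPC subscriptions

1) Envoy lets you specify a gRPC ApiConfigSource independently for each xDS API; it points to an upstream cluster that corresponds to the management server.

   (1) This starts a separate bidirectional gRPC stream for each xDS resource type, and the streams may go to different management servers.
   (2) Each stream maintains its own resource version; there is no version shared across resource types.
   (3) Without ADS, each resource type may be at a different version, because the Envoy API allows different EDS/RDS resources to point to different ConfigSources.

2) API delivery is eventually consistent.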
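Per-stream versioning can be sketched as follows. The sketch assumes the basic (state-of-the-world) xDS exchange, in which the client answers every DiscoveryResponse with a DiscoveryRequest that either ACKs it (carrying the new version) or NACKs it (carrying the previous version plus an error_detail). The class TypeState and the function next_request are hypothetical names, and the type URLs in the usage example are shortened placeholders.

```python
class TypeState:
    """Version and nonce tracked for one resource type on its own stream."""

    def __init__(self):
        self.version = ""  # last version_info this client accepted
        self.nonce = ""    # nonce of the most recent response


def next_request(state, response, accepted, error=""):
    """Build the follow-up DiscoveryRequest after receiving `response`."""
    state.nonce = response["nonce"]
    if accepted:
        state.version = response["version_info"]  # ACK: adopt the new version
    request = {
        "type_url": response["type_url"],
        "version_info": state.version,            # on NACK this is still the old version
        "response_nonce": state.nonce,
    }
    if not accepted:
        request["error_detail"] = {"message": error}
    return request


if __name__ == "__main__":
    cds, eds = TypeState(), TypeState()  # independent state, one per resource type
    print(next_request(cds, {"type_url": "…/Cluster", "version_info": "5", "nonce": "a"}, True))
    print(next_request(eds, {"type_url": "…/ClusterLoadAssignment", "version_info": "9", "nonce": "b"},
                       False, error="rejected"))
    print(cds.version, repr(eds.version))
```

Because the two TypeState objects never share anything, the CDS stream can sit at one version while the EDS stream sits at another, which is the situation described in item (3).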

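2. gRPC-based dynamic configuration format

Taking LDS as an example: it configures Listeners to be discovered and loaded dynamically, while the routes inside them can either be supplied directly by the discovered Listener or be discovered in turn through RDS.

The LDS configuration format is shown below; the format for CDS and the others is similar: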

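    dynamic_resources:
      lds_config:
        resource_api_version: ...       # API version of the xDS resources; use V3 with Envoy 1.19 and later
        api_config_source:
          api_type: ...                 # the API can be fetched over REST or gRPC; supported types include REST, GRPC and DELTA_GRPC
          transport_api_version: ...    # API version of the xDS transport protocol; use V3 with Envoy 1.19 and later
          rate_limit_settings: {...}    # rate limiting
          grpc_services:                # one or more sources providing the gRPC service
          - envoy_grpc:                 # Envoy's built-in gRPC client; only one of envoy_grpc and google_grpc may be used
              cluster_name: ...         # name of the gRPC cluster
            google_grpc:                # Google's C++ gRPC client
            timeout: ...                # gRPC timeout

Note: the Management Server (control plane) that provides the gRPC API must itself be defined as a cluster on Envoy, and the Envoy instance sends its xDS API requests to that cluster.

(1) These management servers normally have to be supplied as static resources.
(2) This is comparable to DHCP: the address of the DHCP server must be configured statically and cannot itself be obtained through DHCP.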

3. Subscribing to a gRPC management server

The example configuration below uses LDS and CDS to fetch the Listener and Cluster configuration dynamically.

    node:
      id: envoy_front_proxy
      cluster: webcluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      lds_config:                         # discover listeners dynamically
        resource_api_version: V3
        api_config_source:
          api_type: GRPC                  # use gRPC
          transport_api_version: V3
          grpc_services:
          - envoy_grpc:
              cluster_name: xds_cluster   # cluster of the gRPC server to call
      cds_config:                         # discover clusters dynamically
        resource_api_version: V3
        api_config_source:
          api_type: GRPC                  # use gRPC
          transport_api_version: V3
          grpc_services:
          - envoy_grpc:
              cluster_name: xds_cluster   # cluster of the gRPC server to call

    # xds_cluster must be configured statically
    static_resources:
      clusters:
      - name: xds_cluster
        connect_timeout: 0.25s
        type: STRICT_DNS
        # Used to provide extension-specific protocol options for upstream connections.
        typed_extension_protocol_options:
          envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
            "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
            explicit_http_config:
              http2_protocol_options: {}
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: xds_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: xdsserver-IP
                    port_value: 18000

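1) With a carefully planned update order, ADS can avoid losing traffic while updates are applied.
2) With ADS, several independent DiscoveryRequest/DiscoveryResponse sequences can be multiplexed on a single stream, distinguished by type URL.

The example below uses ADS to fetch the Listener and Cluster configuration dynamically.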

    node:
      id: envoy_front_proxy
      cluster: webcluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      ads_config:
        api_type: GRPC
        transport_api_version: V3
        grpc_services:
        - envoy_grpc:
            cluster_name: xds_cluster
        set_node_on_first_message_only: true
      cds_config:
        resource_api_version: V3
        ads: {}
      lds_config:
        resource_api_version: V3
        ads: {}

    # xds_cluster must be configured statically
    static_resources:
      clusters:
      - name: xds_cluster
        connect_timeout: 0.25s
        type: STRICT_DNS
        # Used to provide extension-specific protocol options for upstream connections.
        typed_extension_protocol_options:
          envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
            "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
            explicit_http_config:
              http2_protocol_options: {}
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: xds_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: xdsserver-IP
                    port_value: 18000

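IX. REST-JSON polling subscription

1. Introduction to REST-JSON

2) The message sequence is similar to the one above, except that no persistent stream to the management server is maintained.

3) Only one outstanding request is expected at any point in time, so the response nonce is optional in REST-JSON. The canonical proto3 JSON mapping is used to encode the DiscoveryRequest and DiscoveryResponse messages.

4) ADS is not available for REST-JSON polling.

5) When the polling period is set to a small value in order to do long polling, the server must also avoid sending a DiscoveryResponse unless the underlying resources have actually changed.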
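A polling request can be sketched with the Python standard library. The paths below are the POST endpoints declared for the v3 discovery services as far as I am aware, so treat them as an assumption to check against the Envoy API reference; the base URL, the helper name build_poll_request and the REST_PATHS table are hypothetical. The sketch only constructs the request and does not send it.

```python
import json
import urllib.request

# Assumed v3 REST endpoints, one per discovery service.
REST_PATHS = {
    "CDS": "/v3/discovery:clusters",
    "LDS": "/v3/discovery:listeners",
    "RDS": "/v3/discovery:routes",
    "EDS": "/v3/discovery:endpoints",
}


def build_poll_request(base_url, service, node_id, version_info=""):
    """Build (but do not send) one REST-JSON DiscoveryRequest poll."""
    body = {"version_info": version_info, "node": {"id": node_id}}
    return urllib.request.Request(
        base_url + REST_PATHS[service],
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical management server address.
    request = build_poll_request("http://xdsserver:18000", "LDS", "envoy_front_proxy")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Each poll is a self-contained request and response, which is why no nonce is needed to pair them up.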

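2. Taking LDS as an example: it discovers and configures Listeners

The REST-based LDS configuration format is shown below; CDS and the others are configured in a similar way.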

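    dynamic_resources:
      lds_config:
        resource_api_version: …         # API version of the xDS resource configuration; v1.19 and later support V3 only
        api_config_source:
          transport_api_version: ...    # API version used by the xDS transport protocol; v1.19 and later support V3 only
          api_type: ...                 # the API can be fetched over REST or gRPC; supported types include REST, GRPC and DELTA_GRPC
          cluster_names: ...            # list of clusters providing the service; only valid with the REST API type. Multiple clusters give redundancy and are cycled through on failure
          refresh_delay: ...            # REST API polling interval
          request_timeout: ...          # REST API request timeout, 1s by default

Note: the management server that provides the REST API must also be defined as a cluster on Envoy and is called by LDS and the other dynamic discovery services. These management servers have to be supplied as static configuration.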

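3. Subscribing to a REST management server

The example below uses REST-based LDS and CDS to fetch the Listener and Cluster configuration dynamically.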

    node:
      id: envoy_front_proxy
      cluster: webcluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      cds_config:
        resource_api_version: V3
        api_config_source:
          api_type: REST
          transport_api_version: V3
          refresh_delay: {nanos: 500000000} # 1/2s
          cluster_names:
          - xds_cluster
      lds_config:
        resource_api_version: V3
        api_config_source:
          api_type: REST
          transport_api_version: V3
          refresh_delay: {nanos: 500000000} # 1/2s
          cluster_names:
          - xds_cluster

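X. Lab examples

    Three Services:
    envoy: Front Proxy, address assigned dynamically by docker-compose
    webserver01: first back-end service, address assigned dynamically by docker-compose; the name webserver01 resolves to that address
    webserver02: second back-end service, address assigned dynamically by docker-compose; the name webserver02 resolves to that address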

envoy.yaml

    admin:
      access_log_path: "/dev/null"
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 80 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: web_service_1
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: local_cluster }
              http_filters:
              - name: envoy.filters.http.router

      clusters:
      - name: local_cluster
        connect_timeout: 0.25s
        type: STRICT_DNS
        dns_lookup_family: V4_ONLY
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: local_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address: { address: webserver01, port_value: 8080 }
            - endpoint:
                address:
                  socket_address: { address: webserver02, port_value: 8080 }

docker-compose.yaml

    version: '3.3'

    services:
      envoy:
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./envoy.yaml:/etc/envoy/envoy.yaml
        networks:
          envoymesh:
            aliases:
            - front-proxy
        depends_on:
        - webserver01
        - webserver02

      webserver01:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
        hostname: webserver01
        networks:
          envoymesh:
            aliases:
            - webserver01

      webserver02:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
        hostname: webserver02
        networks:
          envoymesh:
            aliases:
            - webserver02

    networks:
      envoymesh:
        driver: bridge
        ipam:
          config:
            - subnet: 172.31.10.0/24

Verification

    docker-compose up

Verify from a second terminal window

    root@test:~# front_proxy_ip=$(docker container inspect --format '{{ $network := index .NetworkSettings.Networks "cluster-static-dns-discovery_envoymesh" }}{{ $network.IPAddress}}' cluster-static-dns-discovery_envoy_1)
    root@test:~# echo $front_proxy_ip
    172.31.10.4
    root@test:~# curl $front_proxy_ip
    iKubernetes demoapp v1.0 !! ClientIP: 172.31.10.4, ServerName: webserver01, ServerIP: 172.31.10.2!
    root@test:~# curl $front_proxy_ip
    iKubernetes demoapp v1.0 !! ClientIP: 172.31.10.4, ServerName: webserver02, ServerIP: 172.31.10.3!

    # The admin interface shows the state of the clusters, in particular the details of each discovered endpoint
    root@test:~# curl http://${front_proxy_ip}:9901/clusters
    local_cluster::observability_name::local_cluster
    local_cluster::default_priority::max_connections::1024
    local_cluster::default_priority::max_pending_requests::1024
    local_cluster::default_priority::max_requests::1024
    local_cluster::default_priority::max_retries::3
    local_cluster::high_priority::max_connections::1024
    local_cluster::high_priority::max_pending_requests::1024
    local_cluster::high_priority::max_requests::1024
    local_cluster::high_priority::max_retries::3
    local_cluster::added_via_api::false
    local_cluster::172.31.10.2:8080::cx_active::0
    local_cluster::172.31.10.2:8080::cx_connect_fail::0
    local_cluster::172.31.10.2:8080::cx_total::2
    local_cluster::172.31.10.2:8080::rq_active::0
    local_cluster::172.31.10.2:8080::rq_error::0
    local_cluster::172.31.10.2:8080::rq_success::2
    local_cluster::172.31.10.2:8080::rq_timeout::0
    local_cluster::172.31.10.2:8080::rq_total::2
    local_cluster::172.31.10.2:8080::hostname::webserver01
    local_cluster::172.31.10.2:8080::health_flags::healthy
    local_cluster::172.31.10.2:8080::weight::1
    local_cluster::172.31.10.2:8080::region::
    local_cluster::172.31.10.2:8080::zone::
    local_cluster::172.31.10.2:8080::sub_zone::
    local_cluster::172.31.10.2:8080::canary::false
    local_cluster::172.31.10.2:8080::priority::0
    local_cluster::172.31.10.2:8080::success_rate::-1.0
    local_cluster::172.31.10.2:8080::local_origin_success_rate::-1.0
    local_cluster::172.31.10.3:8080::cx_active::0
    local_cluster::172.31.10.3:8080::cx_connect_fail::0
    local_cluster::172.31.10.3:8080::cx_total::3
    local_cluster::172.31.10.3:8080::rq_active::0
    local_cluster::172.31.10.3:8080::rq_error::0
    local_cluster::172.31.10.3:8080::rq_success::3
    local_cluster::172.31.10.3:8080::rq_timeout::0
    local_cluster::172.31.10.3:8080::rq_total::3
    local_cluster::172.31.10.3:8080::hostname::webserver02
    local_cluster::172.31.10.3:8080::health_flags::healthy
    local_cluster::172.31.10.3:8080::weight::1
    local_cluster::172.31.10.3:8080::region::
    local_cluster::172.31.10.3:8080::zone::
    local_cluster::172.31.10.3:8080::sub_zone::
    local_cluster::172.31.10.3:8080::canary::false
    local_cluster::172.31.10.3:8080::priority::0
    local_cluster::172.31.10.3:8080::success_rate::-1.0
    local_cluster::172.31.10.3:8080::local_origin_success_rate::-1.0
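The plain-text output of the admin /clusters endpoint is easy to post-process. The sketch below groups the per-host lines by cluster and host so that a script can check which endpoints were discovered. It assumes the cluster::host:port::stat::value layout seen in the output above and IPv4 hosts; the function name parse_clusters is hypothetical.

```python
def parse_clusters(text):
    """Group per-host stats from `GET /clusters` output by cluster and host."""
    hosts = {}
    for line in text.splitlines():
        parts = line.strip().split("::")
        # Host lines have four fields and a host:port in the second one;
        # cluster-level lines (e.g. default_priority) are skipped.
        if len(parts) == 4 and ":" in parts[1]:
            cluster, host, stat, value = parts
            hosts.setdefault(cluster, {}).setdefault(host, {})[stat] = value
    return hosts


if __name__ == "__main__":
    sample = "\n".join([
        "local_cluster::default_priority::max_connections::1024",
        "local_cluster::added_via_api::false",
        "local_cluster::172.31.10.2:8080::health_flags::healthy",
        "local_cluster::172.31.10.2:8080::hostname::webserver01",
        "local_cluster::172.31.10.3:8080::health_flags::healthy",
    ])
    for host, stats in parse_clusters(sample)["local_cluster"].items():
        print(host, stats)
```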

2. eds-filesystem

Lab environment

    Five Services:
    envoy: Front Proxy, address 172.31.11.2
    webserver01: first back-end service
    webserver01-sidecar: Sidecar Proxy of the first back-end service, address 172.31.11.11
    webserver02: second back-end service
    webserver02-sidecar: Sidecar Proxy of the second back-end service, address 172.31.11.12

front-envoy.yaml

    node:
      id: envoy_front_proxy
      cluster: MageEdu_Cluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 80 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: web_service_01
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: webcluster }
              http_filters:
              - name: envoy.filters.http.router

      clusters:
      - name: webcluster
        connect_timeout: 0.25s
        type: EDS
        lb_policy: ROUND_ROBIN
        eds_cluster_config:
          service_name: webcluster
          eds_config:
            path: '/etc/envoy/eds.conf.d/eds.yaml'

envoy-sidecar-proxy.yaml

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 80 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: local_service
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: local_cluster }
              http_filters:
              - name: envoy.filters.http.router

      clusters:
      - name: local_cluster
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: local_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address: { address: 127.0.0.1, port_value: 8080 }

eds.yaml

    resources:
    - "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
      cluster_name: webcluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 172.31.11.11
                port_value: 8080

eds.yaml.v1

    resources:
    - "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
      cluster_name: webcluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 172.31.11.11
                port_value: 8080

eds.yaml.v2

    version_info: '2'
    resources:
    - "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
      cluster_name: webcluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 172.31.11.11
                port_value: 8080
        - endpoint:
            address:
              socket_address:
                address: 172.31.11.12
                port_value: 8080

docker-compose.yaml

    version: '3.3'

    services:
      envoy:
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./front-envoy.yaml:/etc/envoy/envoy.yaml
        - ./eds.conf.d/:/etc/envoy/eds.conf.d/
        networks:
          envoymesh:
            ipv4_address: 172.31.11.2
            aliases:
            - front-proxy
        depends_on:
        - webserver01-sidecar
        - webserver02-sidecar

      webserver01-sidecar:
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        hostname: webserver01
        networks:
          envoymesh:
            ipv4_address: 172.31.11.11
            aliases:
            - webserver01-sidecar

      webserver01:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
          - HOST=127.0.0.1
        network_mode: "service:webserver01-sidecar"
        depends_on:
        - webserver01-sidecar

      webserver02-sidecar:
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        hostname: webserver02
        networks:
          envoymesh:
            ipv4_address: 172.31.11.12
            aliases:
            - webserver02-sidecar

      webserver02:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
          - HOST=127.0.0.1
        network_mode: "service:webserver02-sidecar"
        depends_on:
        - webserver02-sidecar

    networks:
      envoymesh:
        driver: bridge
        ipam:
          config:
            - subnet: 172.31.11.0/24

Verification

    docker-compose up

From a second terminal window

    # Inspect the Endpoints in the Cluster
    root@test:~# curl 172.31.11.2:9901/clusters
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::false
    webcluster::172.31.11.11:8080::cx_active::0
    webcluster::172.31.11.11:8080::cx_connect_fail::0
    webcluster::172.31.11.11:8080::cx_total::0
    webcluster::172.31.11.11:8080::rq_active::0
    webcluster::172.31.11.11:8080::rq_error::0
    webcluster::172.31.11.11:8080::rq_success::0
    webcluster::172.31.11.11:8080::rq_timeout::0
    webcluster::172.31.11.11:8080::rq_total::0
    webcluster::172.31.11.11:8080::hostname::
    webcluster::172.31.11.11:8080::health_flags::healthy
    webcluster::172.31.11.11:8080::weight::1
    webcluster::172.31.11.11:8080::region::
    webcluster::172.31.11.11:8080::zone::
    webcluster::172.31.11.11:8080::sub_zone::
    webcluster::172.31.11.11:8080::canary::false
    webcluster::172.31.11.11:8080::priority::0
    webcluster::172.31.11.11:8080::success_rate::-1.0
    webcluster::172.31.11.11:8080::local_origin_success_rate::-1.0

    # Open an interactive shell in the front proxy envoy container and change the EDS file so that it contains the second endpoint
    root@test:~# docker exec -it eds-filesystem_envoy_1 /bin/sh
    / # cd /etc/envoy/eds.conf.d/
    /etc/envoy/eds.conf.d # cat eds.yaml.v2 > eds.yaml
    # Run the following command to force the change to be picked up, so that the inode-based watch is triggered
    /etc/envoy/eds.conf.d # mv eds.yaml temp && mv temp eds.yaml

    # Inspect the Endpoints in the Cluster again
    root@test:~# curl 172.31.11.2:9901/clusters
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::false
    webcluster::172.31.11.11:8080::cx_active::0
    webcluster::172.31.11.11:8080::cx_connect_fail::0
    webcluster::172.31.11.11:8080::cx_total::0
    webcluster::172.31.11.11:8080::rq_active::0
    webcluster::172.31.11.11:8080::rq_error::0
    webcluster::172.31.11.11:8080::rq_success::0
    webcluster::172.31.11.11:8080::rq_timeout::0
    webcluster::172.31.11.11:8080::rq_total::0
    webcluster::172.31.11.11:8080::hostname::
    webcluster::172.31.11.11:8080::health_flags::healthy
    webcluster::172.31.11.11:8080::weight::1
    webcluster::172.31.11.11:8080::region::
    webcluster::172.31.11.11:8080::zone::
    webcluster::172.31.11.11:8080::sub_zone::
    webcluster::172.31.11.11:8080::canary::false
    webcluster::172.31.11.11:8080::priority::0
    webcluster::172.31.11.11:8080::success_rate::-1.0
    webcluster::172.31.11.11:8080::local_origin_success_rate::-1.0
    webcluster::172.31.11.12:8080::cx_active::0
    webcluster::172.31.11.12:8080::cx_connect_fail::0
    webcluster::172.31.11.12:8080::cx_total::0
    webcluster::172.31.11.12:8080::rq_active::0
    webcluster::172.31.11.12:8080::rq_error::0
    webcluster::172.31.11.12:8080::rq_success::0
    webcluster::172.31.11.12:8080::rq_timeout::0
    webcluster::172.31.11.12:8080::rq_total::0
    webcluster::172.31.11.12:8080::hostname::
    webcluster::172.31.11.12:8080::health_flags::healthy
    webcluster::172.31.11.12:8080::weight::1
    webcluster::172.31.11.12:8080::region::
    webcluster::172.31.11.12:8080::zone::
    webcluster::172.31.11.12:8080::sub_zone::
    webcluster::172.31.11.12:8080::canary::false
    webcluster::172.31.11.12:8080::priority::0
    webcluster::172.31.11.12:8080::success_rate::-1.0
    webcluster::172.31.11.12:8080::local_origin_success_rate::-1.0
    # The new endpoint 172.31.11.12 has been added

3. lds-cds-filesystem

Lab environment

    Five Services:
    envoy: Front Proxy, address 172.31.12.2
    webserver01: first back-end service
    webserver01-sidecar: Sidecar Proxy of the first back-end service, address 172.31.12.11
    webserver02: second back-end service
    webserver02-sidecar: Sidecar Proxy of the second back-end service, address 172.31.12.12

front-envoy.yaml

    node:
      id: envoy_front_proxy
      cluster: MageEdu_Cluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      lds_config:
        path: /etc/envoy/conf.d/lds.yaml
      cds_config:
        path: /etc/envoy/conf.d/cds.yaml

envoy-sidecar-proxy.yaml

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 80 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: local_service
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: local_cluster }
              http_filters:
              - name: envoy.filters.http.router

      clusters:
      - name: local_cluster
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: local_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address: { address: 127.0.0.1, port_value: 8080 }

docker-compose.yaml

    version: '3.3'

    services:
      envoy:
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./front-envoy.yaml:/etc/envoy/envoy.yaml
        - ./conf.d/:/etc/envoy/conf.d/
        networks:
          envoymesh:
            ipv4_address: 172.31.12.2
            aliases:
            - front-proxy
        depends_on:
        - webserver01
        - webserver01-app
        - webserver02
        - webserver02-app

      webserver01:
        #image: envoyproxy/envoy-alpine:v1.18-latest
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        hostname: webserver01
        networks:
          envoymesh:
            ipv4_address: 172.31.12.11
            aliases:
            - webserver01-sidecar

      webserver01-app:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
          - HOST=127.0.0.1
        network_mode: "service:webserver01"
        depends_on:
        - webserver01

      webserver02:
        #image: envoyproxy/envoy-alpine:v1.18-latest
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        hostname: webserver02
        networks:
          envoymesh:
            ipv4_address: 172.31.12.12
            aliases:
            - webserver02-sidecar

      webserver02-app:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
          - HOST=127.0.0.1
        network_mode: "service:webserver02"
        depends_on:
        - webserver02

    networks:
      envoymesh:
        driver: bridge
        ipam:
          config:
            - subnet: 172.31.12.0/24

cds.yaml

    resources:
    - "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
      name: webcluster
      connect_timeout: 1s
      type: STRICT_DNS
      load_assignment:
        cluster_name: webcluster
        endpoints:
        - lb_endpoints:
          - endpoint:
              address:
                socket_address:
                  address: webserver01
                  port_value: 8080
          #- endpoint:
          #    address:
          #      socket_address:
          #        address: webserver02
          #        port_value: 8080

lds.yaml

    resources:
    - "@type": type.googleapis.com/envoy.config.listener.v3.Listener
      name: listener_http
      address:
        socket_address: { address: 0.0.0.0, port_value: 80 }
      filter_chains:
      - filters:
        - name: envoy.http_connection_manager
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
            stat_prefix: ingress_http
            route_config:
              name: local_route
              virtual_hosts:
              - name: local_service
                domains: ["*"]
                routes:
                - match:
                    prefix: "/"
                  route:
                    cluster: webcluster
            http_filters:
            - name: envoy.filters.http.router

Verification

    docker-compose up

From a second terminal window

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 | # 查看Cluster的信息 root@test:~# curl 172.31.12.2:9901/clusterswebcluster::observability_name::webclusterwebcluster::default_priority::max_connections::1024webcluster::default_priority::max_pending_requests::1024webcluster::default_priority::max_requests::1024webcluster::default_priority::max_retries::3webcluster::high_priority::max_connections::1024webcluster::high_priority::max_pending_requests::1024webcluster::high_priority::max_requests::1024webcluster::high_priority::max_retries::3webcluster::added_via_api::truewebcluster::172.31.12.11:8080::cx_active::0webcluster::172.31.12.11:8080::cx_connect_fail::0webcluster::172.31.12.11:8080::cx_total::0webcluster::172.31.12.11:8080::rq_active::0webcluster::172.31.12.11:8080::rq_error::0webcluster::172.31.12.11:8080::rq_success::0webcluster::172.31.12.11:8080::rq_timeout::0webcluster::172.31.12.11:8080::rq_total::0webcluster::172.31.12.11:8080::hostname::webserver01webcluster::172.31.12.11:8080::health_flags::healthywebcluster::172.31.12.11:8080::weight::1webcluster::172.31.12.11:8080::region::webcluster::172.31.12.11:8080::zone::webcluster::172.31.12.11:8080::sub_zone::webcluster::172.31.12.11:8080::canary::falsewebcluster::172.31.12.11:8080::priority::0webcluster::172.31.12.11:8080::success_rate::-1.0webcluster::172.31.12.11:8080::local_origin_success_rate::-1.0# 查看Listener的信息 root@test:~# curl 172.31.12.2:9901/listenerslistener_http::0.0.0.0:80# 接入front proxy envoy容器的交互式接口root@test:~# docker exec -it lds-cds-filesystem_envoy_1 /bin/sh/ # cd /etc/envoy/conf.d/# 修改cds.yaml的内容,cds.yaml中添加一个节点/etc/envoy/conf.d # cat cds.yaml resources:- "@type": 
    type.googleapis.com/envoy.config.cluster.v3.Cluster
      name: webcluster
      connect_timeout: 1s
      type: STRICT_DNS
      load_assignment:
        cluster_name: webcluster
        endpoints:
        - lb_endpoints:
          - endpoint:
              address:
                socket_address:
                  address: webserver01
                  port_value: 8080
          - endpoint:
              address:
                socket_address:
                  address: webserver02
                  port_value: 8080

    # Run a command like the following to force the file change to take effect,
    # so that the inode-based watch mechanism is triggered
    /etc/envoy/conf.d # mv cds.yaml temp && mv temp cds.yaml

    # Verify the configuration again
    root@test:~# curl 172.31.12.2:9901/clusters
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::true
    webcluster::172.31.12.11:8080::cx_active::0
    webcluster::172.31.12.11:8080::cx_connect_fail::0
    webcluster::172.31.12.11:8080::cx_total::0
    webcluster::172.31.12.11:8080::rq_active::0
    webcluster::172.31.12.11:8080::rq_error::0
    webcluster::172.31.12.11:8080::rq_success::0
    webcluster::172.31.12.11:8080::rq_timeout::0
    webcluster::172.31.12.11:8080::rq_total::0
    webcluster::172.31.12.11:8080::hostname::webserver01
    webcluster::172.31.12.11:8080::health_flags::healthy
    webcluster::172.31.12.11:8080::weight::1
    webcluster::172.31.12.11:8080::region::
    webcluster::172.31.12.11:8080::zone::
    webcluster::172.31.12.11:8080::sub_zone::
    webcluster::172.31.12.11:8080::canary::false
    webcluster::172.31.12.11:8080::priority::0
    webcluster::172.31.12.11:8080::success_rate::-1.0
    webcluster::172.31.12.11:8080::local_origin_success_rate::-1.0
    webcluster::172.31.12.12:8080::cx_active::0
    webcluster::172.31.12.12:8080::cx_connect_fail::0
    webcluster::172.31.12.12:8080::cx_total::0
    webcluster::172.31.12.12:8080::rq_active::0
    webcluster::172.31.12.12:8080::rq_error::0
    webcluster::172.31.12.12:8080::rq_success::0
    webcluster::172.31.12.12:8080::rq_timeout::0
    webcluster::172.31.12.12:8080::rq_total::0
    webcluster::172.31.12.12:8080::hostname::webserver02
    webcluster::172.31.12.12:8080::health_flags::healthy
    webcluster::172.31.12.12:8080::weight::1
    webcluster::172.31.12.12:8080::region::
    webcluster::172.31.12.12:8080::zone::
    webcluster::172.31.12.12:8080::sub_zone::
    webcluster::172.31.12.12:8080::canary::false
    webcluster::172.31.12.12:8080::priority::0
    webcluster::172.31.12.12:8080::success_rate::-1.0
    webcluster::172.31.12.12:8080::local_origin_success_rate::-1.0
    # The new endpoint has been added
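The `mv cds.yaml temp && mv temp cds.yaml` step exists because, as noted above, the file watch is inode-based: the update is picked up when a file is moved into place. A minimal Python sketch of the same idea follows. It writes the new content to a temporary file in the same directory and then renames it over the target. The helper name `replace_config` is hypothetical, and the `0o644` mode is an assumption made so that a process running as another user can still read the file.

```python
import os
import tempfile


def replace_config(path: str, content: str) -> None:
    """Write content to a temp file next to path, then rename it over path."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file must be on the same filesystem for the rename to be atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            handle.write(content)
            handle.flush()
            os.fsync(handle.fileno())
        # Assumption: the reader may run as a different user, so relax
        # the 0600 mode that mkstemp applies by default.
        os.chmod(tmp_path, 0o644)
        # Moving the new file into place gives path a new inode.
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
```

Calling `replace_config("/etc/envoy/conf.d/cds.yaml", new_yaml_text)` would then stand in for editing the file and running the two `mv` commands by hand.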

    Six services:
    envoy: front proxy, address 172.31.16.2
    webserver01: the first backend service
    webserver01-sidecar: sidecar proxy for the first backend service, address 172.31.16.11
    webserver02: the second backend service
    webserver02-sidecar: sidecar proxy for the second backend service, address 172.31.16.12
    xdsserver: xDS management server, address 172.31.16.5

front-envoy.yaml

    node:
      id: envoy_front_proxy
      cluster: webcluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      ads_config:
        api_type: GRPC
        transport_api_version: V3
        grpc_services:
        - envoy_grpc:
            cluster_name: xds_cluster
        set_node_on_first_message_only: true
      cds_config:
        resource_api_version: V3
        ads: {}
      lds_config:
        resource_api_version: V3
        ads: {}

    static_resources:
      clusters:
      - name: xds_cluster
        connect_timeout: 0.25s
        type: STRICT_DNS
        # The extension_protocol_options field is used to provide extension-specific protocol options for upstream connections.
        typed_extension_protocol_options:
          envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
            "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
            explicit_http_config:
              http2_protocol_options: {}
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: xds_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: xdsserver
                    port_value: 18000

envoy-sidecar-proxy.yaml

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 80 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: local_service
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: local_cluster }
              http_filters:
              - name: envoy.filters.http.router

      clusters:
      - name: local_cluster
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: local_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address: { address: 127.0.0.1, port_value: 8080 }

docker-compose.yaml

    version: '3.3'

    services:
      envoy:
        image: envoyproxy/envoy-alpine:v1.18-latest
        volumes:
        - ./front-envoy.yaml:/etc/envoy/envoy.yaml
        networks:
          envoymesh:
            ipv4_address: 172.31.16.2
            aliases:
            - front-proxy
        depends_on:
        - webserver01
        - webserver02
        - xdsserver

      webserver01:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
          - HOST=127.0.0.1
        hostname: webserver01
        networks:
          envoymesh:
            ipv4_address: 172.31.16.11

      webserver01-sidecar:
        image: envoyproxy/envoy-alpine:v1.18-latest
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        network_mode: "service:webserver01"
        depends_on:
        - webserver01

      webserver02:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
          - HOST=127.0.0.1
        hostname: webserver02
        networks:
          envoymesh:
            ipv4_address: 172.31.16.12

      webserver02-sidecar:
        image: envoyproxy/envoy-alpine:v1.18-latest
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        network_mode: "service:webserver02"
        depends_on:
        - webserver02

      xdsserver:
        image: ikubernetes/envoy-xds-server:v0.1
        environment:
          - SERVER_PORT=18000
          - NODE_ID=envoy_front_proxy
          - RESOURCES_FILE=/etc/envoy-xds-server/config/config.yaml
        volumes:
        - ./resources:/etc/envoy-xds-server/config/
        networks:
          envoymesh:
            ipv4_address: 172.31.16.5
            aliases:
            - xdsserver
            - xds-service
        expose:
        - "18000"

    networks:
      envoymesh:
        driver: bridge
        ipam:
          config:
            - subnet: 172.31.16.0/24
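Each static `ipv4_address` in this Compose file has to sit inside the `envoymesh` subnet for the network setup to be valid. As a quick sanity check before running `docker-compose up`, the sketch below uses Python's standard `ipaddress` module. The helper name `outside_subnet` is hypothetical, and the addresses are copied by hand from the file above rather than parsed from it.

```python
import ipaddress
from typing import Dict, List


def outside_subnet(subnet: str, addresses: Dict[str, str]) -> List[str]:
    """Return the service names whose static address is not inside subnet."""
    network = ipaddress.ip_network(subnet)
    return [
        name
        for name, address in addresses.items()
        if ipaddress.ip_address(address) not in network
    ]


# Values copied by hand from the docker-compose.yaml above.
SUBNET = "172.31.16.0/24"
STATIC_ADDRESSES = {
    "envoy": "172.31.16.2",
    "webserver01": "172.31.16.11",
    "webserver02": "172.31.16.12",
    "xdsserver": "172.31.16.5",
}

if __name__ == "__main__":
    # An empty list means every address belongs to the subnet.
    print(outside_subnet(SUBNET, STATIC_ADDRESSES))
```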

Files in the resources directory

config.yaml

    name: myconfig
    spec:
      listeners:
      - name: listener_http
        address: 0.0.0.0
        port: 80
        routes:
        - name: local_route
          prefix: /
          clusters:
          - webcluster
      clusters:
      - name: webcluster
        endpoints:
        - address: 172.31.16.11
          port: 8080

config.yaml-v2

    name: myconfig
    spec:
      listeners:
      - name: listener_http
        address: 0.0.0.0
        port: 80
        routes:
        - name: local_route
          prefix: /
          clusters:
          - webcluster
      clusters:
      - name: webcluster
        endpoints:
        - address: 172.31.16.11
          port: 8080
        - address: 172.31.16.12
          port: 8080

Verification

    docker-compose up

In a second terminal window

    # View cluster and endpoint information
    root@test:~# curl 172.31.16.2:9901/clusters
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::true
    webcluster::172.31.16.11:8080::cx_active::0
    webcluster::172.31.16.11:8080::cx_connect_fail::0
    webcluster::172.31.16.11:8080::cx_total::0
    webcluster::172.31.16.11:8080::rq_active::0
    webcluster::172.31.16.11:8080::rq_error::0
    webcluster::172.31.16.11:8080::rq_success::0
    webcluster::172.31.16.11:8080::rq_timeout::0
    webcluster::172.31.16.11:8080::rq_total::0
    webcluster::172.31.16.11:8080::hostname::
    webcluster::172.31.16.11:8080::health_flags::healthy
    webcluster::172.31.16.11:8080::weight::1
    webcluster::172.31.16.11:8080::region::
    webcluster::172.31.16.11:8080::zone::
    webcluster::172.31.16.11:8080::sub_zone::
    webcluster::172.31.16.11:8080::canary::false
    webcluster::172.31.16.11:8080::priority::0
    webcluster::172.31.16.11:8080::success_rate::-1.0
    webcluster::172.31.16.11:8080::local_origin_success_rate::-1.0
    xds_cluster::observability_name::xds_cluster
    xds_cluster::default_priority::max_connections::1024
    xds_cluster::default_priority::max_pending_requests::1024
    xds_cluster::default_priority::max_requests::1024
    xds_cluster::default_priority::max_retries::3
    xds_cluster::high_priority::max_connections::1024
    xds_cluster::high_priority::max_pending_requests::1024
    xds_cluster::high_priority::max_requests::1024
    xds_cluster::high_priority::max_retries::3
    xds_cluster::added_via_api::false
    xds_cluster::172.31.16.5:18000::cx_active::1
    xds_cluster::172.31.16.5:18000::cx_connect_fail::0
    xds_cluster::172.31.16.5:18000::cx_total::1
    xds_cluster::172.31.16.5:18000::rq_active::3
    xds_cluster::172.31.16.5:18000::rq_error::0
    xds_cluster::172.31.16.5:18000::rq_success::0
    xds_cluster::172.31.16.5:18000::rq_timeout::0
    xds_cluster::172.31.16.5:18000::rq_total::3
    xds_cluster::172.31.16.5:18000::hostname::xdsserver
    xds_cluster::172.31.16.5:18000::health_flags::healthy
    xds_cluster::172.31.16.5:18000::weight::1
    xds_cluster::172.31.16.5:18000::region::
    xds_cluster::172.31.16.5:18000::zone::
    xds_cluster::172.31.16.5:18000::sub_zone::
    xds_cluster::172.31.16.5:18000::canary::false
    xds_cluster::172.31.16.5:18000::priority::0
    xds_cluster::172.31.16.5:18000::success_rate::-1.0
    xds_cluster::172.31.16.5:18000::local_origin_success_rate::-1.0

    # View the dynamic cluster information
    root@test:~# curl -s 172.31.16.2:9901/config_dump | jq '.configs[1].dynamic_active_clusters'
    [
      {
        "version_info": "411",
        "cluster": {
          "@type": "type.googleapis.com/envoy.config.cluster.v3.Cluster",
          "name": "webcluster",
          "type": "EDS",
          "eds_cluster_config": {
            "eds_config": {
              "api_config_source": {
                "api_type": "GRPC",
                "grpc_services": [
                  {
                    "envoy_grpc": {
                      "cluster_name": "xds_cluster"
                    }
                  }
                ],
                "set_node_on_first_message_only": true,
                "transport_api_version": "V3"
              },
              "resource_api_version": "V3"
            }
          },
          "connect_timeout": "5s",
          "dns_lookup_family": "V4_ONLY"
        },
        "last_updated": "2021-12-02T07:47:28.765Z"
      }
    ]

    # View the listener list
    root@test:~# curl 172.31.16.2:9901/listeners
    listener_http::0.0.0.0:80

    # View the dynamic listener information
    root@test:~# curl -s 172.31.16.2:9901/config_dump?resource=dynamic_listeners | jq '.configs[0].active_state.listener.address'
    {
      "socket_address": {
        "address": "0.0.0.0",
        "port_value": 80
      }
    }

    # Open an interactive shell in the xdsserver container and modify config.yaml,
    # adding the other endpoint to the file or making other changes
    root@test:/apps/servicemesh_in_practise-develop/Dynamic-Configuration/ads-grpc# docker-compose exec xdsserver sh
    / # cd /etc/envoy-xds-server/config/
    /etc/envoy-xds-server/config # cat config.yaml-v2 > config.yaml
    # Note: the change above can also be made directly in the mounted volume directory on the host.

    # View the endpoint information in the cluster again
    root@test:/apps/servicemesh_in_practise-develop/Dynamic-Configuration/ads-grpc# curl 172.31.16.2:9901/clusters
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::true
    webcluster::172.31.16.11:8080::cx_active::0
    webcluster::172.31.16.11:8080::cx_connect_fail::0
    webcluster::172.31.16.11:8080::cx_total::0
    webcluster::172.31.16.11:8080::rq_active::0
    webcluster::172.31.16.11:8080::rq_error::0
    webcluster::172.31.16.11:8080::rq_success::0
    webcluster::172.31.16.11:8080::rq_timeout::0
    webcluster::172.31.16.11:8080::rq_total::0
    webcluster::172.31.16.11:8080::hostname::
    webcluster::172.31.16.11:8080::health_flags::healthy
    webcluster::172.31.16.11:8080::weight::1
    webcluster::172.31.16.11:8080::region::
    webcluster::172.31.16.11:8080::zone::
    webcluster::172.31.16.11:8080::sub_zone::
    webcluster::172.31.16.11:8080::canary::false
    webcluster::172.31.16.11:8080::priority::0
    webcluster::172.31.16.11:8080::success_rate::-1.0
    webcluster::172.31.16.11:8080::local_origin_success_rate::-1.0
    webcluster::172.31.16.12:8080::cx_active::0
    webcluster::172.31.16.12:8080::cx_connect_fail::0
    webcluster::172.31.16.12:8080::cx_total::0
    webcluster::172.31.16.12:8080::rq_active::0
    webcluster::172.31.16.12:8080::rq_error::0
    webcluster::172.31.16.12:8080::rq_success::0
    webcluster::172.31.16.12:8080::rq_timeout::0
    webcluster::172.31.16.12:8080::rq_total::0
    webcluster::172.31.16.12:8080::hostname::
    webcluster::172.31.16.12:8080::health_flags::healthy
    webcluster::172.31.16.12:8080::weight::1
    webcluster::172.31.16.12:8080::region::
    webcluster::172.31.16.12:8080::zone::
    webcluster::172.31.16.12:8080::sub_zone::
    webcluster::172.31.16.12:8080::canary::false
    webcluster::172.31.16.12:8080::priority::0
    webcluster::172.31.16.12:8080::success_rate::-1.0
    webcluster::172.31.16.12:8080::local_origin_success_rate::-1.0
    xds_cluster::observability_name::xds_cluster
    xds_cluster::default_priority::max_connections::1024
    xds_cluster::default_priority::max_pending_requests::1024
    xds_cluster::default_priority::max_requests::1024
    xds_cluster::default_priority::max_retries::3
    xds_cluster::high_priority::max_connections::1024
    xds_cluster::high_priority::max_pending_requests::1024
    xds_cluster::high_priority::max_requests::1024
    xds_cluster::high_priority::max_retries::3
    xds_cluster::added_via_api::false
    xds_cluster::172.31.16.5:18000::cx_active::1
    xds_cluster::172.31.16.5:18000::cx_connect_fail::0
    xds_cluster::172.31.16.5:18000::cx_total::1
    xds_cluster::172.31.16.5:18000::rq_active::3
    xds_cluster::172.31.16.5:18000::rq_error::0
    xds_cluster::172.31.16.5:18000::rq_success::0
    xds_cluster::172.31.16.5:18000::rq_timeout::0
    xds_cluster::172.31.16.5:18000::rq_total::3
    xds_cluster::172.31.16.5:18000::hostname::xdsserver
    xds_cluster::172.31.16.5:18000::health_flags::healthy
    xds_cluster::172.31.16.5:18000::weight::1
    xds_cluster::172.31.16.5:18000::region::
    xds_cluster::172.31.16.5:18000::zone::
    xds_cluster::172.31.16.5:18000::sub_zone::
    xds_cluster::172.31.16.5:18000::canary::false
    xds_cluster::172.31.16.5:18000::priority::0
    xds_cluster::172.31.16.5:18000::success_rate::-1.0
    xds_cluster::172.31.16.5:18000::local_origin_success_rate::-1.0
    # The new endpoint has been added
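The `jq '.configs[1].dynamic_active_clusters'` query in the transcript relies on the clusters section being the second entry of `configs`. As a hedged alternative, the section can be located by its key instead of its position. In the sketch below, `dynamic_clusters` is a hypothetical helper name and `SAMPLE` is a hand-trimmed stand-in for the real dump, keeping only the fields shown in the output above.

```python
import json
from typing import Any, Dict, List


def dynamic_clusters(config_dump: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the cluster objects listed under dynamic_active_clusters."""
    for section in config_dump.get("configs", []):
        # Pick the section by key so its index in "configs" does not matter.
        if "dynamic_active_clusters" in section:
            return [entry["cluster"] for entry in section["dynamic_active_clusters"]]
    return []


# Hand-trimmed sample; a real dump carries many more fields per section.
SAMPLE = json.loads("""
{
  "configs": [
    {"bootstrap": {}},
    {
      "dynamic_active_clusters": [
        {"version_info": "411", "cluster": {"name": "webcluster", "type": "EDS"}}
      ]
    }
  ]
}
""")

if __name__ == "__main__":
    for cluster in dynamic_clusters(SAMPLE):
        print(cluster["name"], cluster["type"])
```

Against a running proxy, the input would be the parsed body of `curl -s 172.31.16.2:9901/config_dump`, for example via `json.load(sys.stdin)`.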

5. lds-cds-grpc

Lab environment

    Six services:
    envoy: front proxy, address 172.31.15.2
    webserver01: the first backend service
    webserver01-sidecar: sidecar proxy for the first backend service, address 172.31.15.11
    webserver02: the second backend service
    webserver02-sidecar: sidecar proxy for the second backend service, address 172.31.15.12
    xdsserver: xDS management server, address 172.31.15.5

front-envoy.yaml

    node:
      id: envoy_front_proxy
      cluster: webcluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      lds_config:
        resource_api_version: V3
        api_config_source:
          api_type: GRPC
          transport_api_version: V3
          grpc_services:
          - envoy_grpc:
              cluster_name: xds_cluster

      cds_config:
        resource_api_version: V3
        api_config_source:
          api_type: GRPC
          transport_api_version: V3
          grpc_services:
          - envoy_grpc:
              cluster_name: xds_cluster

    static_resources:
      clusters:
      - name: xds_cluster
        connect_timeout: 0.25s
        type: STRICT_DNS
        # The extension_protocol_options field is used to provide extension-specific protocol options for upstream connections.
        typed_extension_protocol_options:
          envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
            "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
            explicit_http_config:
              http2_protocol_options: {}
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: xds_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: xdsserver
                    port_value: 18000

envoy-sidecar-proxy.yaml

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 80 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: local_service
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: local_cluster }
              http_filters:
              - name: envoy.filters.http.router

      clusters:
      - name: local_cluster
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: local_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address: { address: 127.0.0.1, port_value: 8080 }

docker-compose.yaml

    version: '3.3'

    services:
      envoy:
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./front-envoy.yaml:/etc/envoy/envoy.yaml
        networks:
          envoymesh:
            ipv4_address: 172.31.15.2
            aliases:
            - front-proxy
        depends_on:
        - webserver01
        - webserver02
        - xdsserver

      webserver01:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
          - HOST=127.0.0.1
        hostname: webserver01
        networks:
          envoymesh:
            ipv4_address: 172.31.15.11

      webserver01-sidecar:
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        network_mode: "service:webserver01"
        depends_on:
        - webserver01

      webserver02:
        image: ikubernetes/demoapp:v1.0
        environment:
          - PORT=8080
          - HOST=127.0.0.1
        hostname: webserver02
        networks:
          envoymesh:
            ipv4_address: 172.31.15.12

      webserver02-sidecar:
        image: envoyproxy/envoy-alpine:v1.20.0
        environment:
          - ENVOY_UID=0
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        network_mode: "service:webserver02"
        depends_on:
        - webserver02

      xdsserver:
        image: ikubernetes/envoy-xds-server:v0.1
        environment:
          - SERVER_PORT=18000
          - NODE_ID=envoy_front_proxy
          - RESOURCES_FILE=/etc/envoy-xds-server/config/config.yaml
        volumes:
        - ./resources:/etc/envoy-xds-server/config/
        networks:
          envoymesh:
            ipv4_address: 172.31.15.5
            aliases:
            - xdsserver
            - xds-service
        expose:
        - "18000"

    networks:
      envoymesh:
        driver: bridge
        ipam:
          config:
            - subnet: 172.31.15.0/24

Files in the resources directory

config.yaml

    name: myconfig
    spec:
      listeners:
      - name: listener_http
        address: 0.0.0.0
        port: 80
        routes:
        - name: local_route
          prefix: /
          clusters:
          - webcluster
      clusters:
      - name: webcluster
        endpoints:
        - address: 172.31.15.11
          port: 8080

config.yaml-v2

    name: myconfig
    spec:
      listeners:
      - name: listener_http
        address: 0.0.0.0
        port: 80
        routes:
        - name: local_route
          prefix: /
          clusters:
          - webcluster
      clusters:
      - name: webcluster
        endpoints:
        - address: 172.31.15.11
          port: 8080
        - address: 172.31.15.12
          port: 8080

Verification

    docker-compose up

In a second terminal window

    # View cluster and endpoint information
    root@test:/apps/servicemesh_in_practise-develop/Dynamic-Configuration/ads-grpc# curl 172.31.15.2:9901/clusters
    xds_cluster::observability_name::xds_cluster
    xds_cluster::default_priority::max_connections::1024
    xds_cluster::default_priority::max_pending_requests::1024
    xds_cluster::default_priority::max_requests::1024
    xds_cluster::default_priority::max_retries::3
    xds_cluster::high_priority::max_connections::1024
    xds_cluster::high_priority::max_pending_requests::1024
    xds_cluster::high_priority::max_requests::1024
    xds_cluster::high_priority::max_retries::3
    xds_cluster::added_via_api::false
    xds_cluster::172.31.15.5:18000::cx_active::1
    xds_cluster::172.31.15.5:18000::cx_connect_fail::0
    xds_cluster::172.31.15.5:18000::cx_total::1
    xds_cluster::172.31.15.5:18000::rq_active::4
    xds_cluster::172.31.15.5:18000::rq_error::0
    xds_cluster::172.31.15.5:18000::rq_success::0
    xds_cluster::172.31.15.5:18000::rq_timeout::0
    xds_cluster::172.31.15.5:18000::rq_total::4
    xds_cluster::172.31.15.5:18000::hostname::xdsserver
    xds_cluster::172.31.15.5:18000::health_flags::healthy
    xds_cluster::172.31.15.5:18000::weight::1
    xds_cluster::172.31.15.5:18000::region::
    xds_cluster::172.31.15.5:18000::zone::
    xds_cluster::172.31.15.5:18000::sub_zone::
    xds_cluster::172.31.15.5:18000::canary::false
    xds_cluster::172.31.15.5:18000::priority::0
    xds_cluster::172.31.15.5:18000::success_rate::-1.0
    xds_cluster::172.31.15.5:18000::local_origin_success_rate::-1.0
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::true
    webcluster::172.31.15.11:8080::cx_active::0
    webcluster::172.31.15.11:8080::cx_connect_fail::0
    webcluster::172.31.15.11:8080::cx_total::0
    webcluster::172.31.15.11:8080::rq_active::0
    webcluster::172.31.15.11:8080::rq_error::0
    webcluster::172.31.15.11:8080::rq_success::0
    webcluster::172.31.15.11:8080::rq_timeout::0
    webcluster::172.31.15.11:8080::rq_total::0
    webcluster::172.31.15.11:8080::hostname::
    webcluster::172.31.15.11:8080::health_flags::healthy
    webcluster::172.31.15.11:8080::weight::1
    webcluster::172.31.15.11:8080::region::
    webcluster::172.31.15.11:8080::zone::
    webcluster::172.31.15.11:8080::sub_zone::
    webcluster::172.31.15.11:8080::canary::false
    webcluster::172.31.15.11:8080::priority::0
    webcluster::172.31.15.11:8080::success_rate::-1.0
    webcluster::172.31.15.11:8080::local_origin_success_rate::-1.0

    # Alternatively, view the dynamic cluster information
    root@test:/apps/servicemesh_in_practise-develop/Dynamic-Configuration/ads-grpc# curl -s 172.31.15.2:9901/config_dump | jq '.configs[1].dynamic_active_clusters'
    [
      {
        "version_info": "411",
        "cluster": {
          "@type": "type.googleapis.com/envoy.config.cluster.v3.Cluster",
          "name": "webcluster",
          "type": "EDS",
          "eds_cluster_config": {
            "eds_config": {
              "api_config_source": {
                "api_type": "GRPC",
                "grpc_services": [
                  {
                    "envoy_grpc": {
                      "cluster_name": "xds_cluster"
                    }
                  }
                ],
                "set_node_on_first_message_only": true,
                "transport_api_version": "V3"
              },
              "resource_api_version": "V3"
            }
          },
          "connect_timeout": "5s",
          "dns_lookup_family": "V4_ONLY"
        },
        "last_updated": "2021-12-02T08:05:20.650Z"
      }
    ]

    # View the listener list
    root@test:/apps/servicemesh_in_practise-develop/Dynamic-Configuration/ads-grpc# curl 172.31.15.2:9901/listeners
    listener_http::0.0.0.0:80

    # Alternatively, view the dynamic listener information
    root@test:/apps/servicemesh_in_practise-develop/Dynamic-Configuration/ads-grpc# curl -s 172.31.15.2:9901/config_dump?resource=dynamic_listeners | jq '.configs[0].active_state.listener.address'
    {
      "socket_address": {
        "address": "0.0.0.0",
        "port_value": 80
      }
    }

    # Open an interactive shell in the xdsserver container and modify config.yaml,
    # adding the other endpoint to the file or making other changes
    root@test:/apps/servicemesh_in_practise-develop/Dynamic-Configuration/ads-grpc# docker exec -it lds-cds-grpc_xdsserver_1 sh
    / # cd /etc/envoy-xds-server/config
    /etc/envoy-xds-server/config # cat config.yaml-v2 > config.yaml
    # Note: the change above can also be made directly in the mounted volume directory on the host.

    # View the endpoint information in the cluster again
    root@test:/apps/servicemesh_in_practise-develop/Dynamic-Configuration/ads-grpc# curl 172.31.15.2:9901/clusters
    xds_cluster::observability_name::xds_cluster
    xds_cluster::default_priority::max_connections::1024
    xds_cluster::default_priority::max_pending_requests::1024
    xds_cluster::default_priority::max_requests::1024
    xds_cluster::default_priority::max_retries::3
    xds_cluster::high_priority::max_connections::1024
    xds_cluster::high_priority::max_pending_requests::1024
    xds_cluster::high_priority::max_requests::1024
    xds_cluster::high_priority::max_retries::3
    xds_cluster::added_via_api::false
    xds_cluster::172.31.15.5:18000::cx_active::1
    xds_cluster::172.31.15.5:18000::cx_connect_fail::0
    xds_cluster::172.31.15.5:18000::cx_total::1
    xds_cluster::172.31.15.5:18000::rq_active::4
    xds_cluster::172.31.15.5:18000::rq_error::0
    xds_cluster::172.31.15.5:18000::rq_success::0
    xds_cluster::172.31.15.5:18000::rq_timeout::0
    xds_cluster::172.31.15.5:18000::rq_total::4
    xds_cluster::172.31.15.5:18000::hostname::xdsserver
    xds_cluster::172.31.15.5:18000::health_flags::healthy
    xds_cluster::172.31.15.5:18000::weight::1
    xds_cluster::172.31.15.5:18000::region::
    xds_cluster::172.31.15.5:18000::zone::
    xds_cluster::172.31.15.5:18000::sub_zone::
    xds_cluster::172.31.15.5:18000::canary::false
    xds_cluster::172.31.15.5:18000::priority::0
    xds_cluster::172.31.15.5:18000::success_rate::-1.0
    xds_cluster::172.31.15.5:18000::local_origin_success_rate::-1.0
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::true
    webcluster::172.31.15.11:8080::cx_active::0
    webcluster::172.31.15.11:8080::cx_connect_fail::0
    webcluster::172.31.15.11:8080::cx_total::0
    webcluster::172.31.15.11:8080::rq_active::0
    webcluster::172.31.15.11:8080::rq_error::0
    webcluster::172.31.15.11:8080::rq_success::0
    webcluster::172.31.15.11:8080::rq_timeout::0
    webcluster::172.31.15.11:8080::rq_total::0
    webcluster::172.31.15.11:8080::hostname::
    webcluster::172.31.15.11:8080::health_flags::healthy
    webcluster::172.31.15.11:8080::weight::1
    webcluster::172.31.15.11:8080::region::
    webcluster::172.31.15.11:8080::zone::
    webcluster::172.31.15.11:8080::sub_zone::
    webcluster::172.31.15.11:8080::canary::false
    webcluster::172.31.15.11:8080::priority::0
    webcluster::172.31.15.11:8080::success_rate::-1.0
    webcluster::172.31.15.11:8080::local_origin_success_rate::-1.0
    webcluster::172.31.15.12:8080::cx_active::0
    webcluster::172.31.15.12:8080::cx_connect_fail::0
    webcluster::172.31.15.12:8080::cx_total::0
    webcluster::172.31.15.12:8080::rq_active::0
    webcluster::172.31.15.12:8080::rq_error::0
    webcluster::172.31.15.12:8080::rq_success::0
    webcluster::172.31.15.12:8080::rq_timeout::0
    webcluster::172.31.15.12:8080::rq_total::0
    webcluster::172.31.15.12:8080::hostname::
    webcluster::172.31.15.12:8080::health_flags::healthy
    webcluster::172.31.15.12:8080::weight::1
    webcluster::172.31.15.12:8080::region::
    webcluster::172.31.15.12:8080::zone::
    webcluster::172.31.15.12:8080::sub_zone::
    webcluster::172.31.15.12:8080::canary::false
    webcluster::172.31.15.12:8080::priority::0
    webcluster::172.31.15.12:8080::success_rate::-1.0
    webcluster::172.31.15.12:8080::local_origin_success_rate::-1.0
    # The new endpoint has joined
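Comparing two long `/clusters` listings by eye is error-prone, so the final check can also be scripted. The sketch below parses the text format shown above into a cluster-to-endpoints mapping and polls until a given endpoint appears. The names `endpoints_by_cluster`, `fetch_clusters` and `wait_for_endpoint` are hypothetical, and the parser relies only on the `cluster::host:port::stat::value` line shape visible in the transcript.

```python
import time
import urllib.request
from typing import Callable, Dict, List


def endpoints_by_cluster(clusters_text: str) -> Dict[str, List[str]]:
    """Group host:port endpoints by cluster name from /clusters text output."""
    found: Dict[str, set] = {}
    for line in clusters_text.splitlines():
        parts = line.strip().split("::")
        # Per-host lines look like: cluster::host:port::stat::value
        if len(parts) == 4 and parts[2] == "health_flags":
            found.setdefault(parts[0], set()).add(parts[1])
    return {name: sorted(hosts) for name, hosts in found.items()}


def fetch_clusters(admin_base: str) -> str:
    """Fetch the /clusters page from an Envoy admin address."""
    with urllib.request.urlopen(admin_base + "/clusters", timeout=2) as resp:
        return resp.read().decode("utf-8")


def wait_for_endpoint(fetch: Callable[[], str], cluster: str, endpoint: str,
                      attempts: int = 10, delay: float = 1.0) -> bool:
    """Poll fetch() until endpoint is listed under cluster, or give up."""
    for attempt in range(attempts):
        if endpoint in endpoints_by_cluster(fetch()).get(cluster, []):
            return True
        if attempt + 1 < attempts:
            time.sleep(delay)
    return False
```

In this lab the call would look like `wait_for_endpoint(lambda: fetch_clusters("http://172.31.15.2:9901"), "webcluster", "172.31.15.12:8080")`. Passing the fetch function in as an argument keeps the polling logic testable without a running proxy.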
