"PyTorch Deep Learning Practice" (《PyTorch深度学习实践》) by 刘二大人, Lecture 7

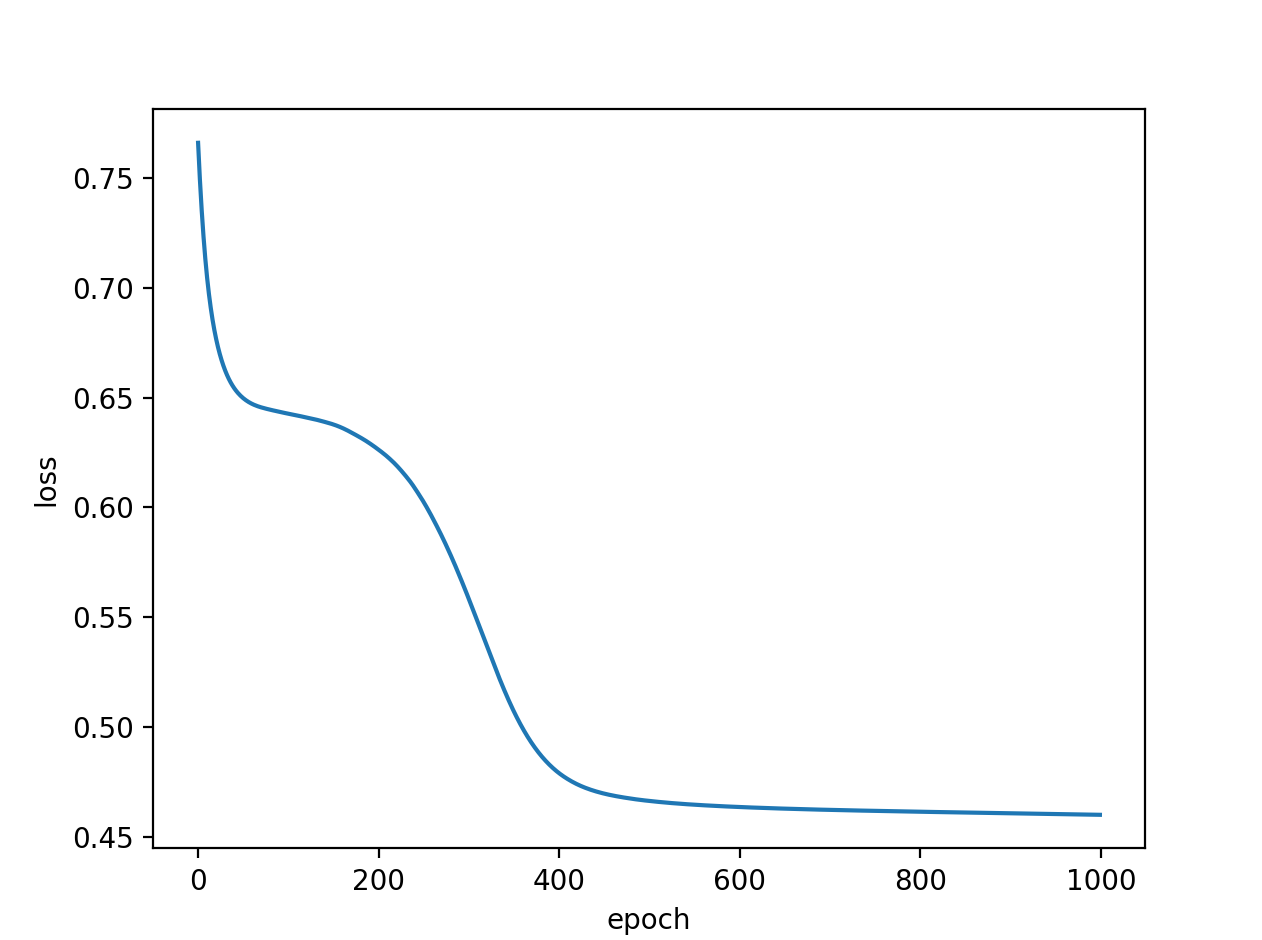

```python
import numpy as np
import torch
import matplotlib.pyplot as plt
import os

# Workaround for the "duplicate OpenMP runtime" error on some installations
os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'

# 1. Prepare the dataset
xy = np.loadtxt('diabetes.csv', delimiter=',', dtype=np.float32)
# The first ':' selects all rows; ':-1' keeps every column except the last one
x_data = torch.from_numpy(xy[:, :-1])
# Indexing with [-1] (a list) keeps the result a 2-D matrix of shape (N, 1)
y_data = torch.from_numpy(xy[:, [-1]])


# 2. Design the model using a class
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)  # each input sample x has 8 features
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.activate = torch.nn.ReLU()
        # Treat sigmoid as a layer of the network rather than a plain function call
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        x = self.activate(self.linear1(x))
        x = self.activate(self.linear2(x))
        x = self.sigmoid(self.linear3(x))  # y hat
        return x


model = Model()

# 3. Construct the loss and the optimizer
# criterion = torch.nn.BCELoss(size_average=True)  # deprecated form
criterion = torch.nn.BCELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

epoch_list = []
loss_list = []

# 4. Training cycle: forward, backward, update
for epoch in range(1000):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())
    epoch_list.append(epoch)
    loss_list.append(loss.item())

    optimizer.zero_grad()
    loss.backward()

    optimizer.step()

# Test
# print('w = ', model.linear3.weight.data)
# print('b = ', model.linear3.bias.data)
# x_test = torch.Tensor([0.176471,0.256281,0.147541,-0.474747,-0.728132,-0.0730253,-0.891546,-0.333333])  # 0
# x_test = torch.Tensor([-0.0588235,-0.00502513,0.377049,0,0,0.0551417,-0.735269,-0.0333333])  # 1
# y_test = model(x_test)
# print('y_pred = ', y_test.data)

plt.plot(epoch_list, loss_list)
plt.ylabel('loss')
plt.xlabel('epoch')
plt.show()
```

996 0.4599120318889618

997 0.4599059224128723

998 0.45989990234375

999 0.45989376306533813

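After 1000 epochs of training, the loss sits at around 0.45 and the predictions are not accurate. Using sigmoid alone versus adding ReLU does not seem to make much difference. If anything, with sigmoid only, the result improves slightly as the number of epochs grows, but even with many more epochs it is hard to reach a satisfactory result. The best I reached was a loss of about 0.3, probably because the model being learned is too simple.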
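To get a feel for what these loss values mean, here is a minimal sketch using only the standard library. It relies on the fact that mean binary cross-entropy is the average of `-log(p)` over the probability `p` given to the true label, so `exp(-loss)` is the geometric mean of those probabilities. The helper names `bce_mean` and `typical_correct_probability`, and the toy predictions, are made up for illustration and are not part of the course code.

```python
import math


def bce_mean(probs, labels):
    """Mean binary cross-entropy over paired predictions and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)


def typical_correct_probability(mean_loss):
    """Geometric mean of the probability assigned to the true label."""
    return math.exp(-mean_loss)


# Hypothetical predictions and labels, for illustration only.
probs = [0.9, 0.2, 0.6, 0.4]
labels = [1, 0, 1, 0]
toy_loss = bce_mean(probs, labels)

# Final losses reported in this post (ReLU run and sigmoid-only run).
p_relu = typical_correct_probability(0.4599)
p_sigmoid = typical_correct_probability(0.3091)
print(round(toy_loss, 4), round(p_relu, 3), round(p_sigmoid, 3))
```

Read this way, a loss near 0.46 corresponds to giving the true label a probability of roughly 0.63 on (geometric) average, and a loss near 0.31 to roughly 0.73. A model that always outputs 0.5 would score `ln 2`, about 0.69, so both runs are better than guessing but still far from confident.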

The code below uses a very large number of epochs, so think twice before running it on an old machine.

```python
import numpy as np
import torch
import matplotlib.pyplot as plt
import os

# Workaround for the "duplicate OpenMP runtime" error on some installations
os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'

# 1. Prepare the dataset
xy = np.loadtxt('diabetes.csv', delimiter=',', dtype=np.float32)
# The first ':' selects all rows; ':-1' keeps every column except the last one
x_data = torch.from_numpy(xy[:, :-1])
# Indexing with [-1] (a list) keeps the result a 2-D matrix of shape (N, 1)
y_data = torch.from_numpy(xy[:, [-1]])


# 2. Design the model using a class
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)  # each input sample x has 8 features
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        # self.activate = torch.nn.ReLU()
        # Treat sigmoid as a layer of the network rather than a plain function call
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        # x = self.activate(self.linear1(x))
        # x = self.activate(self.linear2(x))
        x = self.sigmoid(self.linear1(x))
        x = self.sigmoid(self.linear2(x))
        x = self.sigmoid(self.linear3(x))  # y hat
        return x


model = Model()

# 3. Construct the loss and the optimizer
# criterion = torch.nn.BCELoss(size_average=True)  # deprecated form
criterion = torch.nn.BCELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

epoch_list = []
loss_list = []

# 4. Training cycle: forward, backward, update
for epoch in range(1000000):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())
    epoch_list.append(epoch)
    loss_list.append(loss.item())

    optimizer.zero_grad()
    loss.backward()

    optimizer.step()

# Test
# print('w = ', model.linear3.weight.data)
# print('b = ', model.linear3.bias.data)
# x_test = torch.Tensor([0.176471,0.256281,0.147541,-0.474747,-0.728132,-0.0730253,-0.891546,-0.333333])  # 0
# x_test = torch.Tensor([-0.0588235,-0.00502513,0.377049,0,0,0.0551417,-0.735269,-0.0333333])  # 1
# y_test = model(x_test)
# print('y_pred = ', y_test.data)

plt.plot(epoch_list, loss_list)
plt.ylabel('loss')
plt.xlabel('epoch')
plt.show()
```

Final loss values:

999996 0.30911391973495483

999997 0.30911383032798767

999998 0.3091137111186981

999999 0.3091137409210205
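These last values barely move, and the final one is even slightly higher than the one before it, which suggests the run had flattened out long before epoch 1,000,000. A minimal sketch of a plateau check follows. The helper `has_plateaued` and its `window` and `min_delta` defaults are assumptions chosen for illustration, not something from the course.

```python
def has_plateaued(losses, window=3, min_delta=1e-6):
    """Return True if the loss dropped by less than min_delta over the last `window` steps."""
    if len(losses) <= window:
        return False  # not enough history to judge
    improvement = losses[-window - 1] - losses[-1]
    return improvement < min_delta


# Last four losses printed by the sigmoid-only run (1,000,000 epochs).
sigmoid_tail = [0.30911391973495483, 0.30911383032798767,
                0.3091137111186981, 0.3091137409210205]

# Last four losses printed by the ReLU run (1000 epochs).
relu_tail = [0.4599120318889618, 0.4599059224128723,
             0.45989990234375, 0.45989376306533813]

print(has_plateaued(sigmoid_tail), has_plateaued(relu_tail))
```

Inside the training loop, one could call `has_plateaued(loss_list)` after appending the current loss and `break` when it returns `True`, which would spare an old machine most of the million iterations. With these thresholds the 1000-epoch ReLU run is not flagged, since its loss was still dropping by about 6e-6 per epoch when it stopped.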