《PyTorch深度学习实践》 by 刘二大人, Lecture 2

刘二大人's hand-holding PyTorch tutorial.

I'd say it counts as learning PyTorch from zero. With my current background it feels fairly easy, which suits me just fine~

In-class exercise:

    import numpy as np
    import matplotlib.pyplot as plt

    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    # Forward pass; w is the loop variable set in the sweep below
    def forward(x):
        return x * w

    # Squared-error loss for a single sample
    def loss(x, y):
        y_pred = forward(x)
        return (y_pred - y) * (y_pred - y)

    w_list = []    # candidate values of the weight w
    mse_list = []  # mean squared error for each candidate w

    for w in np.arange(0.0, 4.1, 0.1):
        print('w=', w)
        l_sum = 0
        # zip pairs x_data and y_data into (x_val, y_val) tuples,
        # i.e. each iteration takes one value from each list
        for x_val, y_val in zip(x_data, y_data):
            y_pred_val = forward(x_val)
            loss_val = loss(x_val, y_val)
            l_sum += loss_val
            print('\t', x_val, y_val, y_pred_val, loss_val)
        print('MSE=', l_sum / 3)
        w_list.append(w)
        mse_list.append(l_sum / 3)

    plt.plot(w_list, mse_list)
    plt.ylabel('Loss')
    plt.xlabel('w')
    plt.show()
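The same sweep can also be written without the explicit Python loops. This is only a sketch under my own assumptions, not the way the lecture does it: the names `w_values`, `y_pred`, `mse_values` and `best_w` are made up for illustration, and the plotting is left out so that only the numbers remain.

```python
import numpy as np

x_data = np.array([1.0, 2.0, 3.0])
y_data = np.array([2.0, 4.0, 6.0])

# Same candidate weights as the loop version.
w_values = np.arange(0.0, 4.1, 0.1)

# Turn w into a column so it broadcasts against the three samples:
# row k holds the predictions of all samples for w_values[k].
y_pred = w_values[:, None] * x_data

# Mean squared error per candidate weight (average over the samples).
mse_values = ((y_pred - y_data) ** 2).mean(axis=1)

# Candidate with the smallest MSE (hypothetical helper name).
best_w = w_values[np.argmin(mse_values)]
print('best w =', best_w, 'MSE =', mse_values.min())
```

Since the data follow y = 2x exactly, the smallest MSE on this grid should sit at the candidate closest to w = 2, which matches the bottom of the plotted curve.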

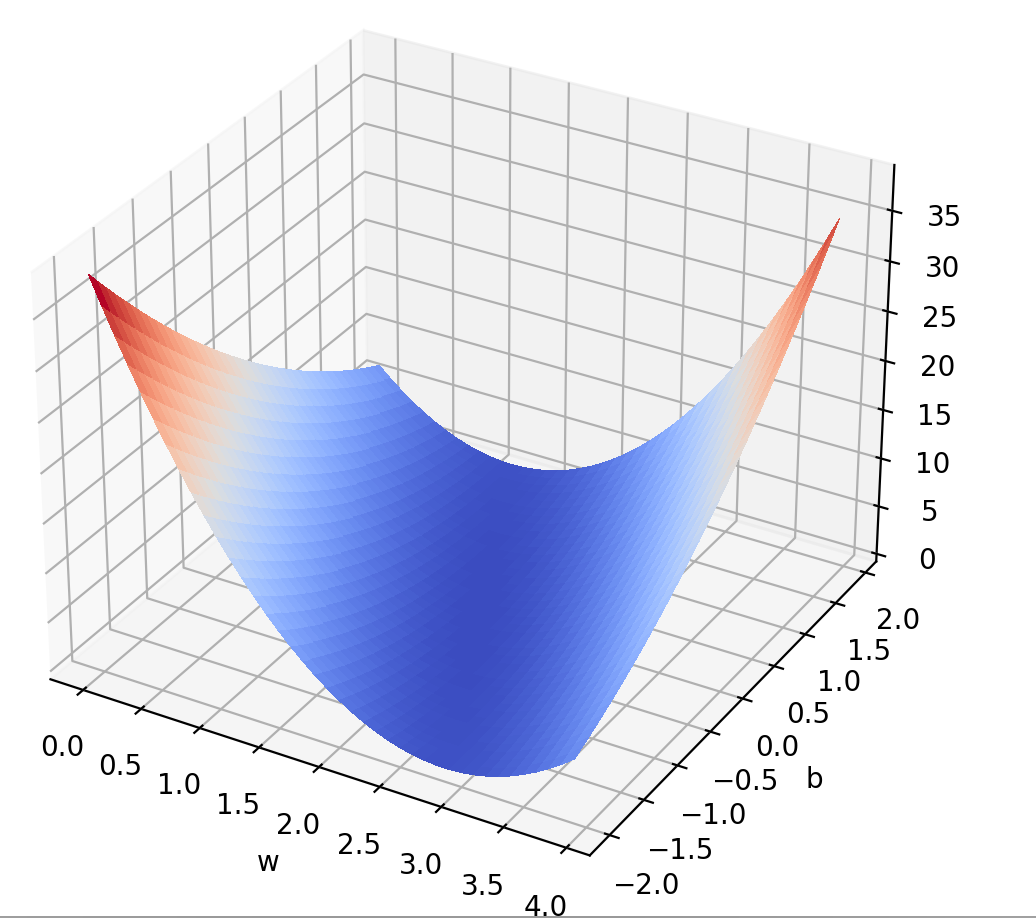

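Homework: (It took me two baffled hours to get this working. It was my first time reading Python code carefully, and my "deep" Java background got in the way: I started straight away with nested for loops, and only after fiddling with them for ages did I realize that Python simply isn't used that way here... The takeaway: when the programming language changes, the way of thinking has to change too...)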

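    import numpy as np
    import matplotlib.pyplot as plt
    # Matplotlib 3.2+ registers the '3d' projection automatically,
    # so the mpl_toolkits.mplot3d import is no longer needed

    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    # Linear model with a bias; w and b are the 2-D grids built below
    def forward(x):
        return x * w + b

    # Squared-error loss, evaluated elementwise over the whole grid
    def loss(x, y):
        y_pred = forward(x)
        return (y_pred - y) * (y_pred - y)

    w_list = np.arange(0.0, 4.0, 0.1)
    b_list = np.arange(-2, 2.0, 0.1)
    # meshgrid expands the two 1-D ranges into every (w, b) combination
    w, b = np.meshgrid(w_list, b_list)

    l_sum = 0
    for x_val, y_val in zip(x_data, y_data):
        loss_val = loss(x_val, y_val)
        l_sum += loss_val
    print(l_sum)
    mse = l_sum / 3

    fig = plt.figure()
    # Documented way to create 3-D axes; stands in for the original
    # Axes3D(fig, auto_add_to_figure=False) followed by fig.add_axes(ax)
    ax = fig.add_subplot(projection='3d')
    surf = ax.plot_surface(w, b, mse, rstride=1, cstride=1,
                           cmap='coolwarm', linewidth=0, antialiased=False)
    # fig.colorbar(surf, shrink=0.5, aspect=5)
    ax.set_xlabel("w")
    ax.set_ylabel("b")
    plt.title("loss")
    plt.show()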
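To convince myself that the meshgrid version really does the same job as the nested for loops I first reached for, here is a small comparison. It is a sketch under my own assumptions rather than anything from the lecture: `mse_loop`, `mse_grid`, `w_grid` and `b_grid` are names I made up, and plotting is omitted.

```python
import numpy as np

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w_list = np.arange(0.0, 4.0, 0.1)
b_list = np.arange(-2.0, 2.0, 0.1)

# Java-style version: one scalar MSE per (b, w) pair via nested loops.
mse_loop = np.zeros((len(b_list), len(w_list)))
for i, b in enumerate(b_list):
    for j, w in enumerate(w_list):
        errors = [(x * w + b - y) ** 2 for x, y in zip(x_data, y_data)]
        mse_loop[i, j] = sum(errors) / len(errors)

# NumPy-style version: meshgrid builds 2-D grids in which
# w_grid[i, j] == w_list[j] and b_grid[i, j] == b_list[i],
# so one expression evaluates the loss for every pair at once.
w_grid, b_grid = np.meshgrid(w_list, b_list)
mse_grid = sum((x * w_grid + b_grid - y) ** 2
               for x, y in zip(x_data, y_data)) / len(x_data)

# Both approaches should agree up to floating-point rounding.
print(np.allclose(mse_loop, mse_grid))

# Grid point with the smallest MSE.
i, j = np.unravel_index(np.argmin(mse_grid), mse_grid.shape)
print('w =', w_grid[i, j], 'b =', b_grid[i, j])
```

The nested loops are not wrong, just slower and more verbose; the grid form leaves the looping to NumPy. Because the data follow y = 2x, the lowest point of the surface should be near w = 2, b = 0.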