学生课程分数的Spark SQL分析

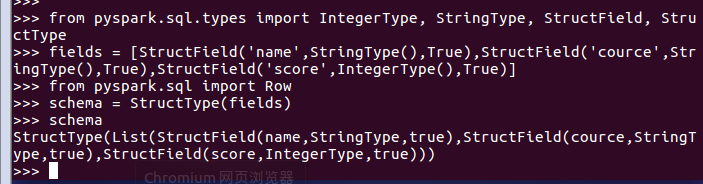

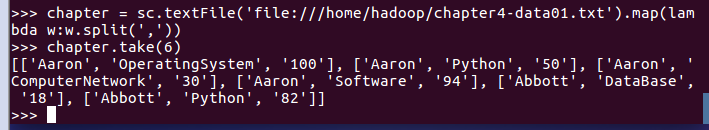

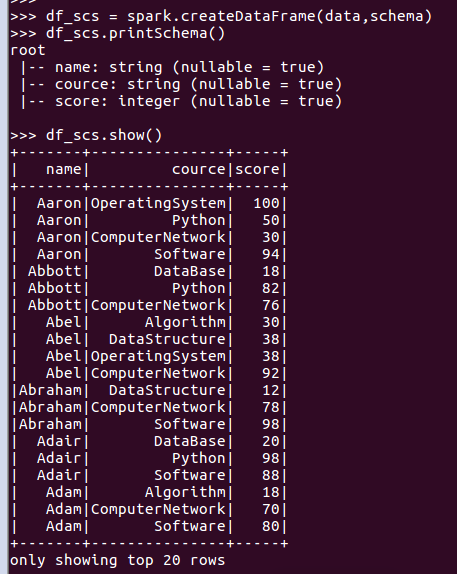

读学生课程分数文件chapter4-data01.txt,创建DataFrame。

1.生成“表头”

2.生成“表中的记录”

3.把“表头”和“表中的记录”拼装在一起

用DataFrame的操作或SQL语句完成以下数据分析要求,并和用RDD操作的实现进行对比:

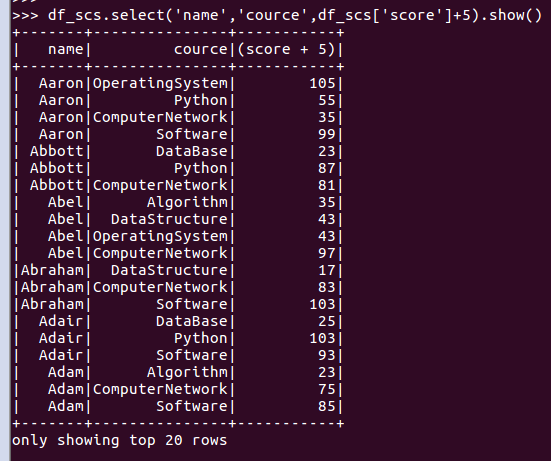

- 每个分数+5分。

-

df_scs.select('name','cource',df_scs['score']+5).show()

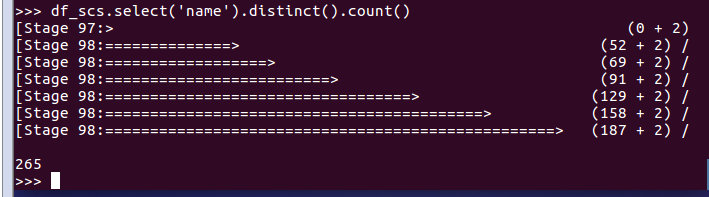

- 总共有多少学生?

- df_scs.select('name').distinct().count()

-

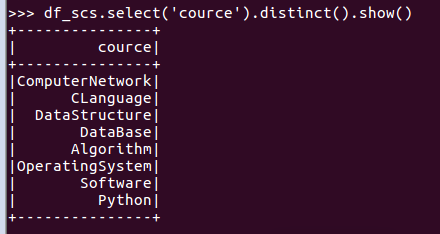

- 总共开设了哪些课程?

- df_scs.select('cource').distinct().show()

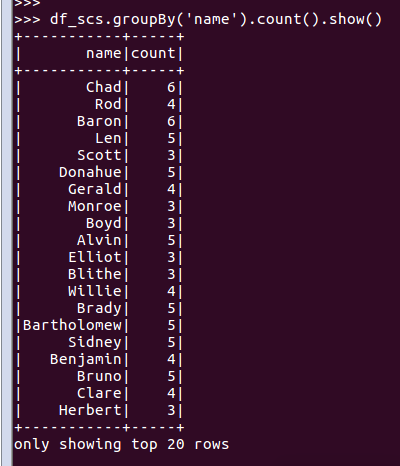

- 每个学生选修了多少门课?

- df_scs.groupBy('name').count().show()

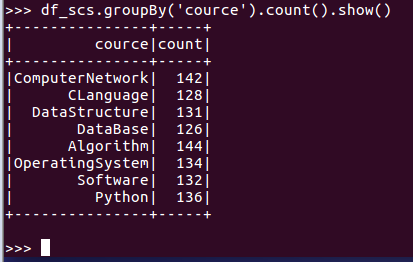

- 每门课程有多少个学生选?

- df_scs.groupBy('cource').count().show()

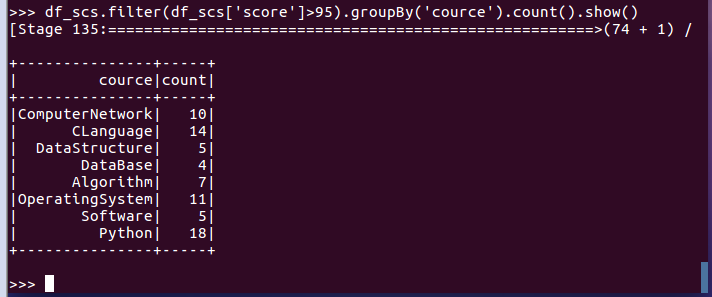

- 每门课程大于95分的学生人数?

- df_scs.filter(df_scs['score']>95).groupBy('cource').count().show()

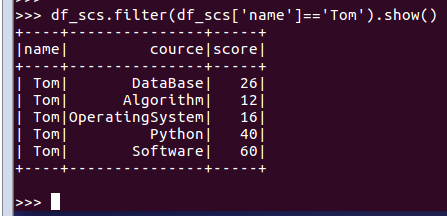

- Tom选修了几门课?每门课多少分?

- df_scs.filter(df_scs['name']=='Tom').show()

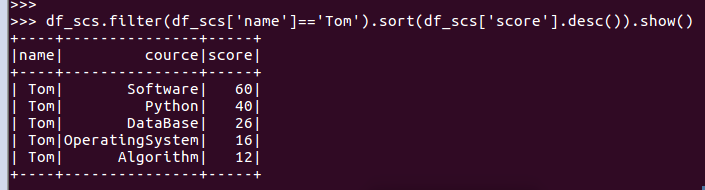

- Tom的成绩按分数大小排序。

- df_scs.filter(df_scs['name']=='Tom').sort(df_scs['score'].desc()).show()

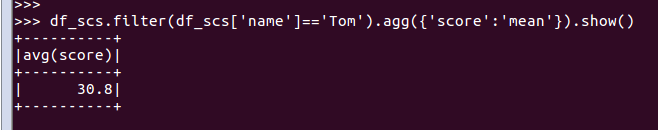

- Tom的平均分。

- df_scs.filter(df_scs['name']=='Tom').agg({'score':'mean'}).show()

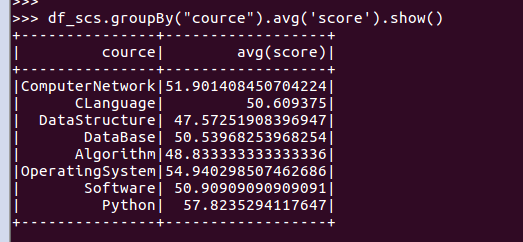

- 求每门课的平均分,最高分,最低分。

- 每门课的平均分:

- df_scs.groupBy("cource").avg('score').show()

df_scs.groupBy("cource").agg({'score':'mean'}).show()

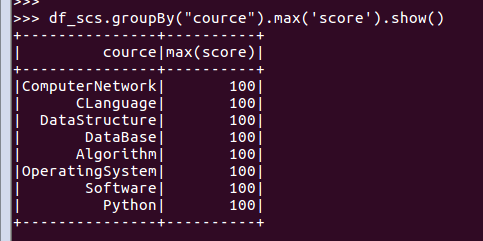

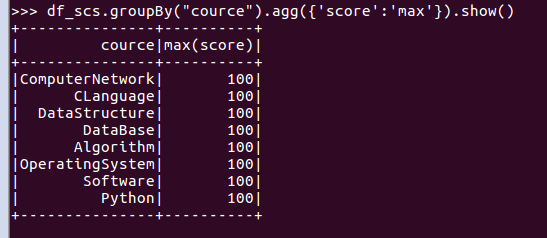

- 每门课的最高分:

-

df_scs.groupBy("cource").max('score').show()

df_scs.groupBy("cource").agg({'score':'max'}).show()

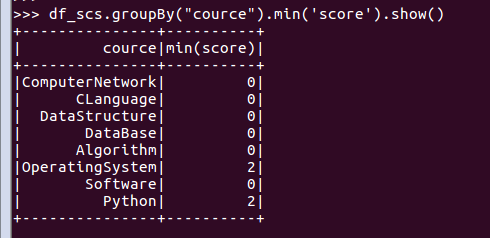

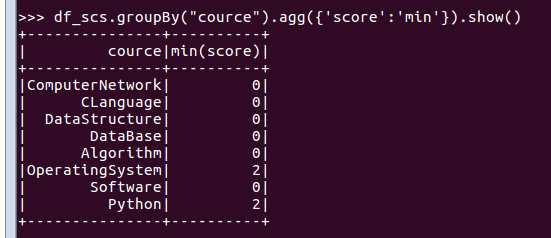

- 每门课的最低分:

-

df_scs.groupBy("cource").min('score').show()

df_scs.groupBy("cource").agg({'score':'min'}).show()

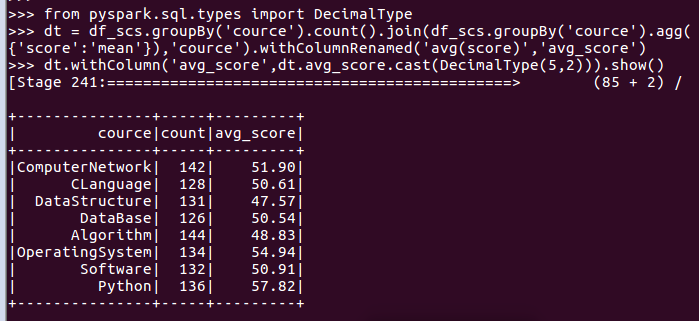

- 求每门课的选修人数及平均分,精确到2位小数。

- from pyspark.sql.types import DecimalType

-

dt = df_scs.groupBy('cource').count().join(df_scs.groupBy('cource').agg({'score':'mean'}),'cource').withColumnRenamed('avg(score)','avg_score')

- dt.withColumn('avg_score',dt.avg_score.cast(DecimalType(5,2))).show()

- 每门课的不及格人数,通过率

- df_scs.filter(df_scs.score<60).groupBy(df_scs.cource).count().show()

二、用SQL语句完成以下数据分析要求

必须把DataFrame注册为临时表

df_scs.createOrReplaceTempView('scs')

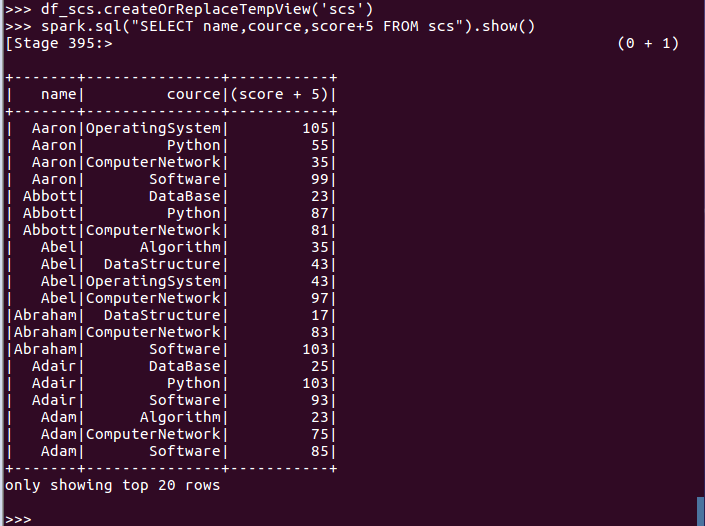

- 每个分数+5分。

- spark.sql("SELECT name,cource,score+5 FROM scs").show()

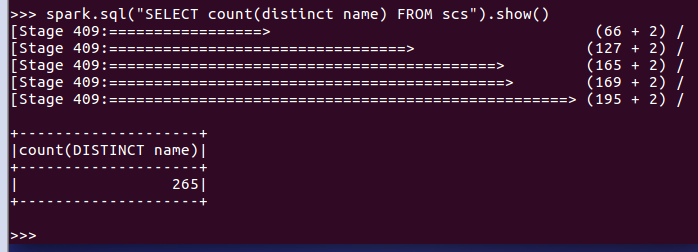

- 总共有多少学生?

-

spark.sql("SELECT count(distinct name) FROM scs").show()

-

-

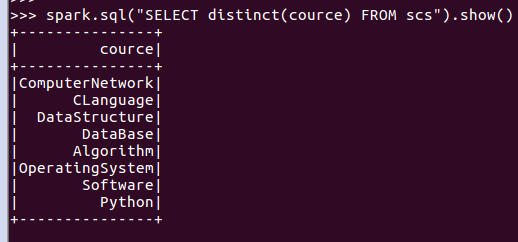

- 总共开设了哪些课程?

-

spark.sql("SELECT distinct(cource) FROM scs").show()

-

- 每个学生选修了多少门课?

-

- spark.sql("SELECT name,count(course) FROM scs group by name").show()

-

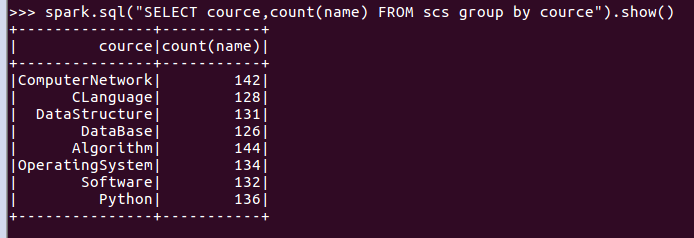

- 每门课程有多少个学生选?

- spark.sql("SELECT cource,count(name) FROM scs group by cource").show()

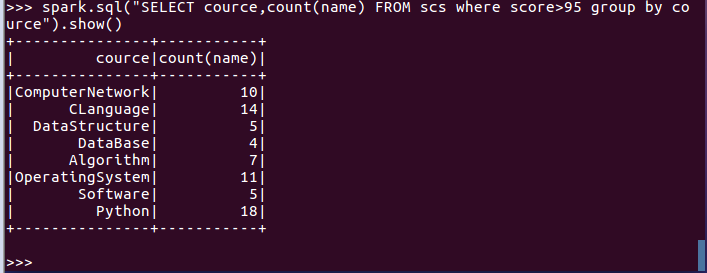

- 每门课程大于95分的学生人数?

-

spark.sql("SELECT cource,count(name) FROM scs where score>95 group by cource").show()

-

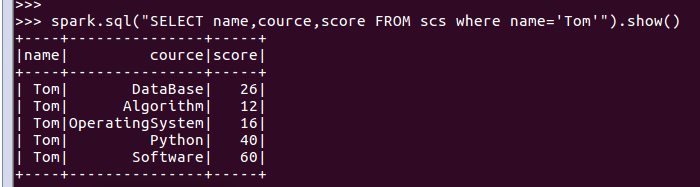

- Tom选修了几门课?每门课多少分

- spark.sql("SELECT name,cource,score FROM scs where name='Tom'").show()

-

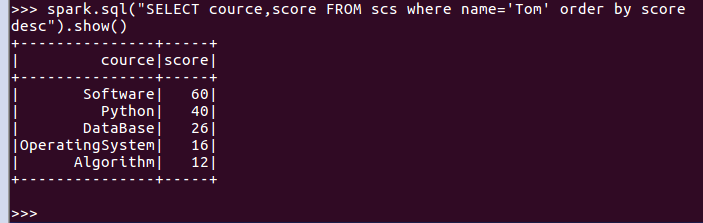

- Tom的成绩按分数大小排序。

- (从大到小 desc 从小到大 asc)

-

spark.sql("SELECT cource,score FROM scs where name='Tom' order by score desc").show()

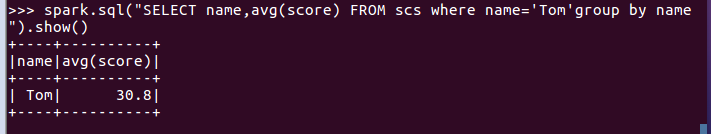

- Tom的平均分。

- spark.sql("SELECT name,avg(score) FROM scs where name='Tom'group by name").show()

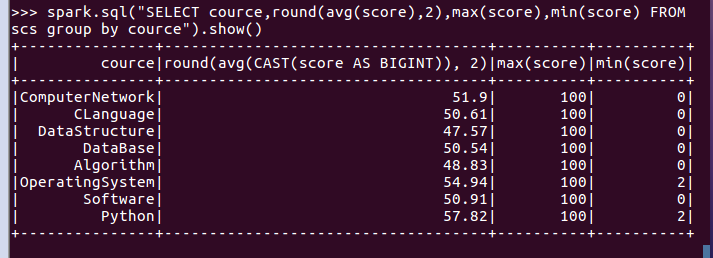

- 求每门课的平均分,最高分,最低分。

-

spark.sql("SELECT cource,round(avg(score),2),max(score),min(score) FROM scs group by cource").show()

-

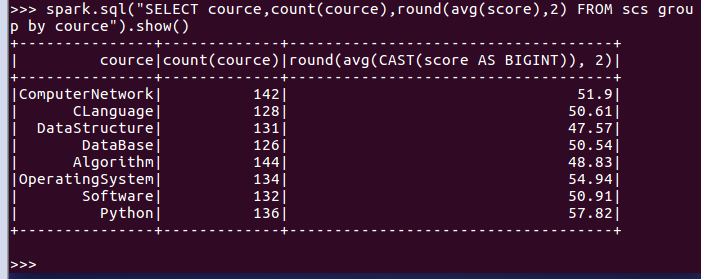

- 求每门课的选修人数及平均分,精确到2位小数。

-

spark.sql("SELECT cource,count(cource),round(avg(score),2) FROM scs group by cource").show()

-

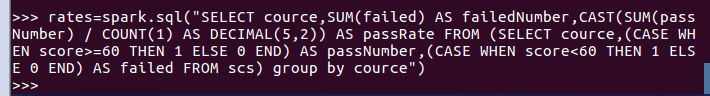

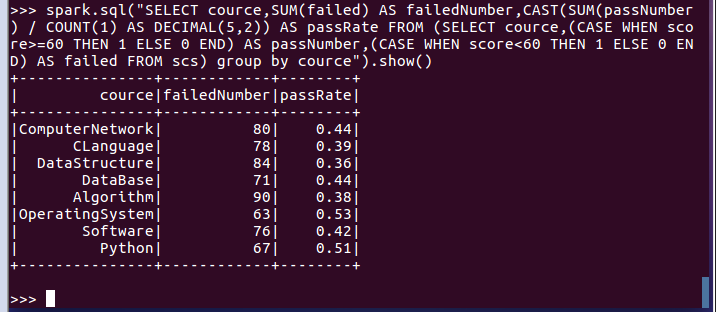

- 每门课的不及格人数,通过率(未成功)

-

spark.sql("SELECT cource,SUM(failed) AS failedNumber,CAST(SUM(passNumber) / COUNT(1) AS DECIMAL(5,2)) AS passRate FROM (SELECT cource,(CASE WHEN score>=60 THEN 1 ELSE 0 END) AS passNumber,(CASE WHEN score<60 THEN 1 ELSE 0 END) AS failed FROM scs) group by cource").show()

三、对比分别用RDD操作实现、用DataFrame操作实现和用SQL语句实现的异同。(比较两个以上问题)

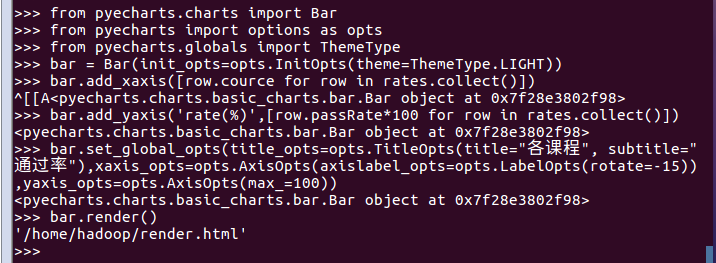

四、结果可视化

rates=spark.sql("SELECT cource,SUM(failed) AS failedNumber,CAST(SUM(passNumber) / COUNT(1) AS DECIMAL(5,2)) AS passRate FROM (SELECT cource,(CASE WHEN score>=60 THEN 1 ELSE 0 END) AS passNumber,(CASE WHEN score<60 THEN 1 ELSE 0 END) AS failed FROM scs) group by cource")

from pyecharts.charts import Bar

from pyecharts import options as opts

from pyecharts.globals import ThemeType

bar = Bar(init_opts=opts.InitOpts(theme=ThemeType.LIGHT))

bar.add_xaxis([row.cource for row in rates.collect()])

bar.add_yaxis('rate(%)',[row.passRate*100 for row in rates.collect()])

bar.set_global_opts(title_opts=opts.TitleOpts(title="各课程", subtitle="通过率"),xaxis_opts=opts.AxisOpts(axislabel_opts=opts.LabelOpts(rotate=-15)),yaxis_opts=opts.AxisOpts(max_=100))

bar.render()