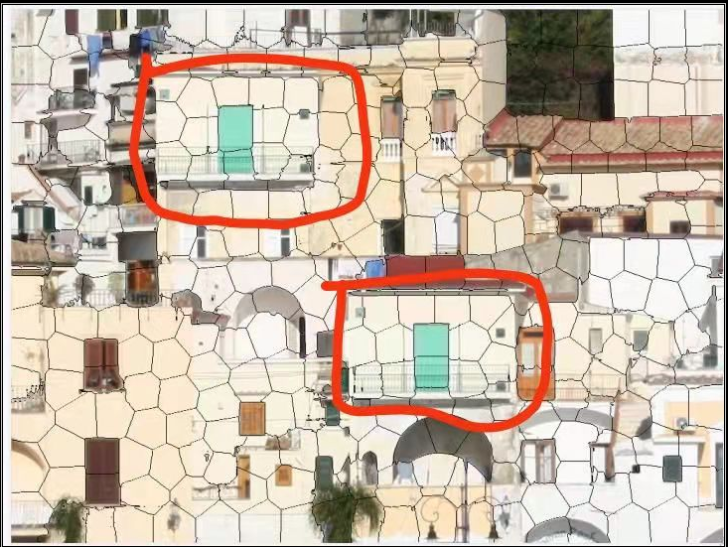

Computer Vision (7): Using the NMS Algorithm to Find Identical Target Objects in an Image

NMS algorithm (Non-Maximum Suppression):

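Non-maximum suppression (NMS), as the name suggests, suppresses elements that are not maxima and searches for local maxima. "Local" refers to a neighborhood, which has two adjustable parameters: the dimensionality of the neighborhood and its size. This post does not cover general-purpose NMS; it covers NMS as used in object detection to extract the highest-scoring windows.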
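To make the neighborhood idea concrete, here is a minimal one-dimensional sketch for illustration only. The function name `nms_1d` and its `radius` parameter (neighborhood size = 2 * radius + 1) are assumptions, not part of the original post. A value survives only if it is the maximum of its neighborhood; values tied for the maximum all survive.

```python
def nms_1d(values, radius):
    """Return the indices i where values[i] is the maximum of the
    neighborhood values[i - radius : i + radius + 1] (clipped at the ends).
    Hypothetical helper for illustration; ties for the maximum are all kept."""
    keep = []
    n = len(values)
    for i, v in enumerate(values):
        lo = max(0, i - radius)          # clip the window at the left edge
        hi = min(n, i + radius + 1)      # clip the window at the right edge
        if v == max(values[lo:hi]):      # v is a local maximum
            keep.append(i)
    return keep


signal = [1, 3, 2, 5, 4, 4, 0, 2]
print(nms_1d(signal, radius=1))  # → [1, 3, 5, 7]
print(nms_1d(signal, radius=2))  # → [3]
```

Enlarging the neighborhood (the second call) suppresses more candidates, which is exactly the "neighborhood size" parameter described above.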

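For example, in pedestrian detection, features are extracted from each sliding window and passed to a classifier, so every window receives a score. However, sliding windows produce many windows that contain, or largely overlap, other windows. NMS is then used to keep the highest-scoring window in each neighborhood (the one most likely to contain a pedestrian) and to suppress the lower-scoring windows around it.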
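How strongly two windows overlap is usually measured with Intersection over Union (IoU), the quantity used in the steps below. The sketch here assumes boxes given as `(x1, y1, x2, y2)` with `(x1, y1)` the top-left and `(x2, y2)` the bottom-right corner, in continuous coordinates (width = `x2 - x1`, no +1 pixel convention); the function name `iou` is illustrative.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2).
    Assumes continuous coordinates: width = x2 - x1, height = y2 - y1."""
    # corners of the intersection rectangle
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # clamp at 0 so disjoint boxes get zero intersection
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


a = (0, 0, 10, 10)
b = (5, 0, 15, 10)    # shifted half a box to the right
c = (20, 20, 30, 30)  # far away from a
print(iou(a, b))  # intersection 50, union 150
print(iou(a, c))  # → 0.0
```

Two windows shifted by half their width already drop to an IoU of about 0.33, so an IoU threshold around 0.5 only removes windows that overlap heavily.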

The implementation steps are as follows:

-

设定目标框的置信度阈值,常用的阈值是0.5左右

-

根据置信度降序排列候选框列表

-

选取置信度最高的框A添加到输出列表,并将其从候选框列表中删除

-

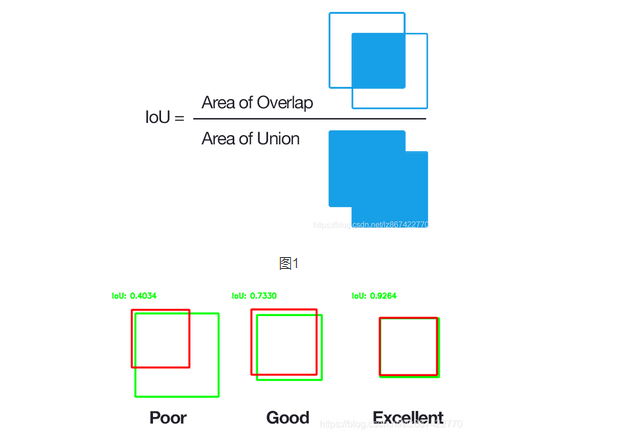

计算A与候选框列表中的所有框的IoU值,删除大于阈值的候选框

-

重复上述过程,直到候选框列表为空,返回输出列表
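The steps above can be sketched in NumPy as a greedy, score-based NMS. This is a minimal sketch, not the author's implementation (the code later in this post does not use scores): the name `greedy_nms`, the `(x1, y1, x2, y2)` box layout, and the defaults `score_thresh=0.5` (confidence filter) and `iou_thresh=0.5` (overlap cutoff) are assumptions for illustration.

```python
import numpy as np


def greedy_nms(boxes, scores, score_thresh=0.5, iou_thresh=0.5):
    """Greedy NMS sketch. boxes: (N, 4) as (x1, y1, x2, y2); scores: (N,).
    Returns the indices of the kept boxes, highest score first."""
    boxes = np.asarray(boxes, dtype=float).reshape(-1, 4)
    scores = np.asarray(scores, dtype=float)
    # Step 1: keep only boxes whose confidence reaches the threshold
    candidates = np.flatnonzero(scores >= score_thresh)
    # Step 2: sort the candidates by confidence, highest first
    candidates = candidates[np.argsort(-scores[candidates], kind="stable")]
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    keep = []
    while candidates.size > 0:
        # Step 3: move the highest-scoring box A to the output list
        a = candidates[0]
        keep.append(int(a))
        rest = candidates[1:]
        # Step 4: IoU between A and every remaining candidate
        ix1 = np.maximum(boxes[a, 0], boxes[rest, 0])
        iy1 = np.maximum(boxes[a, 1], boxes[rest, 1])
        ix2 = np.minimum(boxes[a, 2], boxes[rest, 2])
        iy2 = np.minimum(boxes[a, 3], boxes[rest, 3])
        inter = np.maximum(0, ix2 - ix1) * np.maximum(0, iy2 - iy1)
        iou = inter / (areas[a] + areas[rest] - inter)
        # ...and drop candidates whose IoU with A exceeds the threshold.
        # Step 5: loop again on what is left, until nothing remains.
        candidates = rest[iou <= iou_thresh]
    return keep


boxes = [[10, 10, 50, 50], [12, 12, 52, 52],
         [100, 100, 140, 140], [102, 98, 142, 138]]
scores = [0.9, 0.8, 0.7, 0.95]
print(greedy_nms(boxes, scores))  # → [3, 0]
```

Each cluster of heavily overlapping boxes collapses to its best-scoring member: box 3 suppresses box 2, and box 0 suppresses box 1.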

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt


def NMS(boxes, overlapThresh=0.4):
    # return an empty list if no boxes are given
    if len(boxes) == 0:
        return []
    x1 = boxes[:, 0]  # x coordinate of the top-left corner
    y1 = boxes[:, 1]  # y coordinate of the top-left corner
    x2 = boxes[:, 2]  # x coordinate of the bottom-right corner
    y2 = boxes[:, 3]  # y coordinate of the bottom-right corner
    # compute the area of every bounding box
    areas = (x2 - x1 + 1) * (y2 - y1 + 1)  # a box covers at least one pixel, hence the +1
    indices = np.arange(len(x1))
    # This variant does not sort by score: box i is discarded if it overlaps
    # any box that is still kept by more than overlapThresh.
    for i, box in enumerate(boxes):
        temp_indices = indices[indices != i]
        xx1 = np.maximum(box[0], boxes[temp_indices, 0])
        yy1 = np.maximum(box[1], boxes[temp_indices, 1])
        xx2 = np.minimum(box[2], boxes[temp_indices, 2])
        yy2 = np.minimum(box[3], boxes[temp_indices, 3])
        w = np.maximum(0, xx2 - xx1 + 1)
        h = np.maximum(0, yy2 - yy1 + 1)
        # ratio of the intersection to the other box's area
        overlap = (w * h) / areas[temp_indices]
        if np.any(overlap > overlapThresh):
            indices = indices[indices != i]
    return boxes[indices].astype(int)


def bounding_boxes(image, template, threshold):
    (tH, tW) = template.shape[:2]  # height and width of the template
    # plt.imread returns RGB images (3 channels assumed), so convert RGB -> grayscale
    imageGray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)
    templateGray = cv2.cvtColor(template, cv2.COLOR_RGB2GRAY)

    # template matching returns a map of normalized correlation scores
    result = cv2.matchTemplate(imageGray, templateGray, cv2.TM_CCOEFF_NORMED)
    # an object is detected wherever the correlation reaches the threshold
    (y1, x1) = np.where(result >= threshold)

    boxes = np.zeros((len(y1), 4))  # one row per detection
    x2 = x1 + tW  # bottom-right x from the template width
    y2 = y1 + tH  # bottom-right y from the template height
    # fill the bounding boxes array
    boxes[:, 0] = x1
    boxes[:, 1] = y1
    boxes[:, 2] = x2
    boxes[:, 3] = y2
    return boxes.astype(int)


def draw_bounding_boxes(image, boxes):
    # draw on a copy: keeps the input untouched (plt.imread may return a read-only array)
    image = image.copy()
    for (x1, y1, x2, y2) in boxes:
        cv2.rectangle(image, (int(x1), int(y1)), (int(x2), int(y2)), (255, 0, 0), 2)
    return image


if __name__ == "__main__":
    threshold = 0.5837  # correlation threshold for an object to be recognized
    # template_diamonds = plt.imread(r"cones/ace_diamonds_plant_template.jpg")
    template_diamonds = plt.imread(r"cones/22.jpg")
    ace_diamonds_rotated = plt.imread(r"cones/11.jpg")

    boxes_redundant = bounding_boxes(ace_diamonds_rotated, template_diamonds, threshold)  # all matches
    print(len(boxes_redundant))
    boxes = NMS(boxes_redundant)  # remove redundant bounding boxes
    print(boxes)

    overlapping_BB_image = draw_bounding_boxes(ace_diamonds_rotated, boxes_redundant)  # all redundant boxes
    segmented_image = draw_bounding_boxes(ace_diamonds_rotated, boxes)  # boxes kept after NMS
    plt.imshow(overlapping_BB_image)
    plt.show()
    plt.imshow(segmented_image)
    plt.show()
```
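As an alternative that skips boxes altogether, the neighborhood form of NMS from the beginning of this post can be applied directly to the correlation map returned by `cv2.matchTemplate`: keep a location only if it reaches the threshold and is the largest value in a window around it. This is a sketch under stated assumptions, not the author's method: it needs NumPy 1.20 or newer (for `sliding_window_view`), and the function name `local_maxima_2d` and the neighborhood radii `ry`, `rx` are hypothetical; a natural choice is roughly half the template height and width.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_maxima_2d(result, threshold, ry, rx):
    """Return (y, x) positions where result >= threshold and result equals the
    maximum of the (2*ry + 1) x (2*rx + 1) neighborhood centred on it.
    Hypothetical helper; tied maxima inside one window are all kept."""
    result = np.asarray(result, dtype=float)
    # pad with -inf so border pixels only compare against real values
    padded = np.pad(result, ((ry, ry), (rx, rx)), constant_values=-np.inf)
    windows = sliding_window_view(padded, (2 * ry + 1, 2 * rx + 1))
    local_max = windows.max(axis=(-2, -1))  # same shape as result
    mask = (result >= threshold) & (result == local_max)
    ys, xs = np.nonzero(mask)
    return list(zip(ys.tolist(), xs.tolist()))


# a toy "correlation map" with two clusters of high responses
r = np.zeros((5, 7))
r[1, 1], r[1, 2] = 0.9, 0.8
r[3, 5], r[4, 6] = 0.7, 0.6
print(local_maxima_2d(r, threshold=0.5, ry=1, rx=1))  # → [(1, 1), (3, 5)]
```

Each surviving peak `(y, x)` can then be turned into a box `(x, y, x + tW, y + tH)` with the same convention as `bounding_boxes`, so the thresholding and the suppression happen in one pass over the map.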