计算机视觉(6)双目相机基于SGM(semi-global matching)算法获取深度信息

摘要:

最近在做双目视差估计算法,在OpenCV里有一些算法,其中半全局块匹配(Semi-Global Block Matching,SGBM)算法具有视差效果好速度快的特点,因此常常被广泛应用。本文主要讨论的就是SGBM算法。

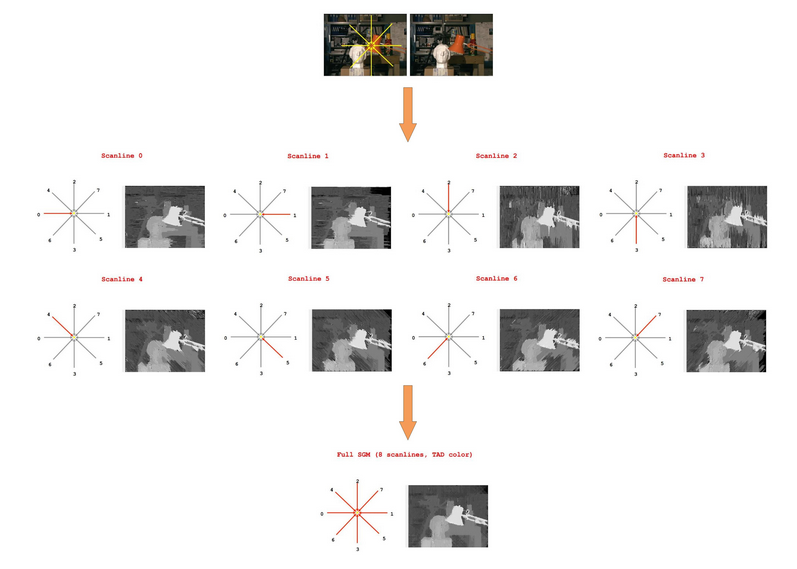

SGM聚合步骤示意图(视差图呈现):

1 import argparse 2 import sys 3 import time as t 4 5 import cv2 6 import numpy as np 7 8 9 class Direction: 10 def __init__(self, direction=(0, 0), name='invalid'): 11 """ 12 represent a cardinal direction in image coordinates (top left = (0, 0) and bottom right = (1, 1)). 13 :param direction: (x, y) for cardinal direction. 14 :param name: common name of said direction. 15 """ 16 self.direction = direction 17 self.name = name 18 19 20 # 8 defined directions for sgm 21 N = Direction(direction=(0, -1), name='north') 22 NE = Direction(direction=(1, -1), name='north-east') 23 E = Direction(direction=(1, 0), name='east') 24 SE = Direction(direction=(1, 1), name='south-east') 25 S = Direction(direction=(0, 1), name='south') 26 SW = Direction(direction=(-1, 1), name='south-west') 27 W = Direction(direction=(-1, 0), name='west') 28 NW = Direction(direction=(-1, -1), name='north-west') 29 30 31 class Paths: 32 def __init__(self): 33 """ 34 represent the relation between the directions. 35 """ 36 self.paths = [N, NE, E, SE, S, SW, W, NW] 37 self.size = len(self.paths) 38 self.effective_paths = [(E, W), (SE, NW), (S, N), (SW, NE)] 39 40 41 class Parameters: 42 def __init__(self, max_disparity=128, P1=10, P2=120, csize=(7, 7), bsize=(3, 3)): 43 """ 44 represent all parameters used in the sgm algorithm. 45 :param max_disparity: maximum distance between the same pixel in both images. 46 :param P1: penalty for disparity difference = 1 47 :param P2: penalty for disparity difference > 1 48 :param csize: size of the kernel for the census transform. 49 :param bsize: size of the kernel for blurring the images and median filtering. 50 """ 51 self.max_disparity = max_disparity 52 self.P1 = P1 53 self.P2 = P2 54 self.csize = csize 55 self.bsize = bsize 56 57 58 def load_images(left_name, right_name, parameters): 59 """ 60 read and blur stereo image pair. 61 :param left_name: name of the left image. 62 :param right_name: name of the right image. 63 :param parameters: structure containing parameters of the algorithm. 64 :return: blurred left and right images. 65 """ 66 left = cv2.imread(left_name, 0) 67 left = cv2.GaussianBlur(left, parameters.bsize, 0, 0) 68 right = cv2.imread(right_name, 0) 69 right = cv2.GaussianBlur(right, parameters.bsize, 0, 0) 70 return left, right 71 72 73 def get_indices(offset, dim, direction, height): 74 """ 75 for the diagonal directions (SE, SW, NW, NE), return the array of indices for the current slice. 76 :param offset: difference with the main diagonal of the cost volume. 77 :param dim: number of elements along the path. 78 :param direction: current aggregation direction. 79 :param height: H of the cost volume. 80 :return: arrays for the y (H dimension) and x (W dimension) indices. 81 """ 82 y_indices = [] 83 x_indices = [] 84 85 for i in range(0, dim): 86 if direction == SE.direction: 87 if offset < 0: 88 y_indices.append(-offset + i) 89 x_indices.append(0 + i) 90 else: 91 y_indices.append(0 + i) 92 x_indices.append(offset + i) 93 94 if direction == SW.direction: 95 if offset < 0: 96 y_indices.append(height + offset - i) 97 x_indices.append(0 + i) 98 else: 99 y_indices.append(height - i) 100 x_indices.append(offset + i) 101 102 return np.array(y_indices), np.array(x_indices) 103 104 105 def get_path_cost(slice, offset, parameters): 106 """ 107 part of the aggregation step, finds the minimum costs in a D x M slice (where M = the number of pixels in the 108 given direction) 109 :param slice: M x D array from the cost volume. 110 :param offset: ignore the pixels on the border. 111 :param parameters: structure containing parameters of the algorithm. 112 :return: M x D array of the minimum costs for a given slice in a given direction. 113 """ 114 other_dim = slice.shape[0] 115 disparity_dim = slice.shape[1] 116 117 disparities = [d for d in range(disparity_dim)] * disparity_dim 118 disparities = np.array(disparities).reshape(disparity_dim, disparity_dim) 119 120 penalties = np.zeros(shape=(disparity_dim, disparity_dim), dtype=slice.dtype) 121 penalties[np.abs(disparities - disparities.T) == 1] = parameters.P1 122 penalties[np.abs(disparities - disparities.T) > 1] = parameters.P2 123 124 minimum_cost_path = np.zeros(shape=(other_dim, disparity_dim), dtype=slice.dtype) 125 minimum_cost_path[offset - 1, :] = slice[offset - 1, :] 126 127 for i in range(offset, other_dim): 128 previous_cost = minimum_cost_path[i - 1, :] 129 current_cost = slice[i, :] 130 costs = np.repeat(previous_cost, repeats=disparity_dim, axis=0).reshape(disparity_dim, disparity_dim) 131 costs = np.amin(costs + penalties, axis=0) 132 minimum_cost_path[i, :] = current_cost + costs - np.amin(previous_cost) 133 return minimum_cost_path 134 135 136 def aggregate_costs(cost_volume, parameters, paths): 137 """ 138 sgm第二步,在N个可能方向匹配 139 second step of the sgm algorithm, aggregates matching costs for N possible directions (8 in this case). 140 :param cost_volume: array containing the matching costs. 141 :param parameters: structure containing parameters of the algorithm. 142 :param paths: structure containing all directions in which to aggregate costs. 143 :return: H x W x D x N array of matching cost for all defined directions. 144 """ 145 height = cost_volume.shape[0] 146 width = cost_volume.shape[1] 147 disparities = cost_volume.shape[2] 148 start = -(height - 1) 149 end = width - 1 150 151 aggregation_volume = np.zeros(shape=(height, width, disparities, paths.size), dtype=cost_volume.dtype) 152 153 path_id = 0 154 for path in paths.effective_paths: 155 print('\tProcessing paths {} and {}...'.format(path[0].name, path[1].name), end='') 156 sys.stdout.flush() 157 dawn = t.time() 158 159 main_aggregation = np.zeros(shape=(height, width, disparities), dtype=cost_volume.dtype) 160 opposite_aggregation = np.copy(main_aggregation) 161 162 main = path[0] 163 if main.direction == S.direction: 164 for x in range(0, width): 165 south = cost_volume[0:height, x, :] 166 north = np.flip(south, axis=0) 167 main_aggregation[:, x, :] = get_path_cost(south, 1, parameters) 168 opposite_aggregation[:, x, :] = np.flip(get_path_cost(north, 1, parameters), axis=0) 169 170 if main.direction == E.direction: 171 for y in range(0, height): 172 east = cost_volume[y, 0:width, :] 173 west = np.flip(east, axis=0) 174 main_aggregation[y, :, :] = get_path_cost(east, 1, parameters) 175 opposite_aggregation[y, :, :] = np.flip(get_path_cost(west, 1, parameters), axis=0) 176 177 if main.direction == SE.direction: 178 for offset in range(start, end): 179 south_east = cost_volume.diagonal(offset=offset).T 180 north_west = np.flip(south_east, axis=0) 181 dim = south_east.shape[0] 182 y_se_idx, x_se_idx = get_indices(offset, dim, SE.direction, None) 183 y_nw_idx = np.flip(y_se_idx, axis=0) 184 x_nw_idx = np.flip(x_se_idx, axis=0) 185 main_aggregation[y_se_idx, x_se_idx, :] = get_path_cost(south_east, 1, parameters) 186 opposite_aggregation[y_nw_idx, x_nw_idx, :] = get_path_cost(north_west, 1, parameters) 187 188 if main.direction == SW.direction: 189 for offset in range(start, end): 190 south_west = np.flipud(cost_volume).diagonal(offset=offset).T 191 north_east = np.flip(south_west, axis=0) 192 dim = south_west.shape[0] 193 y_sw_idx, x_sw_idx = get_indices(offset, dim, SW.direction, height - 1) 194 y_ne_idx = np.flip(y_sw_idx, axis=0) 195 x_ne_idx = np.flip(x_sw_idx, axis=0) 196 main_aggregation[y_sw_idx, x_sw_idx, :] = get_path_cost(south_west, 1, parameters) 197 opposite_aggregation[y_ne_idx, x_ne_idx, :] = get_path_cost(north_east, 1, parameters) 198 199 aggregation_volume[:, :, :, path_id] = main_aggregation 200 aggregation_volume[:, :, :, path_id + 1] = opposite_aggregation 201 path_id = path_id + 2 202 203 dusk = t.time() 204 print('\t(done in {:.2f}s)'.format(dusk - dawn)) 205 206 return aggregation_volume 207 208 209 def compute_costs(left, right, parameters, save_images): 210 """ 211 SGM第一步,基于人口普查算法和汉明距离 212 first step of the sgm algorithm, matching cost based on census transform and hamming distance. 213 :param left: left image. 214 :param right: right image. 215 :param parameters: structure containing parameters of the algorithm. 216 :param save_images: whether to save census images or not. 217 :return: H x W x D array with the matching costs. 218 """ 219 assert left.shape[0] == right.shape[0] and left.shape[1] == right.shape[1], 'left & right must have the same shape.' 220 assert parameters.max_disparity > 0, 'maximum disparity must be greater than 0.' 221 222 height = left.shape[0] 223 width = left.shape[1] 224 cheight = parameters.csize[0] 225 cwidth = parameters.csize[1] 226 y_offset = int(cheight / 2) 227 x_offset = int(cwidth / 2) 228 disparity = parameters.max_disparity 229 230 left_img_census = np.zeros(shape=(height, width), dtype=np.uint8) 231 right_img_census = np.zeros(shape=(height, width), dtype=np.uint8) 232 left_census_values = np.zeros(shape=(height, width), dtype=np.uint64) 233 right_census_values = np.zeros(shape=(height, width), dtype=np.uint64) 234 235 print('\tComputing left and right census...', end='') 236 sys.stdout.flush() 237 dawn = t.time() 238 # pixels on the border will have no census values 239 for y in range(y_offset, height - y_offset): 240 for x in range(x_offset, width - x_offset): 241 left_census = np.int64(0) 242 center_pixel = left[y, x] 243 reference = np.full(shape=(cheight, cwidth), fill_value=center_pixel, dtype=np.int64) 244 image = left[(y - y_offset):(y + y_offset + 1), (x - x_offset):(x + x_offset + 1)] 245 comparison = image - reference 246 for j in range(comparison.shape[0]): 247 for i in range(comparison.shape[1]): 248 if (i, j) != (y_offset, x_offset): 249 left_census = left_census << 1 250 if comparison[j, i] < 0: 251 bit = 1 252 else: 253 bit = 0 254 left_census = left_census | bit 255 left_img_census[y, x] = np.uint8(left_census) 256 left_census_values[y, x] = left_census 257 258 right_census = np.int64(0) 259 center_pixel = right[y, x] 260 reference = np.full(shape=(cheight, cwidth), fill_value=center_pixel, dtype=np.int64) 261 image = right[(y - y_offset):(y + y_offset + 1), (x - x_offset):(x + x_offset + 1)] 262 comparison = image - reference 263 for j in range(comparison.shape[0]): 264 for i in range(comparison.shape[1]): 265 if (i, j) != (y_offset, x_offset): 266 right_census = right_census << 1 267 if comparison[j, i] < 0: 268 bit = 1 269 else: 270 bit = 0 271 right_census = right_census | bit 272 right_img_census[y, x] = np.uint8(right_census) 273 right_census_values[y, x] = right_census 274 275 dusk = t.time() 276 print('\t(done in {:.2f}s)'.format(dusk - dawn)) 277 278 if save_images: 279 cv2.imwrite('left_census.png', left_img_census) 280 cv2.imwrite('right_census.png', right_img_census) 281 282 print('\tComputing cost volumes...', end='') 283 sys.stdout.flush() 284 dawn = t.time() 285 left_cost_volume = np.zeros(shape=(height, width, disparity), dtype=np.uint32) 286 right_cost_volume = np.zeros(shape=(height, width, disparity), dtype=np.uint32) 287 lcensus = np.zeros(shape=(height, width), dtype=np.int64) 288 rcensus = np.zeros(shape=(height, width), dtype=np.int64) 289 for d in range(0, disparity): 290 rcensus[:, (x_offset + d):(width - x_offset)] = right_census_values[:, x_offset:(width - d - x_offset)] 291 left_xor = np.int64(np.bitwise_xor(np.int64(left_census_values), rcensus)) 292 left_distance = np.zeros(shape=(height, width), dtype=np.uint32) 293 while not np.all(left_xor == 0): 294 tmp = left_xor - 1 295 mask = left_xor != 0 296 left_xor[mask] = np.bitwise_and(left_xor[mask], tmp[mask]) 297 left_distance[mask] = left_distance[mask] + 1 298 left_cost_volume[:, :, d] = left_distance 299 300 lcensus[:, x_offset:(width - d - x_offset)] = left_census_values[:, (x_offset + d):(width - x_offset)] 301 right_xor = np.int64(np.bitwise_xor(np.int64(right_census_values), lcensus)) 302 right_distance = np.zeros(shape=(height, width), dtype=np.uint32) 303 while not np.all(right_xor == 0): 304 tmp = right_xor - 1 305 mask = right_xor != 0 306 right_xor[mask] = np.bitwise_and(right_xor[mask], tmp[mask]) 307 right_distance[mask] = right_distance[mask] + 1 308 right_cost_volume[:, :, d] = right_distance 309 310 dusk = t.time() 311 print('\t(done in {:.2f}s)'.format(dusk - dawn)) 312 313 return left_cost_volume, right_cost_volume 314 315 316 def select_disparity(aggregation_volume): 317 """ 318 最后一步 319 last step of the sgm algorithm, corresponding to equation 14 followed by winner-takes-all approach. 320 :param aggregation_volume: H x W x D x N array of matching cost for all defined directions. 321 :return: disparity image. 322 """ 323 volume = np.sum(aggregation_volume, axis=3) 324 disparity_map = np.argmin(volume, axis=2) 325 return disparity_map 326 327 328 def normalize(volume, parameters): 329 """ 330 transforms values from the range (0, 64) to (0, 255). 331 :param volume: n dimension array to normalize. 332 :param parameters: structure containing parameters of the algorithm. 333 :return: normalized array. 334 """ 335 return 255.0 * volume / parameters.max_disparity 336 #return 255.0 * volume / 128 337 338 339 340 def get_recall(disparity, gt, args): 341 """ 342 computes the recall of the disparity map. 343 :param disparity: disparity image. 344 :param gt: path to ground-truth image. 345 :param args: program arguments. 346 :return: rate of correct predictions. 347 """ 348 gt = np.float32(cv2.imread(gt, cv2.IMREAD_GRAYSCALE)) 349 gt = np.int16(gt / 255.0 * float(args.disp)) 350 disparity = np.int16(np.float32(disparity) / 255.0 * float(args.disp)) 351 correct = np.count_nonzero(np.abs(disparity - gt) <= 3) 352 return float(correct) / gt.size 353 354 355 def sgm(): 356 """ 357 main function applying the semi-global matching algorithm. 358 :return: void. 359 """ 360 parser = argparse.ArgumentParser() 361 parser.add_argument('--left', default='cones/im2.png', help='name (path) to the left image') 362 parser.add_argument('--right', default='cones/im6.png', help='name (path) to the right image') 363 parser.add_argument('--left_gt', default='cones/disp2.png', help='name (path) to the left ground-truth image') 364 parser.add_argument('--right_gt', default='cones/disp6.png', help='name (path) to the right ground-truth image') 365 parser.add_argument('--output', default='disparity_map.png', help='name of the output image') 366 parser.add_argument('--disp', default=33, type=int, help='maximum disparity for the stereo pair') 367 parser.add_argument('--images', default=True, type=bool, help='save intermediate representations') 368 parser.add_argument('--eval', default=True, type=bool, help='evaluate disparity map with 3 pixel error') 369 args = parser.parse_args() 370 371 left_name = args.left 372 right_name = args.right 373 left_gt_name = args.left_gt 374 right_gt_name = args.right_gt 375 output_name = args.output 376 disparity = args.disp 377 save_images = args.images 378 evaluation = args.eval 379 380 dawn = t.time() 381 382 parameters = Parameters(max_disparity=disparity, P1=10, P2=120, csize=(7, 7), bsize=(3, 3)) 383 paths = Paths() 384 385 print('\nLoading images...') 386 left, right = load_images(left_name, right_name, parameters) 387 388 #第一步 389 print('\nStarting cost computation...') 390 left_cost_volume, right_cost_volume = compute_costs(left, right, parameters, save_images) 391 if save_images: 392 left_disparity_map = np.uint8(normalize(np.argmin(left_cost_volume, axis=2), parameters)) 393 cv2.imwrite('disp_map_left_cost_volume.png', left_disparity_map) 394 right_disparity_map = np.uint8(normalize(np.argmin(right_cost_volume, axis=2), parameters)) 395 cv2.imwrite('disp_map_right_cost_volume.png', right_disparity_map) 396 #第二步 397 print('\nStarting left aggregation computation...') 398 left_aggregation_volume = aggregate_costs(left_cost_volume, parameters, paths) 399 print('\nStarting right aggregation computation...') 400 right_aggregation_volume = aggregate_costs(right_cost_volume, parameters, paths) 401 402 print('\nSelecting best disparities...') 403 left_disparity_map = np.uint8(normalize(select_disparity(left_aggregation_volume), parameters)) 404 right_disparity_map = np.uint8(normalize(select_disparity(right_aggregation_volume), parameters)) 405 if save_images: 406 cv2.imwrite('left_disp_map_no_post_processing.png', left_disparity_map) 407 cv2.imwrite('right_disp_map_no_post_processing.png', right_disparity_map) 408 409 print('\nApplying median filter...') 410 #中值滤波 411 left_disparity_map = cv2.medianBlur(left_disparity_map, parameters.bsize[0]) 412 right_disparity_map = cv2.medianBlur(right_disparity_map, parameters.bsize[0]) 413 # right_disparity_map = cv2.GaussianBlur(right_disparity_map,(5,5), 15) 414 # left_disparity_map = cv2.GaussianBlur(left_disparity_map,(5,5), 15) 415 cv2.imwrite(f'left_{output_name}', left_disparity_map) 416 cv2.imwrite(f'right_{output_name}', right_disparity_map) 417 418 if evaluation: 419 print('\nEvaluating left disparity map...') 420 recall = get_recall(left_disparity_map, left_gt_name, args) 421 print('\tRecall = {:.2f}%'.format(recall * 100.0)) 422 print('\nEvaluating right disparity map...') 423 recall = get_recall(right_disparity_map, right_gt_name, args) 424 print('\tRecall = {:.2f}%'.format(recall * 100.0)) 425 426 dusk = t.time() 427 print('\nFin.') 428 print('\nTotal execution time = {:.2f}s'.format(dusk - dawn)) 429 430 431 if __name__ == '__main__': 432 sgm()

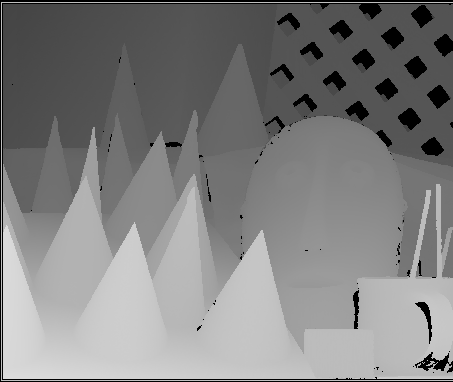

效果图:

分类:

计算机视觉

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· 开发者必知的日志记录最佳实践

· SQL Server 2025 AI相关能力初探

· Linux系列:如何用 C#调用 C方法造成内存泄露

· 震惊!C++程序真的从main开始吗?99%的程序员都答错了

· 别再用vector<bool>了!Google高级工程师:这可能是STL最大的设计失误

· 【硬核科普】Trae如何「偷看」你的代码?零基础破解AI编程运行原理

· 单元测试从入门到精通

· 上周热点回顾(3.3-3.9)