Building a hadoop2.x Cluster with namenode HA via Zookeeper (Hands-On Edition)

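I recently found time to write up the complete procedure for implementing namenode HA on hadoop2.x with Zookeeper. The steps are as follows:

The experiment uses 4 nodes, 192.168.1.201 through 192.168.1.204.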

1、Configure a static IP. Node 201 is shown below as the example; the other nodes use the same settings apart from the IP address (HWADDR and UUID are machine-specific as well):

    vi /etc/sysconfig/network-scripts/ifcfg-eth0

    DEVICE=eth0
    HWADDR=00:0C:29:B4:3F:A2
    TYPE=Ethernet
    UUID=16bdaf21-574b-4e55-87fd-12797bc7da5c
    ONBOOT=yes
    NM_CONTROLLED=yes
    BOOTPROTO=static
    IPADDR=192.168.1.201
    NETMASK=255.255.255.0
    GATEWAY=192.168.1.1
    DNS1=192.168.1.1
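Since only the address changes from node to node, the file body can be generated instead of edited by hand. The following is a minimal sketch, not a finished tool: `render_ifcfg` is a hypothetical helper name, and the machine-specific HWADDR and UUID lines are deliberately left out, so take those from each machine's existing file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an ifcfg-eth0 body for one node.
# $1 is the last octet of the node's address (201..204 in this article).
render_ifcfg() {
  local octet="$1"
  cat <<EOF
DEVICE=eth0
TYPE=Ethernet
ONBOOT=yes
NM_CONTROLLED=yes
BOOTPROTO=static
IPADDR=192.168.1.${octet}
NETMASK=255.255.255.0
GATEWAY=192.168.1.1
DNS1=192.168.1.1
EOF
}

# Print the body for node 202; review it before copying it into place.
render_ifcfg 202
```

The output goes to stdout on purpose, so nothing under /etc is touched until you have checked it.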

2、Edit the hosts file; the content is identical on every node (the machine needs a reboot afterwards):

    vi /etc/hosts

    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.1.201 hadoop-cs198201
    192.168.1.202 hadoop-cs198202
    192.168.1.203 hadoop-cs198203
    192.168.1.204 hadoop-cs198204
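The four cluster entries follow one pattern (address 192.168.1.N maps to hadoop-cs198N), so they can be produced with a loop. This is only a sketch; `render_hosts` is a hypothetical name, and the node list is hard-coded to the four nodes used here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the cluster's /etc/hosts entries, one per node.
render_hosts() {
  local octet
  for octet in 201 202 203 204; do
    printf '192.168.1.%s hadoop-cs198%s\n' "$octet" "$octet"
  done
}

# Print the entries; append them to /etc/hosts on each node after review.
render_hosts
```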

3、If the nodes need Internet access, set a nameserver; the content is identical on every node:

    vi /etc/resolv.conf

    # Generated by NetworkManager
    # No nameservers found; try putting DNS servers into your
    # ifcfg files in /etc/sysconfig/network-scripts like so:
    #
    # DNS1=xxx.xxx.xxx.xxx
    # DNS2=xxx.xxx.xxx.xxx
    # DOMAIN=lab.foo.com bar.foo.com
    nameserver 192.168.1.1

4、Remove the JDK that ships with centos by running the following on every node:

(1) Run rpm -qa|grep jdk, which prints:

    java-1.7.0-openjdk-1.7.0.45-2.4.3.3.el6.x86_64
    java-1.6.0-openjdk-1.6.0.0-1.66.1.13.0.el6.x86_64

(2) Remove the packages:

    yum -y remove java java-1.7.0-openjdk-1.7.0.45-2.4.3.3.el6.x86_64
    yum -y remove java java-1.6.0-openjdk-1.6.0.0-1.66.1.13.0.el6.x86_64
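The exact package versions will differ from machine to machine, so it can help to select the OpenJDK packages by pattern rather than typing the names. A small sketch under that assumption: `list_openjdk_packages` is a hypothetical filter, and the demo input below reuses the two names from this step plus one made-up unrelated package name.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read package names on stdin, print only OpenJDK ones.
list_openjdk_packages() {
  # grep exits 1 when nothing matches; "|| true" keeps that from
  # aborting a script that runs under "set -e".
  grep -E '^java-[0-9.]+-openjdk-' || true
}

# Demo input: the two packages from the article and one illustrative other name.
printf '%s\n' \
  'java-1.7.0-openjdk-1.7.0.45-2.4.3.3.el6.x86_64' \
  'tzdata-java-2013g-1.el6.noarch' \
  'java-1.6.0-openjdk-1.6.0.0-1.66.1.13.0.el6.x86_64' |
  list_openjdk_packages
```

On a real node the input would come from rpm -qa, for example `rpm -qa | list_openjdk_packages | xargs -r yum -y remove` (the -r option of GNU xargs skips the command when the list is empty).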

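5、Install the JDK by running the following on every node (the installation file has to be uploaded with the rz command; if rz cannot be found, first install it with yum -y install lrzsz):

(1) First extract the JDK tarball with tar -zxvf jdk-7u79-linux-x64.tar.gz so that it ends up in the installation directory, e.g. /export/servers/jdk1.7.0_79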

(2) Configure the environment variables in /etc/profile (note: the full set must be defined, otherwise some hadoop commands will fail later):

    export JAVA_HOME=/export/servers/jdk1.7.0_79
    export JAVA_BIN=/export/servers/jdk1.7.0_79/bin
    export HADOOP_HOME=/export/servers/hadoop-2.2.0
    export HADOOP_COMMON_HOME=$HADOOP_HOME
    export HADOOP_HDFS_HOME=$HADOOP_HOME
    export HADOOP_MAPRED_HOME=$HADOOP_HOME
    export HADOOP_YARN_HOME=$HADOOP_HOME
    export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
    export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
    export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"
    export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/lib:$PATH

(3) Run source /etc/profile to make the configuration take effect

(4) Run java -version to verify that the JDK was installed successfully
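Because a missing variable only shows up later as a confusing hadoop error, a quick check right after sourcing the profile can save time. The sketch below is an assumption on my part, not part of the original procedure: `check_hadoop_env` is a hypothetical helper, and the list of names is simply the set of directory variables exported in step (2).

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the expected variables are unset or empty.
# Returns 0 when all are set, 1 otherwise.
check_hadoop_env() {
  local name missing=0
  for name in JAVA_HOME HADOOP_HOME HADOOP_COMMON_HOME HADOOP_HDFS_HOME \
              HADOOP_MAPRED_HOME HADOOP_YARN_HOME HADOOP_CONF_DIR; do
    # ${!name:-} is bash indirect expansion: the value of the variable
    # whose name is stored in $name, or empty if it is unset.
    if [ -z "${!name:-}" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

check_hadoop_env || echo "fix /etc/profile, then run: source /etc/profile"
```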

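6、Configure SSH connections:

(1) First generate the key files on the master node; run the following command and press Enter at every prompt:

    ssh-keygen -t rsa

(2) Copy the public key generated on the master node to a worker node with the following command:

    ssh-copy-id -i .ssh/id_rsa.pub hadoop@hadoop-cs198202

(3) Repeat this for every remaining worker node

(4) Run ssh followed by a worker's hostname to test whether you can log in directly; if you can, the configuration works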
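To avoid typing the copy command once per node, the commands can be generated in a loop. This sketch only prints them (a dry run); `print_ssh_copy_commands` is a hypothetical name, and the worker list assumes 201 is the master node, which the text implies but does not state.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one ssh-copy-id command per worker node.
# Nothing is executed; paste the lines you want to run.
print_ssh_copy_commands() {
  local host
  for host in hadoop-cs198202 hadoop-cs198203 hadoop-cs198204; do
    echo "ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@${host}"
  done
}

print_ssh_copy_commands
```

Since the hdfs-site.xml in this article uses sshfence, the second namenode most likely needs the same passwordless access toward the first one, so it is worth repeating the key setup from 202 as well.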

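7、Install zookeeper and hadoop:

(1) Install zookeeper. There are plenty of tutorials and they are mostly correct. The one problem you may hit at startup is a connection timeout, which is caused by the firewall still being on; turn the firewall off and reboot the machine.

(2) Download the hadoop2.2 tarball from https://archive.apache.org/dist/hadoop/common/hadoop-2.2.0/ and extract it into the installation directory, e.g. /export/servers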
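The zookeeper installation is left to other tutorials here, but for orientation this is roughly what a three-node zoo.cfg could look like for the hosts used in this article. Treat it as a sketch under assumptions: `render_zoo_cfg` is a hypothetical helper, the dataDir path is made up for illustration, the timing values are the ones from zookeeper's sample config, and 2888/3888 are the conventional peer and election ports. Only clientPort 2181 is taken from the article's own configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a zoo.cfg for the three zookeeper nodes.
render_zoo_cfg() {
  cat <<'EOF'
tickTime=2000
initLimit=10
syncLimit=5
# dataDir is an assumed path; pick any directory the zookeeper user can write to.
dataDir=/export/data/data0/zookeeper
clientPort=2181
server.1=hadoop-cs198201:2888:3888
server.2=hadoop-cs198202:2888:3888
server.3=hadoop-cs198203:2888:3888
EOF
}

render_zoo_cfg
```

Each node additionally needs a file named myid inside dataDir that contains just its own server number (1, 2 or 3).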

8、Next comes the main work, editing the configuration files:

(1)core-site.xml:

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->
    <!-- Put site-specific property overrides in this file. -->
    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://ns1</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/export/data/data0/hadoop_tmp</value>
      </property>
      <property>
        <name>ha.zookeeper.quorum</name>
        <value>hadoop-cs198201:2181,hadoop-cs198202:2181,hadoop-cs198203:2181</value>
      </property>
      <property>
        <name>ha.zookeeper.parent-znode</name>
        <value>/hadoop-ha</value>
      </property>
      <property>
        <name>ha.zookeeper.session-timeout.ms</name>
        <value>5000</value>
      </property>
      <property>
        <name>io.file.buffer.size</name>
        <value>131072</value>
      </property>
      <property>
        <name>io.native.lib.available</name>
        <value>true</value>
        <description>hadoop.native.lib is deprecated</description>
      </property>
      <property>
        <name>hadoop.http.staticuser.user</name>
        <value>hadoop</value>
      </property>
      <property>
        <name>hadoop.security.authorization</name>
        <value>true</value>
      </property>
      <property>
        <name>hadoop.security.authentication</name>
        <value>simple</value>
      </property>
      <property>
        <name>fs.trash.interval</name>
        <value>1440</value>
      </property>
      <property>
        <name>ha.failover-controller.graceful-fence.rpc-timeout.ms</name>
        <value>160000</value>
      </property>
      <property>
        <name>ha.failover-controller.new-active.rpc-timeout.ms</name>
        <value>360000</value>
      </property>
      <!-- OOZIE -->
      <property>
        <name>hadoop.proxyuser.oozie.hosts</name>
        <value>*</value>
      </property>
      <property>
        <name>hadoop.proxyuser.oozie.groups</name>
        <value>*</value>
      </property>
      <!-- hive -->
      <property>
        <name>hadoop.proxyuser.hive.hosts</name>
        <value>*</value>
      </property>
      <property>
        <name>hadoop.proxyuser.hive.groups</name>
        <value>*</value>
      </property>
    </configuration>
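A typo in ha.zookeeper.quorum is easy to make and hard to spot, so a small sanity check on the value can help. This is a sketch, with `count_quorum_hosts` as a hypothetical helper; it only counts the comma-separated entries and reports whether the count is odd, which is the usual recommendation for a zookeeper ensemble.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the host:port entries in a quorum string.
count_quorum_hosts() {
  # Split on commas, then count the non-empty lines.
  printf '%s\n' "$1" | tr ',' '\n' | grep -c .
}

# The value used in core-site.xml in this article.
quorum='hadoop-cs198201:2181,hadoop-cs198202:2181,hadoop-cs198203:2181'
n="$(count_quorum_hosts "$quorum")"
if [ $((n % 2)) -eq 1 ]; then
  echo "quorum size $n is odd"
else
  echo "quorum size $n is even; an odd count is the usual recommendation"
fi
```

On a configured node, `hdfs getconf -confKey ha.zookeeper.quorum` prints the value hadoop actually sees, which can be fed into the same check.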

(2)hdfs-site.xml:

<configuration>

<property>

<name>dfs.nameservices</name>

<value>ns1</value>

</property>

<property>

<name>dfs.ha.namenodes.ns1</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1.nn1</name>

<value>hadoop-cs198201:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1.nn2</name>

<value>hadoop-cs198202:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.ns1.nn1</name>

<value>hadoop-cs198201:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.ns1.nn2</name>

<value>hadoop-cs198202:50070</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address.ns1.nn1</name>

<value>hadoop-cs198201:53310</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address.ns1.nn2</name>

<value>hadoop-cs198202:53310</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop-cs198201:8485;hadoop-cs198202:8485;hadoop-cs198203:8485/ns1</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/export/data/data0/journal/data</value>

</property>

<property>

<name>dfs.qjournal.write-txns.timeout.ms</name>

<value>120000</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.ns1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/export/data/data0/nn</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/export/data/data0/dfs</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address.ns1.nn1</name>

<value>hadoop-cs198201:8021</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address.ns1.nn2</name>

<value>hadoop-cs198202:8021</value>

</property>

<property>

<name>dfs.client.socket-timeout</name>

<value>180000</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>
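The dfs.namenode.shared.edits.dir value packs the journalnode list and the journal ID into one URI, and edits are only durable once a majority of the journalnodes has acknowledged them. The sketch below pulls the host list out of such a URI and computes that majority; `journal_hosts` and `journal_majority` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the host:port entries of a qjournal:// URI, one per line.
journal_hosts() {
  local rest="${1#qjournal://}"  # drop the scheme
  rest="${rest%%/*}"             # drop the trailing /journalId
  printf '%s\n' "$rest" | tr ';' '\n'
}

# Hypothetical helper: smallest number of journalnodes that forms a majority of $1.
journal_majority() {
  echo $(( $1 / 2 + 1 ))
}

# The value used in hdfs-site.xml in this article.
uri='qjournal://hadoop-cs198201:8485;hadoop-cs198202:8485;hadoop-cs198203:8485/ns1'
journal_hosts "$uri"
echo "majority needed: $(journal_majority "$(journal_hosts "$uri" | wc -l)")"
```

With three journalnodes the majority is two, so the cluster keeps accepting edits with one journalnode down.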

(3)mapred-site.xml:

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>mapreduce.task.io.sort.factor</name>
        <value>10</value>
      </property>
      <property>
        <name>mapreduce.reduce.shuffle.parallelcopies</name>
        <value>5</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop-cs198203:10020</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop-cs198203:19888</value>
      </property>
    </configuration>

(4)yarn-site.xml:

    <?xml version="1.0"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->
    <configuration>
      <property>
        <name>yarn.acl.enable</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.admin.acl</name>
        <value>hadoop</value>
      </property>
      <property>
        <name>yarn.resourcemanager.ha.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.resourcemanager.address</name>
        <value>hadoop-cs198201:8032</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>hadoop-cs198201:8030</value>
      </property>
      <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>hadoop-cs198201:8031</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>hadoop-cs198201:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>hadoop-cs198201:8088</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
      </property>
      <property>
        <name>yarn.scheduler.fair.allocation.file</name>
        <value>/export/servers/hadoop-2.2.0/etc/hadoop/fair-scheduler.xml</value>
      </property>
      <property>
        <name>yarn.scheduler.fair.assignmultiple</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.scheduler.fair.allow-undeclared-pools</name>
        <value>false</value>
      </property>
      <property>
        <name>yarn.scheduler.fair.locality.threshold.node</name>
        <value>0.1</value>
      </property>
      <property>
        <name>yarn.scheduler.fair.locality.threshold.rack</name>
        <value>0.1</value>
      </property>
      <property>
        <name>yarn.nodemanager.pmem-check-enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.nodemanager.vmem-check-enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.nodemanager.local-dirs</name>
        <value>/export/data/data0/yarn/local,/export/data/data1/yarn/local,/export/data/data2/yarn/local</value>
      </property>
      <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.nodemanager.log-dirs</name>
        <value>/export/data/data0/yarn/logs</value>
      </property>
      <property>
        <name>yarn.nodemanager.log.retain-seconds</name>
        <value>86400</value>
      </property>
      <property>
        <name>yarn.nodemanager.remote-app-log-dir</name>
        <value>/export/tmp/app-logs</value>
      </property>
      <property>
        <name>yarn.nodemanager.remote-app-log-dir-suffix</name>
        <value>logs</value>
      </property>
      <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>259200</value>
      </property>
      <property>
        <name>yarn.log-aggregation.retain-check-interval-seconds</name>
        <value>86400</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
    </configuration>

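9、Finally, start everything in the following order:

(1) Start zookeeper (only on 201, 202 and 203):

    zkServer.sh start

(2) Format the HA state in the zookeeper cluster (run on one of the namenodes only):

    hdfs zkfc -formatZK

(3) Start the JournalNode process (only on 201, 202 and 203):

    hadoop-daemon.sh start journalnode

(4) Format the hadoop cluster (run on one of the namenodes only):

    hdfs namenode -format ns1

(5) Start the namenode that was formatted in step (4):

    hadoop-daemon.sh start namenode

(6) On the other namenode, run the following two commands in order to start its namenode process:

    hdfs namenode -bootstrapStandby
    hadoop-daemon.sh start namenode

(7) On one of the namenodes, run the following two commands to start all remaining processes:

    start-dfs.sh
    start-yarn.sh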

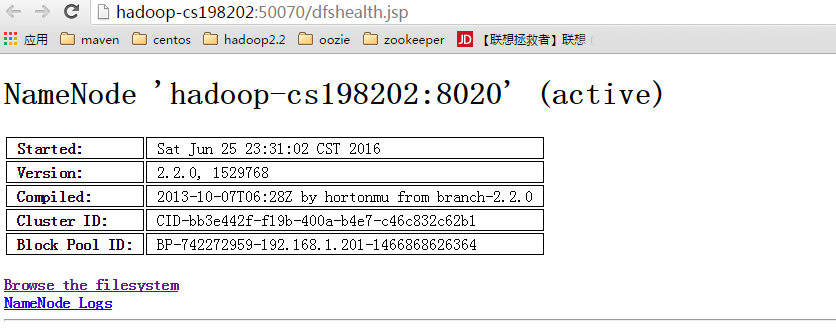

(8) Once everything is up, the following commands show whether each of the two namenodes is standby or active (the output follows each command):

    hdfs haadmin -getServiceState nn1
    standby
    hdfs haadmin -getServiceState nn2
    active
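A healthy HA pair has exactly one active and one standby namenode, and that rule is simple enough to check in a script. The following is a sketch: `check_ha_states` is a hypothetical helper that takes the two state strings as arguments, so it can be tried without a running cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if one state is "active" and the other "standby".
# $1 is the state of nn1, $2 the state of nn2.
check_ha_states() {
  local s1="$1" s2="$2"
  if { [ "$s1" = "active" ] && [ "$s2" = "standby" ]; } ||
     { [ "$s1" = "standby" ] && [ "$s2" = "active" ]; }; then
    echo "ok: one active, one standby"
  else
    echo "unexpected: nn1=$s1 nn2=$s2"
    return 1
  fi
}

# Demo with the states shown in this step.
check_ha_states standby active

# On a live cluster (not run here):
#   check_ha_states "$(hdfs haadmin -getServiceState nn1)" \
#                   "$(hdfs haadmin -getServiceState nn2)"
```

The non-zero return for any other combination also catches the case where both namenodes report standby.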

(9) And of course, don't forget to start the historyserver at the end:

    sh mr-jobhistory-daemon.sh start historyserver

The startup procedure follows http://www.tuicool.com/articles/iUBJJj3

10、After startup, check whether everything succeeded:

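I ran into a few problems during the installation:

1、Starting hadoop commands fails with a memory error. The likely cause is that the memory configured in hadoop-env.sh or yarn-env.sh is too large; lower it or fall back to the defaults.

2、Running start-dfs.sh fails with an error that the hostname cannot be recognized. The cause is that the hadoop environment variables were not set; add them.

3、After changing the environment variables the cluster has to be restarted, otherwise both namenodes may end up in standby.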

