Gradient Descent


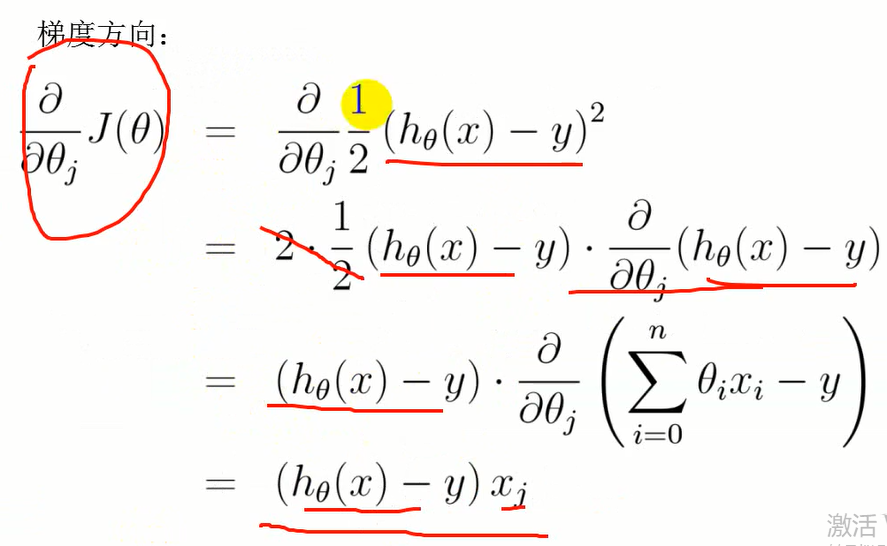

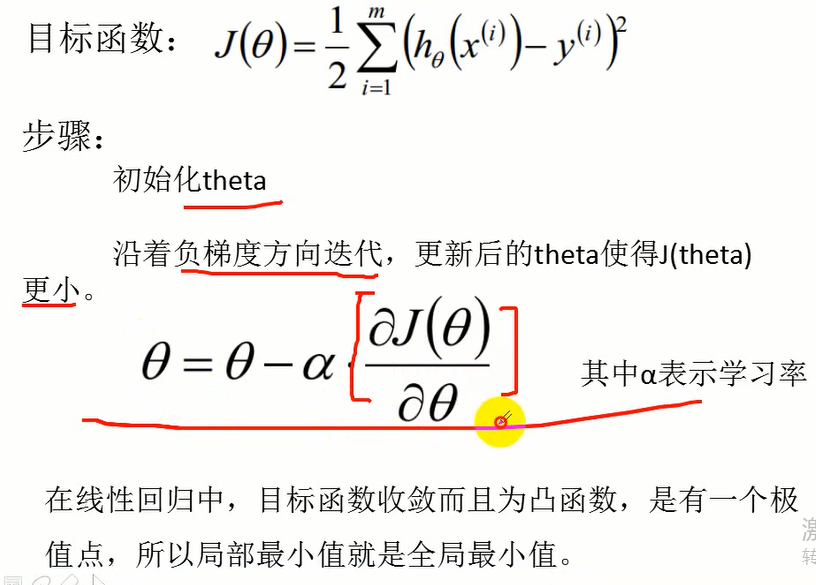

Explaining the gradient

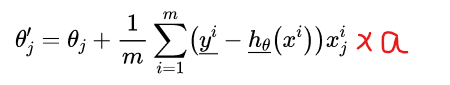

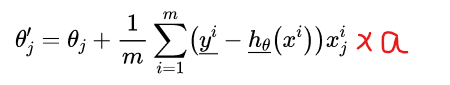

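The formula for the update step above is incorrect; it should be:

$$\theta_j := \theta_j - \alpha \frac{\partial J(\theta)}{\partial \theta_j}$$

where α is the learning rate, also called the step size.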
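To make the update rule concrete, here is a minimal sketch on a made-up one-variable function f(θ) = (θ − 3)², whose derivative is 2(θ − 3). The function, the name `gradient_descent_1d`, and the step sizes are illustrative assumptions, not taken from the article.

```python
# Minimal sketch: gradient descent on a one-variable function.
# f(theta) = (theta - 3)**2 is a made-up example, not from the article.

def f_prime(theta):
    """Derivative of f(theta) = (theta - 3)**2."""
    return 2.0 * (theta - 3.0)


def gradient_descent_1d(theta, alpha, steps):
    """Apply the update theta := theta - alpha * f'(theta) a fixed number of times."""
    for _ in range(steps):
        theta = theta - alpha * f_prime(theta)
    return theta


# A moderate step size moves theta toward the minimum at theta = 3.
print(gradient_descent_1d(theta=0.0, alpha=0.1, steps=100))
# A step size that is too large (1.1 here) overshoots by more each step and diverges.
print(gradient_descent_1d(theta=0.0, alpha=1.1, steps=10))
```

For this function each step multiplies the distance to the minimum by (1 − 2α): with α = 0.1 the distance shrinks by a factor of 0.8 per step, while with α = 1.1 its magnitude grows by a factor of 1.2, which is why the learning rate has to be small enough.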

Example

Suppose we have the sample points (4, 20), (8, 50), (5, 30), (10, 70), and (12, 60), and we want to find a regression function that fits them.

Solution:

Split the sample points into their x and y coordinates:

x = [4, 8, 5, 10, 12]

y = [20, 50, 30, 70, 60]

Assume the regression function is linear: y = theta0 + theta1*x

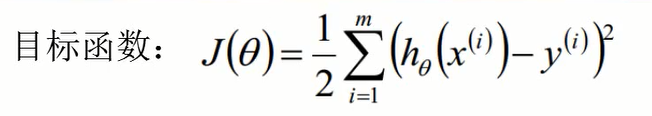

With x and y known and theta0 and theta1 unknown, we can write the objective function below and find the values of theta0 and theta1 that minimize it:

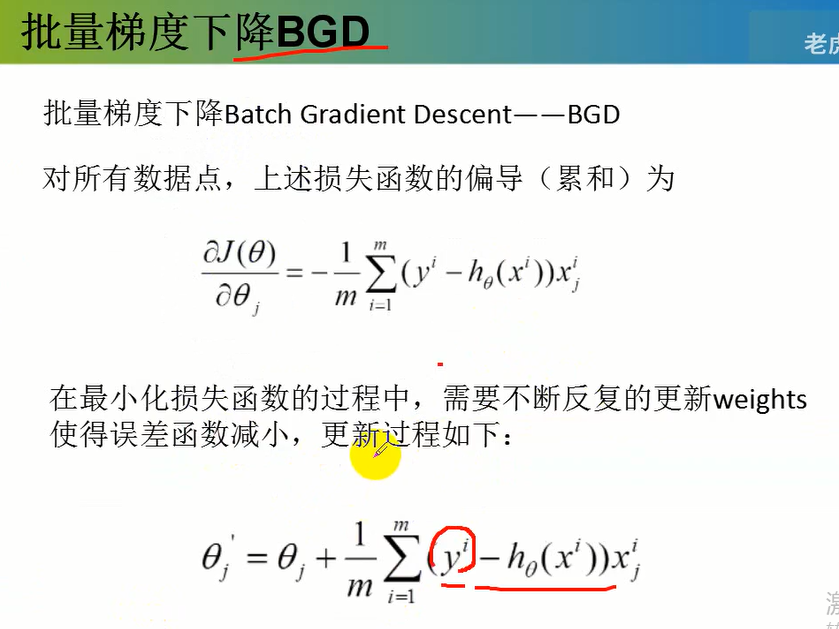

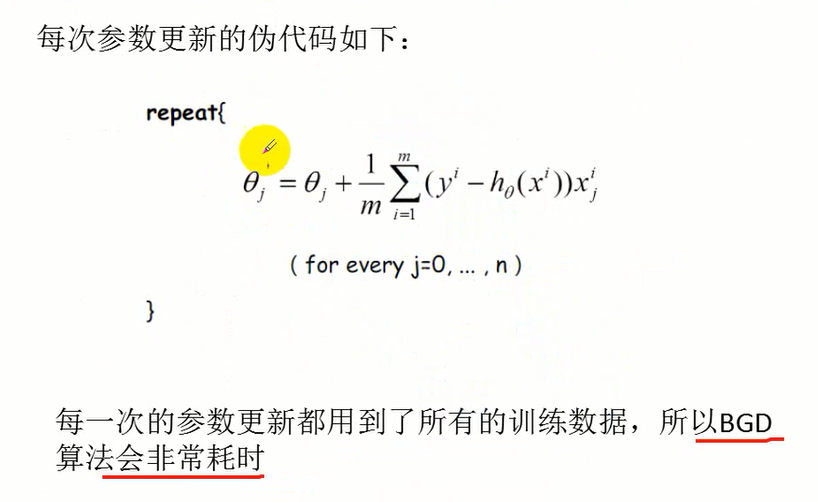

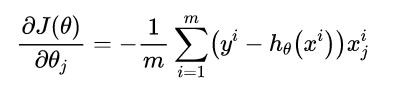
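Assuming the objective is J(θ0, θ1) = ½ Σ (θ0 + θ1·x_i − y_i)² (the figure may use a different constant factor such as 1/m, which only rescales the gradient), its partial derivatives are ∂J/∂θ0 = Σ(h_i − y_i) and ∂J/∂θ1 = Σ(h_i − y_i)·x_i, where h_i = θ0 + θ1·x_i. The sketch below computes both for the sample points and checks them against a central-difference approximation; the function names `cost`, `gradient`, and `numeric_gradient` are illustrative.

```python
# Sketch: least-squares objective for the sample points and its gradient.
# Assumption: J(theta0, theta1) = 1/2 * sum((theta0 + theta1*x - y)**2).

X = [4, 8, 5, 10, 12]
Y = [20, 50, 30, 70, 60]


def cost(theta0, theta1):
    """Half the sum of squared residuals."""
    return 0.5 * sum((theta0 + theta1 * x - y) ** 2 for x, y in zip(X, Y))


def gradient(theta0, theta1):
    """Analytic partial derivatives (dJ/dtheta0, dJ/dtheta1)."""
    residuals = [theta0 + theta1 * x - y for x, y in zip(X, Y)]
    d0 = sum(residuals)
    d1 = sum(r * x for r, x in zip(residuals, X))
    return d0, d1


def numeric_gradient(theta0, theta1, h=1e-6):
    """Central-difference approximation, used only to check gradient()."""
    d0 = (cost(theta0 + h, theta1) - cost(theta0 - h, theta1)) / (2 * h)
    d1 = (cost(theta0, theta1 + h) - cost(theta0, theta1 - h)) / (2 * h)
    return d0, d1


print(cost(0, 0))              # 6150.0
print(gradient(0, 0))          # (-230, -2050)
print(numeric_gradient(0, 0))  # approximately (-230, -2050)
```

The article's code accumulates y − h rather than h − y, i.e. the negative of this gradient, which is why it adds `alpha*dif` to the parameters instead of subtracting.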

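Using gradient descent, iterate to obtain new values of theta0 and theta1.

Substitute the new theta0 and theta1 into the objective function to get the new value j1, and compare it with j0, the value obtained from the previous theta0 and theta1. When |j1 − j0| falls below a threshold (a very small number), the new theta0 and theta1 can be taken as the solution.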
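The stopping rule just described can be sketched as a small reusable function. Assumptions not in the article: the objective is ½ Σ (h − y)², a `max_iter` cap is added as a safety net, and the example call uses alpha = 0.001 and threshold = 1e-12; `fit_line_gd` is a hypothetical name.

```python
# Sketch: fit y = theta0 + theta1*x by gradient descent, stopping when the
# objective changes by no more than `threshold` between two iterations.

def fit_line_gd(xs, ys, alpha, threshold, max_iter=1_000_000):
    theta0 = theta1 = 0.0

    def cost(t0, t1):
        # Assumed objective: half the sum of squared residuals.
        return 0.5 * sum((t0 + t1 * x - y) ** 2 for x, y in zip(xs, ys))

    j0 = cost(theta0, theta1)
    for it in range(1, max_iter + 1):
        residuals = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        g0 = sum(residuals)
        g1 = sum(r * x for r, x in zip(residuals, xs))
        # Both parameters are updated from the same (old) gradient.
        theta0 -= alpha * g0
        theta1 -= alpha * g1
        j1 = cost(theta0, theta1)
        if abs(j1 - j0) <= threshold:
            return theta0, theta1, it
        j0 = j1
    return theta0, theta1, max_iter


theta0, theta1, iterations = fit_line_gd(
    [4, 8, 5, 10, 12], [20, 50, 30, 70, 60], alpha=0.001, threshold=1e-12
)
print(theta0, theta1, iterations)
```

The learning rate here is an assumption chosen so the loop reaches the least-squares line in a reasonable number of iterations; for this data and this objective, step sizes above roughly 0.0057 make the iteration diverge.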

The code (Python):

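```python
# Model: y = theta0 + theta1*x
X = [4, 8, 5, 10, 12]
y = [20, 50, 30, 70, 60]

theta0 = theta1 = 0

# Learning rate (step size)
alpha = 0.00001

# Iteration counter
cnt = 0

# Mean squared error from the previous iteration
error0 = 0

# Threshold on the change in error between two iterations, used to stop the loop
threshold = 0.0000001

m = len(X)

while True:
    # dif[0] = sum(y - h) and dif[1] = sum((y - h) * x): the negative partial
    # derivatives of 1/2 * sum((h - y)**2) with respect to theta0 and theta1
    dif = [0, 0]
    for i in range(m):
        dif[0] += y[i] - (theta0 + theta1*X[i])
        dif[1] += (y[i] - (theta0 + theta1*X[i])) * X[i]

    # Step along the negative gradient (hence the plus sign)
    theta0 = theta0 + alpha*dif[0]
    theta1 = theta1 + alpha*dif[1]

    # Mean squared error for the new parameters,
    # reset every iteration so errors do not accumulate across iterations
    error1 = 0
    for i in range(m):
        error1 += (y[i] - (theta0 + theta1*X[i]))**2
    error1 /= m

    if abs(error1 - error0) <= threshold:
        break
    error0 = error1
    cnt += 1

print(theta0, theta1, cnt)


def predicty(theta0, theta1, x_test):
    """Predict y for x_test with the fitted line."""
    return theta0 + theta1*x_test


print(predicty(theta0, theta1, 15))
```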

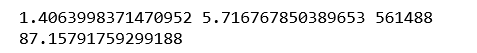

Result:

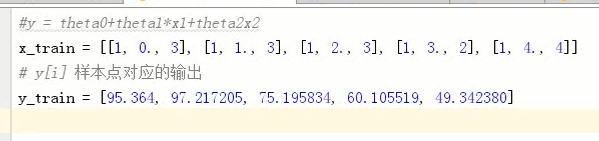
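The gradient-descent estimate can be checked against the exact least-squares line, computed here with the standard closed-form formulas slope = Sxy / Sxx and intercept = mean(y) − slope · mean(x). This check is an addition, not part of the original post; with a learning rate as small as 0.00001 and the stopping threshold above, the loop may stop before it reaches these values, particularly for theta0.

```python
# Sketch: closed-form least-squares line, as a reference for the gradient-descent result.
from fractions import Fraction

xs = [4, 8, 5, 10, 12]
ys = [20, 50, 30, 70, 60]

n = len(xs)
mean_x = Fraction(sum(xs), n)
mean_y = Fraction(sum(ys), n)
# Exact rational arithmetic avoids rounding in the reference values.
sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
sxx = sum((x - mean_x) ** 2 for x in xs)

theta1 = sxy / sxx
theta0 = mean_y - theta1 * mean_x
print(theta0, theta1)               # 10/7 40/7
print(float(theta0 + theta1 * 15))  # prediction at x = 15
```

So the least-squares line is y ≈ 1.4286 + 5.7143·x, and its prediction at x = 15 is 610/7 ≈ 87.14.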

Exercise: implement linear regression with two features (plus a bias term) by gradient descent:

```python
# Model: y = theta0*x0 + theta1*x1 + theta2*x2, with x0 = 1 as the bias term
# X = [[1,0,3],[1,1,3],[1,2,3],[1,3,2],[1,4,4]]  (rows of x0, x1, x2)
X0 = [1, 1, 1, 1, 1]
X1 = [0, 1, 2, 3, 4]
X2 = [3, 3, 3, 2, 4]
y = [95.364, 97.217205, 75.195834, 60.105519, 49.342380]

theta0 = theta1 = theta2 = 0

# Learning rate (step size)
alpha = 0.00001

# Iteration counter
cnt = 0

# Mean squared error from the previous iteration
error0 = 0

# Threshold on the change in error between two iterations, used to stop the loop
threshold = 0.000000001

# Number of samples (X itself is only defined in the comment above)
m = len(y)

while True:
    # dif[j] accumulates sum((y - h) * x_j), the negative gradient for theta_j
    dif = [0, 0, 0]
    for i in range(m):
        # Residual of sample i under the current parameters
        r = y[i] - (theta0*X0[i] + theta1*X1[i] + theta2*X2[i])
        dif[0] += r*X0[i]
        dif[1] += r*X1[i]
        dif[2] += r*X2[i]

    theta0 = theta0 + alpha*dif[0]
    theta1 = theta1 + alpha*dif[1]
    theta2 = theta2 + alpha*dif[2]

    # Mean squared error for the new parameters, reset every iteration
    error1 = 0
    for i in range(m):
        error1 += (y[i] - (theta0*X0[i] + theta1*X1[i] + theta2*X2[i]))**2
    error1 /= m

    if abs(error1 - error0) <= threshold:
        break
    error0 = error1
    cnt += 1

print(theta0, theta1, theta2, cnt)


def predicty(theta0, theta1, theta2, x1_test, x2_test):
    """Predict y for (x1_test, x2_test); x0 is fixed at 1."""
    return theta0 + theta1*x1_test + theta2*x2_test


print(predicty(theta0, theta1, theta2, 0, 3))
```

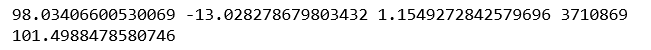

Result:
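For comparison with the exercise's output, the exact least-squares parameters can be obtained by solving the normal equations (AᵀA)θ = Aᵀy, where the columns of A are X0, X1, and X2. This sketch is an addition to the original exercise and uses plain Gaussian elimination with partial pivoting rather than a library solver; as with the first example, gradient descent with a very small learning rate may stop short of this solution.

```python
# Sketch: solve the normal equations (A^T A) theta = A^T y for the exercise data.
X0 = [1, 1, 1, 1, 1]
X1 = [0, 1, 2, 3, 4]
X2 = [3, 3, 3, 2, 4]
y = [95.364, 97.217205, 75.195834, 60.105519, 49.342380]

columns = [X0, X1, X2]
k = len(columns)

# Augmented matrix [A^T A | A^T y].
M = [
    [sum(a * b for a, b in zip(columns[i], columns[j])) for j in range(k)]
    + [sum(a * b for a, b in zip(columns[i], y))]
    for i in range(k)
]

# Forward elimination with partial pivoting.
for col in range(k):
    pivot = max(range(col, k), key=lambda r: abs(M[r][col]))
    M[col], M[pivot] = M[pivot], M[col]
    for r in range(col + 1, k):
        factor = M[r][col] / M[col][col]
        for c in range(col, k + 1):
            M[r][c] -= factor * M[col][c]

# Back substitution gives [theta0, theta1, theta2].
theta = [0.0] * k
for r in reversed(range(k)):
    rest = sum(M[r][c] * theta[c] for c in range(r + 1, k))
    theta[r] = (M[r][k] - rest) / M[r][r]

print(theta)
```

At the least-squares solution the residuals are orthogonal to every column of A, which is a convenient way to verify the result.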
