Centos7系统-postgresql+etcd+patroni+haproxy+keepalived高可用集群部署

一、概况

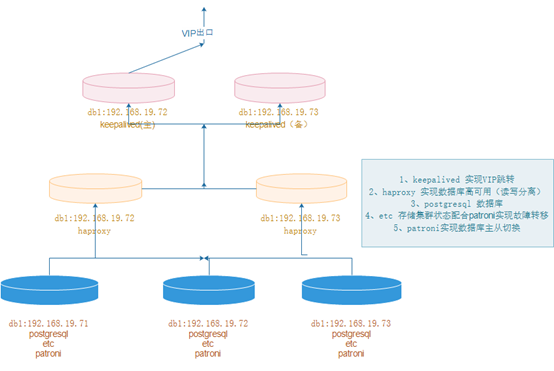

1、概念

pgsql高可用集群采用postgresql+etcd+patroni+haproxy+keepalived等软件实现,以postgresql做数据库,etcd存储集群状态,patroni与etcd结合实现数据库集群故障切换,

haproxy实现数据库高可用(读读写分离),keepalived实现VIP跳转。

2、拓扑图

软件下载地址:

链接:https://pan.baidu.com/s/1VIWwXcfQRCumJjEXndSXPQ

提取码:5bpz

二、postgresql部署(三个节点)

1、下载解压

https://www.enterprisedb.com/download-postgresql-binaries mkdir -p /data/pg_data tar xf postgresql-10.18-1-linux-x64-binaries.tar.gz -C /data/

2、创建用户并授权

useradd postgres passwd postgres chown -R postgres.postgres /data/

3、初始化数据库(postgres用户下)

切换目录 [root@centos7 ~]# su – postgres 初始化目录 [postgres@centos7 ~]$ /data/pgsql/bin/initdb -D /data/pg_data/

4、配置变量

su – postgres vim .bash_profile PATH=$PATH:$HOME/bin export PATH export PATH export PGHOME=/data/pgsql export PATH=$PATH:$PGHOME/bin export PGDATA=/data/pg_data export PGLOG=/data/pg_log/pg.log source .bash_profile mkdir -p /data/pg_log chown postgres.postgres /data/pg_data chown postgres.postgres /data/pg_log

5、配置postgresql启动脚本

vim /etc/systemd/system/postgresql.service [Unit] Description=PostgreSQL database server After=network.target [Service] Type=forking User=postgres Group=postgres ExecStart= /data/pgsql/bin/pg_ctl -D /data/pg_data/ start ExecReload= /data/pgsql/bin/pg_ctl -D /data/pg_data/ restart ExecStop= /data/pgsql/bin/pg_ctl -D /data/pg_data/ stop PrivateTmp=true [Install] WantedBy=multi-user.target

6、启动与关闭

systemctl daemon-reload 开启 systemctl start postgresql 关闭 systemctl stop postgresql 重启 systemctl restart postgresql

7、数据库添加密码

[postgres@pgsql-19 ~]$ psql -U postgres -h localhost postgres=# alter user postgres with password 'P@sswrd';

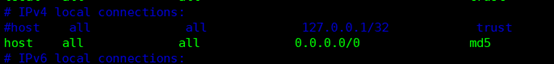

8、允许远程连接

vim /data/pg_data/pg_hba.conf host all all 0.0.0.0/0 md5

vim /data/pg_data/postgresql.conf listen_addresses = '*' password_encryption = on 重启数据库 systemctl restart postgresql

三、etcd部署(三个节点)

1、下载解压

tar xf etcd-v3.1.20-linux-amd64.tar.gz -C /usr/local/ ln -s /usr/local/etcd-v3.1.20-linux-amd64 /usr/local/etcd

2、文件配置

mkdir -p /usr/local/etcd/data/etcd vim /usr/local/etcd/conf.yml name: pgsql_1971 data-dir: /usr/local/etcd/data/etcd listen-client-urls: http://192.168.19.71:2379,http://127.0.0.1:2379 advertise-client-urls: http://192.168.19.71:2379,http://127.0.0.1:2379 listen-peer-urls: http://192.168.19.71:2380 initial-advertise-peer-urls: http://192.168.19.71:2380 initial-cluster: pgsql_1971=http://192.168.19.71:2380,pgsql_1972=http://192.168.19.72:2380,pgsql_1973=http://192.168.19.73:2380 initial-cluster-token: etcd-cluster-token initial-cluster-state: new mkdir -p /usr/local/etcd/data/etc vim /usr/local/etcd/conf.yml name: pgsql_1972 data-dir: /usr/local/etcd/data/etcd listen-client-urls: http://192.168.19.72:2379,http://127.0.0.1:2379 advertise-client-urls: http://192.168.19.72:2379,http://127.0.0.1:2379 listen-peer-urls: http://192.168.19.72:2380 initial-advertise-peer-urls: http://192.168.19.72:2380 initial-cluster: pgsql_1971=http://192.168.19.71:2380,pgsql_1972=http://192.168.19.72:2380,pgsql_1973=http://192.168.19.73:2380 initial-cluster-token: etcd-cluster-token initial-cluster-state: new mkdir -p /usr/local/etcd/data/etc vim /usr/local/etcd/conf.yml name: pgsql_1973 data-dir: /usr/local/etcd/data/etcd listen-client-urls: http://192.168.19.73:2379,http://127.0.0.1:2379 advertise-client-urls: http://192.168.19.73:2379,http://127.0.0.1:2379 listen-peer-urls: http://192.168.19.73:2380 initial-advertise-peer-urls: http://192.168.19.73:2380 initial-cluster: pgsql_1971=http://192.168.19.71:2380,pgsql_1972=http://192.168.19.72:2380,pgsql_1973=http://192.168.19.73:2380 initial-cluster-token: etcd-cluster-token initial-cluster-state: new

3、启动并加入到开机自启中

加入开机自启里边 nohup /usr/local/etcd/etcd --config-file=/usr/local/etcd/conf.yml &

4、集群检查

netstat -lntup|grep etcd /usr/local/etcd/etcdctl member list

四、patroni部署(三个节点)

1、更新postgresql.conf文件

postgresql.conf配置如下

max_connections = '500'

max_wal_senders = '10'

port = '5432'

listen_addresses = '*'

synchronous_commit = on

full_page_writes = on

wal_log_hints = on

synchronous_standby_names = '*'

max_replication_slots = 10

wal_level = replica

注:wal_log_hints = on,synchronous_standby_names = '*' 这两个参数会导致数据库执行呆滞,后来者欢迎留言看是怎么回事儿

2、更新pg_hba.conf文件

vim /data/pg_data/pg_hba.conf 清理最后配置的配置,新增以下 local all all peer host all all 127.0.0.1/32 md5 host all postgres 127.0.0.1/32 md5 host all all 192.168.19.0/24 md5 host all all ::1/128 md5 local replication replicator peer host replication replicator 127.0.0.1/32 md5 host replication replicator ::1/128 md5 host replication replicator 192.168.19.71/32 md5 host replication replicator 192.168.19.72/32 md5 host replication replicator 192.168.19.73/32 md5

以上配置完成后,重启数据库

3、在主节点上创建复制槽,很重要,patroni会用到

postgres=# create user replicator replication login encrypted password '1qaz2wsx';

4、配置stream replication(在两个从节点操作)

systemctl stop postgresql

su - postgres cd /data/ && rm -rf pg_data /data/pgsql/bin/pg_basebackup -h 192.168.19.71 -D /data/pg_data -U replicator -v -P -R

启动数据库

systemctl start postgresql

5、安装patroni(三个节点)

yum install -y python3 python-psycopg2 python3-devel pip3 install --upgrade pip pip3 install psycopg2-binary -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com pip3 install patroni[etcd] -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com 验证是否安装成功 which patroni patronictl --help

6、创建patroni配置文件

mkdir -p /usr/patroni/conf cd /usr/patroni/conf/

node1

scope: batman

namespace: /service/

name: postgresql1

restapi:

listen: 192.168.19.71:8008

connect_address: 192.168.19.71:8008

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# keyfile: /etc/ssl/private/ssl-cert-snakeoil.key

# authentication:

# username: username

# password: password

# ctl:

# insecure: false # Allow connections to SSL sites without certs

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# cacert: /etc/ssl/certs/ssl-cacert-snakeoil.pem

etcd:

#Provide host to do the initial discovery of the cluster topology:

host: 192.168.19.71:2379

#Or use "hosts" to provide multiple endpoints

#Could be a comma separated string:

#hosts: host1:port1,host2:port2

#host: 192.168.19.71:2379,192.168.19.71:2379,192.168.19.73:2379

#or an actual yaml list:

#hosts:

#- host1:port1

#- host2:port2

#Once discovery is complete Patroni will use the list of advertised clientURLs

#It is possible to change this behavior through by setting:

#use_proxies: true

#raft:

# data_dir: .

# self_addr: 192.168.19.71:2222

# partner_addrs:

# - 192.168.19.71:2223

# - 192.168.19.71:2224

bootstrap:

# this section will be written into Etcd:/<namespace>/<scope>/config after initializing new cluster

# and all other cluster members will use it as a `global configuration`

dcs:

ttl: 30

loop_wait: 10

retry_timeout: 10

maximum_lag_on_failover: 1048576

# master_start_timeout: 300

# synchronous_mode: false

#standby_cluster:

#host: 192.168.19.71

#port: 1111

#primary_slot_name: patroni

postgresql:

use_pg_rewind: true

# use_slots: true

parameters:

# wal_level: hot_standby

# hot_standby: "on"

# max_connections: 100

# max_worker_processes: 8

# wal_keep_segments: 8

# max_wal_senders: 10

# max_replication_slots: 10

# max_prepared_transactions: 0

# max_locks_per_transaction: 64

# wal_log_hints: "on"

# track_commit_timestamp: "off"

# archive_mode: "on"

# archive_timeout: 1800s

# archive_command: mkdir -p ../wal_archive && test ! -f ../wal_archive/%f && cp %p ../wal_archive/%f

# recovery_conf:

# restore_command: cp ../wal_archive/%f %p

# some desired options for 'initdb'

initdb: # Note: It needs to be a list (some options need values, others are switches)

- encoding: UTF8

- data-checksums

pg_hba: # Add following lines to pg_hba.conf after running 'initdb'

# For kerberos gss based connectivity (discard @.*$)

#- host replication replicator 192.168.19.71/32 gss include_realm=0

#- host all all 0.0.0.0/0 gss include_realm=0

- host replication replicator 192.168.19.71/32 md5

- host all all 0.0.0.0/0 md5

# - hostssl all all 0.0.0.0/0 md5

# Additional script to be launched after initial cluster creation (will be passed the connection URL as parameter)

# post_init: /usr/local/bin/setup_cluster.sh

# Some additional users users which needs to be created after initializing new cluster

users:

admin:

password: admin

options:

- createrole

- createdb

postgresql:

listen: 192.168.19.71:5432

connect_address: 192.168.19.71:5432

data_dir: /data/pg_data

bin_dir: /data/pgsql/bin

# config_dir:

pgpass: /tmp/pgpass0

authentication:

replication:

username: replicator

password: 1qaz2wsx

superuser:

username: postgres

password: P@sswrd

rewind: # Has no effect on postgres 10 and lower

username: postgres

password: P@sswrd

# Server side kerberos spn

# krbsrvname: postgres

parameters:

# Fully qualified kerberos ticket file for the running user

# same as KRB5CCNAME used by the GSS

# krb_server_keyfile: /var/spool/keytabs/postgres

unix_socket_directories: '.'

# Additional fencing script executed after acquiring the leader lock but before promoting the replica

#pre_promote: /path/to/pre_promote.sh

#watchdog:

# mode: automatic # Allowed values: off, automatic, required

# device: /dev/watchdog

# safety_margin: 5

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: false

------------------------------------------------------------------------------

node2

scope: batman

namespace: /service/

name: postgresql2

restapi:

listen: 192.168.19.72:8008

connect_address: 192.168.19.72:8008

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# keyfile: /etc/ssl/private/ssl-cert-snakeoil.key

# authentication:

# username: username

# password: password

# ctl:

# insecure: false # Allow connections to SSL sites without certs

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# cacert: /etc/ssl/certs/ssl-cacert-snakeoil.pem

etcd:

#Provide host to do the initial discovery of the cluster topology:

host: 192.168.19.72:2379

#Or use "hosts" to provide multiple endpoints

#Could be a comma separated string:

#hosts: host1:port1,host2:port2

#host: 192.168.19.71:2379,192.168.19.72:2379,192.168.19.73:2379

#or an actual yaml list:

#hosts:

#- host1:port1

#- host2:port2

#Once discovery is complete Patroni will use the list of advertised clientURLs

#It is possible to change this behavior through by setting:

#use_proxies: true

#raft:

# data_dir: .

# self_addr: 192.168.19.72:2222

# partner_addrs:

# - 192.168.19.72:2223

# - 192.168.19.72:2224

bootstrap:

# this section will be written into Etcd:/<namespace>/<scope>/config after initializing new cluster

# and all other cluster members will use it as a `global configuration`

dcs:

ttl: 30

loop_wait: 10

retry_timeout: 10

maximum_lag_on_failover: 1048576

# master_start_timeout: 300

# synchronous_mode: false

#standby_cluster:

#host: 192.168.19.72

#port: 1111

#primary_slot_name: patroni

postgresql:

use_pg_rewind: true

# use_slots: true

parameters:

# wal_level: hot_standby

# hot_standby: "on"

# max_connections: 100

# max_worker_processes: 8

# wal_keep_segments: 8

# max_wal_senders: 10

# max_replication_slots: 10

# max_prepared_transactions: 0

# max_locks_per_transaction: 64

# wal_log_hints: "on"

# track_commit_timestamp: "off"

# archive_mode: "on"

# archive_timeout: 1800s

# archive_command: mkdir -p ../wal_archive && test ! -f ../wal_archive/%f && cp %p ../wal_archive/%f

# recovery_conf:

# restore_command: cp ../wal_archive/%f %p

# some desired options for 'initdb'

initdb: # Note: It needs to be a list (some options need values, others are switches)

- encoding: UTF8

- data-checksums

pg_hba: # Add following lines to pg_hba.conf after running 'initdb'

# For kerberos gss based connectivity (discard @.*$)

#- host replication replicator 192.168.19.72/32 gss include_realm=0

#- host all all 0.0.0.0/0 gss include_realm=0

- host replication replicator 192.168.19.72/32 md5

- host all all 0.0.0.0/0 md5

# - hostssl all all 0.0.0.0/0 md5

# Additional script to be launched after initial cluster creation (will be passed the connection URL as parameter)

# post_init: /usr/local/bin/setup_cluster.sh

# Some additional users users which needs to be created after initializing new cluster

users:

admin:

password: admin

options:

- createrole

- createdb

postgresql:

listen: 192.168.19.72:5432

connect_address: 192.168.19.72:5432

data_dir: /data/pg_data

bin_dir: /data/pgsql/bin

# config_dir:

pgpass: /tmp/pgpass0

authentication:

replication:

username: replicator

password: 1qaz2wsx

superuser:

username: postgres

password: P@sswrd

rewind: # Has no effect on postgres 10 and lower

username: postgres

password: P@sswrd

# Server side kerberos spn

# krbsrvname: postgres

parameters:

# Fully qualified kerberos ticket file for the running user

# same as KRB5CCNAME used by the GSS

# krb_server_keyfile: /var/spool/keytabs/postgres

unix_socket_directories: '.'

# Additional fencing script executed after acquiring the leader lock but before promoting the replica

#pre_promote: /path/to/pre_promote.sh

#watchdog:

# mode: automatic # Allowed values: off, automatic, required

# device: /dev/watchdog

# safety_margin: 5

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: false

------------------------------------------------------------------------------

node3

scope: batman

namespace: /service/

name: postgresql3

restapi:

listen: 192.168.19.73:8008

connect_address: 192.168.19.73:8008

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# keyfile: /etc/ssl/private/ssl-cert-snakeoil.key

# authentication:

# username: username

# password: password

# ctl:

# insecure: false # Allow connections to SSL sites without certs

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# cacert: /etc/ssl/certs/ssl-cacert-snakeoil.pem

etcd:

#Provide host to do the initial discovery of the cluster topology:

host: 192.168.19.73:2379

#Or use "hosts" to provide multiple endpoints

#Could be a comma separated string:

#hosts: host1:port1,host2:port2

#host: 192.168.19.73:2379,192.168.19.73:2379,192.168.19.73:2379

#or an actual yaml list:

#hosts:

#- host1:port1

#- host2:port2

#Once discovery is complete Patroni will use the list of advertised clientURLs

#It is possible to change this behavior through by setting:

#use_proxies: true

#raft:

# data_dir: .

# self_addr: 192.168.19.73:2222

# partner_addrs:

# - 192.168.19.73:2223

# - 192.168.19.73:2224

bootstrap:

# this section will be written into Etcd:/<namespace>/<scope>/config after initializing new cluster

# and all other cluster members will use it as a `global configuration`

dcs:

ttl: 30

loop_wait: 10

retry_timeout: 10

maximum_lag_on_failover: 1048576

# master_start_timeout: 300

# synchronous_mode: false

#standby_cluster:

#host: 192.168.19.73

#port: 1111

#primary_slot_name: patroni

postgresql:

use_pg_rewind: true

# use_slots: true

parameters:

# wal_level: hot_standby

# hot_standby: "on"

# max_connections: 100

# max_worker_processes: 8

# wal_keep_segments: 8

# max_wal_senders: 10

# max_replication_slots: 10

# max_prepared_transactions: 0

# max_locks_per_transaction: 64

# wal_log_hints: "on"

# track_commit_timestamp: "off"

# archive_mode: "on"

# archive_timeout: 1800s

# archive_command: mkdir -p ../wal_archive && test ! -f ../wal_archive/%f && cp %p ../wal_archive/%f

# recovery_conf:

# restore_command: cp ../wal_archive/%f %p

# some desired options for 'initdb'

initdb: # Note: It needs to be a list (some options need values, others are switches)

- encoding: UTF8

- data-checksums

pg_hba: # Add following lines to pg_hba.conf after running 'initdb'

# For kerberos gss based connectivity (discard @.*$)

#- host replication replicator 192.168.19.73/32 gss include_realm=0

#- host all all 0.0.0.0/0 gss include_realm=0

- host replication replicator 192.168.19.73/32 md5

- host all all 0.0.0.0/0 md5

# - hostssl all all 0.0.0.0/0 md5

# Additional script to be launched after initial cluster creation (will be passed the connection URL as parameter)

# post_init: /usr/local/bin/setup_cluster.sh

# Some additional users users which needs to be created after initializing new cluster

users:

admin:

password: admin

options:

- createrole

- createdb

postgresql:

listen: 192.168.19.73:5432

connect_address: 192.168.19.73:5432

data_dir: /data/pg_data

bin_dir: /data/pgsql/bin

# config_dir:

pgpass: /tmp/pgpass0

authentication:

replication:

username: replicator

password: 1qaz2wsx

superuser:

username: postgres

password: P@sswrd

rewind: # Has no effect on postgres 10 and lower

username: postgres

password: P@sswrd

# Server side kerberos spn

# krbsrvname: postgres

parameters:

# Fully qualified kerberos ticket file for the running user

# same as KRB5CCNAME used by the GSS

# krb_server_keyfile: /var/spool/keytabs/postgres

unix_socket_directories: '.'

# Additional fencing script executed after acquiring the leader lock but before promoting the replica

#pre_promote: /path/to/pre_promote.sh

#watchdog:

# mode: automatic # Allowed values: off, automatic, required

# device: /dev/watchdog

# safety_margin: 5

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: false

7、依次启动patroni服务

Postgres用户下启动 nohup patroni /usr/patroni/conf/patroni_postgresql.yml &

8、patroni配置启动脚本

为了方便开机自启,故配置成 patroni.service,3个node都需要进行配置,配置好patroni.service后就可以直接在root用户下切换Leader以及重启postgres节点等操作

[root@pgsql_1971 ~]$ vim /etc/systemd/system/patroni.service [Unit] Description=patroni - a high-availability PostgreSQL Documentation=https://patroni.readthedocs.io/en/latest/index.html After=syslog.target network.target etcd.target Wants=network-online.target [Service] Type=simple User=postgres Group=postgres PermissionsStartOnly=true ExecStart=/usr/local/bin/patroni /usr/patroni/conf/patroni_postgresql.yml ExecReload=/bin/kill -HUP $MAINPID LimitNOFILE=65536 KillMode=process KillSignal=SIGINT Restart=on-abnormal RestartSec=30s TimeoutSec=0 [Install] WantedBy=multi-user.target

9、禁用postgresql脚本采用patroni服务启动数据库

禁止 postgresql 的自启动,通过 patroni 来管理 postgresql systemctl stop postgresql systemctl status postgresql systemctl disable postgresql systemctl status patroni systemctl start patroni systemctl enable patroni

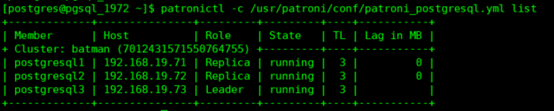

五、集群检查

1、数据库集群检查

patronictl -c /usr/patroni/conf/patroni_postgresql.yml list

2、etcd检查

root@pgsql_1971 ~]# /usr/local/etcd/etcdctl ls /service/batman

root@pgsql_1971 ~]# /usr/local/etcd/etcdctl get /service/batman/members/postgresql1

六、haproxy部署(两个从节点)

1、安装haproxy服务

yum install -y haproxy cp -r /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg_bak

2、配置文件

vi /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# 全局定义

global

# log语法:log [max_level_1]

# 全局的日志配置,使用log关键字,指定使用127.0.0.1上的syslog服务中的local0日志设备,

# 记录日志等级为info的日志

# log 127.0.0.1 local0 info

log 127.0.0.1 local1 notice

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

# 定义每个haproxy进程的最大连接数 ,由于每个连接包括一个客户端和一个服务器端,

# 所以单个进程的TCP会话最大数目将是该值的两倍。

maxconn 4096

# 用户,组

user haproxy

group haproxy

# 以守护进程的方式运行

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# 默认部分的定义

defaults

# mode语法:mode {http|tcp|health} 。http是七层模式,tcp是四层模式,health是健康检测,返回OK

mode tcp

# 使用127.0.0.1上的syslog服务的local3设备记录错误信息

log 127.0.0.1 local3 err

#if you set mode to http,then you nust change tcplog into httplog

option tcplog

# 启用该项,日志中将不会记录空连接。所谓空连接就是在上游的负载均衡器或者监控系统为了

#探测该服务是否存活可用时,需要定期的连接或者获取某一固定的组件或页面,或者探测扫描

#端口是否在监听或开放等动作被称为空连接;官方文档中标注,如果该服务上游没有其他的负

#载均衡器的话,建议不要使用该参数,因为互联网上的恶意扫描或其他动作就不会被记录下来

option dontlognull

# 定义连接后端服务器的失败重连次数,连接失败次数超过此值后将会将对应后端服务器标记为不可用

retries 3

# 当使用了cookie时,haproxy将会将其请求的后端服务器的serverID插入到cookie中,以保证

#会话的SESSION持久性;而此时,如果后端的服务器宕掉了,但是客户端的cookie是不会刷新的

#,如果设置此参数,将会将客户的请求强制定向到另外一个后端server上,以保证服务的正常

option redispatch

#等待最大时长 When a server's maxconn is reached, connections are left pending in a queue which may be server-specific or global to the backend.

timeout queue 525600m

# 设置成功连接到一台服务器的最长等待时间,默认单位是毫秒

timeout connect 10s

# 客户端非活动状态的超时时长 The inactivity timeout applies when the client is expected to acknowledge or send data.

timeout client 525600m

# Set the maximum inactivity time on the server side.The inactivity timeout applies when the server is expected to acknowledge or send data.

timeout server 525600m

timeout check 5s

maxconn 5120

#---------------------------------------------------------------------

# 配置haproxy web监控,查看统计信息

listen status

bind 0.0.0.0:1080

mode http

log global

stats enable

# stats是haproxy的一个统计页面的套接字,该参数设置统计页面的刷新间隔为30s

stats refresh 30s

stats uri /haproxy-stats

# 设置统计页面认证时的提示内容

stats realm Private lands

# 设置统计页面认证的用户和密码,如果要设置多个,另起一行写入即可

stats auth admin:passw0rd

# 隐藏统计页面上的haproxy版本信息

# stats hide-version

#---------------------------------------------------------------------

listen master

bind *:5000

mode tcp

option tcplog

balance roundrobin

option httpchk OPTIONS /master

http-check expect status 200

default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions

server node1 192.168.19.71:5432 maxconn 1000 check port 8008 inter 5000 rise 2 fall 2

server node2 192.168.19.72:5432 maxconn 1000 check port 8008 inter 5000 rise 2 fall 2

server node3 192.168.19.73:5432 maxconn 1000 check port 8008 inter 5000 rise 2 fall 2

listen replicas

bind *:5001

mode tcp

option tcplog

balance roundrobin

option httpchk OPTIONS /replica

http-check expect status 200

default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions

server node1 192.168.19.71:5432 maxconn 1000 check port 8008 inter 5000 rise 2 fall 2

server node2 192.168.19.72:5432 maxconn 1000 check port 8008 inter 5000 rise 2 fall 2

server node3 192.168.19.73:5432 maxconn 1000 check port 8008 inter 5000 rise 2 fall 2

3、启动服务并加入开机自启

systemctl start haproxy systemctl enable haproxy systemctl status haproxy 浏览器访问http://192.168.19.72:1080/haproxy-stats输入用户名admin密码passw0rd 这里我们通过5000端口和5001端口分别来提供写服务和读服务,如果需要对数据库写入数据只需要对外提供192.168.216.136:5000即可,可以模拟主库故障,即关闭其中的master节点来验证是否会进行自动主从切换

七、keepalived部署(两个从节点)

1、安装keepalived服务

yum install -y keepalived

2、配置更新

pg-node1

cat keepalived.conf

global_defs {

notification_email {

root@localhost #收邮件人

}

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id master-node

router_id LVS_01

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1221

}

virtual_ipaddress {

192.168.19.110/24 dev eth0 label eth0:0

}

}

-------------------------------------------------------------------------------

pg-node2

cat keepalived.conf

!Configuration File for keepalived

global_defs {

notification_email {

root@localhost #收邮件人

}

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id master-node

router_id LVS_02

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1221

}

virtual_ipaddress {

192.168.19.110/24 dev eth0 label eth0:0

}

}

3、启动keepalived服务

systemctl restart keepalived systemctl enable keepalived

4、对外提供访问端口

VIP:192.168.19.110 端口:5000 读写权限 端口:5001 读权限

注:以上部署有个问题就是haproxy与keepalived相互依存的问题,我的观点是除非死机否则不切换,所以我没有做脚本控制,其他要是有问题,欢迎大佬们提提意见哦!!!