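Monitoring server performance with Prometheus + Grafana + node_exporter

Storage: Prometheus, a time-series database that collects (scrapes) and stores the metrics;

Data source: node_exporter, which exposes host metrics over HTTP for Prometheus to scrape;

Visualization: Grafana, used to display the data;

Alerting: Alertmanager (not set up here)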

1. Download node_exporter with wget, extract it, and start it:

wget https://github.com/prometheus/node_exporter/releases/download/v0.18.1/node_exporter-0.18.1.linux-amd64.tar.gz --no-check-certificate
tar -xf node_exporter-0.18.1.linux-amd64.tar.gz
cd node_exporter-0.18.1.linux-amd64
./node_exporter

If the service to be monitored runs in Docker, copy the files into that container and start node_exporter there:

docker cp ../node_exporterxxxxxx <dockername>:/

After startup, open ip:9100/metrics. The main metrics are:
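To see what Prometheus actually reads on each scrape, here is a minimal Python sketch that downloads the /metrics page and parses the text exposition format into (name, labels, value) tuples. It is deliberately simplified: escaped quotes or commas inside label values are not handled and timestamps are ignored. `parse_metrics` and `fetch_metrics` are hypothetical helper names, not part of any library.

```python
import re
from urllib.request import urlopen

# "name value" or name{label="v",...} value; anything after the value is ignored.
# Simplified: no support for escaped quotes or commas inside label values.
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_metrics(text):
    """Return a list of (name, labels_dict, float_value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks and # HELP / # TYPE lines
            continue
        m = SAMPLE_RE.match(line)
        if not m:
            continue
        name, raw_labels, value = m.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))  # float() also accepts NaN/+Inf
    return samples


def fetch_metrics(host="localhost", port=9100):
    """Hypothetical helper: GET http://host:port/metrics from node_exporter."""
    with urlopen(f"http://{host}:{port}/metrics", timeout=5) as resp:
        return resp.read().decode("utf-8")
```

For example, `[v for n, _, v in parse_metrics(fetch_metrics("47.112.188.174")) if n == "node_load1"]` would pull out the current 1-minute load average, assuming port 9100 is reachable from where the script runs.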

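- node_cpu_seconds_total (named node_cpu before v0.16): CPU time per CPU and mode
- node_disk*: disk I/O
- node_filesystem*: filesystem usage
- node_load1: system load (1-minute load average)
- node_memory*: memory usage
- node_network*: network traffic
- node_time_seconds (named node_time before v0.16): current system time
- go_*: Go runtime metrics of node_exporter itself
- process_*: metrics about the node_exporter process itself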
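node_exporter 0.16 and later (including the 0.18.1 used here) expose CPU time as the counter `node_cpu_seconds_total{cpu, mode}`, so "CPU usage" has to be derived from how fast the idle counter grows. In Grafana this is usually a PromQL expression such as `100 * (1 - avg by (instance) (rate(node_cpu_seconds_total{mode="idle"}[5m])))`. The Python sketch below does the same arithmetic on two hypothetical snapshots of the idle counters, to show what that query computes; `cpu_usage_percent` is an illustrative name.

```python
def cpu_usage_percent(idle_t0, idle_t1, interval_s):
    """Approximate CPU usage (%) from two scrapes of the idle-mode CPU counters.

    idle_t0 / idle_t1: {cpu_id: idle_seconds} at the start / end of the interval.
    Same idea as: 100 * (1 - avg(rate(node_cpu_seconds_total{mode="idle"}[...])))
    """
    idle_rates = []
    for cpu, start in idle_t0.items():
        end = idle_t1[cpu]
        # Counters only increase; a drop means a restart, so treat it as a reset to 0.
        delta = end - start if end >= start else end
        idle_rates.append(delta / interval_s)  # idle seconds per second, 0..1
    avg_idle = sum(idle_rates) / len(idle_rates)
    return 100.0 * (1.0 - avg_idle)
```

With two CPUs that were idle for 9 s and 7 s of a 10 s window, the average idle fraction is 0.8, i.e. about 20% CPU usage.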

2. Write prometheus.yml, and mount it into the container when starting Prometheus with Docker.

vi prometheus.yml

node_exporter must be installed on the server being monitored. Here, port 9100 is the port exposed by the mall-portal service I started locally.

global:
  scrape_interval: 15s # By default, scrape targets every 15 seconds.
  evaluation_interval: 15s # Evaluate rules every 15 seconds.

scrape_configs:
  - job_name: prometheus
    static_configs:
      - targets: ['localhost:9090']
        labels:
          instance: prometheus

  - job_name: linux
    static_configs:
      - targets: ['47.112.188.174:9100']
        labels:
          instance: node

  - job_name: 'spring'
    # metrics_path is a job-level setting, not part of static_configs
    metrics_path: '/actuator/prometheus'
    static_configs:
      - targets: ['47.112.188.174:8081']

  - job_name: consul
    consul_sd_configs:
      # server is a single host:port string, not a list
      - server: '47.112.188.174:8500'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*mall.*
        action: keep
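The `consul` job relies on the relabel action `keep`: Prometheus joins the values of `source_labels` with `;` (the default separator) and keeps the target only if the regex matches the whole joined string (the regex is fully anchored). For Consul, `__meta_consul_tags` holds the service tags joined by `,` with a leading and trailing comma, e.g. `,mall,portal,`. The Python sketch below mimics that decision; `relabel_keep` is a hypothetical name, and Python's `re` stands in for the RE2 engine Prometheus actually uses (identical for a simple pattern like this one).

```python
import re


def relabel_keep(target_labels, source_labels, regex, separator=";"):
    """Sketch of the relabel action `keep` for a single target.

    Missing labels count as empty strings; the regex must match the
    entire joined value (Prometheus anchors it on both ends).
    """
    value = separator.join(target_labels.get(name, "") for name in source_labels)
    return re.fullmatch(regex, value) is not None
```

Because `.*mall.*` matches any substring, a tag such as `small` would also be kept; a stricter pattern like `.*,mall,.*` only matches the exact tag.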

Start Prometheus:

docker run --name prometheus -d -p 9090:9090 --privileged=true -v /usr/local/dockerdata/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml prom/prometheus --config.file=/etc/prometheus/prometheus.yml
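`docker run -d` returns before Prometheus has finished loading the config, so a script that checks the targets right away may hit a connection error. A minimal Python sketch, assuming Prometheus is published on localhost:9090 as in the command above, polls the `/-/ready` endpoint until it answers HTTP 200; `wait_until_ready` and its `fetch` parameter (injected so the logic can be tested offline) are hypothetical.

```python
import time
from urllib.request import urlopen


def wait_until_ready(base="http://localhost:9090", timeout_s=30.0, poll_s=1.0, fetch=None):
    """Poll Prometheus' /-/ready endpoint until it returns HTTP 200 or time runs out."""

    def default_fetch(url):
        with urlopen(url, timeout=2) as resp:
            return resp.status

    fetch = fetch or default_fetch
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            if fetch(f"{base}/-/ready") == 200:
                return True
        except OSError:
            pass  # connection refused, or HTTP 503 (HTTPError) while still starting
        time.sleep(poll_s)
    return False
```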

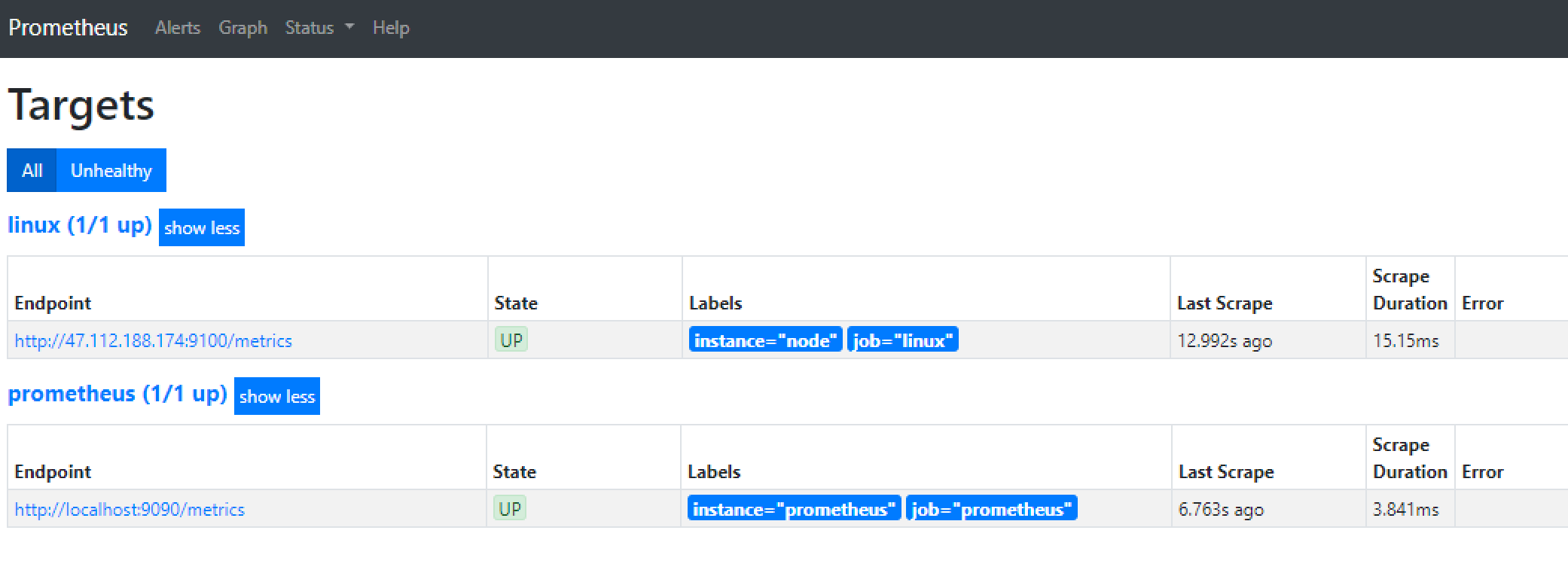

Prometheus data can be viewed on port 9090:

http://47.112.188.174:9090/targets

http://47.112.188.174:9090/graph (run queries here)
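The queries typed into the /graph page can also be sent to Prometheus' HTTP API at `/api/v1/query`, which returns JSON. A minimal Python sketch follows; `query_url`, `parse_vector` and `instant_query` are hypothetical helper names, and only instant-vector results (such as the query `up`) are handled.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def query_url(base, promql):
    """Build the URL for an instant query against Prometheus' HTTP API."""
    return f"{base}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector(body):
    """Turn an instant-vector response into [(labels, value), ...]."""
    if body.get("status") != "success":
        raise RuntimeError(body.get("error", "query failed"))
    # Each sample's "value" is [unix_timestamp, "value as a string"].
    return [(s["metric"], float(s["value"][1])) for s in body["data"]["result"]]


def instant_query(base, promql):
    with urlopen(query_url(base, promql), timeout=5) as resp:
        return parse_vector(json.load(resp))
```

For example, `instant_query("http://47.112.188.174:9090", "up")` should return one entry per scrape target, with value 1.0 for targets that are up and 0.0 for those that are down.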

In Grafana, add Prometheus as a data source, then view the dashboards.
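Adding the data source can also be scripted against Grafana's HTTP API (`POST /api/datasources`) instead of clicking through the UI. The Python sketch below only builds the request; it assumes Grafana listens on localhost:3000 with the default `admin`/`admin` login, and the data source name "Prometheus" and the helper `build_datasource_request` are hypothetical choices.

```python
import base64
import json
from urllib.request import Request


def build_datasource_request(grafana_url, prometheus_url, user="admin", password="admin"):
    """Build a POST /api/datasources request that registers Prometheus in Grafana."""
    payload = {
        "name": "Prometheus",
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",  # Grafana's backend proxies the queries to Prometheus
        "isDefault": True,
    }
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return Request(
        f"{grafana_url}/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
```

Sending it is then `urllib.request.urlopen(build_datasource_request("http://localhost:3000", "http://47.112.188.174:9090"))`; Grafana rejects a second data source with the same name, so this is meant to run once.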
