Why doesn't using multiprocessing in Python speed things up?

Reposted from: python使用了multiprocessing,为什么并没有加快? ("I used multiprocessing in Python, so why isn't it any faster?") - SegmentFault 思否

def calcutype(dataframe,model,xiangguandict):

'''主函数'''

typelist = {}

xiangguan = {}

res = {}

pool = multiprocessing.Pool(40)

for index, row in dataframe.iterrows():

scorearr = []

name = row['data_name1'].split(',') #data_name

descrip = row['data_descrip1'].split(',') #data_descrip

#计算得出分类,与相关度

res[index]=pool.apply_async(calcumodelnum, (name,descrip,model,xiangguandict))

pool.close()

pool.join()

for i in res:

#本条分类相关度计算完毕

typelist[i] = res[i].get()[0]

xiangguan[i] = res[i].get()[1]

return typelist,xiangguan

def calcumodelnum(***省略***):

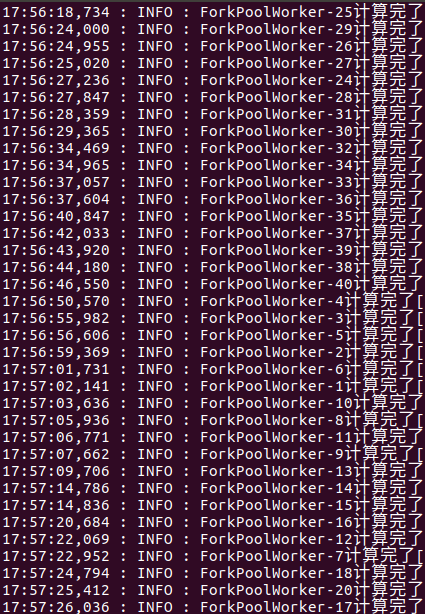

logging.info(multiprocessing.current_process().name+'计算完了')

return 结果

def main():

data1 = **省略***

model = **省略***

dict1 = **省略***

calcutype(data1,model,dict1)

if __name__ == '__main__':

main()

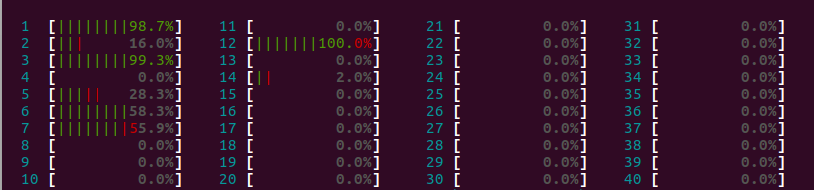

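As you can see from my code above, I'm running on a 40-core machine, so I started 40 processes. But looking at the CPU usage, even though there are plenty of tasks, the cores are nowhere near fully loaded: many of them sit idle, and during stretches like these they don't move at all.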

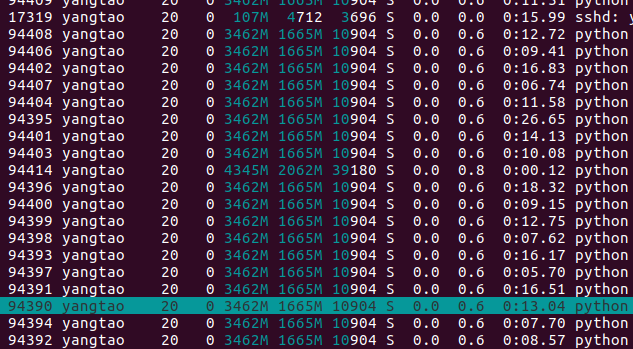

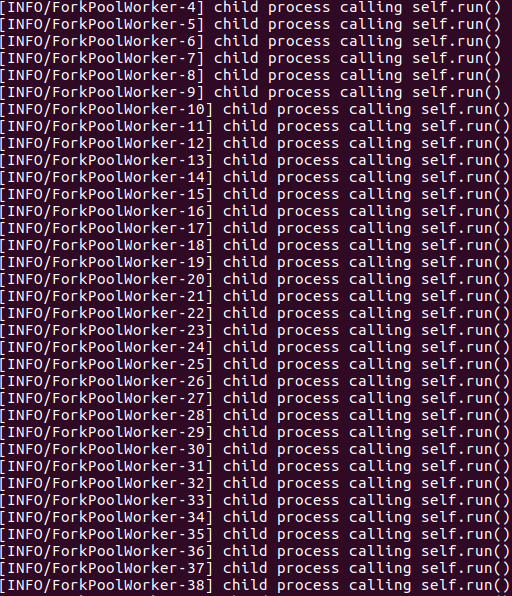

The log shows that 40 processes really were started, but those processes seem to just sit there after starting.

Here is the run log:

I also tried starting 10 processes, and the total run time was almost exactly as long as with 40!

What is going on here? Any pointers would be appreciated. (The for loop is very long, and the computation is heavy enough.)

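`multiprocessing.Pool` only starts multiple processes; it does not start one process per core. In other words, the Python interpreter itself does not balance load across cores or processors; that is decided by the operating system. If your work is particularly compute-intensive, the OS will indeed give it more cores, but that is not something Python or your code can control or specify.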

The `num` in `multiprocessing.Pool(num)` can be small or large; for I/O-bound work, for example, it can well exceed the number of CPUs.
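To make the I/O-bound case concrete, here is a minimal sketch in which each job only sleeps, standing in for waiting on disk or network (an assumption; `fake_io` and `timed_map` are hypothetical names). Because a sleeping process does not occupy a CPU, a pool with one process per job finishes far sooner than a two-process pool, even when that is more processes than the machine has CPUs:

```python
import time
from multiprocessing import Pool, cpu_count


def fake_io(task_id):
    # Hypothetical stand-in for an I/O-bound job (e.g. waiting on a network
    # reply): the process sleeps, so it occupies a pool slot but not a CPU.
    time.sleep(0.1)
    return task_id


def timed_map(num_processes, num_tasks):
    """Run num_tasks fake I/O jobs on a pool; return (results, elapsed seconds)."""
    start = time.perf_counter()
    with Pool(num_processes) as pool:
        results = pool.map(fake_io, range(num_tasks))
    return results, time.perf_counter() - start


if __name__ == '__main__':
    num_tasks = 16
    _, t_few = timed_map(2, num_tasks)           # about 16 * 0.1 s / 2 of sleeping
    _, t_many = timed_map(num_tasks, num_tasks)  # about 0.1 s of sleeping + start-up
    print(f"cpu_count()={cpu_count()}: 2 processes {t_few:.2f}s, "
          f"{num_tasks} processes {t_many:.2f}s")
```

Timings depend on the machine and the process start method, so no numbers are claimed here. For CPU-bound work like the question's, going beyond the core count does not buy extra throughput in the same way.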

Hardware resource allocation is decided by the operating system, so if you want every core to be busy, you need to approach it more from the OS side.
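One OS-side check that fits this advice is whether the process is allowed to run on all 40 cores at all: a CPU affinity mask (set, for example, with `taskset` or by a cpuset in a container or batch scheduler) can confine every worker to a subset of cores. A minimal sketch; `usable_cpu_count` is a hypothetical helper, and `os.sched_getaffinity` is only available on some platforms such as Linux:

```python
import os


def usable_cpu_count():
    """CPUs the OS currently lets this process run on.

    os.sched_getaffinity() exists only on some platforms (e.g. Linux); elsewhere
    fall back to os.cpu_count(), which counts every CPU in the machine.
    """
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))  # 0 means "the calling process"
    return os.cpu_count() or 1


if __name__ == '__main__':
    print("CPUs in the machine:", os.cpu_count())
    print("CPUs this process may use:", usable_cpu_count())
```

If the two numbers differ, a pool sized from the machine's CPU count starts more workers than there are cores available to run them.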

With the code modified as follows, it should be able to max out the CPUs:

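```python
import logging
from multiprocessing import Pool, cpu_count, current_process

data1 = ...  # the DataFrame (omitted in the original answer)
model = ...  # omitted in the original answer
dict1 = ...  # omitted in the original answer


def calcutype(row):
    index, data = row  # DataFrame.iterrows() yields (index, row Series) pairs
    name = data['data_name1'].split(',')        # data_name
    descrip = data['data_descrip1'].split(',')  # data_descrip
    result = calcumodelnum(name, descrip)
    logging.info(current_process().name + ' finished computing')
    # Return the result instead of storing it in a module-level dict: each worker
    # has its own copy of the globals, so such writes never reach the parent.
    return index, result


def calcumodelnum(name, des):
    """Your computation logic; expected to return (category, relevance)."""
    raise NotImplementedError


def main():
    typelist = {}
    xiangguan = {}
    pool = Pool(cpu_count())
    results = pool.map(calcutype, data1.iterrows())
    pool.close()
    pool.join()
    for index, (category, relevance) in results:
        # Category and relevance for this row are done
        typelist[index] = category
        xiangguan[index] = relevance
    return typelist, xiangguan


if __name__ == '__main__':
    main()
```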

Give it a try.
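One pitfall to keep in mind when adapting code like this: each worker process has its own copy of module-level globals, so a worker that writes its result into a global dict (instead of returning it) leaves the parent's dict empty. A minimal demonstration with a hypothetical `store` function:

```python
from multiprocessing import Pool

results = {}  # module-level dict living in the parent process


def store(i):
    # Runs in a worker: this writes to the worker's own copy of `results`.
    results[i] = i * i
    return i * i


if __name__ == '__main__':
    with Pool(2) as pool:
        returned = pool.map(store, range(5))
    print(results)   # {}  (the parent's dict was never touched)
    print(returned)  # [0, 1, 4, 9, 16]  (only return values come back)
```

Returning values from the worker function, and building the dicts in the parent from what `map` returns, avoids the problem.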

See also the other answers below; I accepted one of them at one point.

I am working on multiprocessing in Python. For example, consider the example given in the Python multiprocessing documentation (I have changed 100 to 1000000 in the example, just to consume more time). When I run this, I do see that `Pool()` is using all 4 processes, but I don't see each CPU going up to 100%. How can I get each CPU to 100% usage?

```python
from multiprocessing import Pool

def f(x):
    return x*x

if __name__ == '__main__':
    pool = Pool(processes=4)
    result = pool.map(f, range(10000000))
```

2 Answers

It is because `multiprocessing` requires interprocess communication between the main process and the worker processes behind the scenes, and in your case the communication overhead took more (wall-clock) time than the "actual" computation (`x * x`).

Try a "heavier" computation kernel instead, like:

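```python
import math
from functools import reduce

def f(x):
    # Python 3 port: reduce lives in functools and xrange became range.
    # The range starts at 1 so the first step never calls math.log(0)
    # (a ValueError) when x == 0.
    return reduce(lambda a, b: math.log(a + b), range(1, 10**5), x)
```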

Update (clarification)

I pointed out that the low CPU usage observed by the OP was due to the IPC overhead inherent in `multiprocessing`, but the OP didn't need to worry about it too much because the original computation kernel was far too "light" to be used as a benchmark. In other words, `multiprocessing` works worst with such an overly "light" kernel. If the OP implements real-world logic (which, I'm sure, will be somewhat "heavier" than `x * x`) on top of `multiprocessing`, the OP will achieve decent efficiency, I assure you. My argument is backed up by an experiment with the "heavy" kernel I presented.
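A rough way to rerun this kind of experiment is to time the same `map` serially and on a `Pool(4)`, once with the light `x * x` kernel and once with the heavy kernel. This is a sketch: `light`, `heavy` and `compare` are illustrative names, the heavy kernel is the one from this answer ported to Python 3, and the input sizes are arbitrary:

```python
import math
import time
from functools import reduce
from multiprocessing import Pool


def light(x):
    # The question's kernel: cheaper than shipping x and x*x between processes.
    return x * x


def heavy(x):
    # The answer's "heavier" kernel ported to Python 3 (range starts at 1 so
    # math.log never receives 0 when x == 0).
    return reduce(lambda a, b: math.log(a + b), range(1, 10**5), x)


def compare(func, inputs, processes=4):
    """Time map(func, inputs) serially and on a Pool; return (serial_s, pool_s)."""
    start = time.perf_counter()
    serial = list(map(func, inputs))
    t_serial = time.perf_counter() - start

    start = time.perf_counter()
    with Pool(processes) as pool:  # worker start-up is included in the timing
        parallel = pool.map(func, inputs)
    t_pool = time.perf_counter() - start

    assert serial == parallel  # same answers; only the timing differs
    return t_serial, t_pool


if __name__ == '__main__':
    for name, func, inputs in [("light", light, range(10**6)),
                               ("heavy", heavy, range(64))]:
        t_serial, t_pool = compare(func, inputs)
        print(f"{name}: serial {t_serial:.2f}s, Pool(4) {t_pool:.2f}s")
```

No figures are claimed here, since they depend on the machine; the point of the comparison is the ratio between the serial and pooled times for each kernel.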

@FilipMalczak, I hope my clarification makes sense to you.

By the way, there are some ways to improve the efficiency of `x * x` while using `multiprocessing`. For example, we can combine 1,000 jobs into one before we submit it to `Pool`, unless we are required to solve each job in real time (e.g., if you implement a REST API server, we shouldn't do it this way).
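The batching idea can be sketched two ways: combine jobs into lists yourself and submit one task per list, or let `Pool.map` do the grouping through its `chunksize` argument. A minimal sketch with hypothetical helpers `f_batch` and `batched`, using a batch size of 1,000 to match the answer:

```python
from multiprocessing import Pool


def f(x):
    return x * x


def f_batch(batch):
    # One task carries a whole batch, so the per-task IPC cost is paid once
    # per batch instead of once per element.
    return [f(x) for x in batch]


def batched(seq, size):
    """Split seq into consecutive lists of at most `size` items (hypothetical helper)."""
    return [seq[i:i + size] for i in range(0, len(seq), size)]


if __name__ == '__main__':
    data = list(range(100_000))
    with Pool(4) as pool:
        # Manual batching: 1,000 jobs combined into each submitted task.
        nested = pool.map(f_batch, batched(data, 1000), chunksize=1)
        manual = [y for batch in nested for y in batch]
        # Built-in alternative: let Pool.map group 1,000 items per task.
        builtin = pool.map(f, data, chunksize=1000)
    assert manual == builtin == [x * x for x in data]
```

When `chunksize` is omitted, `Pool.map` already picks a chunk size itself, whereas `Pool.imap` and `imap_unordered` default to one item per task, and `apply_async` (as used in the first question) always submits exactly one call per task.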

-

It looks like the right answer, and it will behave like one, but you missed the whole point of multiprocessing. – Jan 25, 2014 at 11:06

You're asking the wrong kind of question. `multiprocessing.Process` represents a process as understood by your operating system. `multiprocessing.Pool` is just a simple way to run several processes to do your work. The Python environment has nothing to do with balancing load across cores/processors.

If you want to control how processor time is given to processes, you should try tweaking your OS, not the Python interpreter.

Of course, "heavier" computations will be recognised by the system and may look as if they do just what you want, but in fact you have almost no control over process handling.

"Heavier" functions will just look heavier to your OS, and its usual reaction will be to assign more processor time to your processes, but that doesn't mean you did what you wanted to. And that's good, because that's the whole point of languages with a VM: you specify the logic, and the VM takes care of mapping it onto the operating system.

-

Thank you. It helps me better understand how multiprocessing works :) – Jan 25, 2014 at

… `print` most of the time, so it is what is called "I/O bound". … `print` starts until the `map` finishes. Synchronization overhead might account for some of the underutilization.