MPTCP (6): MPTCP Testing

MPTCP Testing

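1. Notes

- The test hosts run a kernel that supports MPTCPv1, with MPTCP enabled. The kernel version used throughout this test is 5.18.19.

- Make sure iproute2 and mptcpd are correctly configured on the test hosts; see their reference documentation.

- The server host may have only one WAN port, but the client host must have at least two WAN ports.

- The network environment used for this test is described in Sections 3 and 4.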
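
As a quick sanity check for the first note, the sketch below reads the net.mptcp.enabled sysctl through procfs. The helper name mptcp_enabled and its optional path argument are illustrative and not part of the original setup; the path argument exists only so the function can be tried against a scratch file.

```shell
# Hypothetical helper: succeeds when the MPTCP sysctl file contains "1".
# The default path is the procfs view of the net.mptcp.enabled sysctl.
mptcp_enabled() {
    sysctl_file="${1:-/proc/sys/net/mptcp/enabled}"
    [ -r "$sysctl_file" ] || return 1
    [ "$(cat "$sysctl_file")" = "1" ]
}

# Report the running kernel release together with the MPTCP state.
if mptcp_enabled; then
    echo "kernel $(uname -r): MPTCP enabled"
else
    echo "kernel $(uname -r): MPTCP disabled or not supported"
fi
```

If the check fails on a kernel that does support MPTCP, running sudo sysctl -w net.mptcp.enabled=1 should turn it on.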

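2. Reference documents

- The main reference for this test is a documentation repository. After cloning it locally, open docs/index.html in a browser to read it.

- Main chapter referenced: "Using Multipath TCP on recent Linux kernels"

- If you would rather not clone the repository, read the online version instead.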

3. Server configuration

3.1 IP address

- The server has a single network interface, eth0. IP address: 192.168.16.54, gateway: 192.168.16.1

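3.2 ip mptcp path management

- Run the following command. I did not fully understand the meaning of signal at the time of writing (2023/5/5); according to the ip-mptcp man page, an endpoint flagged signal is announced to the peer through the MPTCP ADD_ADDR option.

    sudo ip mptcp endpoint add 192.168.16.54 dev eth0 signal

- Set the limit on the maximum number of subflows

    sudo ip mptcp limits set subflows 4
    sudo ip mptcp limits set add_addr_accepted 4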

4. Client configuration

4.1 IP address

The client has two WAN ports and therefore two IP addresses. Both use the gateway 192.168.16.1.

    eth0: 192.168.16.15
    eth1: 192.168.16.53

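4.2 ip mptcp path management

- Run the following commands, which allow MPTCP subflows to be created through the given interface

    # Allow subflows to be created through eth0
    sudo ip mptcp endpoint add 192.168.16.15 dev eth0 subflow
    # Allow subflows to be created through eth1
    sudo ip mptcp endpoint add 192.168.16.53 dev eth1 subflow

- Set the limit on the maximum number of subflows

    sudo ip mptcp limits set subflows 4
    sudo ip mptcp limits set add_addr_accepted 4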
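
To confirm on either host that the endpoint and limit settings took effect, they can be read back with ip mptcp endpoint show and ip mptcp limits show. The sketch below adds a small check on top. The helper name subflow_limit_at_least is hypothetical, and it assumes the limits output is a single line of "name value" pairs such as "add_addr_accepted 4 subflows 4"; adjust the parsing if your iproute2 version prints something different.

```shell
# Hypothetical helper: reads `ip mptcp limits show` output on stdin and
# succeeds if the "subflows" value is at least the number given as $1.
# Assumes a line of "name value" pairs, e.g. "add_addr_accepted 4 subflows 4".
subflow_limit_at_least() {
    awk -v want="$1" '
        { for (i = 1; i < NF; i++)
              if ($i == "subflows" && $(i + 1) + 0 >= want + 0) ok = 1 }
        END { exit ok ? 0 : 1 }
    '
}

# Only query the kernel when the ip tool is available.
if command -v ip >/dev/null 2>&1; then
    ip mptcp endpoint show || true
    ip mptcp limits show | subflow_limit_at_least 4 \
        && echo "subflow limit OK" \
        || echo "subflow limit below 4 (or MPTCP unavailable)"
fi
```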

4.3 Routing table configuration

(1) The client also needs routing configured so that each of its interfaces can reach the server. A test inside a LAN should not strictly require this, but the routes are configured anyway to match a real deployment.

(2) Edit /etc/iproute2/rt_tables and add the two entries Teth0 and Teth1. The table names make it easy to see which routing table belongs to which interface when listing the tables:

    $ cat /etc/iproute2/rt_tables
    #
    # reserved values
    #
    255 local
    254 main
    253 default
    240 Teth0
    241 Teth1
    0 unspec
    #
    # local
    #
    #1 inr.ruhep

(3) Configure the eth0 routing table

    # create eth0 routing tables
    sudo ip rule add from 192.168.16.15 table Teth0
    sudo ip route add 192.168.16.0/24 dev eth0 scope link table Teth0
    sudo ip route add default via 192.168.16.1 dev eth0 table Teth0

    # show eth0 routing tables
    $ ip route show table Teth0
    default via 192.168.16.1 dev eth0
    192.168.16.0/24 dev eth0 scope link

(4) Configure the eth1 routing table

    # create eth1 routing tables
    sudo ip rule add from 192.168.16.53 table Teth1
    sudo ip route add 192.168.16.0/24 dev eth1 scope link table Teth1
    sudo ip route add default via 192.168.16.1 dev eth1 table Teth1

    # show eth1 routing tables
    $ ip route show table Teth1
    default via 192.168.16.1 dev eth1
    192.168.16.0/24 dev eth1 scope link
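
Steps (3) and (4) differ only in the source address, interface, and table name, so they can be generated from one template. The following is a minimal sketch: print_source_routing is a hypothetical helper that only prints the commands (a dry run) so they can be reviewed before being applied.

```shell
# Hypothetical helper: prints (does not run) the policy-routing commands
# for one interface.
# Arguments: source IP, device, table name, subnet, gateway.
print_source_routing() {
    src="$1"; dev="$2"; table="$3"; subnet="$4"; gw="$5"
    echo "ip rule add from $src table $table"
    echo "ip route add $subnet dev $dev scope link table $table"
    echo "ip route add default via $gw dev $dev table $table"
}

# Values taken from this test setup.
print_source_routing 192.168.16.15 eth0 Teth0 192.168.16.0/24 192.168.16.1
print_source_routing 192.168.16.53 eth1 Teth1 192.168.16.0/24 192.168.16.1
```

Piping the output to sudo sh applies the rules. Afterwards, ip rule show lists the two source rules, and ip route get 192.168.16.54 from 192.168.16.53 should report eth1 as the outgoing device.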

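5. Testing with netcat

(1) mptcpd must be installed correctly; otherwise mptcpize will be missing from the system.

(2) tcpdump usage guide

(3) Install netcat

    sudo apt-get install -y netcat

(4) Start netcat on the server, listening on port 12345

    mptcpize run nc -l 12345

(5) Run tcpdump on the client to capture packets on port 12345, using -vv to print detailed information

    sudo tcpdump -ni any port 12345 -vv

(6) Open a second terminal on the client, start netcat, connect to the server at 192.168.16.54:12345, and send a test string of the character a (67 bytes of payload in the capture)

    $ mptcpize run nc 192.168.16.54 12345
    aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa

(7) The tcpdump capture is as follows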

    tcpdump: data link type LINUX_SLL2
    tcpdump: listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes

    # 1. eth0 (192.168.16.15:59254) sends the SYN [S] that opens the TCP connection to the server 192.168.16.54:12345. Note the option: mptcp capable v1
    16:09:32.727979 eth0 Out IP (tos 0x0, ttl 64, id 5322, offset 0, flags [DF], proto TCP (6), length 64)
        192.168.16.15.59254 > 192.168.16.54.12345: Flags [S], cksum 0xa1c8 (incorrect -> 0x2337), seq 1143547619, win 65535, options [mss 1460,sackOK,TS val 1682140458 ecr 0,nop,wscale 7,mptcp capable v1], length 0

    # 2. The server sends its own SYN and acknowledges the client's SYN in the same packet [S.]
    16:09:32.728182 eth0 In IP (tos 0x0, ttl 64, id 0, offset 0, flags [DF], proto TCP (6), length 72)
        192.168.16.54.12345 > 192.168.16.15.59254: Flags [S.], cksum 0x0122 (correct), seq 3458909688, ack 1143547620, win 65160, options [mss 1460,sackOK,TS val 473542376 ecr 1682140458,nop,wscale 7,mptcp capable v1 {0x55e5e6bfda688d17}], length 0

    # 3. The client acknowledges the server's SYN [.]; the three-way handshake is complete
    16:09:32.728345 eth0 Out IP (tos 0x0, ttl 64, id 5323, offset 0, flags [DF], proto TCP (6), length 72)
        192.168.16.15.59254 > 192.168.16.54.12345: Flags [.], cksum 0xa1d0 (incorrect -> 0x6312), seq 1, ack 1, win 512, options [nop,nop,TS val 1682140458 ecr 473542376,mptcp capable v1 {0xb68d501d6d0c359d,0x55e5e6bfda688d17}], length 0

    # 4. The client sends a 67-byte payload to the server, which matches the test string typed in step (6)
    16:09:50.591297 eth0 Out IP (tos 0x0, ttl 64, id 5324, offset 0, flags [DF], proto TCP (6), length 143)
        192.168.16.15.59254 > 192.168.16.54.12345: Flags [P.], cksum 0xa217 (incorrect -> 0x7428), seq 1:68, ack 1, win 512, options [nop,nop,TS val 1682158321 ecr 473542376,mptcp capable v1 {0xb68d501d6d0c359d,0x55e5e6bfda688d17},nop,nop], length 67

    # 5. The server replies with an ACK [.], ack = 68
    16:09:50.591485 eth0 In IP (tos 0x0, ttl 64, id 5783, offset 0, flags [DF], proto TCP (6), length 64)
        192.168.16.54.12345 > 192.168.16.15.59254: Flags [.], cksum 0xff34 (correct), seq 1, ack 68, win 509, options [nop,nop,TS val 473560240 ecr 1682158321,mptcp dss ack 6184476764236969448], length 0

    # 6. MPTCP now starts creating a subflow: eth1 (192.168.16.53:39509) opens a connection to the server 192.168.16.54:12345. Note the option: mptcp join id 3 token 0xa781e174 nonce 0x1069cb10
    # Question: why is the subflow created only after the first data has been sent, rather than before any data is sent?
    # Resolved: see Fig. 13 of https://mptcp-apps.github.io/mptcp-doc/mptcp.html, which shows that the MPTCP protocol is specified this way
    16:09:50.591875 eth1 Out IP (tos 0x0, ttl 64, id 21432, offset 0, flags [DF], proto TCP (6), length 72)
        192.168.16.53.39509 > 192.168.16.54.12345: Flags [S], cksum 0xa1f6 (incorrect -> 0xa632), seq 3051078268, win 65535, options [mss 1460,sackOK,TS val 2165143953 ecr 0,nop,wscale 7,mptcp join id 3 token 0xa781e174 nonce 0x1069cb10], length 0

    # 7. The server sends a SYN to eth1 and acknowledges the client's SYN in the same packet [S.]. Note the option: mptcp join id 0 hmac 0x56c46c3b8b417d nonce 0x2e91a3d2
    16:09:50.592361 eth1 In IP (tos 0x0, ttl 64, id 0, offset 0, flags [DF], proto TCP (6), length 76)
        192.168.16.54.12345 > 192.168.16.53.39509: Flags [S.], cksum 0x4763 (correct), seq 619200371, ack 3051078269, win 65160, options [mss 1460,sackOK,TS val 3121185292 ecr 2165143953,nop,wscale 7,mptcp join id 0 hmac 0x56c46c3b8b417d nonce 0x2e91a3d2], length 0

    # 8. The client acknowledges the server's SYN [.]; the subflow's three-way handshake is complete and the subflow is established
    16:09:50.592485 eth1 Out IP (tos 0x0, ttl 64, id 21433, offset 0, flags [DF], proto TCP (6), length 76)
        192.168.16.53.39509 > 192.168.16.54.12345: Flags [.], cksum 0xa1fa (incorrect -> 0x6395), seq 1, ack 1, win 512, options [nop,nop,TS val 2165143953 ecr 3121185292,mptcp join hmac 0x505d3849a1f214befb11ee9a55b25f4893fa9148], length 0

    16:09:50.592962 eth1 In IP (tos 0x0, ttl 64, id 32872, offset 0, flags [DF], proto TCP (6), length 64)
    ...
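
Reading the capture by eye works for a handful of packets. For longer captures, a rough summary can be obtained by counting the packets that carry MP_CAPABLE and MP_JOIN options. The sketch below is one way to do that; summarize_mptcp is a hypothetical helper, and it relies only on the "mptcp capable" and "mptcp join" strings that tcpdump prints in the capture above.

```shell
# Hypothetical helper: reads tcpdump text output on stdin and prints how many
# lines carry an MP_CAPABLE option and how many carry an MP_JOIN option,
# matching the "mptcp capable" / "mptcp join" strings printed by tcpdump -vv.
summarize_mptcp() {
    awk '
        /mptcp capable/ { capable++ }
        /mptcp join/    { joins++ }
        END { printf "capable=%d join=%d\n", capable, joins }
    '
}

# Example use on the client (stops after 20 packets):
#   sudo tcpdump -ni any port 12345 -vv -c 20 2>/dev/null | summarize_mptcp
```

A non-zero join count indicates that at least one additional subflow handshake was attempted; a count of zero suggests the connection stayed on the initial path.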

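6. Testing with iperf3

(1) mptcpd must be installed correctly; otherwise mptcpize will be missing from the system.

(2) Install iperf3

    sudo apt-get install -y iperf3

(3) Run the iperf server on the server host, on port 8888

    mptcpize run iperf3 -s -p 8888

(4) Run the iperf client on the client host, bound to the address of eth0, and test TCP uplink and downlink separately. Because the client is bound to eth0, no iperf3 traffic should appear on eth1 if MPTCP is not in effect. Conversely, iperf3 traffic observed on eth1 shows that MPTCP is working.

    # TCP uplink test
    mptcpize run iperf3 -c 192.168.16.54 -p 8888 -B 192.168.16.15 -P 5 -t 30
    # TCP downlink test
    mptcpize run iperf3 -c 192.168.16.54 -p 8888 -B 192.168.16.15 -P 5 -t 30 -R

(5) Install the traffic monitor nload on the client to watch real-time traffic on all interfaces

    sudo apt-get -y install nload
    sudo nload -m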

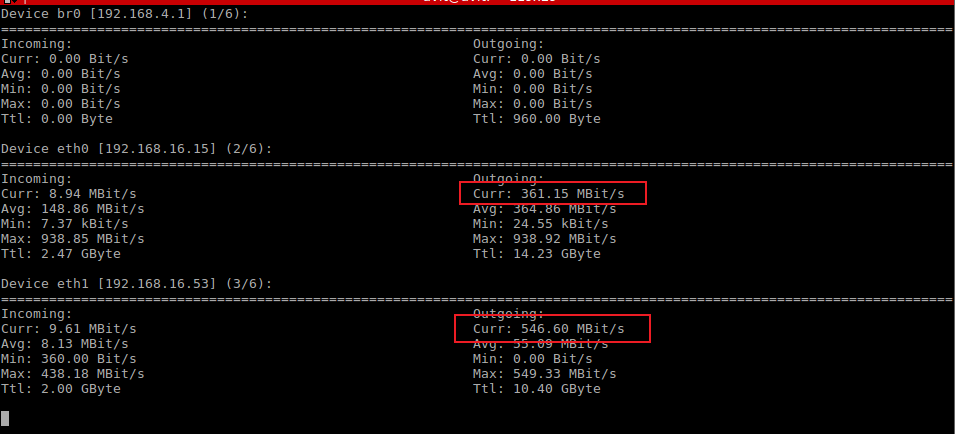

(6)TCP上行测试时,各个网口实时流量如下

- 如上图所示,两个网口

eth0和eth1上均有大流量在传输,MPTCP测试成功 - 并且在

iperf3测速过程中移除一条网线,测速不会中断 - 当然也可以通过

tcpdump抓包进行分析,在此偷个懒,就不演示了
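
nload is interactive. For a scriptable version of the same check, the per-interface counters that Linux exposes under /sys/class/net/<dev>/statistics can be sampled before and after a run. The following is a minimal sketch; tx_bytes, tx_delta, and the SYSFS_NET variable are hypothetical names introduced here for illustration.

```shell
# Root of the per-interface counters; overridable for experiments.
SYSFS_NET="${SYSFS_NET:-/sys/class/net}"

# Hypothetical helper: prints the transmitted-byte counter of interface $1.
tx_bytes() {
    cat "$SYSFS_NET/$1/statistics/tx_bytes"
}

# Hypothetical helper: prints how many bytes interface $1 sent while the
# command given in the remaining arguments was running.
tx_delta() {
    dev="$1"; shift
    before=$(tx_bytes "$dev") || return 1
    "$@" >/dev/null 2>&1 || true
    after=$(tx_bytes "$dev") || return 1
    echo $((after - before))
}

# Example use on the client during the uplink test:
#   tx_delta eth1 mptcpize run iperf3 -c 192.168.16.54 -p 8888 -B 192.168.16.15 -t 30
```

Since iperf3 is bound to the eth0 address, a large byte count reported for eth1 suggests that an MPTCP subflow carried part of the traffic.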

Author: zhijun

Link: https://www.cnblogs.com/zhijun1996/p/17430244.html

License: This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivs 2.5 China Mainland license.
