To install Hadoop, see: Installing Hadoop on CentOS

◆Download winutils.exe and hadoop.dll

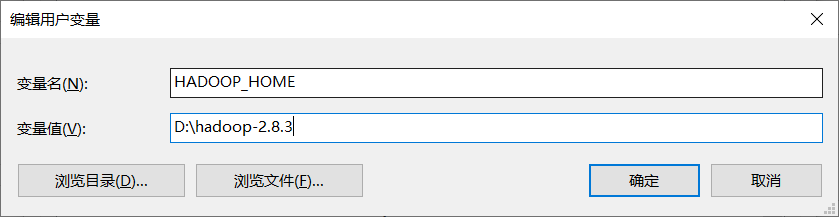

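Working with Hadoop from Windows requires winutils.exe and hadoop.dll, which can be downloaded from https://github.com/steveloughran/winutils. This article uses the hadoop-2.8.3 build. It does not match the Hadoop version installed on the server, but it worked in testing.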
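A misplaced download is a common cause of "winutils not found" errors, so it can help to check that both files actually sit in the bin folder before running any HDFS code. The sketch below is plain JDK; WinutilsCheck and missingFiles are hypothetical names, and the bin layout is assumed to match the per-version folders in the winutils repository (e.g. hadoop-2.8.3\bin).

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical helper (not part of Hadoop): reports which Windows native
 * helpers are missing from the bin folder under a Hadoop home directory.
 */
final class WinutilsCheck {
    // The two files the article says are required on Windows
    static final String[] REQUIRED = {"winutils.exe", "hadoop.dll"};

    private WinutilsCheck() {
    }

    /** Returns the required file names that are not present under hadoopHome/bin. */
    static List<String> missingFiles(File hadoopHome) {
        File bin = new File(hadoopHome, "bin");
        List<String> missing = new ArrayList<>();
        for (String name : REQUIRED) {
            if (!new File(bin, name).isFile()) {
                missing.add(name);
            }
        }
        return missing;
    }
}
```

For example, WinutilsCheck.missingFiles(new File("D:\\hadoop-2.8.3")) should return an empty list when both files are in place.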

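Configure the environment variable HADOOP_HOME to point to the unpacked directory whose bin folder contains winutils.exe and hadoop.dll (for example D:\hadoop-2.8.3).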

Or set it in Java code, before any Hadoop class is used (for example at the start of the test setup):

    System.setProperty("hadoop.home.dir", "D:\\hadoop-2.8.3");
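Hadoop 2.x checks the hadoop.home.dir system property first and falls back to the HADOOP_HOME environment variable. The following is a simplified sketch of that lookup order, useful for confirming which setting a given run will pick up; it approximates the behavior for illustration and is not Hadoop's actual Shell code. HadoopHomeResolver is a hypothetical name, and treating an empty value as unset is an assumption of this sketch.

```java
import java.util.Optional;

/**
 * Hypothetical helper: approximates the order in which Hadoop 2.x looks up
 * its home directory (system property first, then environment variable).
 */
final class HadoopHomeResolver {
    static final String PROPERTY = "hadoop.home.dir"; // set via System.setProperty
    static final String ENV = "HADOOP_HOME";          // set in the Windows environment

    private HadoopHomeResolver() {
    }

    static Optional<String> resolve() {
        String home = System.getProperty(PROPERTY);
        if (home == null || home.isEmpty()) {
            // Fall back to the environment variable
            home = System.getenv(ENV);
        }
        return (home == null || home.isEmpty()) ? Optional.empty() : Optional.of(home);
    }
}
```

Because the property is checked first, a value set in code overrides the environment variable for that JVM only.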

◆Modify core-site.xml

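Set fs.defaultFS to hdfs://HOST_IP:9000 rather than localhost, so that the NameNode listens on an address the Windows machine can reach: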

    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.107.141:9000</value>
    </property>
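Hadoop's own Configuration class parses core-site.xml itself, so the following plain-JDK sketch is only meant to illustrate the file's name/value structure and how the fs.defaultFS URI breaks down into the host and port that the Java client later connects to. CoreSiteReader, getProperty and describe are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

/** Hypothetical helper: reads one property value from core-site.xml content. */
final class CoreSiteReader {
    private CoreSiteReader() {
    }

    /** Returns the value of the property whose name matches, or null if absent. */
    static String getProperty(String xml, String name) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        NodeList props = doc.getElementsByTagName("property");
        for (int i = 0; i < props.getLength(); i++) {
            Element prop = (Element) props.item(i);
            Node nameNode = prop.getElementsByTagName("name").item(0);
            Node valueNode = prop.getElementsByTagName("value").item(0);
            if (nameNode != null && valueNode != null
                    && name.equals(nameNode.getTextContent().trim())) {
                return valueNode.getTextContent().trim();
            }
        }
        return null;
    }

    /** Splits an hdfs://host:port URI into its parts, e.g. for logging. */
    static String describe(String defaultFs) {
        URI uri = URI.create(defaultFs);
        return "scheme=" + uri.getScheme() + ", host=" + uri.getHost() + ", port=" + uri.getPort();
    }
}
```

With the configuration above, describe("hdfs://192.168.107.141:9000") gives "scheme=hdfs, host=192.168.107.141, port=9000", which is the same address passed to FileSystem.get in the Java code.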

◆pom.xml (keep the hadoop-client version as close as possible to the installed Hadoop version)

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.zhi.test</groupId>
        <artifactId>hadoop-test</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <packaging>jar</packaging>

        <name>hadoop-test</name>
        <url>http://maven.apache.org</url>

        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <!-- The test class uses multi-catch, which needs Java 7+ -->
            <maven.compiler.source>1.8</maven.compiler.source>
            <maven.compiler.target>1.8</maven.compiler.target>
            <hadoop.version>2.9.2</hadoop.version>
            <!-- Logger -->
            <log4j2.version>2.12.1</log4j2.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>${hadoop.version}</version>
                <!-- Exclude log4j 1.x; the log4j-1.2-api bridge below routes its calls to log4j2 -->
                <exclusions>
                    <exclusion>
                        <groupId>log4j</groupId>
                        <artifactId>log4j</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
            <!-- Logger (log4j2) -->
            <dependency>
                <groupId>org.apache.logging.log4j</groupId>
                <artifactId>log4j-core</artifactId>
                <version>${log4j2.version}</version>
            </dependency>
            <!-- Log4j 1.x API Bridge -->
            <dependency>
                <groupId>org.apache.logging.log4j</groupId>
                <artifactId>log4j-1.2-api</artifactId>
                <version>${log4j2.version}</version>
            </dependency>
            <dependency>
                <groupId>junit</groupId>
                <artifactId>junit</artifactId>
                <version>4.12</version>
            </dependency>
        </dependencies>
    </project>
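To spot a client/server mismatch early, the two version strings can be compared before running the tests. This is a hypothetical sketch: it assumes the server version is read from the output of `hadoop version` on the server, and the client version could come from org.apache.hadoop.util.VersionInfo.getVersion() in hadoop-client. Treating a shared major.minor line (e.g. 2.9.x) as "close enough" is an assumption of this sketch, not an official Hadoop compatibility rule.

```java
/** Hypothetical helper: checks whether two Hadoop versions share major.minor. */
final class VersionCheck {
    private VersionCheck() {
    }

    /** Returns true if both versions have the same major and minor numbers, e.g. 2.9.x. */
    static boolean sameMinorLine(String client, String server) {
        String[] c = client.trim().split("\\.");
        String[] s = server.trim().split("\\.");
        return c.length >= 2 && s.length >= 2
                && c[0].equals(s[0]) && c[1].equals(s[1]);
    }
}
```

For instance, sameMinorLine("2.9.2", "2.8.3") returns false, which would flag the kind of mismatch the pom comment warns about.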

◆Java code

    package com.zhi.test.hadoop;

    import java.io.FileInputStream;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;
    import org.apache.logging.log4j.LogManager;
    import org.apache.logging.log4j.Logger;
    import org.junit.After;
    import org.junit.Before;
    import org.junit.Test;

    /**
     * HDFS test
     *
     * @author zhi
     * @since 2019-09-10 18:28:24
     */
    public class HadoopTest {
        private Logger logger = LogManager.getLogger(this.getClass());
        private FileSystem fileSystem = null;

        @Before
        public void before() throws Exception {
            // Needed only if the HADOOP_HOME environment variable is not set
            // System.setProperty("hadoop.home.dir", "D:\\hadoop-2.8.3");
            Configuration configuration = new Configuration();
            // Connect to the NameNode as HDFS user "root"
            fileSystem = FileSystem.get(new URI("hdfs://192.168.107.141:9000"), configuration, "root");
        }

        @After
        public void after() throws Exception {
            if (fileSystem != null) {
                fileSystem.close();
            }
        }

        /**
         * Create a directory
         */
        @Test
        public void mkdir() {
            try {
                boolean result = fileSystem.mkdirs(new Path("/test"));
                logger.info("Create directory result: {}", result);
            } catch (IllegalArgumentException | IOException e) {
                logger.error("Failed to create directory", e);
            }
        }

        /**
         * Upload a file
         */
        @Test
        public void uploadFile() {
            String fileName = "hadoop.txt";
            // try-with-resources closes both streams, so copyBytes is told not to (false)
            try (InputStream input = new FileInputStream("F:\\" + fileName);
                    OutputStream output = fileSystem.create(new Path("/test/" + fileName))) {
                IOUtils.copyBytes(input, output, 4096, false);
                logger.info("File uploaded successfully");
            } catch (IllegalArgumentException | IOException e) {
                logger.error("Failed to upload file", e);
            }
        }

        /**
         * Download a file
         */
        @Test
        public void downFile() {
            String fileName = "hadoop.txt";
            // The local directory F:\down must already exist
            try (InputStream input = fileSystem.open(new Path("/test/" + fileName));
                    OutputStream output = new FileOutputStream("F:\\down\\" + fileName)) {
                IOUtils.copyBytes(input, output, 4096, false);
                logger.info("File downloaded successfully");
            } catch (IllegalArgumentException | IOException e) {
                logger.error("Failed to download file", e);
            }
        }

        /**
         * Delete a file
         */
        @Test
        public void deleteFile() {
            String fileName = "hadoop.txt";
            try {
                // true = recursive; it only matters when the path is a non-empty directory
                boolean result = fileSystem.delete(new Path("/test/" + fileName), true);
                logger.info("Delete file result: {}", result);
            } catch (IllegalArgumentException | IOException e) {
                logger.error("Failed to delete file", e);
            }
        }

        /**
         * List files
         */
        @Test
        public void listFiles() {
            try {
                // Lists only the direct children of the root directory (not recursive)
                FileStatus[] statuses = fileSystem.listStatus(new Path("/"));
                for (FileStatus file : statuses) {
                    logger.info("Found file or directory, name: {}, is file: {}", file.getPath().getName(), file.isFile());
                }
            } catch (IllegalArgumentException | IOException e) {
                logger.error("Failed to list files", e);
            }
        }
    }
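The last argument of Hadoop's IOUtils.copyBytes(in, out, buffSize, close) controls whether it closes both streams when it finishes; when the streams are already managed by try-with-resources or a finally block, passing false avoids closing them twice. The plain-JDK sketch below shows roughly what such a buffered copy does. StreamCopy is a hypothetical name, and unlike Hadoop's void method it returns the byte count for convenience.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

/** Hypothetical helper: a buffered stream copy similar in spirit to IOUtils.copyBytes. */
final class StreamCopy {
    private StreamCopy() {
    }

    /**
     * Copies all bytes from in to out through a buffer of buffSize bytes.
     * If close is true, both streams are closed afterwards, even on error.
     * Returns the number of bytes copied.
     */
    static long copyBytes(InputStream in, OutputStream out, int buffSize, boolean close)
            throws IOException {
        byte[] buf = new byte[buffSize];
        long total = 0;
        try {
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
                total += n;
            }
            out.flush();
        } finally {
            if (close) {
                // Close the output first, and make sure the input is closed even if that fails
                try {
                    out.close();
                } finally {
                    in.close();
                }
            }
        }
        return total;
    }
}
```

A 4096-byte buffer, as in the tests, is a reasonable default; a smaller one just means more read/write iterations.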

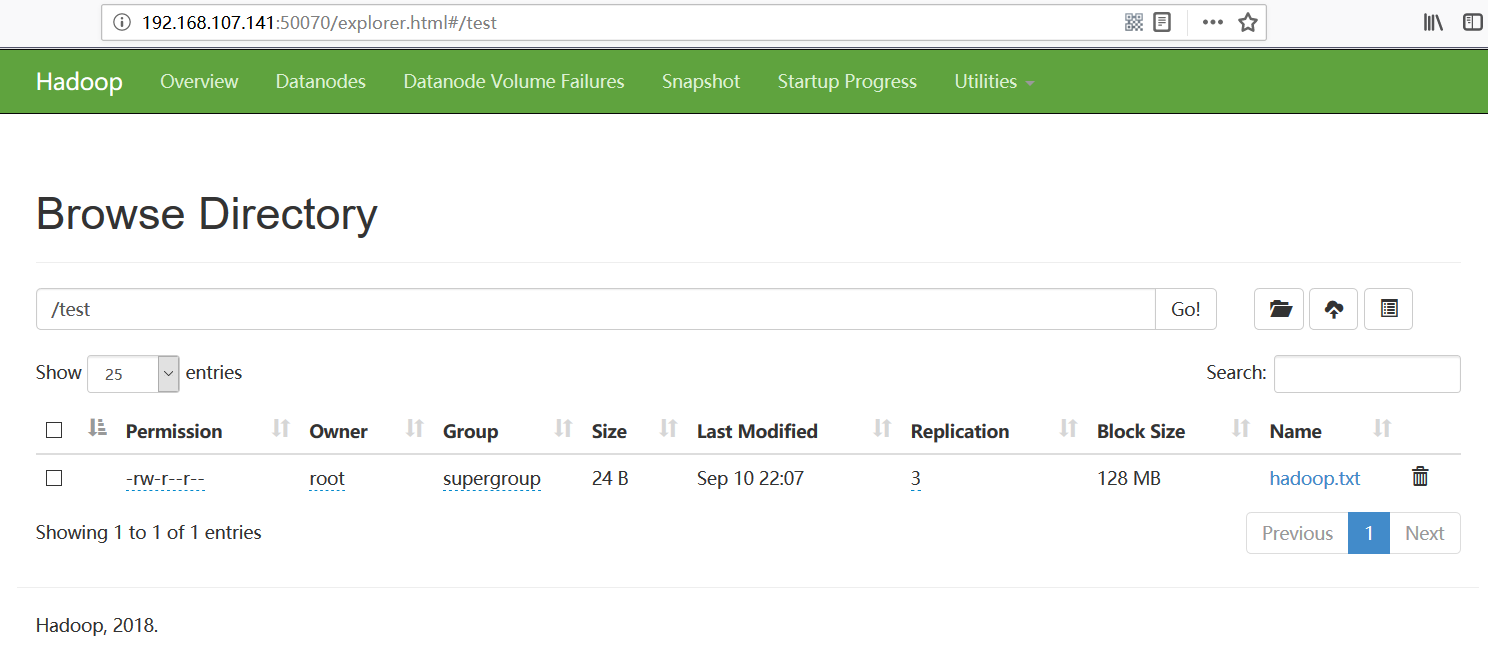

Open http://192.168.107.141:50070/explorer.html# to browse the uploaded file:
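The file browser on port 50070 uses the WebHDFS REST API behind the scenes (50070 is the Hadoop 2.x NameNode HTTP port; Hadoop 3.x moved it to 9870). As a sketch, a directory listing can also be requested directly with a URL of the form built below. WebHdfsUrls is a hypothetical helper, and user.name=root simply mirrors the user passed to FileSystem.get in the test class.

```java
import java.net.URI;
import java.net.URISyntaxException;

/** Hypothetical helper: builds WebHDFS REST URLs against the NameNode HTTP port. */
final class WebHdfsUrls {
    private WebHdfsUrls() {
    }

    /**
     * Returns http://host:httpPort/webhdfs/v1/path?op=OP&user.name=USER.
     * The multi-argument URI constructor percent-encodes illegal characters such as spaces.
     */
    static URI build(String host, int httpPort, String hdfsPath, String op, String user) {
        String path = hdfsPath.startsWith("/") ? hdfsPath : "/" + hdfsPath;
        try {
            return new URI("http", null, host, httpPort, "/webhdfs/v1" + path,
                    "op=" + op + "&user.name=" + user, null);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Invalid WebHDFS URL parts", e);
        }
    }
}
```

Opening build("192.168.107.141", 50070, "/test", "LISTSTATUS", "root") in a browser should return a JSON FileStatuses object, assuming WebHDFS is enabled (dfs.webhdfs.enabled, which defaults to true in Hadoop 2.x).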