A Complete Deployment Example on a Kubernetes Cluster: Guestbook

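Index post: Kubernetes Learning Series

Prerequisites for this article: "Deploying a Kubernetes Cluster on CentOS 7" and "Deploying the skyDNS Service on a Kubernetes Cluster"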

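In this example we create one redis-master, two redis-slave instances, and three frontend instances. The slaves replicate the master's data in real time; the frontend writes data to the master and then reads it back from the slaves. All calls between components (a slave finding the master to sync data, the frontend finding the master to write, the frontend finding a slave to read) are resolved through DNS.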
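As a rough illustration of the DNS-based lookup, the sketch below builds the fully qualified name that a Service normally receives in cluster DNS, `<service>.<namespace>.svc.<cluster-domain>`. The `default` namespace and the `cluster.local` domain are assumptions (the domain depends on how the cluster DNS was configured), and `service_fqdn` is a hypothetical helper, not part of Kubernetes.

```shell
#!/bin/sh
# Hypothetical helper: print the DNS name of a Service.
# Usage: service_fqdn <service> [namespace] [cluster-domain]
# Assumed defaults: namespace "default", cluster domain "cluster.local".
service_fqdn() {
  echo "$1.${2:-default}.svc.${3:-cluster.local}"
}

# The two Services that the frontend and the slaves look up by name.
for svc in redis-master redis-slave; do
  service_fqdn "$svc"
done
```

Within the same namespace the short name (for example `redis-master`) normally resolves as well, so the components in this example should not need the full name.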

1. Preparation

1.1 Image preparation

This example depends on the following images; please prepare them in advance:

| Image | Image ID |
|---|---|
| docker.io/redis:latest | 1a8a9ee54eb7 |
| registry.access.redhat.com/rhel7/pod-infrastructure:latest | 34d3450d733b |
| gcr.io/google_samples/gb-frontend:v3 | c038466384ab |
| gcr.io/google_samples/gb-redisslave:v1 | 5f026ddffa27 |
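One possible way to prepare the images is to pull them with Docker, typically on every node that may run the pods. The sketch below only prints the `docker pull` commands by default; the `DRY_RUN` switch and the `pull_images` helper are assumptions introduced for illustration, and whether each registry is reachable from your nodes is not verified here.

```shell
#!/bin/sh
# Images listed in section 1.1.
IMAGES="docker.io/redis:latest
registry.access.redhat.com/rhel7/pod-infrastructure:latest
gcr.io/google_samples/gb-frontend:v3
gcr.io/google_samples/gb-redisslave:v1"

# Hypothetical helper: print the pull commands by default;
# set DRY_RUN=0 to actually run them.
pull_images() {
  for img in $IMAGES; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "docker pull $img"
    else
      docker pull "$img" || return 1
    fi
  done
}

pull_images
```

Running it with `DRY_RUN=0` on a node performs the pulls and stops at the first failure.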

1.2 Environment preparation

A running Kubernetes environment with Cluster DNS is required, as shown below:

    [root@k8s-master ~]# kubectl cluster-info
    Kubernetes master is running at http://localhost:8080
    KubeDNS is running at http://localhost:8080/api/v1/proxy/namespaces/kube-system/services/kube-dns
    kubernetes-dashboard is running at http://localhost:8080/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard
    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
    [root@k8s-master ~]# kubectl get componentstatus
    NAME                 STATUS    MESSAGE              ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health": "true"}
    [root@k8s-master ~]# kubectl get nodes
    NAME         STATUS    AGE
    k8s-node-1   Ready     7d
    k8s-node-2   Ready     7d
    [root@k8s-master ~]# kubectl get deployment --all-namespaces
    NAMESPACE     NAME                          DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    kube-system   kube-dns                      1         1         1            1           5d
    kube-system   kubernetes-dashboard-latest   1         1         1            1           6d
    [root@k8s-master ~]#

2. Run redis-master

2.1 YAML files

1)redis-master-controller.yaml

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: redis-master
      labels:
        name: redis-master
    spec:
      replicas: 1
      selector:
        name: redis-master
      template:
        metadata:
          labels:
            name: redis-master
        spec:
          containers:
          - name: master
            image: redis
            ports:
            - containerPort: 6379

2)redis-master-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: redis-master
      labels:
        name: redis-master
    spec:
      ports:
        # the port that this service should serve on
      - port: 6379
        targetPort: 6379
      selector:
        name: redis-master

2.2 Create the RC and Service

Run on the master:

    [root@k8s-master yaml]# kubectl create -f redis-master-controller.yaml
    replicationcontroller "redis-master" created
    [root@k8s-master yaml]# kubectl create -f redis-master-service.yaml
    service "redis-master" created
    [root@k8s-master yaml]# kubectl get rc
    NAME           DESIRED   CURRENT   READY     AGE
    redis-master   1         1         1         1d
    [root@k8s-master yaml]# kubectl get pod
    NAME                 READY     STATUS    RESTARTS   AGE
    redis-master-5wyku   1/1       Running   0          1d

3. Run redis-slave

3.1 YAML files

1)redis-slave-controller.yaml

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: redis-slave
      labels:
        name: redis-slave
    spec:
      replicas: 2
      selector:
        name: redis-slave
      template:
        metadata:
          labels:
            name: redis-slave
        spec:
          containers:
          - name: worker
            image: gcr.io/google_samples/gb-redisslave:v1
            env:
            - name: GET_HOSTS_FROM
              value: dns
            ports:
            - containerPort: 6379

2)redis-slave-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: redis-slave
      labels:
        name: redis-slave
    spec:
      ports:
      - port: 6379
      selector:
        name: redis-slave

3.2 Create the RC and Service

Run on the master:

    [root@k8s-master yaml]# kubectl create -f redis-slave-controller.yaml
    replicationcontroller "redis-slave" created
    [root@k8s-master yaml]# kubectl create -f redis-slave-service.yaml
    service "redis-slave" created
    [root@k8s-master yaml]# kubectl get rc
    NAME           DESIRED   CURRENT   READY     AGE
    redis-master   1         1         1         1d
    redis-slave    2         2         2         44m
    [root@k8s-master yaml]# kubectl get pod
    NAME                 READY     STATUS    RESTARTS   AGE
    redis-master-5wyku   1/1       Running   0          1d
    redis-slave-7h295    1/1       Running   0          44m
    redis-slave-r355y    1/1       Running   0          44m

4. Run frontend

4.1 YAML files

1)frontend-controller.yaml

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: frontend
      labels:
        name: frontend
    spec:
      replicas: 3
      selector:
        name: frontend
      template:
        metadata:
          labels:
            name: frontend
        spec:
          containers:
          - name: frontend
            image: gcr.io/google_samples/gb-frontend:v3
            env:
            - name: GET_HOSTS_FROM
              value: dns
            ports:
            - containerPort: 80

2)frontend-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: frontend
      labels:
        name: frontend
    spec:
      type: NodePort
      ports:
      - port: 80
        nodePort: 30001
      selector:
        name: frontend

4.2 Create the RC and Service

Run on the master:

    [root@k8s-master yaml]# kubectl create -f frontend-controller.yaml
    replicationcontroller "frontend" created
    [root@k8s-master yaml]# kubectl create -f frontend-service.yaml
    service "frontend" created
    [root@k8s-master yaml]# kubectl get rc
    NAME           DESIRED   CURRENT   READY     AGE
    frontend       3         3         3         28m
    redis-master   1         1         1         1d
    redis-slave    2         2         2         44m
    [root@k8s-master yaml]# kubectl get pod
    NAME                 READY     STATUS    RESTARTS   AGE
    frontend-ax654       1/1       Running   0          29m
    frontend-k8caj       1/1       Running   0          29m
    frontend-x6bhl       1/1       Running   0          29m
    redis-master-5wyku   1/1       Running   0          1d
    redis-slave-7h295    1/1       Running   0          44m
    redis-slave-r355y    1/1       Running   0          44m
    [root@k8s-master yaml]# kubectl get service
    NAME           CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    frontend       10.254.93.91     <nodes>       80/TCP     47m
    kubernetes     10.254.0.1       <none>        443/TCP    7d
    redis-master   10.254.132.210   <none>        6379/TCP   1d
    redis-slave    10.254.104.23    <none>        6379/TCP   1h

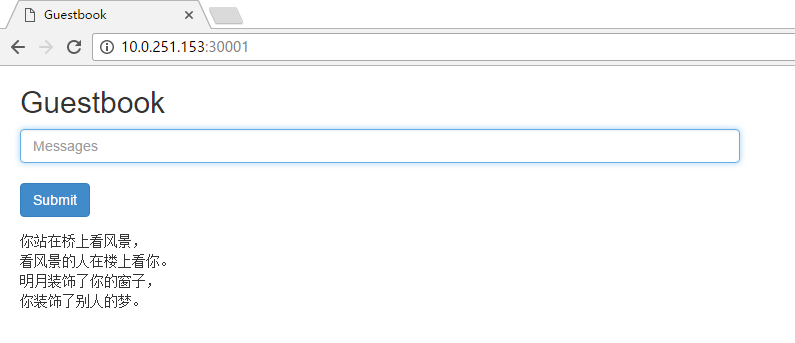

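4.3 Verify in the browser

At this point Guestbook is running in Kubernetes, but it cannot be reached from outside through the frontend Service's IP, 10.254.93.91. A Service's virtual IP belongs to an internal network that Kubernetes creates virtually, so it is not routable from external networks; a forwarding layer from the external network to the internal one is needed. This example uses NodePort for that: frontend-service was created with nodePort: 30001, so Kubernetes opens that port on every Node (this port is called the NodePort), and the actual service can be reached through it.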
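A quick way to check NodePort access from outside the cluster might look like the sketch below. It assumes the node names `k8s-node-1` and `k8s-node-2` resolve from the machine running the script (otherwise substitute the node IP addresses); `guestbook_url` and the `DRY_RUN` switch are hypothetical names introduced here. By default it only prints the `curl` commands.

```shell
#!/bin/sh
# nodePort value from frontend-service.yaml.
NODE_PORT=30001

# Hypothetical helper: build the external URL for a given node address.
guestbook_url() {
  echo "http://$1:${NODE_PORT}/"
}

# Print one curl command per node by default; set DRY_RUN=0 to send the requests.
# Assumption: the node names below resolve from where this script runs.
for node in k8s-node-1 k8s-node-2; do
  url=$(guestbook_url "$node")
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "curl -s -o /dev/null -w '%{http_code}' $url"
  else
    curl -s -o /dev/null -w '%{http_code}\n' "$url"
  fi
done
```

With `DRY_RUN=0`, an HTTP status of 200 from a node would suggest that the frontend is reachable through port 30001 on that node.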