Running Spark SQL locally in IDEA: LZO jar missing

Problem description:

When testing Spark SQL locally in IDEA, the LZO dependency is missing, and no matching dependency can be found to add to the pom.

Error log:

    Caused by: java.lang.IllegalArgumentException: Compression codec com.hadoop.compression.lzo.LzoCodec not found.
        at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:139)
        at org.apache.hadoop.io.compress.CompressionCodecFactory.<init>(CompressionCodecFactory.java:180)
        at org.apache.hadoop.mapred.TextInputFormat.configure(TextInputFormat.java:45)
        ... 129 more
    Caused by: java.lang.ClassNotFoundException: Class com.hadoop.compression.lzo.LzoCodec not found
        at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2409)
        at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:132)
        ... 131 more
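Reading the trace from the bottom: `CompressionCodecFactory.getCodecClasses` asks `Configuration.getClassByName` to load each configured compression codec class, and the `ClassNotFoundException` for `com.hadoop.compression.lzo.LzoCodec` is wrapped into the `IllegalArgumentException` at the top. The codec list normally comes from the Hadoop property `io.compression.codecs`; this note assumes that a cluster `core-site.xml` on the local classpath lists `LzoCodec` there. The following is a minimal JDK-only sketch, not Hadoop's actual code: the hypothetical `CodecClasspathCheck.missingCodecs` takes such a comma-separated list and reports which classes the current class loader cannot load, which is a quick way to confirm the diagnosis before and after adding the jar.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical helper (not part of Hadoop): roughly mimics what
 * CompressionCodecFactory.getCodecClasses does with the comma-separated
 * io.compression.codecs value, but collects missing classes instead of throwing.
 */
public class CodecClasspathCheck {

    /** Returns the class names from a comma-separated list that cannot be loaded. */
    public static List<String> missingCodecs(String codecList) {
        List<String> missing = new ArrayList<>();
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        for (String raw : codecList.split(",")) {
            String name = raw.trim();
            if (name.isEmpty()) {
                continue; // tolerate blank entries and trailing commas
            }
            try {
                // initialize=false: only check that the class can be found, skip static initializers
                Class.forName(name, false, loader);
            } catch (ClassNotFoundException | LinkageError e) {
                // LinkageError covers a class that is present but whose dependencies are not
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Example value only; pass the real io.compression.codecs value as the first argument
        String codecs = args.length > 0 ? args[0]
                : "org.apache.hadoop.io.compress.GzipCodec,com.hadoop.compression.lzo.LzoCodec";
        List<String> missing = missingCodecs(codecs);
        if (missing.isEmpty()) {
            System.out.println("All codec classes found on the classpath.");
        } else {
            System.out.println("Missing codec classes: " + missing);
        }
    }
}
```

Run it with the same classpath as the Spark job in IDEA; once the LZO jar is on the classpath, `com.hadoop.compression.lzo.LzoCodec` should no longer be reported.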

Solution:

Reference the local jar from the pom (a `system`-scoped dependency):

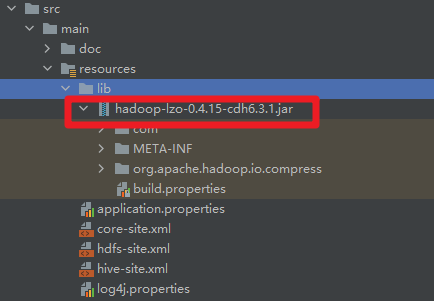

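Also put the jar in the resources/lib directory (`src/main/resources/lib`, which the `systemPath` below points to). The jar can be found on the cluster.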

    <dependency>
        <groupId>com.hadoop.compression</groupId>
        <artifactId>com.hadoop.compression</artifactId>
        <version>1.0</version>
        <scope>system</scope>
        <systemPath>${project.basedir}/src/main/resources/lib/hadoop-lzo-0.4.15-cdh6.3.1.jar</systemPath>
    </dependency>
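To confirm that the `systemPath` points at a usable jar, a small check like the following may help. It is a sketch under assumptions: the hypothetical `LzoJarCheck` is run from the project root (so the relative path matches `${project.basedir}`), the jar name is the one used in the pom above, and it only tests that a `com/hadoop/compression/lzo/LzoCodec.class` entry exists, not that the jar is compatible with the local Hadoop version.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.zip.ZipFile;

/**
 * Hypothetical sanity check (not part of Maven or Hadoop): confirms that the jar
 * referenced by <systemPath> exists and contains the given class.
 */
public class LzoJarCheck {

    /** True if the jar at jarPath exists and has a .class entry for className. */
    public static boolean containsClass(Path jarPath, String className) {
        if (!Files.isRegularFile(jarPath)) {
            return false; // wrong systemPath, or the jar has not been copied yet
        }
        // e.g. com.hadoop.compression.lzo.LzoCodec -> com/hadoop/compression/lzo/LzoCodec.class
        String entryName = className.replace('.', '/') + ".class";
        try (ZipFile zip = new ZipFile(jarPath.toFile())) {
            return zip.getEntry(entryName) != null;
        } catch (IOException e) {
            throw new UncheckedIOException("Cannot read " + jarPath, e);
        }
    }

    public static void main(String[] args) {
        // Same location as ${project.basedir}/src/main/resources/lib/... in the pom (run from the project root)
        Path jar = Paths.get("src", "main", "resources", "lib", "hadoop-lzo-0.4.15-cdh6.3.1.jar");
        boolean ok = containsClass(jar, "com.hadoop.compression.lzo.LzoCodec");
        System.out.println(ok ? "LzoCodec found in " + jar : "LzoCodec NOT found in " + jar);
    }
}
```

If it prints "NOT found", compare the file name in `<systemPath>` with the name of the jar actually copied from the cluster.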