Keras Cats vs. Dogs, Part 6: Transfer Learning with a Pretrained ResNet50 Model, Raising Accuracy to 95.3%

Earlier in this series, a simple 4-layer convolutional network trained on the 25,000 cat and dog images for 100 epochs reached a final accuracy of 90%.

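Transfer learning is an important technique in deep learning. Instead of training from scratch, you start from an existing trained model and train on top of it for the specific problem at hand, which simplifies and shortens the learning process.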

Here, the ResNet50 model trained on ImageNet is used as the starting point for training a cat/dog classifier.
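In the feature-extraction form of transfer learning used in this article, the pretrained base is frozen and only a new classifier head is trained on the features it produces. The toy sketch below illustrates that idea with NumPy instead of Keras. It rests on several assumptions. A fixed random projection with ReLU (`W_base`, hypothetical) stands in for the frozen ResNet50. The data is synthetic. Plain gradient descent replaces RMSprop. Only the logistic head `w`, `b` (the analogue of `Dense(1, activation='sigmoid')`) is updated with binary cross-entropy.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the frozen pretrained base: a fixed random projection + ReLU.
# It is NOT ResNet50; it only plays the role of "features that are never trained".
W_base = rng.normal(size=(20, 8))
W_base_before = W_base.copy()

def frozen_features(x):
    return np.maximum(x @ W_base, 0.0)

# Synthetic binary labels: 1 when the sum of the first two inputs is positive.
X = rng.normal(size=(200, 20))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

# Trainable head, playing the role of Dense(1, activation='sigmoid').
w = np.zeros(8)
b = 0.0

def bce(p, t, eps=1e-7):
    """Mean binary cross-entropy, with clipping to avoid log(0)."""
    p = np.clip(p, eps, 1 - eps)
    return -np.mean(t * np.log(p) + (1 - t) * np.log(1 - p))

feats = frozen_features(X)      # computed once: the base never changes
losses = []
for _ in range(300):
    p = 1.0 / (1.0 + np.exp(-(feats @ w + b)))  # sigmoid output of the head
    losses.append(bce(p, y))
    grad = (p - y) / len(y)     # gradient of mean BCE w.r.t. each logit
    w -= 0.1 * feats.T @ grad   # only the head's parameters are updated
    b -= 0.1 * grad.sum()

print(f"head loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The base weights are identical before and after training, and the head alone still lowers the loss. In the Keras model this corresponds to the 51,201 trainable versus 23,587,712 non-trainable parameters.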

```python
import os

from keras import layers, models, optimizers
from keras.applications.resnet50 import ResNet50
```

Define the data directories:

```python
src_path = r'D:\BaiduNetdiskDownload\train'
dst_path = r'D:\BaiduNetdiskDownload\large'
train_dir = os.path.join(dst_path, 'train')
validation_dir = os.path.join(dst_path, 'valid')
test_dir = os.path.join(dst_path, 'test')
class_name = ['cat', 'dog']
```
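`flow_from_directory` expects one subdirectory per class under each of `train_dir`, `validation_dir` and `test_dir`. When no `classes` argument is passed, Keras sorts the subdirectory names alphanumerically to assign label indices. That gives `cat` → 0 and `dog` → 1, so a sigmoid output close to 1 means "dog". The sketch below reimplements only that ordering rule in plain Python against a throwaway directory tree. `infer_class_indices` is a hypothetical helper, not a Keras function. With the real generators, `train_generator.class_indices` reports the mapping Keras actually used.

```python
import os
import tempfile

def infer_class_indices(directory):
    """Mimic flow_from_directory's default labelling: each subdirectory is a
    class, sorted alphanumerically, indexed from 0."""
    subdirs = sorted(
        name for name in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, name))
    )
    return {name: idx for idx, name in enumerate(subdirs)}

# Build a throwaway copy of the expected layout: <split>/cat and <split>/dog
root = tempfile.mkdtemp()
for split in ('train', 'valid', 'test'):
    for cls in ('dog', 'cat'):  # creation order does not affect the result
        os.makedirs(os.path.join(root, split, cls))

print(infer_class_indices(os.path.join(root, 'train')))  # {'cat': 0, 'dog': 1}
```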

Define the network:

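```python
# ResNet50 convolutional base with ImageNet weights; include_top=False drops the
# original 1000-class classifier so that a new head can be attached.
conv_base = ResNet50(weights='imagenet',
                     include_top=False,
                     input_shape=(150, 150, 3))

model = models.Sequential()
model.add(conv_base)
model.add(layers.Flatten())
model.add(layers.Dense(1, activation='sigmoid'))  # binary cat/dog output

# Freeze the pretrained base so that only the new Dense head is trained.
# This must be set before compile() to take effect.
conv_base.trainable = False

model.compile(loss='binary_crossentropy',
              optimizer=optimizers.RMSprop(lr=1e-4),  # newer Keras: learning_rate=1e-4
              metrics=['acc'])

model.summary()
```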

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
resnet50 (Model)             (None, 5, 5, 2048)        23587712
_________________________________________________________________
flatten_1 (Flatten)          (None, 51200)             0
_________________________________________________________________
dense_1 (Dense)              (None, 1)                 51201
=================================================================
Total params: 23,638,913
Trainable params: 51,201
Non-trainable params: 23,587,712
_________________________________________________________________
```
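The numbers in the summary above can be checked by hand. A 150×150 input shrinks to a 5×5 map with 2048 channels at the end of ResNet50. `Flatten` turns that into 51,200 values, and `Dense(1)` adds one weight per input plus a bias. The sketch below recomputes this arithmetic. The spatial-size walk assumes the layer layout of the Keras ResNet50 implementation: a 7×7 stride-2 conv after 3-pixel zero padding, a 3×3 stride-2 max pool after 1-pixel padding, and stride-2 1×1 convs at the start of stages 3, 4 and 5. `conv_out` is a hypothetical helper.

```python
def conv_out(size, kernel, stride, pad=0):
    """Output length of a 'valid' conv/pool applied after symmetric zero padding."""
    return (size + 2 * pad - kernel) // stride + 1

# Spatial size through the ResNet50 stem and stages (assumed Keras layout).
s = 150
s = conv_out(s, kernel=7, stride=2, pad=3)  # conv1: 7x7, stride 2 -> 75
s = conv_out(s, kernel=3, stride=2, pad=1)  # max pool: 3x3, stride 2 -> 38
for _ in range(3):                          # stages 3, 4, 5 downsample with a stride-2 1x1 conv
    s = conv_out(s, kernel=1, stride=2)     # -> 19, 10, 5

channels = 2048
flat = s * s * channels          # length of the Flatten output
dense_params = flat * 1 + 1      # Dense(1): one weight per input plus one bias
base_params = 23_587_712         # ResNet50 parameter count from the summary above

print(s, flat, dense_params, base_params + dense_params)  # 5 51200 51201 23638913
```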

Prepare the data generators:

```python
from keras.preprocessing.image import ImageDataGenerator

batch_size = 64

train_datagen = ImageDataGenerator(
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
)
test_datagen = ImageDataGenerator()

train_generator = train_datagen.flow_from_directory(
    # This is the target directory
    train_dir,
    # All images will be resized to 150x150
    target_size=(150, 150),
    batch_size=batch_size,
    # Since we use binary_crossentropy loss, we need binary labels
    class_mode='binary')

validation_generator = test_datagen.flow_from_directory(
    validation_dir,
    target_size=(150, 150),
    batch_size=batch_size,
    class_mode='binary')
```
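These generators feed raw RGB pixel values in the 0 to 255 range straight into ResNet50. The Keras documentation asks for ResNet inputs to go through `keras.applications.resnet50.preprocess_input`, which in its "caffe" mode converts RGB to BGR and subtracts the ImageNet per-channel mean without scaling. One option, not used in this article and not measured here, is to pass `preprocessing_function=preprocess_input` to both `ImageDataGenerator`s. To show what that transform does, the NumPy sketch below reimplements caffe-mode preprocessing. `caffe_preprocess` is a hypothetical name. The mean values are the BGR means used by Keras's ImageNet utilities.

```python
import numpy as np

# ImageNet channel means in BGR order, as used by Keras' "caffe" preprocessing mode.
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68])

def caffe_preprocess(batch_rgb):
    """Hypothetical re-implementation of resnet50.preprocess_input (caffe mode)
    for an array shaped (batch, height, width, 3) holding RGB values in 0..255."""
    x = np.asarray(batch_rgb, dtype=np.float64)
    x = x[..., ::-1]               # RGB -> BGR
    return x - IMAGENET_BGR_MEAN   # zero-center each channel; no scaling to [0, 1]

# One 1x1 "image" containing a pure-red pixel.
pixel = np.array([[[[255.0, 0.0, 0.0]]]])
print(caffe_preprocess(pixel)[0, 0, 0])  # approximately [-103.939, -116.779, 131.32]
```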

Train:

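```python
# fit_generator is the Keras 2.x API; in TensorFlow 2 Keras, model.fit accepts generators directly.
history = model.fit_generator(
    train_generator,
    steps_per_epoch=train_generator.samples // batch_size,
    epochs=20,
    validation_data=validation_generator,
    validation_steps=validation_generator.samples // batch_size)
```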

Training log:

```
Epoch 1/20
281/281 [==============================] - 155s 550ms/step - loss: 0.3354 - acc: 0.8644 - val_loss: 0.2028 - val_acc: 0.9433
Epoch 2/20
281/281 [==============================] - 79s 282ms/step - loss: 0.2502 - acc: 0.9008 - val_loss: 0.2067 - val_acc: 0.9432
Epoch 3/20
281/281 [==============================] - 79s 280ms/step - loss: 0.2318 - acc: 0.9125 - val_loss: 0.1934 - val_acc: 0.9484
Epoch 4/20
281/281 [==============================] - 79s 282ms/step - loss: 0.2179 - acc: 0.9147 - val_loss: 0.2026 - val_acc: 0.9459
......
281/281 [==============================] - 82s 292ms/step - loss: 0.1747 - acc: 0.9332 - val_loss: 0.2202 - val_acc: 0.9452
Epoch 16/20
281/281 [==============================] - 79s 283ms/step - loss: 0.1829 - acc: 0.9329 - val_loss: 0.2256 - val_acc: 0.9513
Epoch 17/20
281/281 [==============================] - 79s 280ms/step - loss: 0.1811 - acc: 0.9322 - val_loss: 0.2079 - val_acc: 0.9466
Epoch 18/20
281/281 [==============================] - 81s 288ms/step - loss: 0.1731 - acc: 0.9345 - val_loss: 0.2149 - val_acc: 0.9466
Epoch 19/20
281/281 [==============================] - 81s 289ms/step - loss: 0.1735 - acc: 0.9346 - val_loss: 0.2038 - val_acc: 0.9504
Epoch 20/20
281/281 [==============================] - 82s 291ms/step - loss: 0.1723 - acc: 0.9347 - val_loss: 0.2228 - val_acc: 0.9463
```

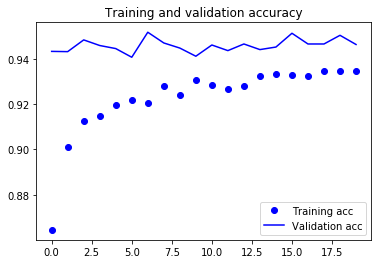

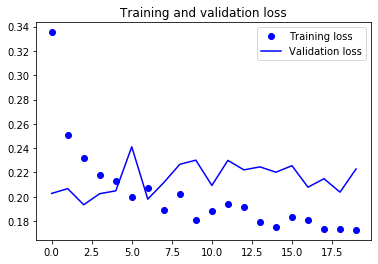

训练曲线:

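The curves show that the best model is already reached at epoch 3. Its validation loss of 0.1934 is the lowest in the log above. After that, training loss keeps falling overall, but validation loss drifts between about 0.20 and 0.23 and validation accuracy stays around 94.5% to 95%.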
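Choosing the "best" epoch usually means taking the one with the lowest `val_loss` in `history.history`, the per-epoch metrics dict returned by `fit_generator`. The minimal sketch below does that. `best_epoch` is a hypothetical helper, and its sample input is the validation values of epochs 1 to 4 copied from the training log (epochs 5 to 15 are omitted there). To keep that model instead of the final weights, Keras provides `keras.callbacks.ModelCheckpoint(filepath, monitor='val_loss', save_best_only=True)`, which the article does not use.

```python
def best_epoch(history_dict, monitor='val_loss', mode='min'):
    """Return (1-based epoch, value) of the best score for `monitor`."""
    values = history_dict[monitor]
    pick = min if mode == 'min' else max
    idx = pick(range(len(values)), key=values.__getitem__)
    return idx + 1, values[idx]

# Values copied from epochs 1-4 of the training log.
logged = {
    'val_loss': [0.2028, 0.2067, 0.1934, 0.2026],
    'val_acc': [0.9433, 0.9432, 0.9484, 0.9459],
}
print(best_epoch(logged))                         # (3, 0.1934)
print(best_epoch(logged, 'val_acc', mode='max'))  # (3, 0.9484)
```

With the real run, `best_epoch(history.history)` would scan all 20 epochs the same way.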

Test results:

```python
test_generator = test_datagen.flow_from_directory(
    test_dir,
    target_size=(150, 150),
    batch_size=batch_size,
    class_mode='binary')

test_loss, test_acc = model.evaluate_generator(
    test_generator,
    steps=test_generator.samples // batch_size)
print('test acc:', test_acc)
```

```
Found 2500 images belonging to 2 classes.
test acc: 0.9536
```
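Both the training and the evaluation calls use `samples // batch_size` steps, which silently drops the last partial batch. For the 2,500 test images and `batch_size = 64` that gives 39 steps covering 2,496 images, so 4 images are never scored. Rounding up with `math.ceil` would include them, because the directory iterator yields a smaller final batch. The small sketch below shows the arithmetic. `eval_steps` is a hypothetical helper.

```python
import math

def eval_steps(samples, batch_size):
    """Return (floor_steps, images_skipped, ceil_steps) for one pass over a generator."""
    floor_steps = samples // batch_size            # what the article's code uses
    skipped = samples - floor_steps * batch_size   # images in the dropped partial batch
    ceil_steps = math.ceil(samples / batch_size)   # also visits the last partial batch
    return floor_steps, skipped, ceil_steps

print(eval_steps(2500, 64))  # (39, 4, 40)
```

A full test pass would then be `model.evaluate_generator(test_generator, steps=math.ceil(test_generator.samples / batch_size))`.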

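Transfer learning reuses an already-trained model as a feature extractor, which greatly shortens training and improves accuracy. The 4-layer CNN reached 90% after 100 epochs, while the frozen ResNet50 base plus a single Dense layer reaches 95.36% test accuracy after 20 epochs.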