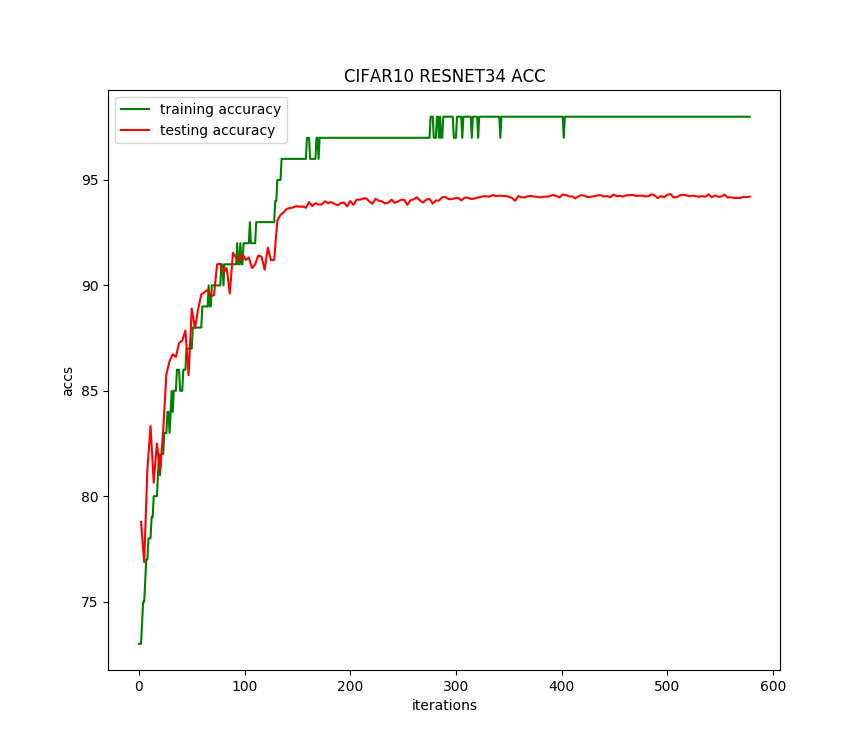

pytorch识别CIFAR10:训练ResNet-34(自定义transform,动态调整学习率,准确率提升到94.33%)

版权声明:本文为博主原创文章,欢迎转载,并请注明出处。联系方式:460356155@qq.com

前面通过数据增强,ResNet-34残差网络识别CIFAR10,准确率达到了92.6。

这里对训练过程增加2个处理:

1、训练数据集做进一步处理:对图片随机加正方形马赛克。

2、每50个epoch,学习率降低0.1倍。

代码具体修改如下:

自定义transform:

1 class Cutout(object): 2 def __init__(self, hole_size): 3 # 正方形马赛克的边长,像素为单位 4 self.hole_size = hole_size 5 6 def __call__(self, img): 7 return cutout(img, self.hole_size) 8 9 10 def cutout(img, hole_size): 11 y = np.random.randint(32) 12 x = np.random.randint(32) 13 14 half_size = hole_size // 2 15 16 x1 = np.clip(x - half_size, 0, 32) 17 x2 = np.clip(x + half_size, 0, 32) 18 y1 = np.clip(y - half_size, 0, 32) 19 y2 = np.clip(y + half_size, 0, 32) 20 21 imgnp = np.array(img) 22 23 imgnp[y1:y2, x1:x2] = 0 24 img = Image.fromarray(imgnp.astype('uint8')).convert('RGB') 25 return img

数据集处理修改:

1 transform_train = transforms.Compose([ 2 # 对原始32*32图像四周各填充4个0像素(40*40),然后随机裁剪成32*32 3 transforms.RandomCrop(32, padding=4), 4 5 # 随机马赛克,大小为6*6 6 Cutout(6), 7 8 # 按0.5的概率水平翻转图片 9 transforms.RandomHorizontalFlip(), 10 11 transforms.ToTensor(), 12 transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])]) 13 14 transform_test = tv.transforms.Compose([ 15 transforms.ToTensor(), 16 transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])]) 17 18 # 定义数据集 19 train_data = tv.datasets.CIFAR10(root=ROOT, train=True, download=True, transform=transform_train) 20 test_data = tv.datasets.CIFAR10(root=ROOT, train=False, download=False, transform=transform_test)

训练过程中调整学习率:

1 for epoch in range(1, args.epochs + 1): 2 if epoch % 50 == 0: 3 lr = args.lr * (0.1 ** (epoch // 50)) 4 5 for params in optimizer.param_groups: 6 params['lr'] = lr 7 8 net_train(net, train_load, optimizer, epoch, args.log_interval) 9 10 # 每个epoch结束后用测试集检查识别准确度 11 net_test(net, test_load, epoch)

运行结果如下:

Files already downloaded and verified

ResNet34(

(first): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace)

(3): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=False)

)

(layer1): Sequential(

(0): ResBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(1): ResBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): ResBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer2): Sequential(

(0): ResBlock(

(conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(downsample): Sequential(

(0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): ResBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): ResBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(3): ResBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer3): Sequential(

(0): ResBlock(

(conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(downsample): Sequential(

(0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): ResBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): ResBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(3): ResBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(4): ResBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(5): ResBlock(

(conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer4): Sequential(

(0): ResBlock(

(conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): ResBlock(

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): ResBlock(

(conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(avg_pool): AvgPool2d(kernel_size=4, stride=4, padding=0)

(fc): Linear(in_features=512, out_features=10, bias=True)

)

one epoch spend: 0:01:11.775634

EPOCH:1, ACC:44.28

one epoch spend: 0:01:12.244757

EPOCH:2, ACC:54.46

one epoch spend: 0:01:12.360205

EPOCH:3, ACC:56.84

............

one epoch spend: 0:01:19.172188

EPOCH:198, ACC:94.2

one epoch spend: 0:01:19.213334

EPOCH:199, ACC:94.19

one epoch spend: 0:01:19.222612

EPOCH:200, ACC:94.21

CIFAR10 pytorch ResNet34 Train: EPOCH:200, BATCH_SZ:128, LR:0.1, ACC:94.33

train spend time: 4:21:32.548834

运行200个迭代,每个迭代耗时80秒,准确率提升了1.73%,达到94.33%。准确率变化曲线如下:

浙公网安备 33010602011771号

浙公网安备 33010602011771号