Cloud Native Week 7 - k8s Log Collection

k8s log collection

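Purposes of log collection:

- Collect distributed log data in one place for centralized querying and management

- Troubleshooting

- Security information and event management (SIEM)

- Reporting, statistics, and visualization

Value of log collection:

- Log search, problem diagnosis, failure recovery, and self-healing

- Application log analysis and error alerting

- Performance analysis and user behavior analysis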

日志收集方式:

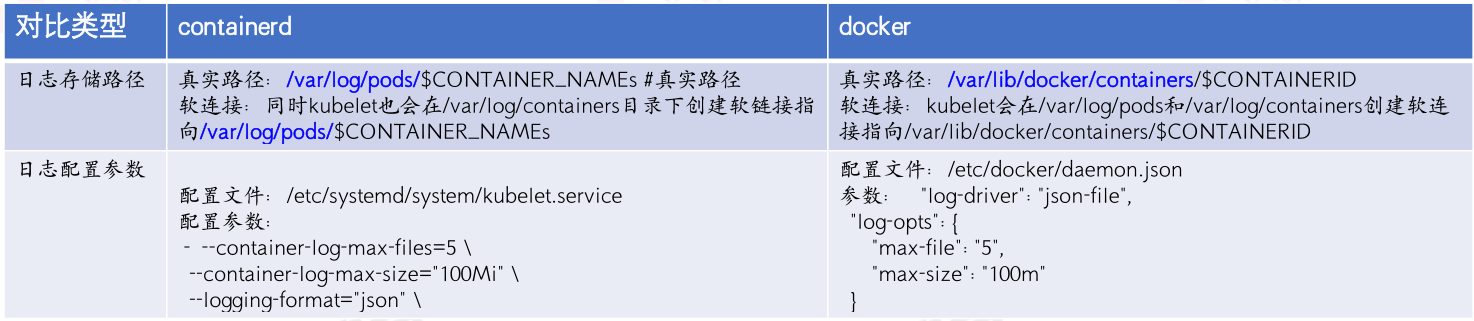

- node节点收集,基于daemonset部署日志收集进程,实现json-file类型(标准输出/dev/stdout、错误输出/dev/stderr)日志收集。

- 使用sidcar容器(一个pod多容器)收集当前pod内一个或者多个业务容器的日志(通常基于emptyDir实现业务容器与sidcar之间的日志共享)。

- 在容器内置日志收集服务进程。

安装elasticsearch

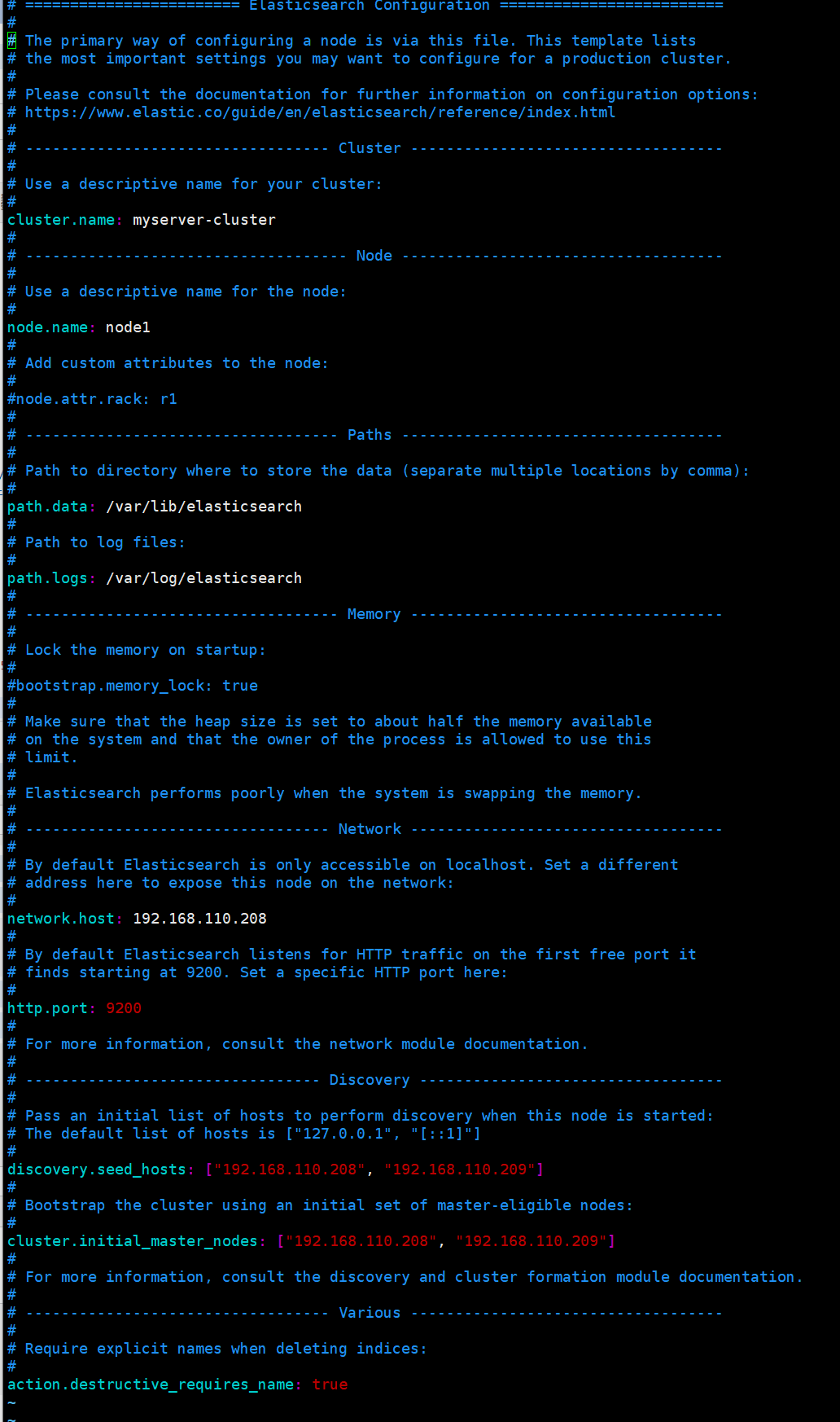

计划在192.168.110.208 192.168.110.209安装elasticsearch

在elasticsearch官网下载elasticsearch-7.12.1-amd64.deb 执行安装命令

dpkg -i elasticsearch-7.12.1-amd64.deb

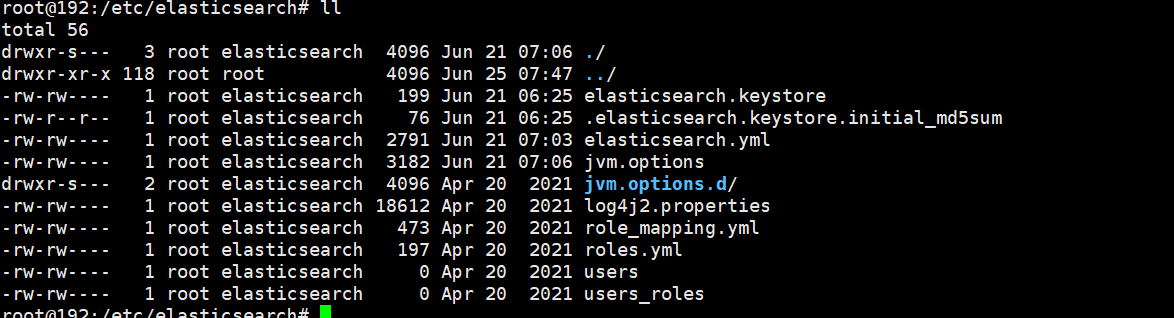

Go to /etc/elasticsearch and edit elasticsearch.yml.
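The post does not show the edited elasticsearch.yml. Below is a minimal sketch of what a two-node Elasticsearch 7.x configuration typically contains, wrapped in a shell function so each server can render its own copy. The cluster name k8s-log-es and the node names es-node1/es-node2 are hypothetical; the data and log paths are the .deb package defaults.

```shell
#!/usr/bin/env bash
# Print a minimal elasticsearch.yml for one node of the two-node cluster.
# Assumptions (not from the original post): cluster name "k8s-log-es",
# node names es-node1 (192.168.110.208) and es-node2 (192.168.110.209).
render_es_yml() {
  local node_name="$1" node_ip="$2"
  cat <<EOF
cluster.name: k8s-log-es
node.name: ${node_name}
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: ${node_ip}
http.port: 9200
discovery.seed_hosts: ["192.168.110.208", "192.168.110.209"]
cluster.initial_master_nodes: ["es-node1", "es-node2"]
EOF
}
```

For example, on 192.168.110.208 run render_es_yml es-node1 192.168.110.208 > /etc/elasticsearch/elasticsearch.yml, and on the other server use es-node2 192.168.110.209. cluster.initial_master_nodes is only consulted when the cluster forms for the first time.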

Start Elasticsearch:

```
systemctl restart elasticsearch.service
```

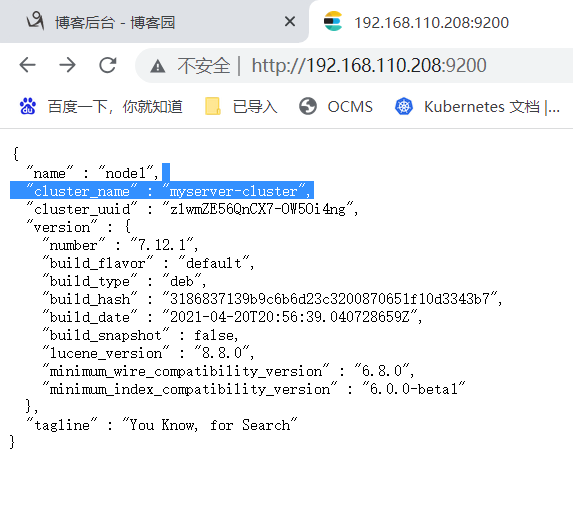

Then open port 9200 in a browser to check it:

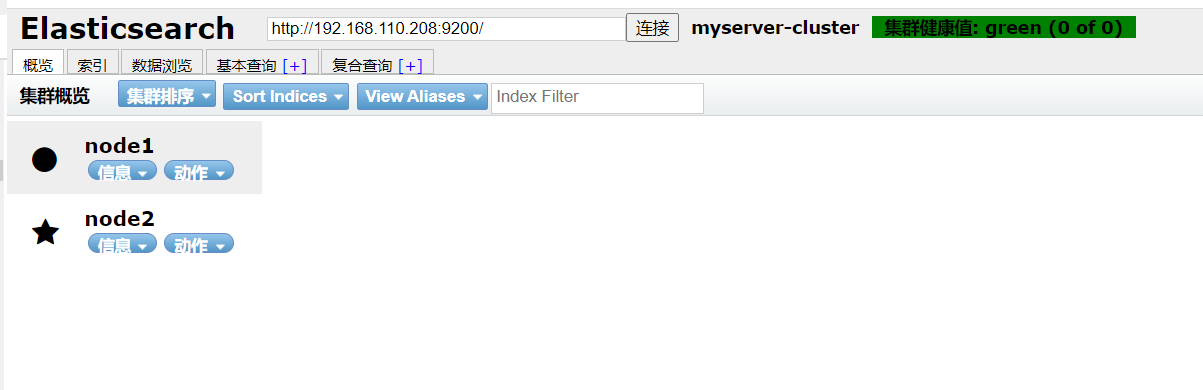

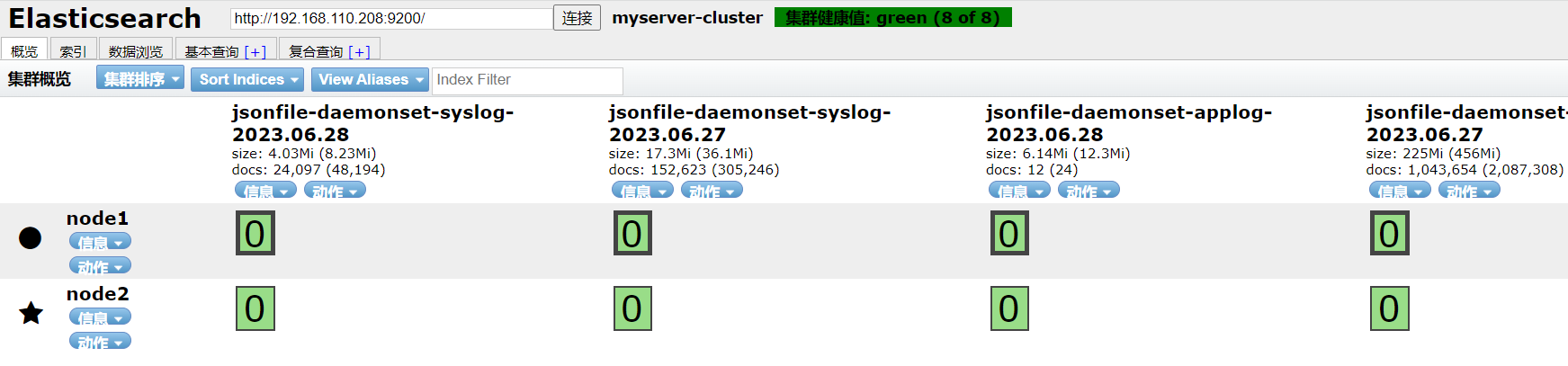

Check the cluster status with a plugin.
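The same check can be scripted against GET /_cluster/health. A small sketch that pulls status and number_of_nodes out of the response with sed, to avoid assuming jq is installed; it expects the compact JSON that Elasticsearch returns when ?pretty is not used. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Extract fields from the compact JSON returned by GET /_cluster/health.
es_health_status() {
  sed -n 's/.*"status":"\([a-z]*\)".*/\1/p'
}

es_node_count() {
  sed -n 's/.*"number_of_nodes":\([0-9]*\).*/\1/p'
}

# Succeed only when the cluster is green and has the expected number of nodes.
es_cluster_ok() {
  local json="$1" expected_nodes="$2"
  [ "$(printf '%s' "$json" | es_health_status)" = "green" ] &&
    [ "$(printf '%s' "$json" | es_node_count)" = "$expected_nodes" ]
}
```

Usage: curl -s http://192.168.110.208:9200/_cluster/health | es_health_status should print green once both nodes have joined, and es_cluster_ok "$(curl -s http://192.168.110.208:9200/_cluster/health)" 2 can serve as a gate in scripts.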

安装zookeeper

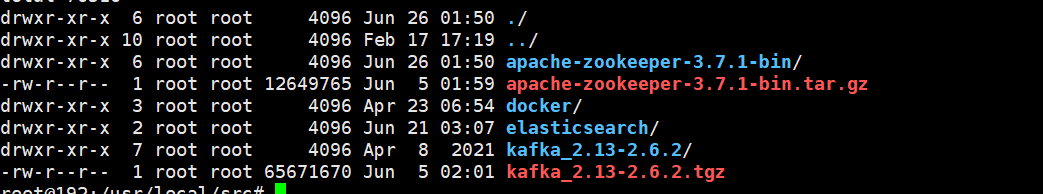

mv apache-zookeeper-3.7.1-bin /usr/local/zookeeper

Create zoo.cfg from the sample file (zkServer.sh reads conf/zoo.cfg by default) and edit it. Keep comments on their own lines: the file is parsed as Java properties, so a trailing comment would become part of the value.

```
root@192:/usr/local/zookeeper/conf# cp zoo_sample.cfg zoo.cfg
root@192:/usr/local/zookeeper/conf# cat /usr/local/zookeeper/conf/zoo.cfg
# The number of milliseconds of each tick
# (the basic time unit, also used as the heartbeat interval)
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
# ZooKeeper data directory
dataDir=/data/zookeeper
# the port at which the clients will connect
clientPort=2181
# the maximum number of client connections.
# increase this if you need to handle more clients
#maxClientCnxns=60
#
# Be sure to read the maintenance section of the
# administrator guide before turning on autopurge.
#
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# The number of snapshots to retain in dataDir
#autopurge.snapRetainCount=3
# Purge task interval in hours
# Set to "0" to disable auto purge feature
#autopurge.purgeInterval=1

## Metrics Providers
#
# https://prometheus.io Metrics Exporter
#metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider
#metricsProvider.httpPort=7000
#metricsProvider.exportJvmInfo=true

# server.N=<host>:<quorum port>:<leader election port>
server.1=192.168.110.208:2888:3888
server.2=192.168.110.209:2888:3888
```

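Create the data directory:

```
root@192:/usr/local/zookeeper/conf# mkdir -p /data/zookeeper
```

The server on 208 gets id 1 and the server on 209 gets id 2; run the matching command on each server:

```
root@192:/usr/local/zookeeper/conf# echo 1 > /data/zookeeper/myid   # on 192.168.110.208
root@192:/usr/local/zookeeper/conf# echo 2 > /data/zookeeper/myid   # on 192.168.110.209
```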
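The value in myid must equal the N of the server.N= line for that host. A sketch that derives it from zoo.cfg itself, so the two cannot drift apart; the function names are hypothetical, and it assumes each host IP appears exactly once in the server.N entries.

```shell
#!/usr/bin/env bash
# Print the N from the "server.N=<ip>:2888:3888" entry that matches the given IP.
myid_for_ip() {
  local cfg="$1" ip="$2"
  awk -F'=' -v ip="$ip" '
    $1 ~ /^server\.[0-9]+$/ {
      split($2, addr, ":")   # addr[1]=host, addr[2]=quorum port, addr[3]=election port
      if (addr[1] == ip) { sub(/^server\./, "", $1); print $1 }
    }' "$cfg"
}

# Write the myid file; fails if the IP is not listed in zoo.cfg.
write_myid() {
  local cfg="$1" ip="$2" data_dir="$3" id
  id="$(myid_for_ip "$cfg" "$ip")"
  [ -n "$id" ] || { echo "no server.N entry for $ip in $cfg" >&2; return 1; }
  mkdir -p "$data_dir"
  printf '%s\n' "$id" > "$data_dir/myid"
}
```

On 192.168.110.208, for example: write_myid /usr/local/zookeeper/conf/zoo.cfg 192.168.110.208 /data/zookeeper.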

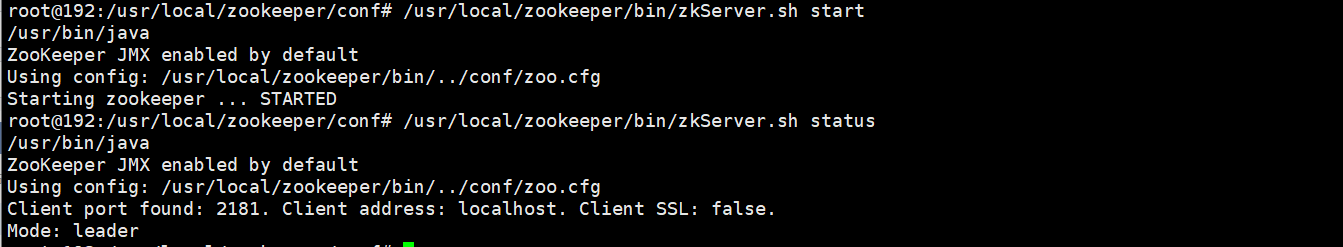

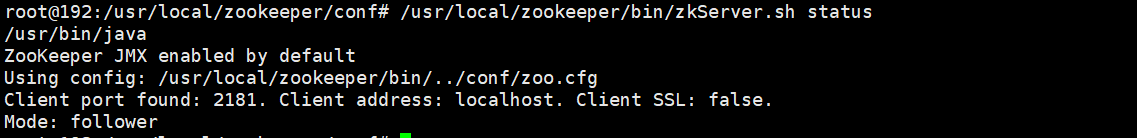

Start ZooKeeper on both servers:

```
/usr/local/zookeeper/bin/zkServer.sh start
```

Check whether ZooKeeper started successfully and whether the two nodes formed a cluster.
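zkServer.sh status reports each node's role in a "Mode:" line. A sketch that extracts it so the check can be scripted on both servers; in a healthy two-node ensemble one server reports leader and the other follower. With only two servers, the ensemble keeps its quorum only while both are running. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# zkServer.sh status prints e.g. "Mode: leader", "Mode: follower",
# or "Mode: standalone" when no ensemble is configured.
zk_mode() {
  awk -F': ' '/^Mode:/ { print $2 }'
}

# Succeed if the node reports it is part of an ensemble (leader or follower).
zk_in_ensemble() {
  case "$(zk_mode)" in
    leader|follower) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage: /usr/local/zookeeper/bin/zkServer.sh status 2>&1 | zk_mode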

Installing Kafka

```
mv kafka_2.13-2.6.2 /usr/local/kafka
```

Edit the server.properties configuration file on each broker; on 192.168.110.209, listeners must use that server's own address (PLAINTEXT://192.168.110.209:9092). Keep comments on their own lines: server.properties is a Java properties file, so a trailing comment would become part of the value.

```
root@192:/usr/local/kafka/config# cat /usr/local/kafka/config/server.properties
# listen IP and port (this broker's own address)
listeners=PLAINTEXT://192.168.110.208:9092
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/data/kafka-logs
num.partitions=1
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
# ZooKeeper ensemble addresses (important)
zookeeper.connect=192.168.110.208:2181,192.168.110.209:2181
zookeeper.connection.timeout.ms=18000
group.initial.rebalance.delay.ms=0
```
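The file above sets no broker.id, so Kafka generates one automatically (broker.id.generation.enable defaults to true), and listeners has to differ per broker. A sketch that renders each broker's file from one shared template with explicit ids; the ids 1 and 2 and the template file are assumptions. Pick the ids before the first start, because Kafka records the broker id in log.dirs and refuses to start if it later changes.

```shell
#!/usr/bin/env bash
# Render a per-broker server.properties from a shared template by replacing
# broker.id and listeners. Assumption: broker ids 1 and 2 for .208 and .209.
render_kafka_props() {
  local template="$1" ip="$2" id="$3"
  # Drop any existing broker.id / listeners lines, keep everything else as-is.
  grep -v -e '^broker\.id=' -e '^listeners=' "$template"
  printf 'broker.id=%s\n' "$id"
  printf 'listeners=PLAINTEXT://%s:9092\n' "$ip"
}
```

For example, on the second broker: render_kafka_props server.properties.tpl 192.168.110.209 2 > /usr/local/kafka/config/server.properties (server.properties.tpl is a hypothetical copy of the file shown above).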

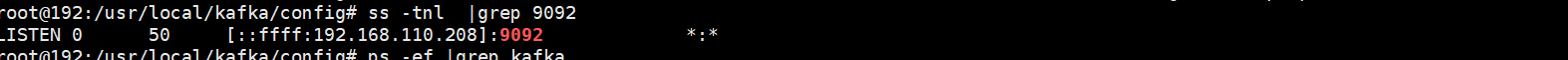

Run the start script:

```
/usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties
```

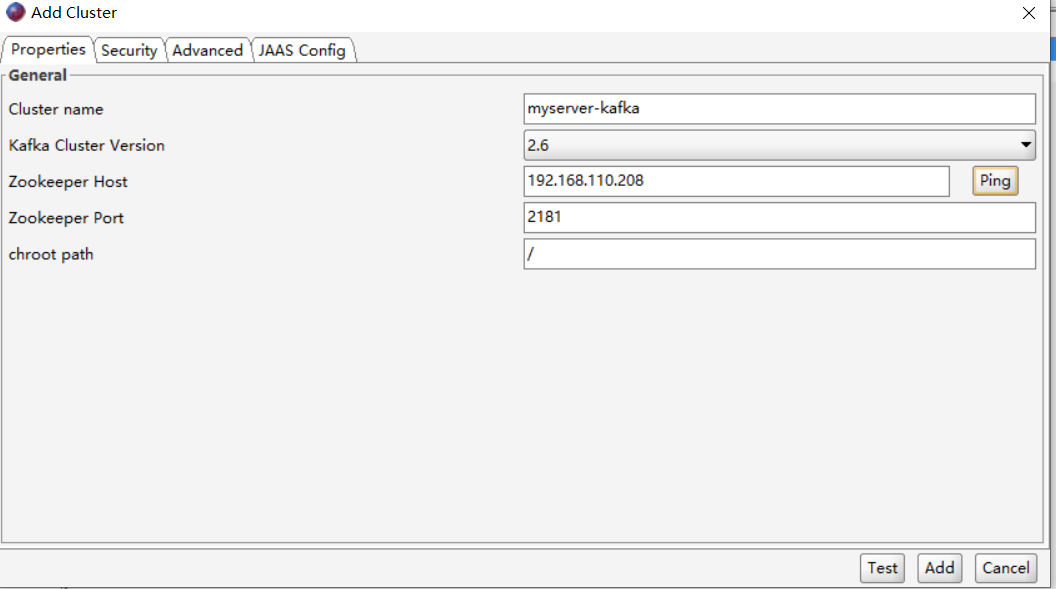

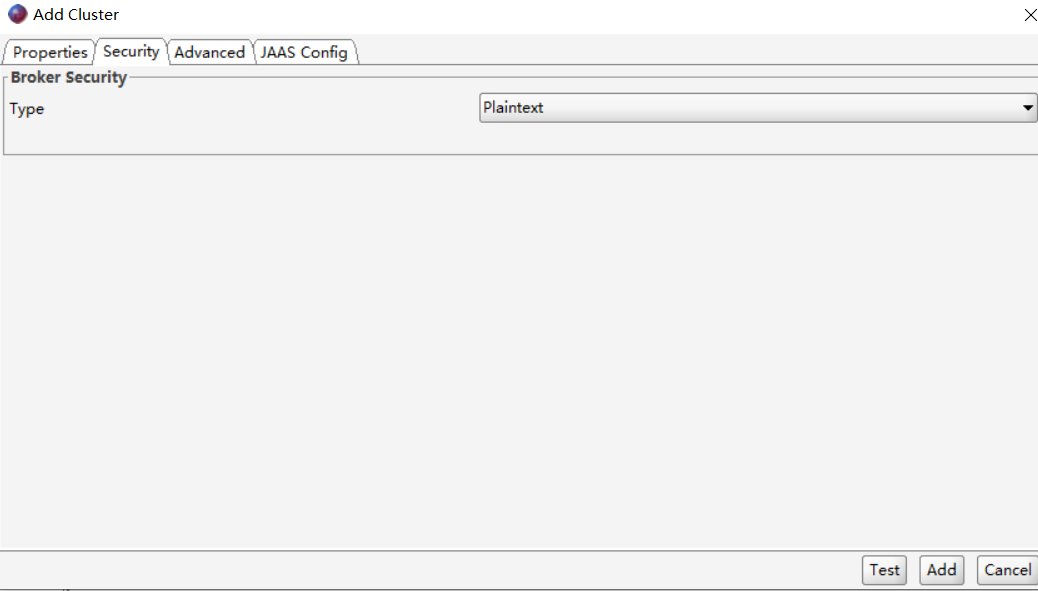

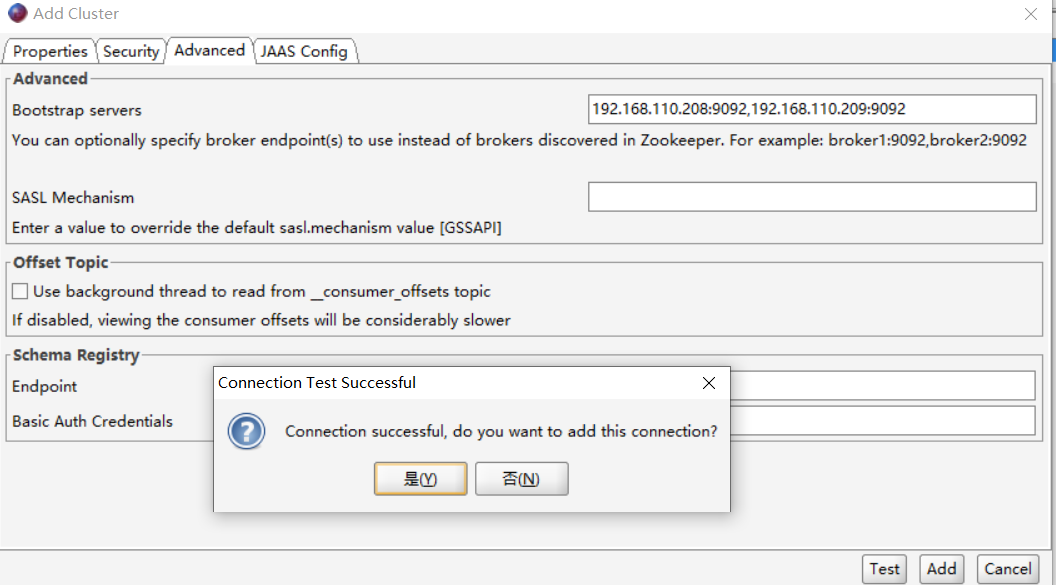

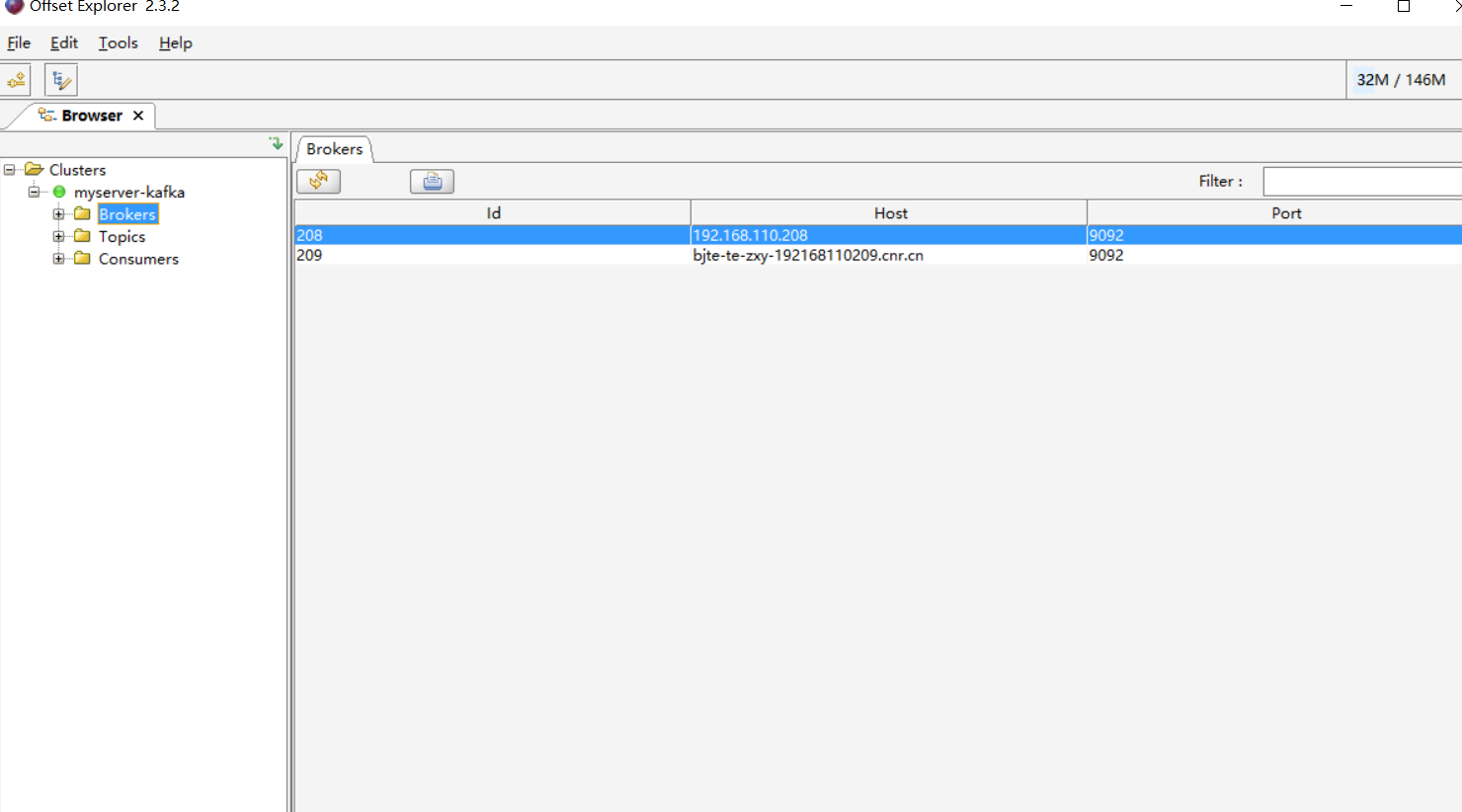

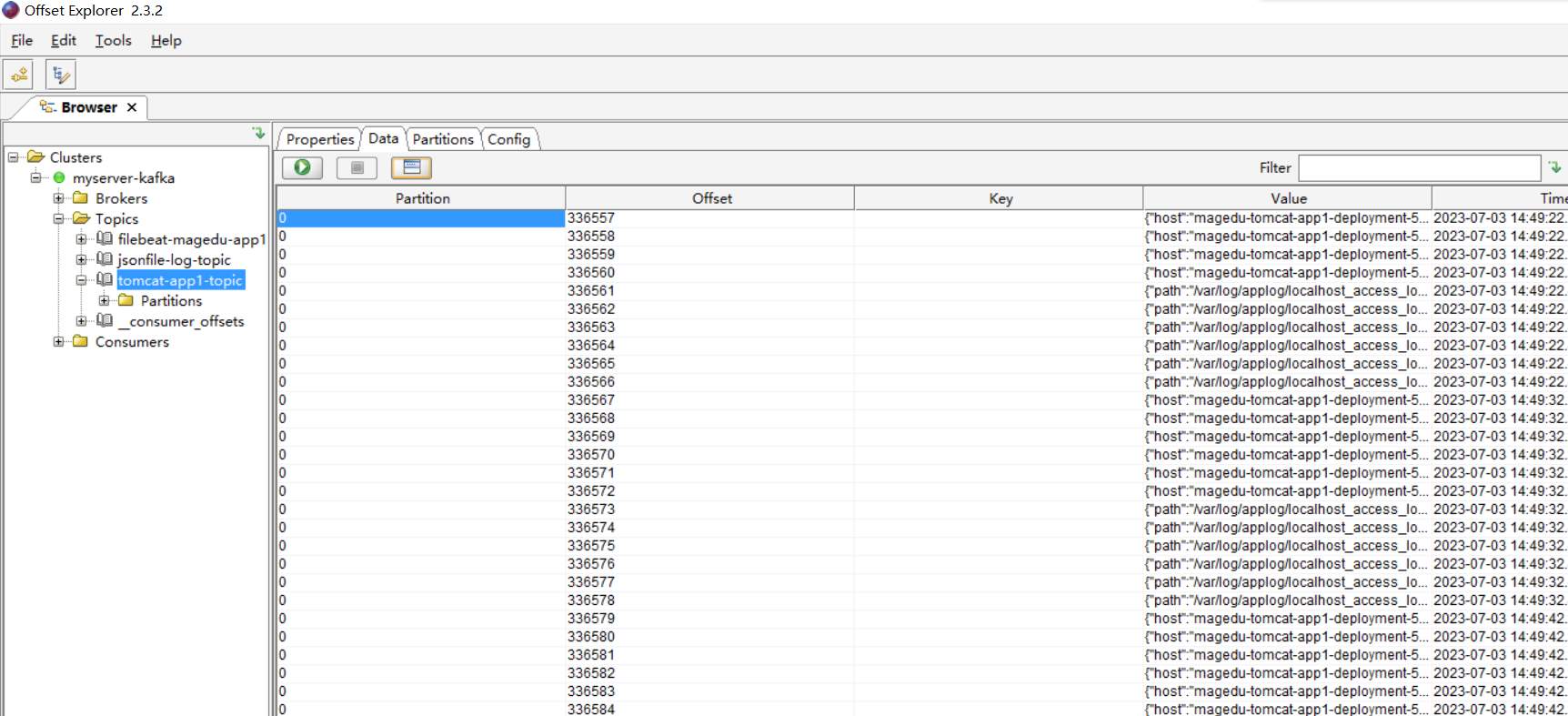

Install Offset Explorer and configure it as shown:

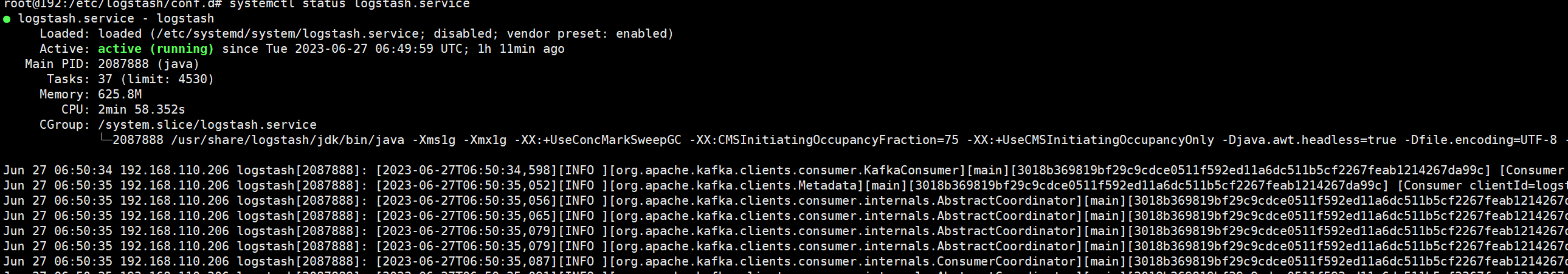

Installing Logstash

Install the package, then add a pipeline configuration file under the Logstash configuration directory:

```
dpkg -i logstash-7.12.1-amd64.deb
```

```
root@192:/etc/logstash/conf.d# pwd
/etc/logstash/conf.d
root@192:/etc/logstash/conf.d# cat log-to-es.conf
input {
  kafka {
    bootstrap_servers => "192.168.110.208:9092,192.168.110.209:9092"
    topics => ["jsonfile-log-topic"]
    codec => "json"
  }
}

output {
  #if [fields][type] == "app1-access-log" {
  if [type] == "jsonfile-daemonset-applog" {
    elasticsearch {
      hosts => ["192.168.110.208:9200","192.168.110.209:9200"]
      index => "jsonfile-daemonset-applog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "jsonfile-daemonset-syslog" {
    elasticsearch {
      hosts => ["192.168.110.208:9200","192.168.110.209:9200"]
      index => "jsonfile-daemonset-syslog-%{+YYYY.MM.dd}"
    }
  }
}
root@192:/etc/logstash/conf.d# systemctl start logstash.service
```
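The output section only writes events whose type matches one of the if blocks, each into a daily index; events matching no block are not written anywhere. A sketch that mirrors this routing to predict which index an event lands in. Logstash formats %{+YYYY.MM.dd} from the event's @timestamp in UTC, so in a UTC+8 timezone the new day's index appears at 08:00 local time. The function names are hypothetical, and date -d assumes GNU coreutils.

```shell
#!/usr/bin/env bash
# Mirror of the output routing in log-to-es.conf: event type -> index prefix.
index_prefix_for_type() {
  case "$1" in
    jsonfile-daemonset-applog) echo "jsonfile-daemonset-applog" ;;
    jsonfile-daemonset-syslog) echo "jsonfile-daemonset-syslog" ;;
    *) return 1 ;;   # no matching "if" block: the event is not written to ES
  esac
}

# %{+YYYY.MM.dd} is formatted from @timestamp in UTC, hence date -u.
daily_index() {
  local type="$1" when="${2:-now}" prefix
  prefix="$(index_prefix_for_type "$type")" || return 1
  printf '%s-%s\n' "$prefix" "$(date -u -d "$when" +%Y.%m.%d)"
}
```

To confirm the indices exist: curl -s 'http://192.168.110.208:9200/_cat/indices/jsonfile-daemonset-*?v'. The pipeline syntax can be checked before a restart with /usr/share/logstash/bin/logstash --path.settings /etc/logstash -f /etc/logstash/conf.d/log-to-es.conf -t.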

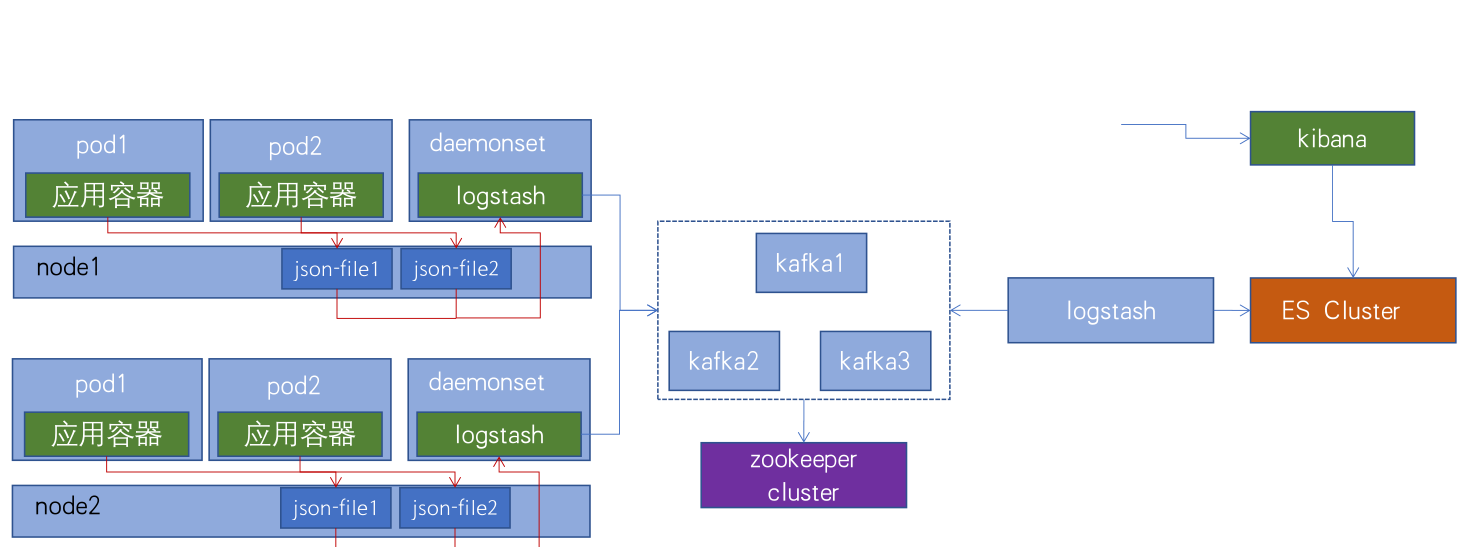

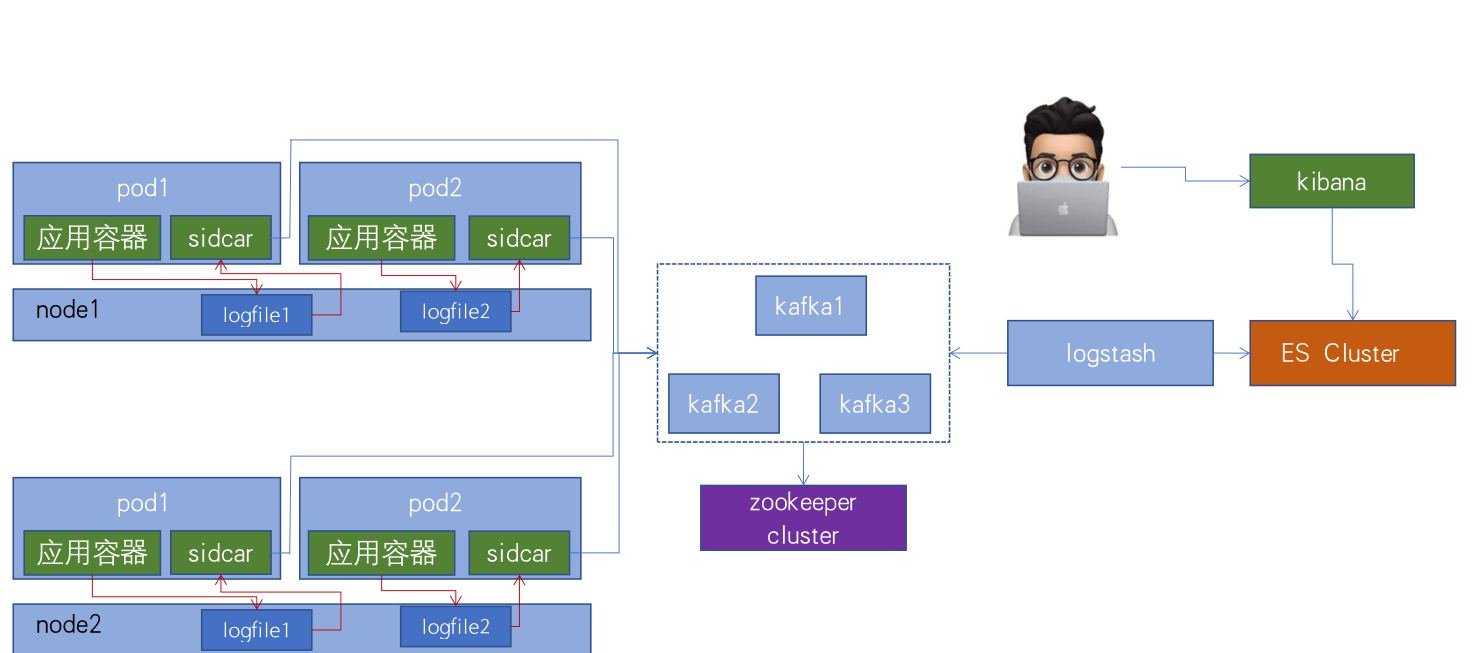

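Log example 1: collecting logs with a DaemonSet

The log collector runs as a DaemonSet and mainly collects the following kinds of logs:

- Node-level collection of json-file logs (standard output /dev/stdout and standard error /dev/stderr), i.e. the stdout and stderr output produced by applications.

- Host system logs and other logs stored as files on the host.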
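With containerd, the kubelet writes container stdout/stderr to /var/log/pods/<namespace>_<pod>_<pod-uid>/<container>/<restart-count>.log, and each line uses the CRI format "<time> <stdout|stderr> <F|P> <message>" rather than Docker's JSON. A sketch showing how the namespace, pod, and container can be recovered from the path and how the message is separated from the CRI prefix; the function names and the sample path in the test are hypothetical. Splitting on "_" is safe because namespace and pod names cannot contain underscores.

```shell
#!/usr/bin/env bash
# Split a kubelet log path of the form
#   /var/log/pods/<namespace>_<pod>_<pod-uid>/<container>/<restart-count>.log
parse_pod_log_path() {
  local rel pod_dir container ns pod uid
  rel="${1#/var/log/pods/}"        # <ns>_<pod>_<uid>/<container>/<n>.log
  pod_dir="${rel%%/*}"             # <ns>_<pod>_<uid>
  container="${rel#*/}"            # <container>/<n>.log
  container="${container%%/*}"     # <container>
  IFS=_ read -r ns pod uid <<<"$pod_dir"
  printf 'namespace=%s pod=%s uid=%s container=%s\n' "$ns" "$pod" "$uid" "$container"
}

# Strip the CRI prefix "<time> <stream> <F|P> " and keep the application message.
cri_message() {
  sed -E 's/^[^ ]+ (stdout|stderr) [FP] //'
}
```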

DaemonSet log collection architecture

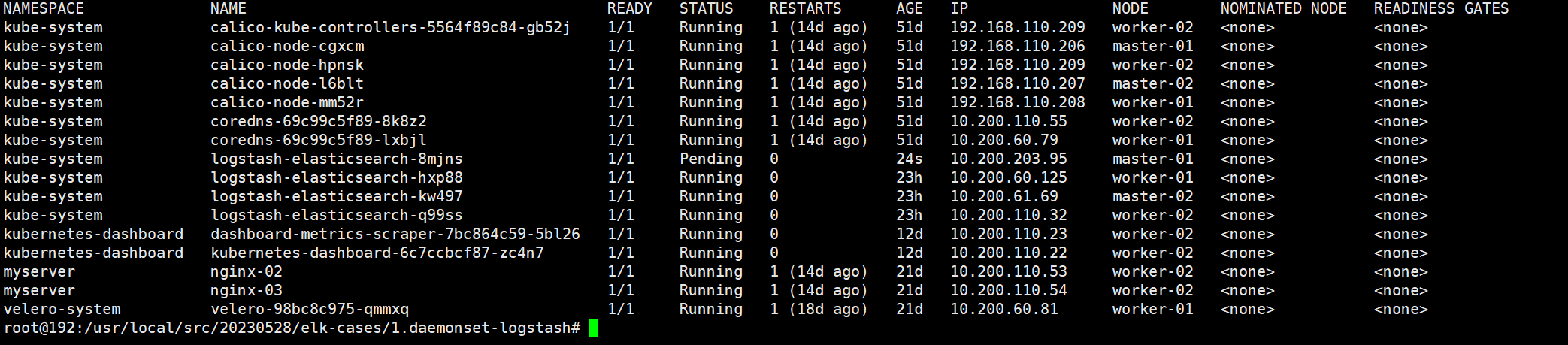

DaemonSet YAML file:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: logstash-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: logstash-logging
spec:
  selector:
    matchLabels:
      name: logstash-elasticsearch
  template:
    metadata:
      labels:
        name: logstash-elasticsearch
    spec:
      tolerations:
      # this toleration is to have the daemonset runnable on master nodes
      # remove it if your masters can't run pods
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: logstash-elasticsearch
        image: harbor.linuxarchitect.io/baseimages/logstash:v7.12.1-json-file-log-v1
        env:
        - name: "KAFKA_SERVER"
          value: "192.168.110.208:9092,192.168.110.209:9092"
        - name: "TOPIC_ID"
          value: "jsonfile-log-topic"
        - name: "CODEC"
          value: "json"
        # resources:
        #   limits:
        #     cpu: 1000m
        #     memory: 1024Mi
        #   requests:
        #     cpu: 500m
        #     memory: 1024Mi
        volumeMounts:
        - name: varlog # volume for host system logs
          mountPath: /var/log # mount point for host system logs
        - name: varlibdockercontainers # volume for container logs; must match the collection path in the Logstash config
          #mountPath: /var/lib/docker/containers # mount path when using docker
          mountPath: /var/log/pods # mount path when using containerd; must match the Logstash log collection path
          readOnly: false
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log # host system logs
      - name: varlibdockercontainers
        hostPath:
          #path: /var/lib/docker/containers # host log path when using docker
          path: /var/log/pods # host log path when using containerd
```
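After the DaemonSet is applied, every schedulable node should run one logstash-elasticsearch pod. A sketch that compares the desired and ready pod counts from the DaemonSet status; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Compare the desired and ready pod counts of the DaemonSet, as printed by:
#   kubectl -n kube-system get ds logstash-elasticsearch \
#     -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}'
ds_ready() {
  local desired ready
  read -r desired ready <<<"$1"
  [ -n "$desired" ] && [ "$desired" = "$ready" ]
}
```

Usage: ds_ready "$(kubectl -n kube-system get ds logstash-elasticsearch -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}')" && echo ready. Interactively, kubectl -n kube-system rollout status ds/logstash-elasticsearch waits for the same condition.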

Elasticsearch now shows the collected data.

Installing Kibana

```
dpkg -i kibana-7.12.1-amd64.deb
```

```
root@192:/etc/kibana# cat /etc/kibana/kibana.yml |grep -v "^#" | grep -v "^$"
server.host: "192.168.110.206"
elasticsearch.hosts: ["http://192.168.110.208:9200"]
i18n.locale: "zh-CN"
root@192:/etc/kibana# systemctl start kibana.service
root@192:/etc/kibana# systemctl status kibana.service
● kibana.service - Kibana
     Loaded: loaded (/etc/systemd/system/kibana.service; disabled; vendor preset: enabled)
     Active: active (running) since Wed 2023-06-28 06:23:01 UTC; 7s ago
       Docs: https://www.elastic.co
   Main PID: 2852340 (node)
      Tasks: 11 (limit: 4530)
     Memory: 192.9M
        CPU: 8.034s
     CGroup: /system.slice/kibana.service
             └─2852340 /usr/share/kibana/bin/../node/bin/node /usr/share/kibana/bin/../src/cli/dist --logging.dest=/var/log/kibana/kibana.log --pid.file=/run/kibana/kibana.pid
```

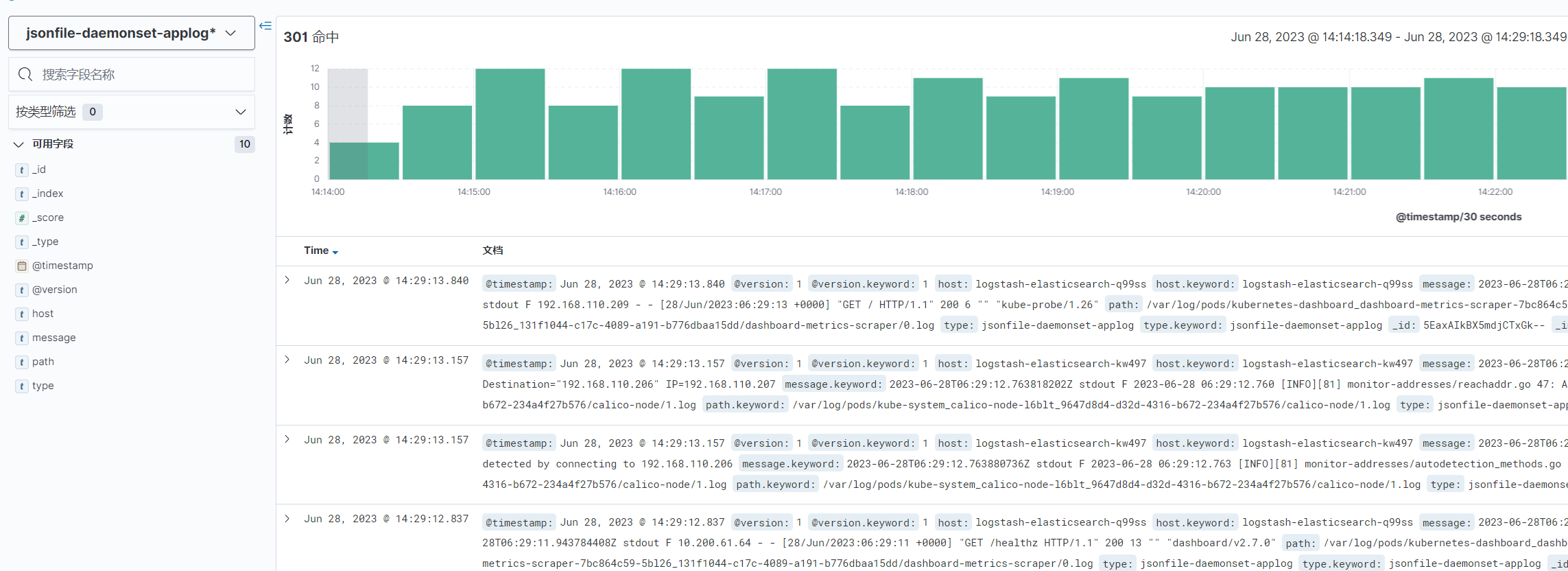

In Kibana, open Stack Management and create an index pattern as shown.

Then open Discover to see the system logs.
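The index pattern can also be created through Kibana's saved objects API instead of the Stack Management UI. A sketch, assuming Kibana listens on its default port 5601 at 192.168.110.206 and that the pattern's time field is @timestamp; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Build the request body for creating an index pattern saved object.
index_pattern_body() {
  local title="$1" time_field="${2:-@timestamp}"
  printf '{"attributes":{"title":"%s","timeFieldName":"%s"}}\n' "$title" "$time_field"
}

# POST it to Kibana; write requests need the kbn-xsrf header.
create_index_pattern() {
  local kibana_url="$1" title="$2"
  curl -s -X POST "${kibana_url}/api/saved_objects/index-pattern" \
    -H 'kbn-xsrf: true' -H 'Content-Type: application/json' \
    -d "$(index_pattern_body "$title")"
}
```

Usage: create_index_pattern http://192.168.110.206:5601 'jsonfile-daemonset-syslog-*'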

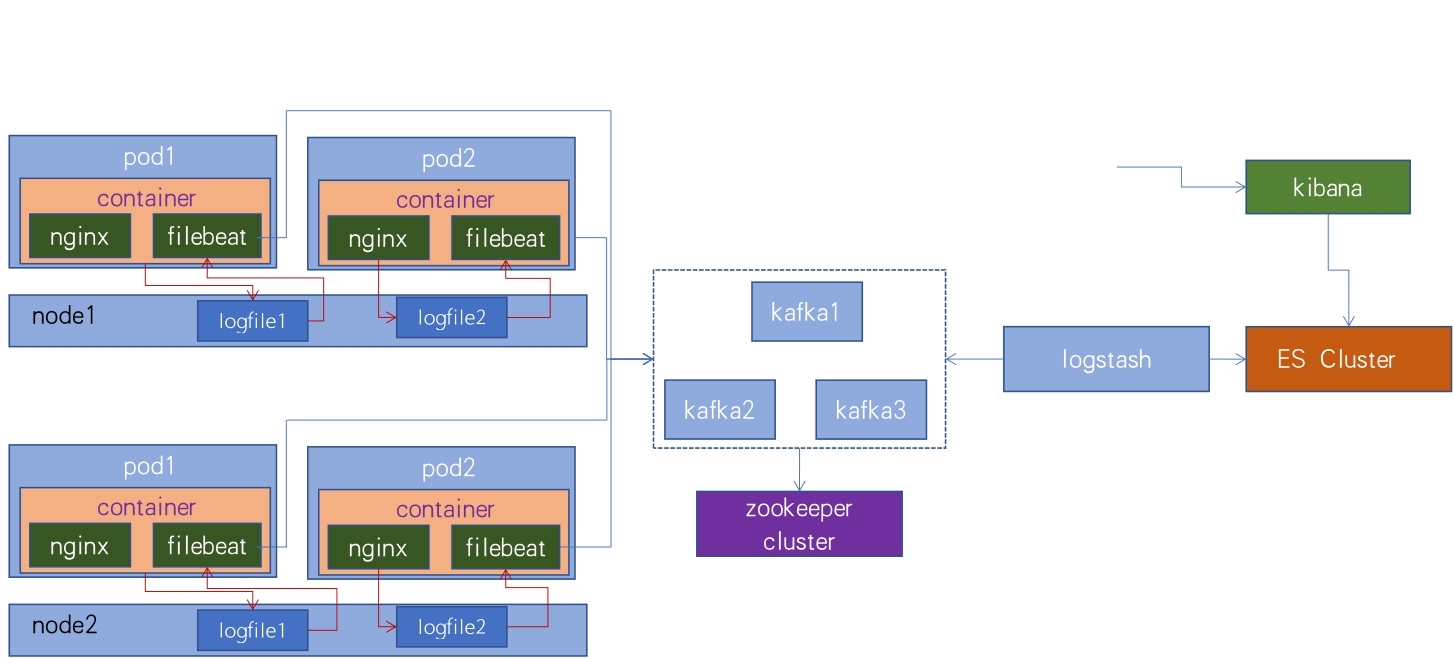

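Log collection example 2: collecting logs in sidecar mode

A sidecar container (multiple containers in one pod) collects the logs of one or more application containers in the same pod; the application containers and the sidecar usually share the log files through an emptyDir volume.

Compared with the DaemonSet approach, the drawback is higher memory usage and overall resource consumption, because every pod runs its own collector. The advantage is that collection happens per pod, so each application's logs can go to their own index and it is clear which pod each log line belongs to.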

1. Build the sidecar image

```
root@192:/usr/local/src/20230528/elk-cases/2.sidecar-logstash/1.logstash-image-Dockerfile# cat Dockerfile
FROM logstash:7.12.1

USER root
WORKDIR /usr/share/logstash
#RUN rm -rf config/logstash-sample.conf
ADD logstash.yml /usr/share/logstash/config/logstash.yml
ADD logstash.conf /usr/share/logstash/pipeline/logstash.conf
```

```
root@192:/usr/local/src/20230528/elk-cases/2.sidecar-logstash/1.logstash-image-Dockerfile# cat build-commond.sh
#!/bin/bash
#docker build -t harbor.magedu.local/baseimages/logstash:v7.12.1-sidecar .
#docker push harbor.magedu.local/baseimages/logstash:v7.12.1-sidecar
nerdctl build -t harbor.linuxarchitect.io/baseimages/logstash:v7.12.1-sidecar .
nerdctl push harbor.linuxarchitect.io/baseimages/logstash:v7.12.1-sidecar
```

```
root@192:/usr/local/src/20230528/elk-cases/2.sidecar-logstash/1.logstash-image-Dockerfile# cat logstash.conf
input {
  file {
    path => "/var/log/applog/catalina.out"
    start_position => "beginning"
    type => "app1-sidecar-catalina-log"
  }
  file {
    path => "/var/log/applog/localhost_access_log.*.txt"
    start_position => "beginning"
    type => "app1-sidecar-access-log"
  }
}

output {
  if [type] == "app1-sidecar-catalina-log" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384  # Kafka producer batch size in bytes (data sent to Kafka, not to ES)
      codec => "${CODEC}"
    }
  }

  if [type] == "app1-sidecar-access-log" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384
      codec => "${CODEC}"
    }
  }
}
```

2. Deploy the web service

```yaml
# root@192:/usr/local/src/20230528/elk-cases/2.sidecar-logstash# cat 2.tomcat-app1.yaml
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-tomcat-app1-deployment-label
  name: magedu-tomcat-app1-deployment # deployment name of the current version
  namespace: magedu
spec:
  replicas: 2
  selector:
    matchLabels:
      app: magedu-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: magedu-tomcat-app1-selector
    spec:
      containers:
      - name: magedu-tomcat-app1-container
        image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/tomcat-app1:v1
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
        volumeMounts:
        - name: applogs
          mountPath: /apps/tomcat/logs
        startupProbe:
          httpGet:
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5 # wait 5s before the first probe
          failureThreshold: 3 # consecutive failures before the probe counts as failed
          periodSeconds: 3 # probe interval
        readinessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
        livenessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
      - name: sidecar-container
        image: harbor.linuxarchitect.io/baseimages/logstash:v7.12.1-sidecar
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        env:
        - name: "KAFKA_SERVER"
          value: "192.168.110.208:9092,192.168.110.209:9092"
        - name: "TOPIC_ID"
          value: "tomcat-app1-topic"
        - name: "CODEC"
          value: "json"
        volumeMounts:
        - name: applogs
          mountPath: /var/log/applog
      volumes:
      - name: applogs # shared by the Tomcat container and the sidecar
        emptyDir: {}
```
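Each Tomcat pod now runs two containers, so a healthy pod shows READY 2/2. A sketch that checks the READY column for every pod of the Deployment; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Read "kubectl get pods --no-headers" output and succeed only if there is at
# least one pod and every pod has all of its containers ready (e.g. "2/2").
all_pods_ready() {
  awk '
    { split($2, r, "/"); total++; if (r[1] != r[2]) bad++ }
    END { exit (total == 0 || bad > 0) }'
}
```

Usage: kubectl -n magedu get pods -l app=magedu-tomcat-app1-selector --no-headers | all_pods_ready && echo ok. The sidecar's own output is visible with kubectl -n magedu logs <pod-name> -c sidecar-container.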

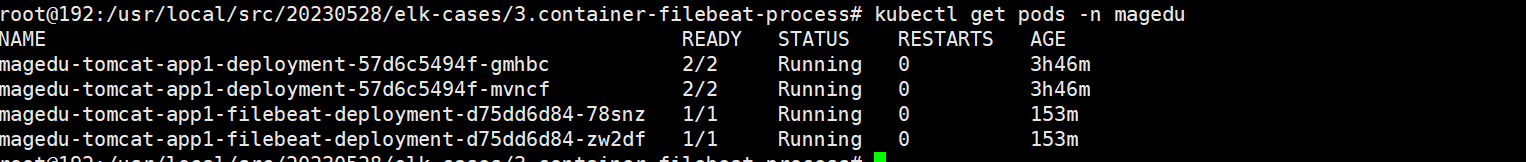

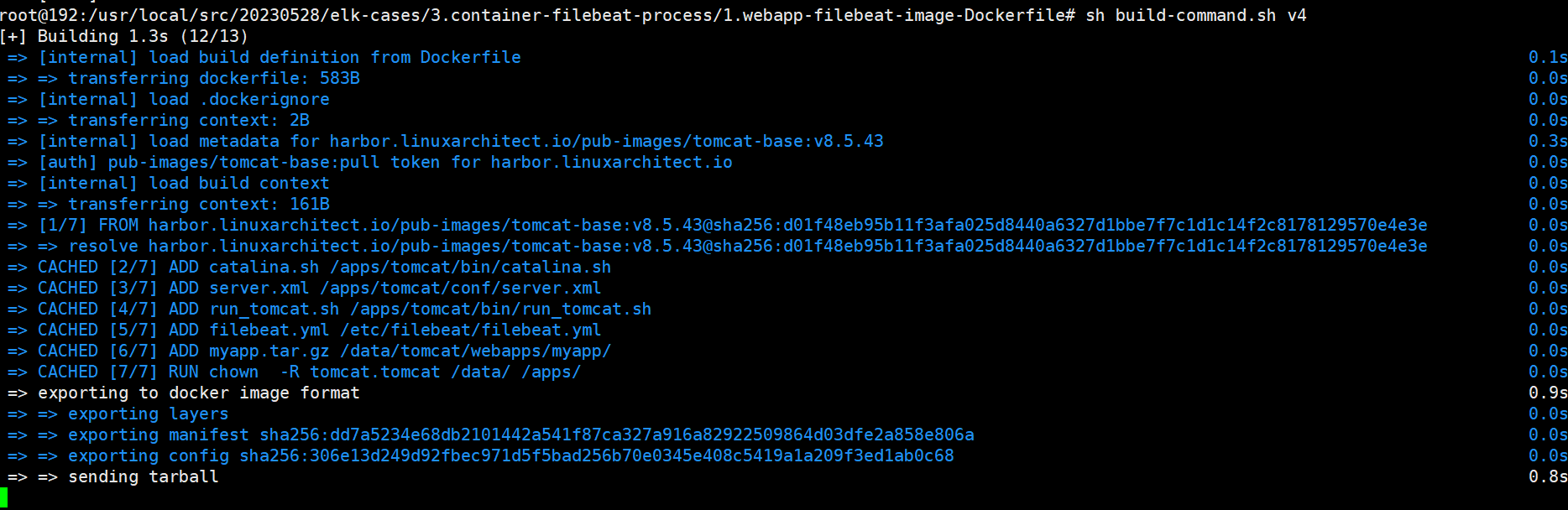

Log example 3: a log collection process built into the container

Build the image

Review the build script and the Dockerfile:

```
root@192:/usr/local/src/20230528/elk-cases/3.container-filebeat-process/1.webapp-filebeat-image-Dockerfile# cat build-command.sh
#!/bin/bash
TAG=$1
#docker build -t harbor.linuxarchitect.io/magedu/tomcat-app1:${TAG} .
#sleep 3
#docker push harbor.linuxarchitect.io/magedu/tomcat-app1:${TAG}
nerdctl build -t harbor.linuxarchitect.io/magedu/tomcat-app1:${TAG} .
nerdctl push harbor.linuxarchitect.io/magedu/tomcat-app1:${TAG}
```

```
root@192:/usr/local/src/20230528/elk-cases/3.container-filebeat-process/1.webapp-filebeat-image-Dockerfile# cat Dockerfile
#tomcat web1
FROM harbor.linuxarchitect.io/pub-images/tomcat-base:v8.5.43
ADD catalina.sh /apps/tomcat/bin/catalina.sh
ADD server.xml /apps/tomcat/conf/server.xml
#ADD myapp/* /data/tomcat/webapps/myapp/
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
ADD filebeat.yml /etc/filebeat/filebeat.yml
ADD myapp.tar.gz /data/tomcat/webapps/myapp/
RUN chown -R tomcat:tomcat /data/ /apps/
#ADD filebeat-7.5.1-x86_64.rpm /tmp/
#RUN cd /tmp && yum localinstall -y filebeat-7.5.1-x86_64.rpm
EXPOSE 8080 8443
CMD ["/apps/tomcat/bin/run_tomcat.sh"]
```
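The Dockerfile starts /apps/tomcat/bin/run_tomcat.sh, but the script itself is not shown. Because Filebeat and Tomcat share one container in this approach, the start script has to launch both. A hypothetical sketch, assuming Filebeat is already installed in the base image with filebeat on PATH; FILEBEAT_BIN and CATALINA_SH are made-up overrides, and the script runs both processes as whatever user the container starts with.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of run_tomcat.sh (the original script is not shown).
start_services() {
  local filebeat="${FILEBEAT_BIN:-filebeat}"
  local catalina="${CATALINA_SH:-/apps/tomcat/bin/catalina.sh}"

  # Filebeat in the background: -e logs to stderr, -c points at the config
  # that the Dockerfile copies to /etc/filebeat/filebeat.yml.
  "$filebeat" -e -c /etc/filebeat/filebeat.yml &

  # Tomcat in the foreground ("catalina.sh run"), replacing this shell so the
  # container lives exactly as long as Tomcat does.
  exec "$catalina" run
}
```

run_tomcat.sh would define the function and end with start_services. Since Filebeat runs in the background, the container is not restarted if only Filebeat dies; Tomcat's exit is what ends the container.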

Review the Filebeat configuration file added to the image:

```yaml
# root@192:/usr/local/src/20230528/elk-cases/3.container-filebeat-process/1.webapp-filebeat-image-Dockerfile# cat filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /apps/tomcat/logs/catalina.out
  fields:
    type: filebeat-tomcat-catalina
- type: log
  enabled: true
  paths:
    - /apps/tomcat/logs/localhost_access_log.*.txt
  fields:
    type: filebeat-tomcat-accesslog
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 1
setup.kibana:
output.kafka:
  hosts: ["192.168.110.208:9092","192.168.110.209:9092"]
  required_acks: 1
  topic: "filebeat-magedu-app1"
  compression: gzip
  max_message_bytes: 1000000
```

Build and push the image:

```
sh build-command.sh v4
```

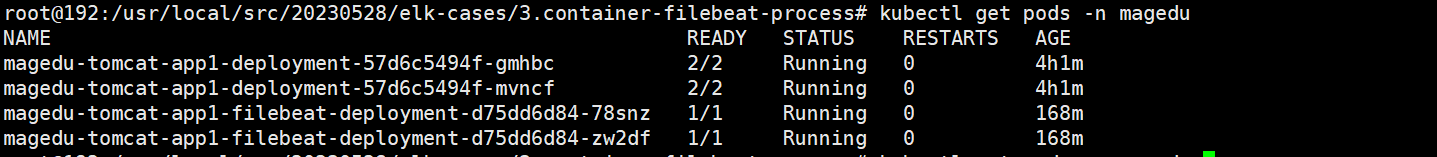

Review the Tomcat Deployment YAML:

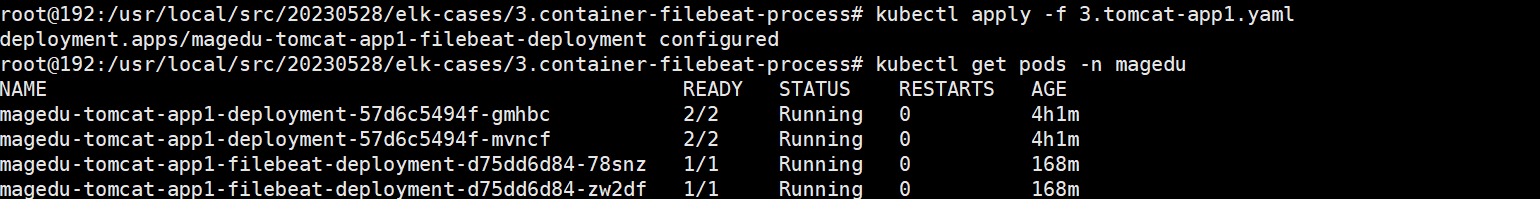

```yaml
# root@192:/usr/local/src/20230528/elk-cases/3.container-filebeat-process# cat 3.tomcat-app1.yaml
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-tomcat-app1-filebeat-deployment-label
  name: magedu-tomcat-app1-filebeat-deployment
  namespace: magedu
spec:
  replicas: 2
  selector:
    matchLabels:
      app: magedu-tomcat-app1-filebeat-selector
  template:
    metadata:
      labels:
        app: magedu-tomcat-app1-filebeat-selector
    spec:
      containers:
      - name: magedu-tomcat-app1-filebeat-container
        image: harbor.linuxarchitect.io/magedu/tomcat-app1:v4
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
```

Review the Logstash configuration file (logstash.conf), which now consumes all three topics:

```
input {
  kafka {
    bootstrap_servers => "192.168.110.208:9092,192.168.110.209:9092"
    topics => ["jsonfile-log-topic","tomcat-app1-topic","filebeat-magedu-app1"]
    codec => "json"
  }
}

output {
  if [fields][type] == "filebeat-tomcat-catalina" {
    elasticsearch {
      hosts => ["192.168.110.208:9200","192.168.110.209:9200"]
      index => "filebeat-tomcat-catalina-%{+YYYY.MM.dd}"
    }
  }

  if [fields][type] == "filebeat-tomcat-accesslog" {
    elasticsearch {
      hosts => ["192.168.110.208:9200","192.168.110.209:9200"]
      index => "filebeat-tomcat-accesslog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "app1-sidecar-access-log" {
    elasticsearch {
      hosts => ["192.168.110.208:9200","192.168.110.209:9200"]
      index => "sidecar-app1-accesslog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "app1-sidecar-catalina-log" {
    elasticsearch {
      hosts => ["192.168.110.208:9200","192.168.110.209:9200"]
      index => "sidecar-app1-catalinalog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "jsonfile-daemonset-applog" {
    elasticsearch {
      hosts => ["192.168.110.208:9200","192.168.110.209:9200"]
      index => "jsonfile-daemonset-applog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "jsonfile-daemonset-syslog" {
    elasticsearch {
      hosts => ["192.168.110.208:9200","192.168.110.209:9200"]
      index => "jsonfile-daemonset-syslog-%{+YYYY.MM.dd}"
    }
  }
}
```

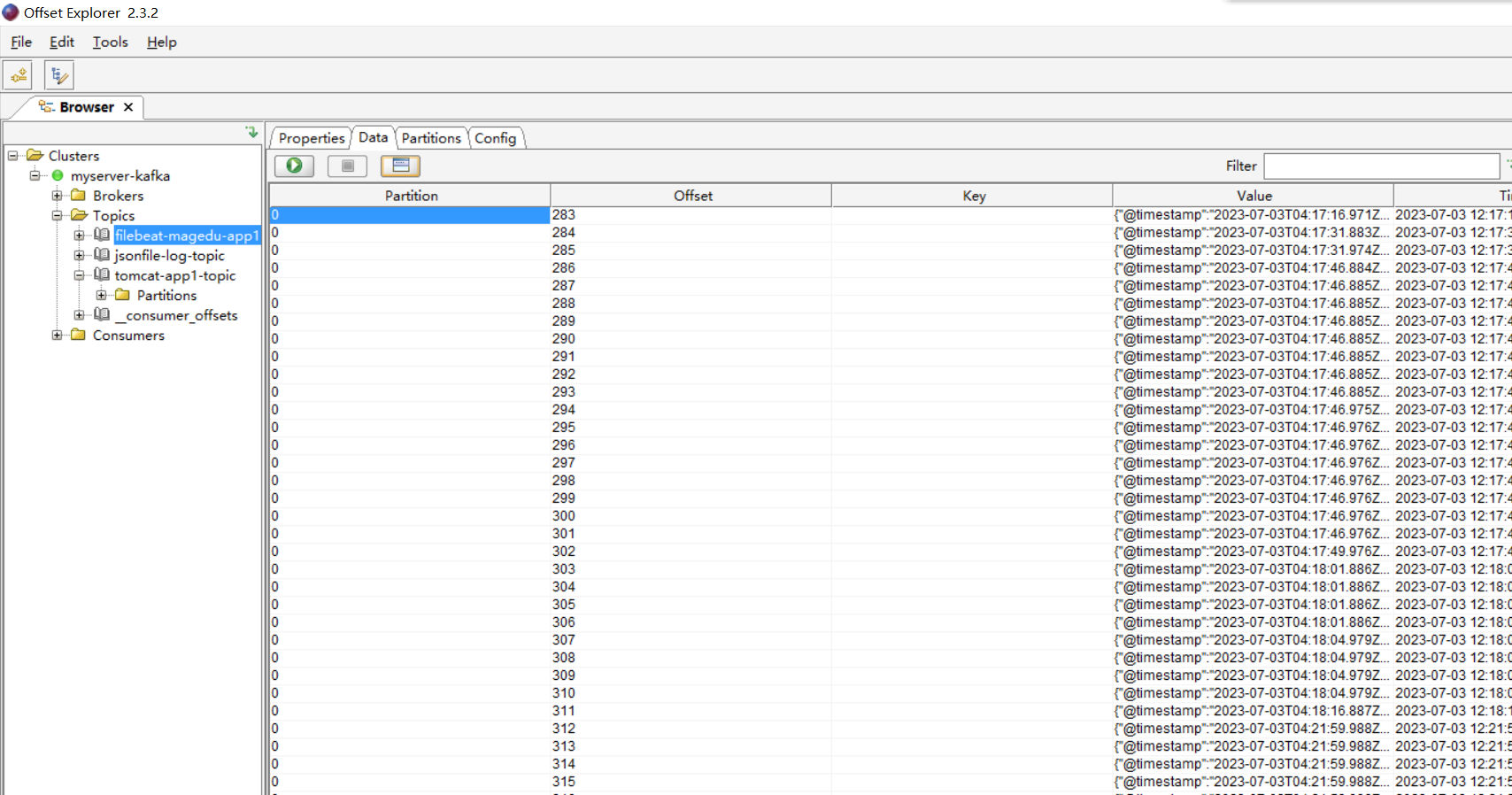

Check Kafka:

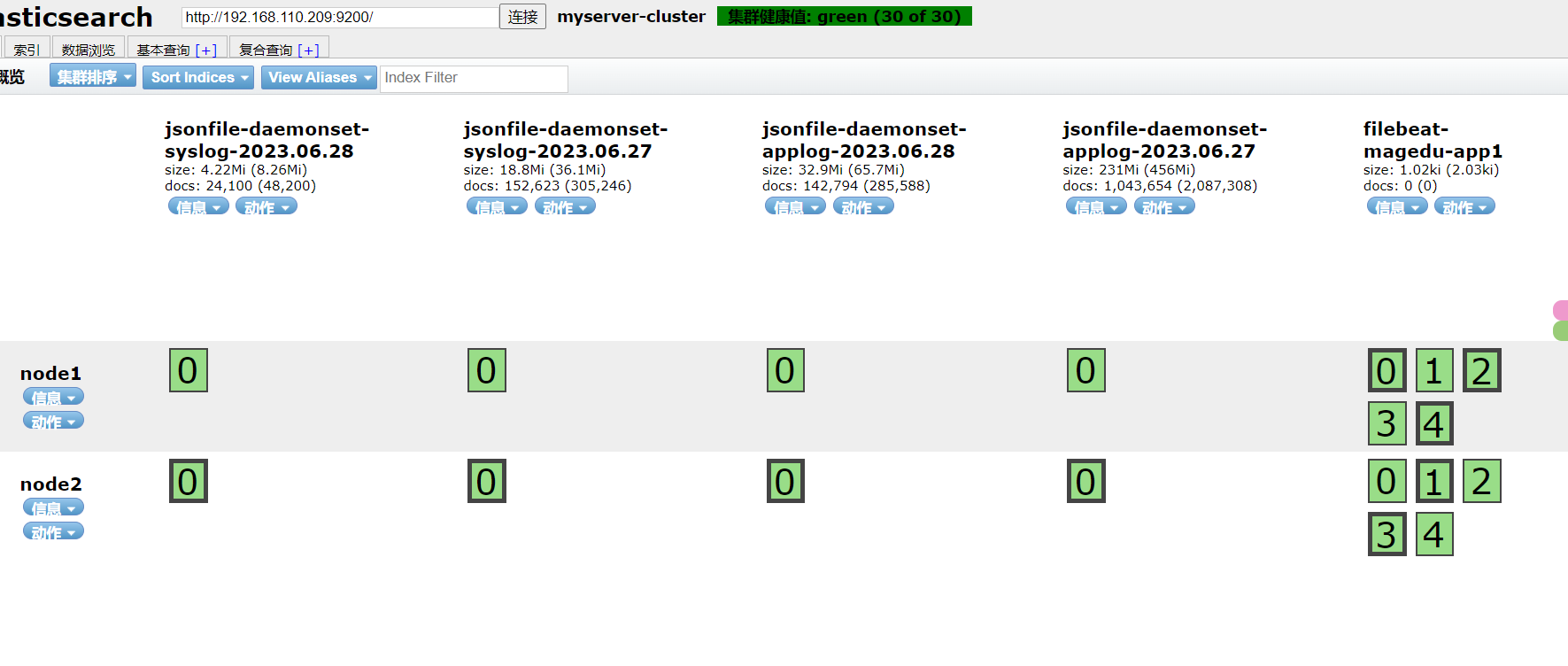

Check Elasticsearch:
