07-GoogLenet 图像分类

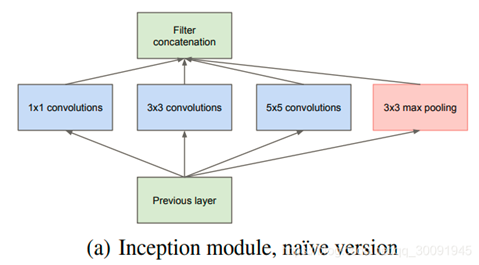

显然从上图中可以看出,原始Inception 结构采用 1 × 1、 3 × 3和 5 × 5三种卷积核的卷积层进行并行提取特征,这可以加大网络模型的宽度,不同大小的卷积核也就意味着原始Inception 结构可以获取到不同大小的感受野,上图中的最后拼接就是将不同尺度特征进行深度融合。

同时在原始Inception 结构之所以卷积核大小采用1、3和5,主要是为了方便对齐。设定卷积步长stride=1之后,只要分别设定pad=0、1、2,那么卷积之后便可以得到相同维度的特征,然后这些特征就可以直接深度融合了。

最后文章说很多地方都表明pooling挺有效,所以原始Inception结构里面也加入了最大池化层来降低网络模型参数。特别重要的是网络越到后面,特征越抽象,而且每个特征所涉及的感受野也更大了,因此随着层数的增加,GoogLeNet中3x3和5x5卷积的比例也要增加。

但是原始Inception结构中 5 × 5 、卷积核仍然会带来巨大的计算量。降低 5 × 5 卷积核带来的计算量,GoogLeNet中借鉴了NIN(Network in Network)的思想使用 1 × 1 卷积层与 5 × 5卷积层相结合来实现参数降维。

对于 1 × 1卷积层与 5 × 5卷积层实现参数降维,在这里也举一个简单的例子进行说明。假如上一层的输出为 100 × 100 × 128,经过具有 256个输出的5×5卷积层之后(stride=1,pad=2),输出数据为 100 × 100 × 256。其中,那么卷积层的参数为 128 × 5 × 5 × 256。此时如果上一层输出先经过具有 32个输出的 1 × 1卷积层,再经过具有 256个输出的 5 × 5卷积层,那么最终的输出数据仍为为 100 × 100 × 256,但卷积参数量已经减少为 128 × 1 × 1 × 32 + 32 × 5 × 5 × 256,相比之下参数大约减少了4倍。

因此在 3 × 3和 5 × 5卷积层之前加入合适的 1 × 1卷积层可以在一定程度上减少模型参数,那么在GoogLeNet中基础Inception结构也就做出了相应的改进,改进后的Inception结构如下图所示。

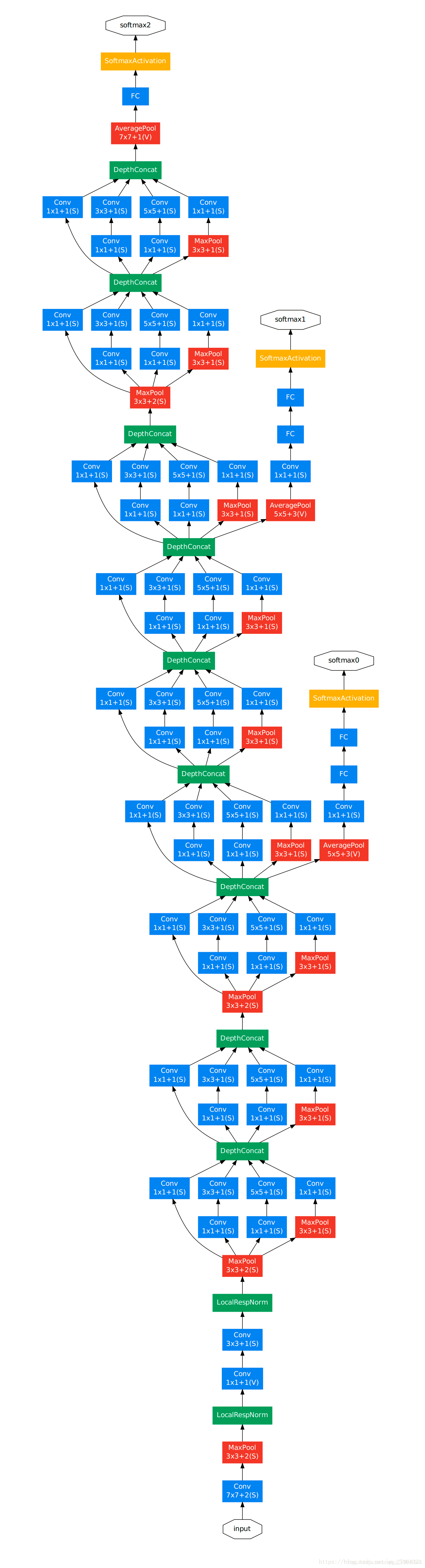

GoogLeNet的整体网络架构如下:

参考:https://blog.csdn.net/qq_30091945/article/details/105128249

GoogLenet的pytorch实现:

1 import torch 2 import torch.nn as nn 3 import torch.nn.functional as F 4 5 class BasicConv2d(nn.Module): 6 def __init__(self,in_channels, out_channels, kernel, stride=1, padding=0): 7 super(BasicConv2d, self).__init__() 8 self.conv = nn.Conv2d(in_channels, out_channels, 9 kernel_size=kernel, stride=stride, padding=padding, 10 bias=False) 11 self.bn = nn.BatchNorm2d(out_channels, eps=0.001) 12 13 def forward(self, x): 14 x = self.conv(x) 15 x = self.bn(x) 16 return F.relu(x, inplace=True) 17 18 ''' 19 in_channels 输入数据的通道 20 out_channels_1x1 1*1卷积深度 21 out_channels_1x1_3 3*3前面的1*1卷积深度 22 out_channels_3x3 3*3卷积深度 23 out_channels_1x1_5 5*5前面的1*1卷积深度 24 out_channels_5x5 5*5卷积深度 25 out_channels_pool 池化后面的1*1卷积深度 26 ''' 27 class Inception(nn.Module): 28 def __init__(self, in_channels, out_channels_1x1, 29 out_channels_1x1_3, out_channels_3x3, 30 out_channels_1x1_5, out_channels_5x5, 31 out_channels_pool ): 32 super(Inception, self).__init__() 33 ##第一条线 34 self.branch1x1 = BasicConv2d(in_channels, out_channels_1x1, 1) 35 36 ##第二条线 37 self.branch3x3 = nn.Sequential( 38 BasicConv2d(in_channels, out_channels_1x1_3, 1), 39 BasicConv2d(out_channels_1x1_3, out_channels_3x3, 3, 1, 1) 40 ) 41 42 ##第三条线 43 self.branch5x5 = nn.Sequential( 44 BasicConv2d(in_channels, out_channels_1x1_5, 1), 45 BasicConv2d(out_channels_1x1_5, out_channels_5x5, 5, 1, 2) 46 ) 47 48 ##第四条线 49 self.branch_pool = nn.Sequential( 50 nn.MaxPool2d(kernel_size=3, stride=1, padding=1), 51 BasicConv2d(in_channels, out_channels_pool, 1) 52 ) 53 54 def forward(self, x): 55 branch1x1 = self.branch1x1(x) 56 57 branch3x3 = self.branch3x3(x) 58 59 branch5x5 = self.branch5x5(x) 60 61 branch_pool = self.branch_pool(x) 62 63 output = [branch1x1, branch3x3, branch5x5, branch_pool] 64 return torch.cat(output, 1) 65 66 class GoogLeNet(nn.Module): 67 def __init__(self, in_channels=3, num_class=10): 68 super(GoogLeNet, self).__init__() 69 ##第 1 个模块 70 self.block1 = nn.Sequential( 71 nn.Conv2d(in_channels, 64, 7, 2, 3), 72 nn.MaxPool2d(3, 2, 1) 73 ) 74 ##第 2 个模块 75 self.block2 = nn.Sequential( 76 nn.Conv2d(64, 192, 3, 1, 1), 77 nn.Conv2d(192, 192, 3, 1, 1), 78 nn.MaxPool2d(3, 2, 1) 79 ) 80 ##第 3 个模块 81 self.block3 = nn.Sequential( 82 Inception(192, 64, 96, 128, 16, 32, 32), 83 Inception(256, 128, 128, 192, 32, 96, 64), 84 nn.MaxPool2d(3, 2, 1) 85 ) 86 ##第 4 个模块 87 self.block4 = nn.Sequential( 88 Inception(480, 192, 96, 208, 16, 48, 64), 89 Inception(512, 160, 112, 224, 24, 64, 64), #这里究极体会输出 90 Inception(512, 128, 128, 256, 24, 64, 64), 91 Inception(512, 112, 144, 288, 32, 64, 64), 92 Inception(528, 256, 160, 320, 32, 128, 128), #这里究极体会输出 93 nn.MaxPool2d(3, 2, 1) 94 ) 95 ##第 5 个模块 96 self.block5 = nn.Sequential( 97 Inception(832, 256, 160, 320, 32, 128, 128), 98 Inception(832, 384, 192, 384, 48, 128, 128), 99 nn.AvgPool2d(7, 1) 100 ) 101 self.classifier = nn.Sequential( 102 nn.Dropout(0.4), 103 nn.Linear(1024, num_class), 104 # nn.Sigmoid(1024,out_channels) 105 ) 106 107 def forward(self, x): 108 x = self.block1(x) 109 x = self.block2(x) 110 x = self.block3(x) 111 x = self.block4(x) 112 x = self.block5(x) 113 114 x = torch.reshape(x, (x.shape[0], -1)) 115 x = self.classifier(x) 116 117 return x

classfyNet_main.py

1 import torch 2 from torch.utils.data import DataLoader 3 from torch import nn, optim 4 from torchvision import datasets, transforms 5 from torchvision.transforms.functional import InterpolationMode 6 7 from matplotlib import pyplot as plt 8 9 10 import time 11 12 from Lenet5 import Lenet5_new 13 from Resnet18 import ResNet18,ResNet18_new 14 from AlexNet import AlexNet 15 from Vgg16 import VGGNet16 16 from Densenet import DenseNet121, DenseNet169, DenseNet201, DenseNet264 17 18 from NIN import NIN_Net 19 from GoogleNet import GoogLeNet 20 21 def main(): 22 23 print("Load datasets...") 24 25 # transforms.RandomHorizontalFlip(p=0.5)---以0.5的概率对图片做水平横向翻转 26 # transforms.ToTensor()---shape从(H,W,C)->(C,H,W), 每个像素点从(0-255)映射到(0-1):直接除以255 27 # transforms.Normalize---先将输入归一化到(0,1),像素点通过"(x-mean)/std",将每个元素分布到(-1,1) 28 transform_train = transforms.Compose([ 29 transforms.Resize((224, 224), interpolation=InterpolationMode.BICUBIC), 30 # transforms.RandomCrop(32, padding=4), # 先四周填充0,在吧图像随机裁剪成32*32 31 transforms.RandomHorizontalFlip(p=0.5), 32 transforms.ToTensor(), 33 transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)) 34 ]) 35 36 transform_test = transforms.Compose([ 37 transforms.Resize((224, 224), interpolation=InterpolationMode.BICUBIC), 38 # transforms.RandomCrop(32, padding=4), # 先四周填充0,在吧图像随机裁剪成32*32 39 transforms.ToTensor(), 40 transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)) 41 ]) 42 43 # 内置函数下载数据集 44 train_dataset = datasets.CIFAR10(root="./data/Cifar10/", train=True, 45 transform = transform_train, 46 download=True) 47 test_dataset = datasets.CIFAR10(root = "./data/Cifar10/", 48 train = False, 49 transform = transform_test, 50 download=True) 51 52 print(len(train_dataset), len(test_dataset)) 53 54 Batch_size = 64 55 train_loader = DataLoader(train_dataset, batch_size=Batch_size, shuffle = True, num_workers=4) 56 test_loader = DataLoader(test_dataset, batch_size = Batch_size, shuffle = False, num_workers=4) 57 58 # 设置CUDA 59 device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu") 60 61 # 初始化模型 62 # 直接更换模型就行,其他无需操作 63 # model = Lenet5_new().to(device) 64 # model = ResNet18().to(device) 65 # model = ResNet18_new().to(device) 66 # model = VGGNet16().to(device) 67 # model = DenseNet121().to(device) 68 # model = DenseNet169().to(device) 69 70 # model = NIN_Net().to(device) 71 72 model = GoogLeNet().to(device) 73 74 # model = AlexNet(num_classes=10, init_weights=True).to(device) 75 print(" GoogLeNet train...") 76 77 # 构造损失函数和优化器 78 criterion = nn.CrossEntropyLoss() # 多分类softmax构造损失 79 # opt = optim.SGD(model.parameters(), lr=0.01, momentum=0.8, weight_decay=0.001) 80 opt = optim.SGD(model.parameters(), lr=0.01, momentum=0.9, weight_decay=0.0005) 81 82 # 动态更新学习率 ------每隔step_size : lr = lr * gamma 83 schedule = optim.lr_scheduler.StepLR(opt, step_size=10, gamma=0.6, last_epoch=-1) 84 85 # 开始训练 86 print("Start Train...") 87 88 epochs = 100 89 90 loss_list = [] 91 train_acc_list =[] 92 test_acc_list = [] 93 epochs_list = [] 94 95 for epoch in range(0, epochs): 96 97 start = time.time() 98 99 model.train() 100 101 running_loss = 0.0 102 batch_num = 0 103 104 for i, (inputs, labels) in enumerate(train_loader): 105 106 inputs, labels = inputs.to(device), labels.to(device) 107 108 # 将数据送入模型训练 109 outputs = model(inputs) 110 # 计算损失 111 loss = criterion(outputs, labels).to(device) 112 113 # 重置梯度 114 opt.zero_grad() 115 # 计算梯度,反向传播 116 loss.backward() 117 # 根据反向传播的梯度值优化更新参数 118 opt.step() 119 120 # 100个batch的 loss 之和 121 running_loss += loss.item() 122 # loss_list.append(loss.item()) 123 batch_num+=1 124 125 126 epochs_list.append(epoch) 127 128 # 每一轮结束输出一下当前的学习率 lr 129 lr_1 = opt.param_groups[0]['lr'] 130 print("learn_rate:%.15f" % lr_1) 131 schedule.step() 132 133 end = time.time() 134 print('epoch = %d/100, batch_num = %d, loss = %.6f, time = %.3f' % (epoch+1, batch_num, running_loss/batch_num, end-start)) 135 running_loss=0.0 136 137 # 每个epoch训练结束,都进行一次测试验证 138 model.eval() 139 train_correct = 0.0 140 train_total = 0 141 142 test_correct = 0.0 143 test_total = 0 144 145 # 训练模式不需要反向传播更新梯度 146 with torch.no_grad(): 147 148 # print("=======================train=======================") 149 for inputs, labels in train_loader: 150 inputs, labels = inputs.to(device), labels.to(device) 151 outputs = model(inputs) 152 153 pred = outputs.argmax(dim=1) # 返回每一行中最大值元素索引 154 train_total += inputs.size(0) 155 train_correct += torch.eq(pred, labels).sum().item() 156 157 158 # print("=======================test=======================") 159 for inputs, labels in test_loader: 160 inputs, labels = inputs.to(device), labels.to(device) 161 outputs = model(inputs) 162 163 pred = outputs.argmax(dim=1) # 返回每一行中最大值元素索引 164 test_total += inputs.size(0) 165 test_correct += torch.eq(pred, labels).sum().item() 166 167 print("train_total = %d, Accuracy = %.5f %%, test_total= %d, Accuracy = %.5f %%" %(train_total, 100 * train_correct / train_total, test_total, 100 * test_correct / test_total)) 168 169 train_acc_list.append(100 * train_correct / train_total) 170 test_acc_list.append(100 * test_correct / test_total) 171 172 # print("Accuracy of the network on the 10000 test images:%.5f %%" % (100 * test_correct / test_total)) 173 # print("===============================================") 174 175 fig = plt.figure(figsize=(4, 4)) 176 177 plt.plot(epochs_list, train_acc_list, label='train_acc_list') 178 plt.plot(epochs_list, test_acc_list, label='test_acc_list') 179 plt.legend() 180 plt.title("train_test_acc") 181 plt.savefig('GoogLeNet_acc_epoch_{:04d}.png'.format(epochs)) 182 plt.close() 183 184 if __name__ == "__main__": 185 186 main()

loss和精度acc

1 torch.Size([64, 10]) 2 PyTorch Version: 1.12.1+cu102 3 Torchvision Version: 0.13.1+cu102 4 Load datasets... 5 Files already downloaded and verified 6 Files already downloaded and verified 7 50000 10000 8 NIN_Net train... 9 Start Train... 10 learn_rate:0.010000000000000 11 epoch = 1/100, batch_num = 782, loss = 1.370396, time = 95.395 12 train_total = 50000, Accuracy = 63.17600 %, test_total= 10000, Accuracy = 62.50000 % 13 learn_rate:0.010000000000000 14 epoch = 2/100, batch_num = 782, loss = 0.789557, time = 94.268 15 train_total = 50000, Accuracy = 75.33600 %, test_total= 10000, Accuracy = 73.89000 % 16 learn_rate:0.010000000000000 17 epoch = 3/100, batch_num = 782, loss = 0.581644, time = 95.266 18 train_total = 50000, Accuracy = 82.81800 %, test_total= 10000, Accuracy = 79.88000 % 19 learn_rate:0.010000000000000 20 epoch = 4/100, batch_num = 782, loss = 0.470994, time = 93.822 21 train_total = 50000, Accuracy = 84.31000 %, test_total= 10000, Accuracy = 81.43000 % 22 learn_rate:0.010000000000000 23 epoch = 5/100, batch_num = 782, loss = 0.405856, time = 94.878 24 train_total = 50000, Accuracy = 84.01400 %, test_total= 10000, Accuracy = 80.49000 % 25 learn_rate:0.010000000000000 26 epoch = 6/100, batch_num = 782, loss = 0.357098, time = 94.099 27 train_total = 50000, Accuracy = 90.65000 %, test_total= 10000, Accuracy = 86.07000 % 28 learn_rate:0.010000000000000 29 epoch = 7/100, batch_num = 782, loss = 0.308426, time = 95.302 30 train_total = 50000, Accuracy = 86.57200 %, test_total= 10000, Accuracy = 82.14000 % 31 learn_rate:0.010000000000000 32 epoch = 8/100, batch_num = 782, loss = 0.276895, time = 95.147 33 train_total = 50000, Accuracy = 90.81600 %, test_total= 10000, Accuracy = 85.88000 % 34 learn_rate:0.010000000000000 35 epoch = 9/100, batch_num = 782, loss = 0.244451, time = 95.211 36 train_total = 50000, Accuracy = 90.74600 %, test_total= 10000, Accuracy = 84.79000 % 37 learn_rate:0.010000000000000 38 epoch = 10/100, batch_num = 782, loss = 0.226096, time = 96.584 39 train_total = 50000, Accuracy = 93.82000 %, test_total= 10000, Accuracy = 87.90000 % 40 learn_rate:0.006000000000000 41 epoch = 11/100, batch_num = 782, loss = 0.135348, time = 96.430 42 train_total = 50000, Accuracy = 97.40800 %, test_total= 10000, Accuracy = 90.50000 % 43 learn_rate:0.006000000000000 44 epoch = 12/100, batch_num = 782, loss = 0.105362, time = 95.864 45 train_total = 50000, Accuracy = 96.17800 %, test_total= 10000, Accuracy = 89.17000 % 46 learn_rate:0.006000000000000 47 epoch = 13/100, batch_num = 782, loss = 0.095123, time = 96.124 48 train_total = 50000, Accuracy = 96.89200 %, test_total= 10000, Accuracy = 88.89000 % 49 learn_rate:0.006000000000000 50 epoch = 14/100, batch_num = 782, loss = 0.087209, time = 96.014 51 train_total = 50000, Accuracy = 98.14000 %, test_total= 10000, Accuracy = 90.53000 % 52 learn_rate:0.006000000000000 53 epoch = 15/100, batch_num = 782, loss = 0.080366, time = 95.506 54 train_total = 50000, Accuracy = 98.32600 %, test_total= 10000, Accuracy = 90.32000 % 55 learn_rate:0.006000000000000 56 epoch = 16/100, batch_num = 782, loss = 0.078542, time = 96.306 57 train_total = 50000, Accuracy = 97.61600 %, test_total= 10000, Accuracy = 89.51000 % 58 learn_rate:0.006000000000000 59 epoch = 17/100, batch_num = 782, loss = 0.070368, time = 95.010 60 train_total = 50000, Accuracy = 98.47200 %, test_total= 10000, Accuracy = 90.23000 % 61 learn_rate:0.006000000000000 62 epoch = 18/100, batch_num = 782, loss = 0.073643, time = 96.235 63 train_total = 50000, Accuracy = 97.79400 %, test_total= 10000, Accuracy = 89.39000 % 64 learn_rate:0.006000000000000 65 epoch = 19/100, batch_num = 782, loss = 0.072382, time = 95.035 66 train_total = 50000, Accuracy = 98.30000 %, test_total= 10000, Accuracy = 89.65000 % 67 learn_rate:0.006000000000000 68 epoch = 20/100, batch_num = 782, loss = 0.061964, time = 95.757 69 train_total = 50000, Accuracy = 97.55800 %, test_total= 10000, Accuracy = 89.25000 % 70 learn_rate:0.003600000000000 71 epoch = 21/100, batch_num = 782, loss = 0.031406, time = 95.418 72 train_total = 50000, Accuracy = 99.81400 %, test_total= 10000, Accuracy = 92.06000 % 73 learn_rate:0.003600000000000 74 epoch = 22/100, batch_num = 782, loss = 0.016657, time = 95.774 75 train_total = 50000, Accuracy = 99.86000 %, test_total= 10000, Accuracy = 92.09000 % 76 learn_rate:0.003600000000000 77 epoch = 23/100, batch_num = 782, loss = 0.011798, time = 96.257 78 train_total = 50000, Accuracy = 99.95600 %, test_total= 10000, Accuracy = 92.47000 % 79 learn_rate:0.003600000000000 80 epoch = 24/100, batch_num = 782, loss = 0.008725, time = 96.043 81 train_total = 50000, Accuracy = 99.97800 %, test_total= 10000, Accuracy = 92.47000 % 82 learn_rate:0.003600000000000 83 epoch = 25/100, batch_num = 782, loss = 0.007397, time = 95.633 84 train_total = 50000, Accuracy = 99.97800 %, test_total= 10000, Accuracy = 92.49000 % 85 learn_rate:0.003600000000000 86 epoch = 26/100, batch_num = 782, loss = 0.007058, time = 95.648 87 train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 92.58000 % 88 learn_rate:0.003600000000000 89 epoch = 27/100, batch_num = 782, loss = 0.005411, time = 96.113 90 train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 92.51000 % 91 learn_rate:0.003600000000000 92 epoch = 28/100, batch_num = 782, loss = 0.004637, time = 95.960 93 train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 92.64000 % 94 learn_rate:0.003600000000000 95 epoch = 29/100, batch_num = 782, loss = 0.003989, time = 95.811 96 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.70000 % 97 learn_rate:0.003600000000000 98 epoch = 30/100, batch_num = 782, loss = 0.003333, time = 96.130 99 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.77000 % 100 learn_rate:0.002160000000000 101 epoch = 31/100, batch_num = 782, loss = 0.002816, time = 96.139 102 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.80000 % 103 learn_rate:0.002160000000000 104 epoch = 32/100, batch_num = 782, loss = 0.002434, time = 95.981 105 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.91000 % 106 learn_rate:0.002160000000000 107 epoch = 33/100, batch_num = 782, loss = 0.002460, time = 95.911 108 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.93000 % 109 learn_rate:0.002160000000000 110 epoch = 34/100, batch_num = 782, loss = 0.002303, time = 96.058 111 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.88000 % 112 learn_rate:0.002160000000000 113 epoch = 35/100, batch_num = 782, loss = 0.002129, time = 95.845 114 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.84000 % 115 learn_rate:0.002160000000000 116 epoch = 36/100, batch_num = 782, loss = 0.002029, time = 96.210 117 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.93000 % 118 learn_rate:0.002160000000000 119 epoch = 37/100, batch_num = 782, loss = 0.002060, time = 96.028 120 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.95000 % 121 learn_rate:0.002160000000000 122 epoch = 38/100, batch_num = 782, loss = 0.002066, time = 96.241 123 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.90000 % 124 learn_rate:0.002160000000000 125 epoch = 39/100, batch_num = 782, loss = 0.002101, time = 95.963 126 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.04000 % 127 learn_rate:0.002160000000000 128 epoch = 40/100, batch_num = 782, loss = 0.002091, time = 95.792 129 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.93000 % 130 learn_rate:0.001296000000000 131 epoch = 41/100, batch_num = 782, loss = 0.001967, time = 95.815 132 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.06000 % 133 learn_rate:0.001296000000000 134 epoch = 42/100, batch_num = 782, loss = 0.002068, time = 95.753 135 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.04000 % 136 learn_rate:0.001296000000000 137 epoch = 43/100, batch_num = 782, loss = 0.002134, time = 96.074 138 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.98000 % 139 learn_rate:0.001296000000000 140 epoch = 44/100, batch_num = 782, loss = 0.001972, time = 95.305 141 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.03000 % 142 learn_rate:0.001296000000000 143 epoch = 45/100, batch_num = 782, loss = 0.002112, time = 95.660 144 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.19000 % 145 learn_rate:0.001296000000000 146 epoch = 46/100, batch_num = 782, loss = 0.002134, time = 95.843 147 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.07000 % 148 learn_rate:0.001296000000000 149 epoch = 47/100, batch_num = 782, loss = 0.002034, time = 96.097 150 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.02000 % 151 learn_rate:0.001296000000000 152 epoch = 48/100, batch_num = 782, loss = 0.001948, time = 96.196 153 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.19000 % 154 learn_rate:0.001296000000000 155 epoch = 49/100, batch_num = 782, loss = 0.002036, time = 95.903 156 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.21000 % 157 learn_rate:0.001296000000000 158 epoch = 50/100, batch_num = 782, loss = 0.001898, time = 96.279 159 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.15000 % 160 learn_rate:0.000777600000000 161 epoch = 51/100, batch_num = 782, loss = 0.001916, time = 96.313 162 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.10000 % 163 learn_rate:0.000777600000000 164 epoch = 52/100, batch_num = 782, loss = 0.002036, time = 96.318 165 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.14000 % 166 learn_rate:0.000777600000000 167 epoch = 53/100, batch_num = 782, loss = 0.001904, time = 96.007 168 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.11000 % 169 learn_rate:0.000777600000000 170 epoch = 54/100, batch_num = 782, loss = 0.001932, time = 96.268 171 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.25000 % 172 learn_rate:0.000777600000000 173 epoch = 55/100, batch_num = 782, loss = 0.001854, time = 96.555 174 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.05000 % 175 learn_rate:0.000777600000000 176 epoch = 56/100, batch_num = 782, loss = 0.001827, time = 95.625 177 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.07000 % 178 learn_rate:0.000777600000000 179 epoch = 57/100, batch_num = 782, loss = 0.001922, time = 96.120 180 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.17000 % 181 learn_rate:0.000777600000000 182 epoch = 58/100, batch_num = 782, loss = 0.001896, time = 95.852 183 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.09000 % 184 learn_rate:0.000777600000000 185 epoch = 59/100, batch_num = 782, loss = 0.001902, time = 96.587 186 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.23000 % 187 learn_rate:0.000777600000000 188 epoch = 60/100, batch_num = 782, loss = 0.002246, time = 96.677 189 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.04000 % 190 learn_rate:0.000466560000000 191 epoch = 61/100, batch_num = 782, loss = 0.002110, time = 96.461 192 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.13000 % 193 learn_rate:0.000466560000000 194 epoch = 62/100, batch_num = 782, loss = 0.001956, time = 96.045 195 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.26000 % 196 learn_rate:0.000466560000000 197 epoch = 63/100, batch_num = 782, loss = 0.001929, time = 96.355 198 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.03000 % 199 learn_rate:0.000466560000000 200 epoch = 64/100, batch_num = 782, loss = 0.001950, time = 95.994 201 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.17000 % 202 learn_rate:0.000466560000000 203 epoch = 65/100, batch_num = 782, loss = 0.001885, time = 95.865 204 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.04000 % 205 learn_rate:0.000466560000000 206 epoch = 66/100, batch_num = 782, loss = 0.001948, time = 95.952 207 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.25000 % 208 learn_rate:0.000466560000000 209 epoch = 67/100, batch_num = 782, loss = 0.001930, time = 96.614 210 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.01000 % 211 learn_rate:0.000466560000000 212 epoch = 68/100, batch_num = 782, loss = 0.001885, time = 96.163 213 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.02000 % 214 learn_rate:0.000466560000000 215 epoch = 69/100, batch_num = 782, loss = 0.001973, time = 96.255 216 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.04000 % 217 learn_rate:0.000466560000000 218 epoch = 70/100, batch_num = 782, loss = 0.001952, time = 96.205 219 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.04000 % 220 learn_rate:0.000279936000000 221 epoch = 71/100, batch_num = 782, loss = 0.001946, time = 95.993 222 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.10000 % 223 learn_rate:0.000279936000000 224 epoch = 72/100, batch_num = 782, loss = 0.001898, time = 96.283 225 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.10000 % 226 learn_rate:0.000279936000000 227 epoch = 73/100, batch_num = 782, loss = 0.001921, time = 96.161 228 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.16000 % 229 learn_rate:0.000279936000000 230 epoch = 74/100, batch_num = 782, loss = 0.001922, time = 96.465 231 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.15000 % 232 learn_rate:0.000279936000000 233 epoch = 75/100, batch_num = 782, loss = 0.001906, time = 95.855 234 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.25000 % 235 learn_rate:0.000279936000000 236 epoch = 76/100, batch_num = 782, loss = 0.001935, time = 96.168 237 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.06000 % 238 learn_rate:0.000279936000000 239 epoch = 77/100, batch_num = 782, loss = 0.001922, time = 96.120 240 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.08000 % 241 learn_rate:0.000279936000000 242 epoch = 78/100, batch_num = 782, loss = 0.001993, time = 96.087 243 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.17000 % 244 learn_rate:0.000279936000000 245 epoch = 79/100, batch_num = 782, loss = 0.001924, time = 96.356 246 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.14000 % 247 learn_rate:0.000279936000000 248 epoch = 80/100, batch_num = 782, loss = 0.001958, time = 96.420 249 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.20000 % 250 learn_rate:0.000167961600000 251 epoch = 81/100, batch_num = 782, loss = 0.001943, time = 95.762 252 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.14000 % 253 learn_rate:0.000167961600000 254 epoch = 82/100, batch_num = 782, loss = 0.001855, time = 96.882 255 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.06000 % 256 learn_rate:0.000167961600000 257 epoch = 83/100, batch_num = 782, loss = 0.001985, time = 96.236 258 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.95000 % 259 learn_rate:0.000167961600000 260 epoch = 84/100, batch_num = 782, loss = 0.002065, time = 96.286 261 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.04000 % 262 learn_rate:0.000167961600000 263 epoch = 85/100, batch_num = 782, loss = 0.001957, time = 96.562 264 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.02000 % 265 learn_rate:0.000167961600000 266 epoch = 86/100, batch_num = 782, loss = 0.001996, time = 96.504 267 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.05000 % 268 learn_rate:0.000167961600000 269 epoch = 87/100, batch_num = 782, loss = 0.001853, time = 96.209 270 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.22000 % 271 learn_rate:0.000167961600000 272 epoch = 88/100, batch_num = 782, loss = 0.001897, time = 95.828 273 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.11000 % 274 learn_rate:0.000167961600000 275 epoch = 89/100, batch_num = 782, loss = 0.002014, time = 96.319 276 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.29000 % 277 learn_rate:0.000167961600000 278 epoch = 90/100, batch_num = 782, loss = 0.001906, time = 95.519 279 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.14000 % 280 learn_rate:0.000100776960000 281 epoch = 91/100, batch_num = 782, loss = 0.001962, time = 95.681 282 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.16000 % 283 learn_rate:0.000100776960000 284 epoch = 92/100, batch_num = 782, loss = 0.001853, time = 96.188 285 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.15000 % 286 learn_rate:0.000100776960000 287 epoch = 93/100, batch_num = 782, loss = 0.001917, time = 96.333 288 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.03000 % 289 learn_rate:0.000100776960000 290 epoch = 94/100, batch_num = 782, loss = 0.001926, time = 95.970 291 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.12000 % 292 learn_rate:0.000100776960000 293 epoch = 95/100, batch_num = 782, loss = 0.002016, time = 96.025 294 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.04000 % 295 learn_rate:0.000100776960000 296 epoch = 96/100, batch_num = 782, loss = 0.001895, time = 95.694 297 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.17000 % 298 learn_rate:0.000100776960000 299 epoch = 97/100, batch_num = 782, loss = 0.001929, time = 95.846 300 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.29000 % 301 learn_rate:0.000100776960000 302 epoch = 98/100, batch_num = 782, loss = 0.001896, time = 95.482 303 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.09000 % 304 learn_rate:0.000100776960000 305 epoch = 99/100, batch_num = 782, loss = 0.001956, time = 96.497 306 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.07000 % 307 learn_rate:0.000100776960000 308 epoch = 100/100, batch_num = 782, loss = 0.001868, time = 95.987 309 train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.00000 %

图 GoogLenet_acc_epoch_100

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 25岁的心里话

· 闲置电脑爆改个人服务器(超详细) #公网映射 #Vmware虚拟网络编辑器

· 零经验选手,Compose 一天开发一款小游戏!

· 通过 API 将Deepseek响应流式内容输出到前端

· 因为Apifox不支持离线,我果断选择了Apipost!