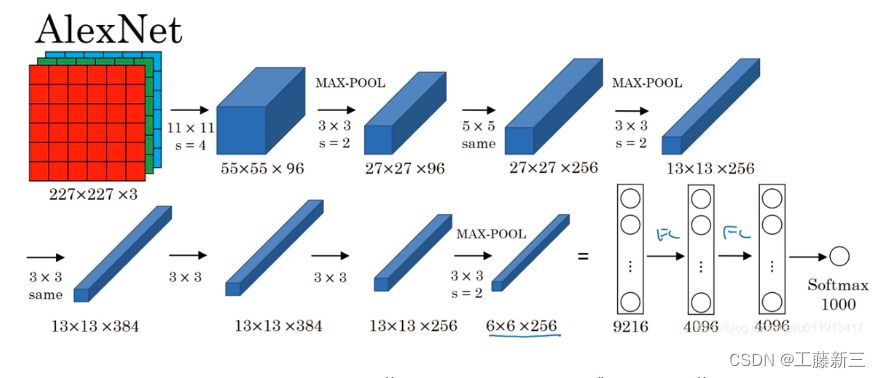

03 - AlexNet Image Classification

Figure 1. AlexNet network architecture

AlexNet implementation for the CIFAR-10 dataset (PyTorch):

    import torch
    import torch.nn as nn


    class AlexNet(nn.Module):
        def __init__(self, num_classes=1000, init_weights=False):
            super(AlexNet, self).__init__()

            # nn.Sequential bundles the layers into a single module
            self.features = nn.Sequential(
                nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2),   # in_channels=3, out_channels=48
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),

                nn.Conv2d(48, 128, kernel_size=5, padding=2),            # in_channels=48, out_channels=128
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),

                nn.Conv2d(128, 192, kernel_size=3, padding=1),           # in_channels=128, out_channels=192
                nn.ReLU(inplace=True),

                nn.Conv2d(192, 192, kernel_size=3, padding=1),           # in_channels=192, out_channels=192
                nn.ReLU(inplace=True),

                nn.Conv2d(192, 128, kernel_size=3, padding=1),           # in_channels=192, out_channels=128
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),
            )

            self.classifier = nn.Sequential(
                nn.Dropout(p=0.5),               # randomly zero activations with probability 0.5
                nn.Linear(128 * 6 * 6, 2048),
                nn.ReLU(inplace=True),
                nn.Dropout(p=0.5),               # randomly zero activations with probability 0.5
                nn.Linear(2048, 2048),
                nn.ReLU(inplace=True),
                nn.Linear(2048, num_classes),
            )
            if init_weights:
                self._initialize_weights()

        def forward(self, x):
            x = self.features(x)
            x = torch.flatten(x, start_dim=1)    # keep the batch dimension, flatten the rest
            x = self.classifier(x)
            return x

        def _initialize_weights(self):
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    # Kaiming (He) initialization, suited to ReLU activations
                    nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                    if m.bias is not None:
                        nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.constant_(m.bias, 0)
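The `128*6*6` input size of the first `nn.Linear` layer follows from the convolution and pooling arithmetic on a 224x224 input. The sketch below traces the spatial size through `self.features` using the usual output-size formula, floor((n + 2p - k) / s) + 1. The helper names `out_size` and `trace` are hypothetical and exist only for this illustration; they are not part of the model code.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a conv/pool layer (floor mode, dilation 1)."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding) for each spatial layer in self.features, in order
layers = [
    ("conv1", 11, 4, 2),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]


def trace(n):
    """Return the spatial size after every layer for an n x n input."""
    sizes = []
    for name, k, s, p in layers:
        n = out_size(n, k, s, p)
        sizes.append((name, n))
    return sizes


sizes = trace(224)
for name, n in sizes:
    print(f"{name}: {n}x{n}")

final = sizes[-1][1]
flat_features = 128 * final * final   # conv5 outputs 128 channels
print("flattened features:", flat_features)
```

With an unresized 32x32 CIFAR-10 image, the same arithmetic reaches 1x1 after `pool2`, leaving the last 3x3 pooling window nothing to cover. That is consistent with the training script resizing every image to 224x224.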

classfyNet_train.py

    import time

    import torch
    from torch import nn, optim
    from torch.utils.data import DataLoader
    from torchvision import datasets, transforms
    from torchvision.transforms.functional import InterpolationMode

    from matplotlib import pyplot as plt

    # Local modules (Lenet5 and Resnet18 are not shown here);
    # they are only needed if you swap the model below
    from Lenet5 import Lenet5_new
    from Resnet18 import ResNet18
    from AlexNet import AlexNet


    def main():

        print("Load datasets...")

        # transforms.RandomHorizontalFlip(p=0.5): flip the image horizontally with probability 0.5
        # transforms.ToTensor(): reshape (H, W, C) -> (C, H, W) and scale each pixel from 0-255 to 0-1 (divide by 255)
        # transforms.Normalize: standardize each channel of the 0-1 tensor with (x - mean) / std,
        #   which makes the values roughly zero-centered
        transform_train = transforms.Compose([
            transforms.Resize((224, 224), interpolation=InterpolationMode.BICUBIC),
            transforms.RandomHorizontalFlip(p=0.5),
            transforms.ToTensor(),
            transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
        ])

        transform_test = transforms.Compose([
            transforms.Resize((224, 224), interpolation=InterpolationMode.BICUBIC),
            transforms.ToTensor(),
            transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
        ])

        # Download the dataset with the built-in torchvision helper
        train_dataset = datasets.CIFAR10(root="./data/Cifar10/", train=True,
                                         transform=transform_train,
                                         download=True)
        test_dataset = datasets.CIFAR10(root="./data/Cifar10/",
                                        train=False,
                                        transform=transform_test,
                                        download=True)

        print(len(train_dataset), len(test_dataset))

        Batch_size = 128
        train_loader = DataLoader(train_dataset, batch_size=Batch_size, shuffle=True, num_workers=4)
        test_loader = DataLoader(test_dataset, batch_size=Batch_size, shuffle=False, num_workers=4)

        # Select the device (GPU if available)
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

        # Build the model
        # To try another network, just swap the model here; nothing else needs to change
        # model = Lenet5_new().to(device)
        # model = ResNet18().to(device)
        model = AlexNet(num_classes=10, init_weights=True).to(device)

        # Loss function and optimizer
        criterion = nn.CrossEntropyLoss()  # softmax cross-entropy for multi-class classification
        # opt = optim.SGD(model.parameters(), lr=0.01, momentum=0.8, weight_decay=0.001)
        opt = optim.SGD(model.parameters(), lr=0.01, momentum=0.9, weight_decay=0.0005)

        # Learning-rate schedule: every step_size epochs, lr = lr * gamma
        schedule = optim.lr_scheduler.StepLR(opt, step_size=10, gamma=0.6, last_epoch=-1)

        # Start training
        print("Start Train...")

        epochs = 100

        loss_list = []
        train_acc_list = []
        test_acc_list = []
        epochs_list = []

        for epoch in range(0, epochs):

            start = time.time()

            model.train()

            running_loss = 0.0
            batch_num = 0

            for i, (inputs, labels) in enumerate(train_loader):

                inputs, labels = inputs.to(device), labels.to(device)

                # Forward pass
                outputs = model(inputs)
                # Compute the loss (already on the same device as the outputs)
                loss = criterion(outputs, labels)

                # Reset the gradients
                opt.zero_grad()
                # Backpropagate to compute the gradients
                loss.backward()
                # Update the parameters from the gradients
                opt.step()

                # Accumulate the loss over all batches of this epoch
                running_loss += loss.item()
                # loss_list.append(loss.item())
                batch_num += 1

            epochs_list.append(epoch)

            # Print the learning rate used in this epoch, then advance the schedule
            lr_1 = opt.param_groups[0]['lr']
            print("learn_rate:%.15f" % lr_1)
            schedule.step()

            end = time.time()
            print('epoch = %d/%d, batch_num = %d, loss = %.6f, time = %.3f' % (epoch+1, epochs, batch_num, running_loss/batch_num, end-start))
            running_loss = 0.0

            # After every epoch, evaluate on both the training set and the test set
            model.eval()
            train_correct = 0.0
            train_total = 0

            test_correct = 0.0
            test_total = 0

            # Evaluation needs no gradients, so disable gradient tracking
            with torch.no_grad():

                # print("=======================train=======================")
                for inputs, labels in train_loader:
                    inputs, labels = inputs.to(device), labels.to(device)
                    outputs = model(inputs)

                    pred = outputs.argmax(dim=1)  # index of the largest logit in each row
                    train_total += inputs.size(0)
                    train_correct += torch.eq(pred, labels).sum().item()

                # print("=======================test=======================")
                for inputs, labels in test_loader:
                    inputs, labels = inputs.to(device), labels.to(device)
                    outputs = model(inputs)

                    pred = outputs.argmax(dim=1)  # index of the largest logit in each row
                    test_total += inputs.size(0)
                    test_correct += torch.eq(pred, labels).sum().item()

            print("train_total = %d, Accuracy = %.5f %%, test_total= %d, Accuracy = %.5f %%" % (train_total, 100 * train_correct / train_total, test_total, 100 * test_correct / test_total))

            train_acc_list.append(100 * train_correct / train_total)
            test_acc_list.append(100 * test_correct / test_total)

            # print("Accuracy of the network on the 10000 test images:%.5f %%" % (100 * test_correct / test_total))
            # print("===============================================")

        fig = plt.figure(figsize=(4, 4))

        plt.plot(epochs_list, train_acc_list, label='train_acc_list')
        plt.plot(epochs_list, test_acc_list, label='test_acc_list')
        plt.legend()
        plt.title("train_test_acc")
        # File name matches the AlexNet_acc_epoch_0100 figure
        plt.savefig('AlexNet_acc_epoch_{:04d}.png'.format(epochs))
        plt.close()


    if __name__ == "__main__":

        main()
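The script's `StepLR(opt, step_size=10, gamma=0.6)` multiplies the learning rate by 0.6 every 10 epochs. As a rough sketch, the same schedule can be written in closed form without PyTorch. The function `step_lr` below is a hypothetical helper for illustration and not a PyTorch API; `epoch` is 0-based, as in the training loop.

```python
def step_lr(base_lr, gamma, step_size, epoch):
    """Learning rate in effect during the given 0-based epoch (StepLR-style decay)."""
    return base_lr * gamma ** (epoch // step_size)


# Values taken from the training script: lr=0.01, step_size=10, gamma=0.6
for epoch in (0, 9, 10, 20, 50, 90, 99):
    lr = step_lr(0.01, 0.6, 10, epoch)
    print(f"epoch {epoch + 1:3d}: lr = {lr:.15f}")
```

Because the script prints the learning rate before calling `schedule.step()`, the printed value is the one actually used during that epoch, so the first drop (to 0.006) shows up at epoch 11.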

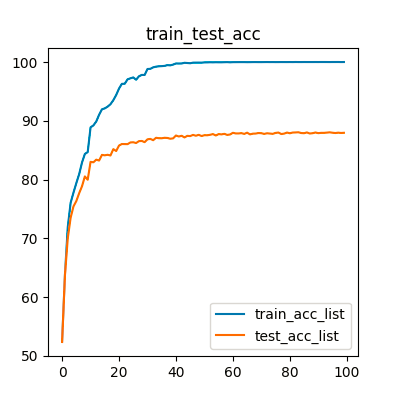

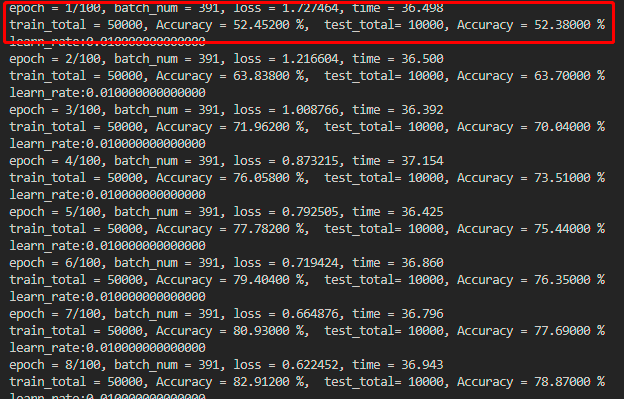

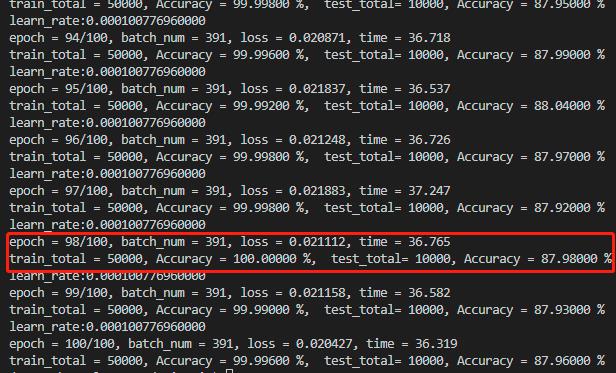

Changes in loss and accuracy

    torch.Size([64, 10])
    Load datasets...
    Files already downloaded and verified
    Files already downloaded and verified
    50000 10000
    Start Train...
    learn_rate:0.010000000000000
    epoch = 1/100, batch_num = 391, loss = 1.727464, time = 36.498
    train_total = 50000, Accuracy = 52.45200 %, test_total= 10000, Accuracy = 52.38000 %
    learn_rate:0.010000000000000
    epoch = 2/100, batch_num = 391, loss = 1.216604, time = 36.500
    train_total = 50000, Accuracy = 63.83800 %, test_total= 10000, Accuracy = 63.70000 %
    learn_rate:0.010000000000000
    epoch = 3/100, batch_num = 391, loss = 1.008766, time = 36.392
    train_total = 50000, Accuracy = 71.96200 %, test_total= 10000, Accuracy = 70.04000 %
    learn_rate:0.010000000000000
    epoch = 4/100, batch_num = 391, loss = 0.873215, time = 37.154
    train_total = 50000, Accuracy = 76.05800 %, test_total= 10000, Accuracy = 73.51000 %
    learn_rate:0.010000000000000
    epoch = 5/100, batch_num = 391, loss = 0.792505, time = 36.425
    train_total = 50000, Accuracy = 77.78200 %, test_total= 10000, Accuracy = 75.44000 %
    learn_rate:0.010000000000000
    epoch = 6/100, batch_num = 391, loss = 0.719424, time = 36.860
    train_total = 50000, Accuracy = 79.40400 %, test_total= 10000, Accuracy = 76.35000 %
    learn_rate:0.010000000000000
    epoch = 7/100, batch_num = 391, loss = 0.664876, time = 36.796
    train_total = 50000, Accuracy = 80.93000 %, test_total= 10000, Accuracy = 77.69000 %
    learn_rate:0.010000000000000
    epoch = 8/100, batch_num = 391, loss = 0.622452, time = 36.943
    train_total = 50000, Accuracy = 82.91200 %, test_total= 10000, Accuracy = 78.87000 %
    learn_rate:0.010000000000000
    epoch = 9/100, batch_num = 391, loss = 0.587154, time = 36.859
    train_total = 50000, Accuracy = 84.36000 %, test_total= 10000, Accuracy = 80.54000 %
    learn_rate:0.010000000000000
    epoch = 10/100, batch_num = 391, loss = 0.540577, time = 36.299
    train_total = 50000, Accuracy = 84.67400 %, test_total= 10000, Accuracy = 79.98000 %
    learn_rate:0.006000000000000
    epoch = 11/100, batch_num = 391, loss = 0.452174, time = 36.273
    train_total = 50000, Accuracy = 88.88400 %, test_total= 10000, Accuracy = 83.02000 %
    learn_rate:0.006000000000000
    epoch = 12/100, batch_num = 391, loss = 0.423968, time = 36.287
    train_total = 50000, Accuracy = 89.21800 %, test_total= 10000, Accuracy = 82.94000 %
    learn_rate:0.006000000000000
    epoch = 13/100, batch_num = 391, loss = 0.397755, time = 36.149
    train_total = 50000, Accuracy = 89.88200 %, test_total= 10000, Accuracy = 83.39000 %
    learn_rate:0.006000000000000
    epoch = 14/100, batch_num = 391, loss = 0.385166, time = 36.782
    train_total = 50000, Accuracy = 91.02200 %, test_total= 10000, Accuracy = 83.24000 %
    learn_rate:0.006000000000000
    epoch = 15/100, batch_num = 391, loss = 0.362211, time = 36.290
    train_total = 50000, Accuracy = 91.95400 %, test_total= 10000, Accuracy = 84.20000 %
    learn_rate:0.006000000000000
    epoch = 16/100, batch_num = 391, loss = 0.346161, time = 36.188
    train_total = 50000, Accuracy = 92.11200 %, test_total= 10000, Accuracy = 84.13000 %
    learn_rate:0.006000000000000
    epoch = 17/100, batch_num = 391, loss = 0.334280, time = 36.307
    train_total = 50000, Accuracy = 92.40800 %, test_total= 10000, Accuracy = 84.22000 %
    learn_rate:0.006000000000000
    epoch = 18/100, batch_num = 391, loss = 0.326841, time = 36.467
    train_total = 50000, Accuracy = 92.81800 %, test_total= 10000, Accuracy = 84.10000 %
    learn_rate:0.006000000000000
    epoch = 19/100, batch_num = 391, loss = 0.309976, time = 36.388
    train_total = 50000, Accuracy = 93.51000 %, test_total= 10000, Accuracy = 85.18000 %
    learn_rate:0.006000000000000
    epoch = 20/100, batch_num = 391, loss = 0.298686, time = 36.942
    train_total = 50000, Accuracy = 94.38400 %, test_total= 10000, Accuracy = 84.86000 %
    learn_rate:0.003600000000000
    epoch = 21/100, batch_num = 391, loss = 0.240491, time = 35.967
    train_total = 50000, Accuracy = 95.48800 %, test_total= 10000, Accuracy = 85.79000 %
    learn_rate:0.003600000000000
    epoch = 22/100, batch_num = 391, loss = 0.217680, time = 36.585
    train_total = 50000, Accuracy = 96.28200 %, test_total= 10000, Accuracy = 86.05000 %
    learn_rate:0.003600000000000
    epoch = 23/100, batch_num = 391, loss = 0.211073, time = 36.719
    train_total = 50000, Accuracy = 96.30000 %, test_total= 10000, Accuracy = 86.04000 %
    learn_rate:0.003600000000000
    epoch = 24/100, batch_num = 391, loss = 0.200940, time = 36.871
    train_total = 50000, Accuracy = 97.05000 %, test_total= 10000, Accuracy = 86.03000 %
    learn_rate:0.003600000000000
    epoch = 25/100, batch_num = 391, loss = 0.185021, time = 37.046
    train_total = 50000, Accuracy = 97.22600 %, test_total= 10000, Accuracy = 86.32000 %
    learn_rate:0.003600000000000
    epoch = 26/100, batch_num = 391, loss = 0.187093, time = 36.523
    train_total = 50000, Accuracy = 97.37600 %, test_total= 10000, Accuracy = 86.35000 %
    learn_rate:0.003600000000000
    epoch = 27/100, batch_num = 391, loss = 0.179190, time = 36.332
    train_total = 50000, Accuracy = 96.98600 %, test_total= 10000, Accuracy = 86.22000 %
    learn_rate:0.003600000000000
    epoch = 28/100, batch_num = 391, loss = 0.167953, time = 36.809
    train_total = 50000, Accuracy = 97.56800 %, test_total= 10000, Accuracy = 86.54000 %
    learn_rate:0.003600000000000
    epoch = 29/100, batch_num = 391, loss = 0.167085, time = 36.486
    train_total = 50000, Accuracy = 97.80400 %, test_total= 10000, Accuracy = 86.57000 %
    learn_rate:0.003600000000000
    epoch = 30/100, batch_num = 391, loss = 0.162673, time = 36.913
    train_total = 50000, Accuracy = 97.79400 %, test_total= 10000, Accuracy = 86.37000 %
    learn_rate:0.002160000000000
    epoch = 31/100, batch_num = 391, loss = 0.130083, time = 36.981
    train_total = 50000, Accuracy = 98.81000 %, test_total= 10000, Accuracy = 86.85000 %
    learn_rate:0.002160000000000
    epoch = 32/100, batch_num = 391, loss = 0.116471, time = 36.890
    train_total = 50000, Accuracy = 98.83800 %, test_total= 10000, Accuracy = 86.92000 %
    learn_rate:0.002160000000000
    epoch = 33/100, batch_num = 391, loss = 0.108332, time = 36.568
    train_total = 50000, Accuracy = 99.09200 %, test_total= 10000, Accuracy = 86.70000 %
    learn_rate:0.002160000000000
    epoch = 34/100, batch_num = 391, loss = 0.101327, time = 37.373
    train_total = 50000, Accuracy = 99.20800 %, test_total= 10000, Accuracy = 87.11000 %
    learn_rate:0.002160000000000
    epoch = 35/100, batch_num = 391, loss = 0.096986, time = 36.520
    train_total = 50000, Accuracy = 99.27200 %, test_total= 10000, Accuracy = 87.04000 %
    learn_rate:0.002160000000000
    epoch = 36/100, batch_num = 391, loss = 0.096955, time = 36.757
    train_total = 50000, Accuracy = 99.29600 %, test_total= 10000, Accuracy = 87.03000 %
    learn_rate:0.002160000000000
    epoch = 37/100, batch_num = 391, loss = 0.094020, time = 37.187
    train_total = 50000, Accuracy = 99.34000 %, test_total= 10000, Accuracy = 87.11000 %
    learn_rate:0.002160000000000
    epoch = 38/100, batch_num = 391, loss = 0.087212, time = 36.777
    train_total = 50000, Accuracy = 99.46800 %, test_total= 10000, Accuracy = 87.08000 %
    learn_rate:0.002160000000000
    epoch = 39/100, batch_num = 391, loss = 0.084191, time = 36.623
    train_total = 50000, Accuracy = 99.43000 %, test_total= 10000, Accuracy = 86.95000 %
    learn_rate:0.002160000000000
    epoch = 40/100, batch_num = 391, loss = 0.086169, time = 37.182
    train_total = 50000, Accuracy = 99.55600 %, test_total= 10000, Accuracy = 87.01000 %
    learn_rate:0.001296000000000
    epoch = 41/100, batch_num = 391, loss = 0.071286, time = 37.197
    train_total = 50000, Accuracy = 99.75000 %, test_total= 10000, Accuracy = 87.50000 %
    learn_rate:0.001296000000000
    epoch = 42/100, batch_num = 391, loss = 0.063353, time = 37.097
    train_total = 50000, Accuracy = 99.74000 %, test_total= 10000, Accuracy = 87.33000 %
    learn_rate:0.001296000000000
    epoch = 43/100, batch_num = 391, loss = 0.061826, time = 36.941
    train_total = 50000, Accuracy = 99.75000 %, test_total= 10000, Accuracy = 87.45000 %
    learn_rate:0.001296000000000
    epoch = 44/100, batch_num = 391, loss = 0.059741, time = 36.205
    train_total = 50000, Accuracy = 99.86400 %, test_total= 10000, Accuracy = 87.17000 %
    learn_rate:0.001296000000000
    epoch = 45/100, batch_num = 391, loss = 0.056397, time = 36.835
    train_total = 50000, Accuracy = 99.83000 %, test_total= 10000, Accuracy = 87.44000 %
    learn_rate:0.001296000000000
    epoch = 46/100, batch_num = 391, loss = 0.054009, time = 36.849
    train_total = 50000, Accuracy = 99.78800 %, test_total= 10000, Accuracy = 87.40000 %
    learn_rate:0.001296000000000
    epoch = 47/100, batch_num = 391, loss = 0.053588, time = 36.394
    train_total = 50000, Accuracy = 99.88600 %, test_total= 10000, Accuracy = 87.60000 %
    learn_rate:0.001296000000000
    epoch = 48/100, batch_num = 391, loss = 0.053057, time = 37.785
    train_total = 50000, Accuracy = 99.89200 %, test_total= 10000, Accuracy = 87.46000 %
    learn_rate:0.001296000000000
    epoch = 49/100, batch_num = 391, loss = 0.045930, time = 36.526
    train_total = 50000, Accuracy = 99.89600 %, test_total= 10000, Accuracy = 87.61000 %
    learn_rate:0.001296000000000
    epoch = 50/100, batch_num = 391, loss = 0.049549, time = 36.887
    train_total = 50000, Accuracy = 99.89000 %, test_total= 10000, Accuracy = 87.41000 %
    learn_rate:0.000777600000000
    epoch = 51/100, batch_num = 391, loss = 0.045231, time = 36.780
    train_total = 50000, Accuracy = 99.93200 %, test_total= 10000, Accuracy = 87.55000 %
    learn_rate:0.000777600000000
    epoch = 52/100, batch_num = 391, loss = 0.039894, time = 36.363
    train_total = 50000, Accuracy = 99.93800 %, test_total= 10000, Accuracy = 87.54000 %
    learn_rate:0.000777600000000
    epoch = 53/100, batch_num = 391, loss = 0.038822, time = 37.128
    train_total = 50000, Accuracy = 99.95600 %, test_total= 10000, Accuracy = 87.62000 %
    learn_rate:0.000777600000000
    epoch = 54/100, batch_num = 391, loss = 0.037930, time = 36.859
    train_total = 50000, Accuracy = 99.94400 %, test_total= 10000, Accuracy = 87.74000 %
    learn_rate:0.000777600000000
    epoch = 55/100, batch_num = 391, loss = 0.039034, time = 36.948
    train_total = 50000, Accuracy = 99.96000 %, test_total= 10000, Accuracy = 87.50000 %
    learn_rate:0.000777600000000
    epoch = 56/100, batch_num = 391, loss = 0.036626, time = 36.273
    train_total = 50000, Accuracy = 99.95200 %, test_total= 10000, Accuracy = 87.74000 %
    learn_rate:0.000777600000000
    epoch = 57/100, batch_num = 391, loss = 0.035077, time = 36.407
    train_total = 50000, Accuracy = 99.95000 %, test_total= 10000, Accuracy = 87.69000 %
    learn_rate:0.000777600000000
    epoch = 58/100, batch_num = 391, loss = 0.037595, time = 36.269
    train_total = 50000, Accuracy = 99.96600 %, test_total= 10000, Accuracy = 87.78000 %
    learn_rate:0.000777600000000
    epoch = 59/100, batch_num = 391, loss = 0.034734, time = 37.324
    train_total = 50000, Accuracy = 99.97800 %, test_total= 10000, Accuracy = 87.60000 %
    learn_rate:0.000777600000000
    epoch = 60/100, batch_num = 391, loss = 0.033837, time = 36.963
    train_total = 50000, Accuracy = 99.94800 %, test_total= 10000, Accuracy = 87.67000 %
    learn_rate:0.000466560000000
    epoch = 61/100, batch_num = 391, loss = 0.031147, time = 37.240
    train_total = 50000, Accuracy = 99.97200 %, test_total= 10000, Accuracy = 87.96000 %
    learn_rate:0.000466560000000
    epoch = 62/100, batch_num = 391, loss = 0.032038, time = 37.097
    train_total = 50000, Accuracy = 99.97600 %, test_total= 10000, Accuracy = 87.85000 %
    learn_rate:0.000466560000000
    epoch = 63/100, batch_num = 391, loss = 0.031524, time = 36.390
    train_total = 50000, Accuracy = 99.98000 %, test_total= 10000, Accuracy = 87.85000 %
    learn_rate:0.000466560000000
    epoch = 64/100, batch_num = 391, loss = 0.029830, time = 36.537
    train_total = 50000, Accuracy = 99.98200 %, test_total= 10000, Accuracy = 87.90000 %
    learn_rate:0.000466560000000
    epoch = 65/100, batch_num = 391, loss = 0.028459, time = 37.747
    train_total = 50000, Accuracy = 99.98200 %, test_total= 10000, Accuracy = 87.77000 %
    learn_rate:0.000466560000000
    epoch = 66/100, batch_num = 391, loss = 0.027390, time = 36.716
    train_total = 50000, Accuracy = 99.97200 %, test_total= 10000, Accuracy = 87.98000 %
    learn_rate:0.000466560000000
    epoch = 67/100, batch_num = 391, loss = 0.027200, time = 36.640
    train_total = 50000, Accuracy = 99.97600 %, test_total= 10000, Accuracy = 87.69000 %
    learn_rate:0.000466560000000
    epoch = 68/100, batch_num = 391, loss = 0.029614, time = 36.406
    train_total = 50000, Accuracy = 99.98600 %, test_total= 10000, Accuracy = 87.81000 %
    learn_rate:0.000466560000000
    epoch = 69/100, batch_num = 391, loss = 0.026783, time = 36.637
    train_total = 50000, Accuracy = 99.98800 %, test_total= 10000, Accuracy = 87.83000 %
    learn_rate:0.000466560000000
    epoch = 70/100, batch_num = 391, loss = 0.029514, time = 36.458
    train_total = 50000, Accuracy = 99.98200 %, test_total= 10000, Accuracy = 87.92000 %
    learn_rate:0.000279936000000
    epoch = 71/100, batch_num = 391, loss = 0.026174, time = 36.751
    train_total = 50000, Accuracy = 99.98200 %, test_total= 10000, Accuracy = 87.89000 %
    learn_rate:0.000279936000000
    epoch = 72/100, batch_num = 391, loss = 0.024701, time = 36.821
    train_total = 50000, Accuracy = 99.98800 %, test_total= 10000, Accuracy = 87.77000 %
    learn_rate:0.000279936000000
    epoch = 73/100, batch_num = 391, loss = 0.025671, time = 37.318
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 87.88000 %
    learn_rate:0.000279936000000
    epoch = 74/100, batch_num = 391, loss = 0.024448, time = 36.599
    train_total = 50000, Accuracy = 99.98600 %, test_total= 10000, Accuracy = 87.83000 %
    learn_rate:0.000279936000000
    epoch = 75/100, batch_num = 391, loss = 0.025472, time = 37.062
    train_total = 50000, Accuracy = 99.99000 %, test_total= 10000, Accuracy = 87.78000 %
    learn_rate:0.000279936000000
    epoch = 76/100, batch_num = 391, loss = 0.024742, time = 36.788
    train_total = 50000, Accuracy = 99.98400 %, test_total= 10000, Accuracy = 87.94000 %
    learn_rate:0.000279936000000
    epoch = 77/100, batch_num = 391, loss = 0.024732, time = 36.909
    train_total = 50000, Accuracy = 99.98600 %, test_total= 10000, Accuracy = 88.00000 %
    learn_rate:0.000279936000000
    epoch = 78/100, batch_num = 391, loss = 0.023314, time = 37.003
    train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 87.75000 %
    learn_rate:0.000279936000000
    epoch = 79/100, batch_num = 391, loss = 0.023640, time = 37.048
    train_total = 50000, Accuracy = 99.99000 %, test_total= 10000, Accuracy = 87.82000 %
    learn_rate:0.000279936000000
    epoch = 80/100, batch_num = 391, loss = 0.023612, time = 36.823
    train_total = 50000, Accuracy = 99.98800 %, test_total= 10000, Accuracy = 88.00000 %
    learn_rate:0.000167961600000
    epoch = 81/100, batch_num = 391, loss = 0.023991, time = 37.118
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 87.88000 %
    learn_rate:0.000167961600000
    epoch = 82/100, batch_num = 391, loss = 0.022168, time = 37.438
    train_total = 50000, Accuracy = 99.98800 %, test_total= 10000, Accuracy = 88.00000 %
    learn_rate:0.000167961600000
    epoch = 83/100, batch_num = 391, loss = 0.022910, time = 36.649
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 88.02000 %
    learn_rate:0.000167961600000
    epoch = 84/100, batch_num = 391, loss = 0.022220, time = 36.602
    train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 88.05000 %
    learn_rate:0.000167961600000
    epoch = 85/100, batch_num = 391, loss = 0.022573, time = 36.785
    train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 87.92000 %
    learn_rate:0.000167961600000
    epoch = 86/100, batch_num = 391, loss = 0.022758, time = 36.705
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 87.89000 %
    learn_rate:0.000167961600000
    epoch = 87/100, batch_num = 391, loss = 0.022738, time = 36.700
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 88.02000 %
    learn_rate:0.000167961600000
    epoch = 88/100, batch_num = 391, loss = 0.020802, time = 36.288
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 87.84000 %
    learn_rate:0.000167961600000
    epoch = 89/100, batch_num = 391, loss = 0.022297, time = 36.614
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 87.89000 %
    learn_rate:0.000167961600000
    epoch = 90/100, batch_num = 391, loss = 0.021643, time = 36.498
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 88.01000 %
    learn_rate:0.000100776960000
    epoch = 91/100, batch_num = 391, loss = 0.022491, time = 36.515
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 87.90000 %
    learn_rate:0.000100776960000
    epoch = 92/100, batch_num = 391, loss = 0.020951, time = 36.592
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 87.95000 %
    learn_rate:0.000100776960000
    epoch = 93/100, batch_num = 391, loss = 0.020818, time = 37.168
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 87.95000 %
    learn_rate:0.000100776960000
    epoch = 94/100, batch_num = 391, loss = 0.020871, time = 36.718
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 87.99000 %
    learn_rate:0.000100776960000
    epoch = 95/100, batch_num = 391, loss = 0.021837, time = 36.537
    train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 88.04000 %
    learn_rate:0.000100776960000
    epoch = 96/100, batch_num = 391, loss = 0.021248, time = 36.726
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 87.97000 %
    learn_rate:0.000100776960000
    epoch = 97/100, batch_num = 391, loss = 0.021883, time = 37.247
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 87.92000 %
    learn_rate:0.000100776960000
    epoch = 98/100, batch_num = 391, loss = 0.021112, time = 36.765
    train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 87.98000 %
    learn_rate:0.000100776960000
    epoch = 99/100, batch_num = 391, loss = 0.021158, time = 36.582
    train_total = 50000, Accuracy = 99.99000 %, test_total= 10000, Accuracy = 87.93000 %
    learn_rate:0.000100776960000
    epoch = 100/100, batch_num = 391, loss = 0.020427, time = 36.319
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 87.96000 %

Figure 2. AlexNet_acc_epoch_0100
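In the training log, training accuracy climbs to nearly 100% while test accuracy levels off around 88%. One way to quantify that gap is to parse the accuracy lines of the log. The following is a minimal sketch: the four sample lines are copied from the log (epochs 1, 10, 30 and 100), and the names `pattern` and `parse_accuracy` are hypothetical helpers, not part of the training script.

```python
import re

# Sample lines copied from the training log; a full run would read them from a file
log_lines = [
    "train_total = 50000, Accuracy = 52.45200 %, test_total= 10000, Accuracy = 52.38000 %",
    "train_total = 50000, Accuracy = 84.67400 %, test_total= 10000, Accuracy = 79.98000 %",
    "train_total = 50000, Accuracy = 97.79400 %, test_total= 10000, Accuracy = 86.37000 %",
    "train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 87.96000 %",
]
epochs = [1, 10, 30, 100]  # epochs the sample lines belong to

# Matches the format string used by the script's accuracy print statement
pattern = re.compile(
    r"train_total = \d+, Accuracy = ([\d.]+) %, test_total= \d+, Accuracy = ([\d.]+) %"
)


def parse_accuracy(line):
    """Return (train_acc, test_acc) in percent, or None if the line is not an accuracy line."""
    m = pattern.search(line)
    if m is None:
        return None
    return float(m.group(1)), float(m.group(2))


gaps = []
for epoch, line in zip(epochs, log_lines):
    train_acc, test_acc = parse_accuracy(line)
    gap = train_acc - test_acc
    gaps.append(gap)
    print(f"epoch {epoch:3d}: train {train_acc:.2f}%  test {test_acc:.2f}%  gap {gap:.2f}")
```

On these samples the gap grows from under 0.1 points at epoch 1 to about 12 points at epoch 100, which suggests the model overfits the training set in the later epochs.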
