05-Resnet18 Image Classification

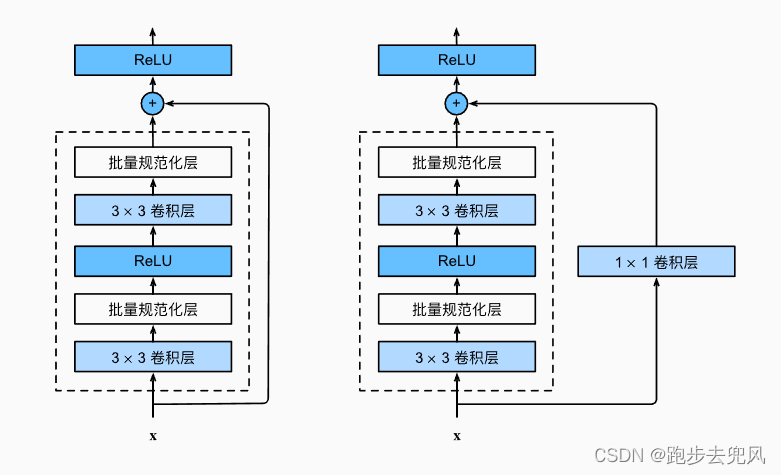

Figure 1. The Resnet residual block
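As a compact restatement of the residual block, here is the usual formulation from the ResNet paper. The symbols are assumptions added for illustration and do not come from the figure: $\mathcal{F}$ is the stacked conv/BN/ReLU path and $W_s$ is a 1x1 projection on the shortcut.

$$
y = \mathrm{ReLU}\big(\mathcal{F}(x) + x\big) \qquad \text{(input and output shapes match)}
$$

$$
y = \mathrm{ReLU}\big(\mathcal{F}(x) + W_s\,x\big) \qquad \text{(channel count or spatial size changes)}
$$

In both cases the shortcut is added before the final ReLU.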

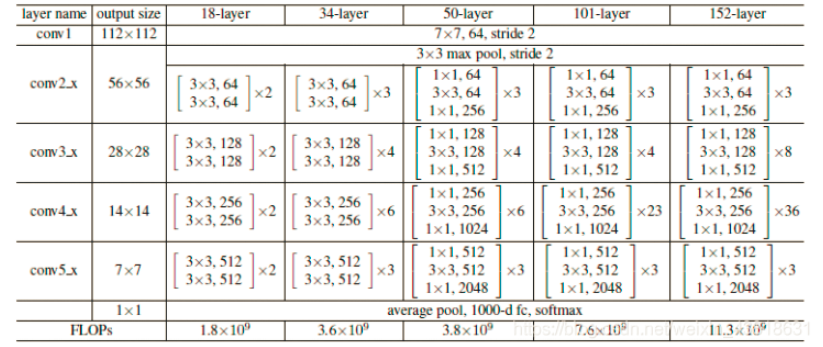

Figure 2. Resnet18 network architecture

Resnet18 implementation for the Cifar10 dataset (Pytorch):

```python
import torch
from torch import nn

# Basic building blocks
from torch.nn import Conv2d, BatchNorm2d, ReLU, MaxPool2d, AdaptiveAvgPool2d, Linear


# ResNet18_BasicBlock: residual unit
class ResNet18_BasicBlock(nn.Module):
    def __init__(self, input_channel, output_channel, stride, use_conv1_1):
        super(ResNet18_BasicBlock, self).__init__()

        # First convolution
        self.conv1 = nn.Conv2d(input_channel, output_channel, kernel_size=3, stride=stride, padding=1)
        # Second convolution
        self.conv2 = nn.Conv2d(output_channel, output_channel, kernel_size=3, stride=1, padding=1)

        # 1x1 convolution on the shortcut: raises the channel count and uses the same
        # stride as conv1, so the shortcut has the same shape as the main path
        self.extra = nn.Sequential(
            nn.Conv2d(input_channel, output_channel, kernel_size=1, stride=stride, padding=0),
            nn.BatchNorm2d(output_channel)
        )

        self.use_conv1_1 = use_conv1_1

        # Note: this single BatchNorm2d is applied after both conv1 and conv2 in forward(),
        # so the two layers share one set of affine parameters and running statistics.
        self.bn = nn.BatchNorm2d(output_channel)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        out = self.bn(self.conv1(x))
        out = self.relu(out)

        out = self.bn(self.conv2(out))

        # Residual connection: the (B, C, H, W) shapes must match before the tensors are added.
        # Note: the shortcut is only added when use_conv1_1 is True; with use_conv1_1=False
        # no identity shortcut is added and the block is a plain two-layer convolution stack.
        if self.use_conv1_1:
            out = self.extra(x) + out

        out = self.relu(out)
        return out


# Build the ResNet18 network model
class ResNet18(nn.Module):
    def __init__(self):
        super(ResNet18, self).__init__()

        # Note: stride=3 shrinks a 32x32 input to 11x11 (ResNet18_new below uses stride=1).
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=3, padding=1),
            nn.BatchNorm2d(64)
        )
        self.block1_1 = ResNet18_BasicBlock(input_channel=64, output_channel=64, stride=1, use_conv1_1=False)
        self.block1_2 = ResNet18_BasicBlock(input_channel=64, output_channel=64, stride=1, use_conv1_1=False)

        self.block2_1 = ResNet18_BasicBlock(input_channel=64, output_channel=128, stride=2, use_conv1_1=True)
        self.block2_2 = ResNet18_BasicBlock(input_channel=128, output_channel=128, stride=1, use_conv1_1=False)

        self.block3_1 = ResNet18_BasicBlock(input_channel=128, output_channel=256, stride=2, use_conv1_1=True)
        self.block3_2 = ResNet18_BasicBlock(input_channel=256, output_channel=256, stride=1, use_conv1_1=False)

        self.block4_1 = ResNet18_BasicBlock(input_channel=256, output_channel=512, stride=2, use_conv1_1=True)
        self.block4_2 = ResNet18_BasicBlock(input_channel=512, output_channel=512, stride=1, use_conv1_1=False)

        self.FC_layer = nn.Linear(512 * 1 * 1, 10)

        self.adaptive_avg_pool2d = nn.AdaptiveAvgPool2d((1, 1))
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):

        x = self.relu(self.conv1(x))

        # ResNet18 residual stages
        x = self.block1_1(x)
        x = self.block1_2(x)
        x = self.block2_1(x)
        x = self.block2_2(x)
        x = self.block3_1(x)
        x = self.block3_2(x)
        x = self.block4_1(x)
        x = self.block4_2(x)

        # Average pooling
        x = self.adaptive_avg_pool2d(x)

        # Flatten the features in preparation for the fully connected layer
        x = x.view(x.size(0), -1)
        x = self.FC_layer(x)

        return x


class BasicBlock(nn.Module):

    def __init__(self, in_features, out_features) -> None:
        super().__init__()

        self.in_features = in_features
        self.out_features = out_features

        stride = 1
        _features = out_features
        if self.in_features != self.out_features:
            # When the input and output channel counts differ, the output must be exactly twice the input
            if self.out_features / self.in_features == 2.0:
                # When the channel count doubles, stride 2 halves the spatial size,
                # which keeps the per-layer computation roughly constant
                stride = 2
            else:
                raise ValueError("out_features must equal in_features or be exactly twice in_features!")

        self.conv1 = Conv2d(in_features, _features, kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = BatchNorm2d(_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        self.relu = ReLU(inplace=True)
        self.conv2 = Conv2d(_features, _features, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = BatchNorm2d(_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

        # Downsampling on the shortcut
        self.downsample = None if self.in_features == self.out_features else nn.Sequential(
            Conv2d(in_features, out_features, kernel_size=1, stride=2, bias=False),
            BatchNorm2d(out_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )

    def forward(self, x):
        identity = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)

        # Use the downsample layer when the input and output channel counts differ
        if self.in_features != self.out_features:
            identity = self.downsample(x)

        # Residual sum
        out += identity
        out = self.relu(out)
        return out


class ResNet18_new(nn.Module):
    def __init__(self) -> None:
        super().__init__()

        self.conv1 = Conv2d(3, 64, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn1 = BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        self.relu = ReLU(inplace=True)
        # self.maxpool = MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
        self.layer1 = nn.Sequential(
            BasicBlock(64, 64),
            BasicBlock(64, 64)
        )
        self.layer2 = nn.Sequential(
            BasicBlock(64, 128),
            BasicBlock(128, 128)
        )
        self.layer3 = nn.Sequential(
            BasicBlock(128, 256),
            BasicBlock(256, 256)
        )
        self.layer4 = nn.Sequential(
            BasicBlock(256, 512),
            BasicBlock(512, 512)
        )
        self.avgpool = AdaptiveAvgPool2d(output_size=(1, 1))
        self.fc = Linear(in_features=512, out_features=10, bias=True)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        # x = self.maxpool(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.avgpool(x)      # output shape: (64, 512, 1, 1) for a batch of 64
        x = torch.flatten(x, 1)  # easy to forget: flatten between pooling and the fully connected layer; output shape: (64, 512)
        x = self.fc(x)           # output shape: (64, 10)
        return x
```
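The feature-map sizes implied by the two network definitions above can be checked with plain arithmetic. The sketch below is an illustration, not part of the original code: `conv_out` and `trace` are hypothetical helper names. It assumes a 32x32 Cifar10 input and the standard convolution output-size formula floor((n + 2p - k) / s) + 1.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(stem_stride, input_size=32):
    """Spatial size after the stem convolution and after each of the four stages."""
    sizes = [conv_out(input_size, kernel=3, stride=stem_stride, padding=1)]
    for stage_stride in (1, 2, 2, 2):
        prev = sizes[-1]
        # Only the first 3x3 convolution of a stage is strided; the others keep the size.
        main = conv_out(prev, kernel=3, stride=stage_stride, padding=1)
        # The 1x1 shortcut convolution (padding 0) must produce the same size as the main path.
        shortcut = conv_out(prev, kernel=1, stride=stage_stride, padding=0)
        assert main == shortcut
        sizes.append(main)
    return sizes


resnet18_sizes = trace(stem_stride=3)      # stem stride as written in ResNet18
resnet18_new_sizes = trace(stem_stride=1)  # stem stride as written in ResNet18_new
print(resnet18_sizes)      # [11, 11, 6, 3, 2]
print(resnet18_new_sizes)  # [32, 32, 16, 8, 4]
```

Under these assumptions `ResNet18` reaches the pooling layer with a 2x2 map and `ResNet18_new` with a 4x4 map. In both cases the adaptive average pooling reduces it to 1x1, which is why the fully connected layer takes 512 inputs.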

classfyNet_main.py

```python
import torch
from torch.utils.data import DataLoader
from torch import nn, optim
from torchvision import datasets, transforms
from torchvision.transforms.functional import InterpolationMode

from matplotlib import pyplot as plt


import time

from Lenet5 import Lenet5_new
from Resnet18 import ResNet18, ResNet18_new
from AlexNet import AlexNet

def main():

    print("Load datasets...")

    # transforms.RandomHorizontalFlip(p=0.5): flip the image horizontally with probability 0.5
    # transforms.ToTensor(): reshape (H, W, C) -> (C, H, W) and map each pixel from [0, 255] to [0, 1] by dividing by 255
    # transforms.Normalize: apply (x - mean) / std per channel to the [0, 1] input; with the ImageNet
    #   mean/std used below the result is roughly zero-centred but is not confined to (-1, 1)
    transform_train = transforms.Compose([
        # transforms.Resize((224, 224), interpolation=InterpolationMode.BICUBIC),
        transforms.RandomCrop(32, padding=4),  # pad 4 pixels of zeros on every side, then randomly crop back to 32x32
        transforms.RandomHorizontalFlip(p=0.5),
        transforms.ToTensor(),
        transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
    ])

    transform_test = transforms.Compose([
        # transforms.Resize((224, 224), interpolation=InterpolationMode.BICUBIC),
        # Note: RandomCrop is applied here too, so test images are also randomly cropped at evaluation time
        transforms.RandomCrop(32, padding=4),  # pad 4 pixels of zeros on every side, then randomly crop back to 32x32
        transforms.ToTensor(),
        transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
    ])

    # Download the dataset with the built-in helper
    train_dataset = datasets.CIFAR10(root="./data/Cifar10/", train=True,
                                     transform=transform_train,
                                     download=True)
    test_dataset = datasets.CIFAR10(root="./data/Cifar10/",
                                    train=False,
                                    transform=transform_test,
                                    download=True)

    print(len(train_dataset), len(test_dataset))

    Batch_size = 64
    train_loader = DataLoader(train_dataset, batch_size=Batch_size, shuffle=True, num_workers=4)
    test_loader = DataLoader(test_dataset, batch_size=Batch_size, shuffle=False, num_workers=4)

    # Select CUDA if available
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    # Initialize the model
    # To switch models, change only this line; nothing else needs to change
    # model = Lenet5_new().to(device)
    # model = ResNet18().to(device)
    model = ResNet18_new().to(device)

    # model = AlexNet(num_classes=10, init_weights=True).to(device)
    print("Resnet_new train...")

    # Build the loss function and the optimizer
    criterion = nn.CrossEntropyLoss()  # softmax cross-entropy loss for multi-class classification
    # opt = optim.SGD(model.parameters(), lr=0.01, momentum=0.8, weight_decay=0.001)
    opt = optim.SGD(model.parameters(), lr=0.01, momentum=0.9, weight_decay=0.0005)

    # Learning-rate schedule: every step_size epochs, lr = lr * gamma
    schedule = optim.lr_scheduler.StepLR(opt, step_size=10, gamma=0.6, last_epoch=-1)

    # Start training
    print("Start Train...")

    epochs = 100

    loss_list = []
    train_acc_list = []
    test_acc_list = []
    epochs_list = []

    for epoch in range(0, epochs):

        start = time.time()

        model.train()

        running_loss = 0.0
        batch_num = 0

        for i, (inputs, labels) in enumerate(train_loader):

            inputs, labels = inputs.to(device), labels.to(device)

            # Forward pass
            outputs = model(inputs)
            # Compute the loss (it is already on the same device as its inputs)
            loss = criterion(outputs, labels)

            # Reset the gradients
            opt.zero_grad()
            # Backpropagate to compute the gradients
            loss.backward()
            # Update the parameters from the gradients
            opt.step()

            # Accumulate the loss over all batches of this epoch
            running_loss += loss.item()
            # loss_list.append(loss.item())
            batch_num += 1

        epochs_list.append(epoch)

        # Print the current learning rate at the end of every epoch
        lr_1 = opt.param_groups[0]['lr']
        print("learn_rate:%.15f" % lr_1)
        schedule.step()

        end = time.time()
        print('epoch = %d/%d, batch_num = %d, loss = %.6f, time = %.3f' % (epoch+1, epochs, batch_num, running_loss/batch_num, end-start))
        running_loss = 0.0

        # After every training epoch, evaluate on the training and test sets
        model.eval()
        train_correct = 0.0
        train_total = 0

        test_correct = 0.0
        test_total = 0

        # Evaluation needs no gradients or backpropagation
        with torch.no_grad():

            # print("=======================train=======================")
            for inputs, labels in train_loader:
                inputs, labels = inputs.to(device), labels.to(device)
                outputs = model(inputs)

                pred = outputs.argmax(dim=1)  # index of the largest value in each row
                train_total += inputs.size(0)
                train_correct += torch.eq(pred, labels).sum().item()

            # print("=======================test=======================")
            for inputs, labels in test_loader:
                inputs, labels = inputs.to(device), labels.to(device)
                outputs = model(inputs)

                pred = outputs.argmax(dim=1)  # index of the largest value in each row
                test_total += inputs.size(0)
                test_correct += torch.eq(pred, labels).sum().item()

        print("train_total = %d, Accuracy = %.5f %%, test_total= %d, Accuracy = %.5f %%" % (train_total, 100 * train_correct / train_total, test_total, 100 * test_correct / test_total))

        train_acc_list.append(100 * train_correct / train_total)
        test_acc_list.append(100 * test_correct / test_total)

        # print("Accuracy of the network on the 10000 test images:%.5f %%" % (100 * test_correct / test_total))
        # print("===============================================")

    fig = plt.figure(figsize=(4, 4))

    plt.plot(epochs_list, train_acc_list, label='train_acc_list')
    plt.plot(epochs_list, test_acc_list, label='test_acc_list')
    plt.legend()
    plt.title("train_test_acc")
    plt.savefig('Resnet18_acc_epoch_{:04d}.png'.format(epochs))
    plt.close()

if __name__ == "__main__":

    main()
```
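Two numbers that recur in the training output follow directly from the settings in the script: the `batch_num` per epoch and the `learn_rate` printed every epoch. The sketch below reproduces them with plain arithmetic. `step_lr` is a hypothetical helper that assumes the closed form of a step schedule, lr = base_lr * gamma^floor(epoch / step_size), with the scheduler stepped once per epoch as in the script.

```python
import math


def step_lr(base_lr, gamma, step_size, epoch):
    """Learning rate used during the given 0-based epoch under a step schedule."""
    return base_lr * gamma ** (epoch // step_size)


# 50000 training images in batches of 64; the last, smaller batch is kept by default.
batches_per_epoch = math.ceil(50000 / 64)
print("batches per epoch:", batches_per_epoch)

# Learning rate at the start of a few epochs (printed 1-based, like the training log).
for epoch in (0, 10, 20, 90):
    print("epoch %d: learn_rate:%.15f" % (epoch + 1, step_lr(0.01, 0.6, 10, epoch)))
```

With `lr=0.01`, `gamma=0.6` and `step_size=10`, the learning rate drops to 0.006 at epoch 11 and to 0.0036 at epoch 21. After 100 epochs it has been multiplied by 0.6 nine times.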

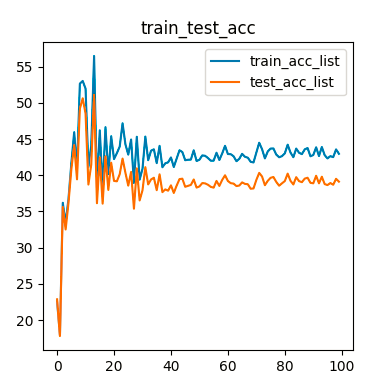

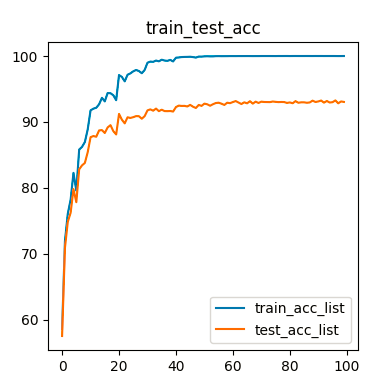

Comparing the loss of the Resnet18 and Resnet18_new models from the code:

Loss of Resnet18:

```text
(pytorch-CycleGAN-and-pix2pix) python classfyNet_train.py
torch.Size([64, 10])
Load datasets...
Files already downloaded and verified
Files already downloaded and verified
50000 10000
Start Train...
learn_rate:0.010000000000000
epoch = 1/100, batch_num = 782, loss = 1.478429, time = 20.609
train_total = 50000, Accuracy = 31.06600 %, test_total= 10000, Accuracy = 30.85000 %
learn_rate:0.010000000000000
epoch = 2/100, batch_num = 782, loss = 1.082994, time = 20.526
train_total = 50000, Accuracy = 35.33400 %, test_total= 10000, Accuracy = 35.15000 %
learn_rate:0.010000000000000
epoch = 3/100, batch_num = 782, loss = 0.888856, time = 20.156
train_total = 50000, Accuracy = 37.90400 %, test_total= 10000, Accuracy = 37.06000 %
learn_rate:0.010000000000000
epoch = 4/100, batch_num = 782, loss = 0.768423, time = 20.295
train_total = 50000, Accuracy = 39.49000 %, test_total= 10000, Accuracy = 38.76000 %
learn_rate:0.010000000000000
epoch = 5/100, batch_num = 782, loss = 0.677779, time = 20.365
train_total = 50000, Accuracy = 43.15800 %, test_total= 10000, Accuracy = 42.09000 %
learn_rate:0.010000000000000
epoch = 6/100, batch_num = 782, loss = 0.615038, time = 20.242
train_total = 50000, Accuracy = 49.67600 %, test_total= 10000, Accuracy = 48.32000 %
learn_rate:0.010000000000000
epoch = 7/100, batch_num = 782, loss = 0.557970, time = 20.275
train_total = 50000, Accuracy = 41.97000 %, test_total= 10000, Accuracy = 40.59000 %
learn_rate:0.010000000000000
epoch = 8/100, batch_num = 782, loss = 0.515727, time = 20.195
train_total = 50000, Accuracy = 43.56000 %, test_total= 10000, Accuracy = 42.16000 %
learn_rate:0.010000000000000
epoch = 9/100, batch_num = 782, loss = 0.475527, time = 20.332
train_total = 50000, Accuracy = 49.66600 %, test_total= 10000, Accuracy = 47.85000 %
learn_rate:0.010000000000000
epoch = 10/100, batch_num = 782, loss = 0.439108, time = 20.374
train_total = 50000, Accuracy = 45.68400 %, test_total= 10000, Accuracy = 43.56000 %
learn_rate:0.006000000000000
epoch = 11/100, batch_num = 782, loss = 0.319711, time = 20.288
train_total = 50000, Accuracy = 38.37200 %, test_total= 10000, Accuracy = 36.85000 %
learn_rate:0.006000000000000
epoch = 12/100, batch_num = 782, loss = 0.283212, time = 20.325
train_total = 50000, Accuracy = 47.74200 %, test_total= 10000, Accuracy = 45.71000 %
learn_rate:0.006000000000000
epoch = 13/100, batch_num = 782, loss = 0.272696, time = 20.214
train_total = 50000, Accuracy = 52.00200 %, test_total= 10000, Accuracy = 48.94000 %
learn_rate:0.006000000000000
epoch = 14/100, batch_num = 782, loss = 0.255000, time = 20.214
train_total = 50000, Accuracy = 47.10800 %, test_total= 10000, Accuracy = 44.11000 %
learn_rate:0.006000000000000
epoch = 15/100, batch_num = 782, loss = 0.239320, time = 20.285
train_total = 50000, Accuracy = 49.55000 %, test_total= 10000, Accuracy = 46.55000 %
learn_rate:0.006000000000000
epoch = 16/100, batch_num = 782, loss = 0.224698, time = 20.250
train_total = 50000, Accuracy = 45.11400 %, test_total= 10000, Accuracy = 41.64000 %
learn_rate:0.006000000000000
epoch = 17/100, batch_num = 782, loss = 0.215493, time = 20.155
train_total = 50000, Accuracy = 38.18400 %, test_total= 10000, Accuracy = 36.62000 %
learn_rate:0.006000000000000
epoch = 18/100, batch_num = 782, loss = 0.204470, time = 20.333
train_total = 50000, Accuracy = 43.55400 %, test_total= 10000, Accuracy = 40.96000 %
learn_rate:0.006000000000000
epoch = 19/100, batch_num = 782, loss = 0.192821, time = 20.248
train_total = 50000, Accuracy = 47.45000 %, test_total= 10000, Accuracy = 44.57000 %
learn_rate:0.006000000000000
epoch = 20/100, batch_num = 782, loss = 0.186118, time = 20.287
train_total = 50000, Accuracy = 46.74800 %, test_total= 10000, Accuracy = 43.04000 %
learn_rate:0.003600000000000
epoch = 21/100, batch_num = 782, loss = 0.107671, time = 20.394
train_total = 50000, Accuracy = 42.69800 %, test_total= 10000, Accuracy = 40.10000 %
learn_rate:0.003600000000000
epoch = 22/100, batch_num = 782, loss = 0.078854, time = 20.334
train_total = 50000, Accuracy = 48.80000 %, test_total= 10000, Accuracy = 45.33000 %
learn_rate:0.003600000000000
epoch = 23/100, batch_num = 782, loss = 0.075158, time = 20.473
train_total = 50000, Accuracy = 47.88200 %, test_total= 10000, Accuracy = 43.72000 %
learn_rate:0.003600000000000
epoch = 24/100, batch_num = 782, loss = 0.073704, time = 20.326
train_total = 50000, Accuracy = 50.65200 %, test_total= 10000, Accuracy = 46.09000 %
learn_rate:0.003600000000000
epoch = 25/100, batch_num = 782, loss = 0.065607, time = 20.183
train_total = 50000, Accuracy = 58.66600 %, test_total= 10000, Accuracy = 52.69000 %
learn_rate:0.003600000000000
epoch = 26/100, batch_num = 782, loss = 0.073630, time = 20.378
train_total = 50000, Accuracy = 51.99200 %, test_total= 10000, Accuracy = 47.71000 %
learn_rate:0.003600000000000
epoch = 27/100, batch_num = 782, loss = 0.070075, time = 20.228
train_total = 50000, Accuracy = 45.31600 %, test_total= 10000, Accuracy = 41.69000 %
learn_rate:0.003600000000000
epoch = 28/100, batch_num = 782, loss = 0.069032, time = 19.904
train_total = 50000, Accuracy = 48.39000 %, test_total= 10000, Accuracy = 44.84000 %
learn_rate:0.003600000000000
epoch = 29/100, batch_num = 782, loss = 0.071921, time = 20.349
train_total = 50000, Accuracy = 55.82400 %, test_total= 10000, Accuracy = 50.86000 %
learn_rate:0.003600000000000
epoch = 30/100, batch_num = 782, loss = 0.071051, time = 20.184
train_total = 50000, Accuracy = 53.07200 %, test_total= 10000, Accuracy = 48.05000 %
learn_rate:0.002160000000000
epoch = 31/100, batch_num = 782, loss = 0.039924, time = 20.472
train_total = 50000, Accuracy = 52.69200 %, test_total= 10000, Accuracy = 48.10000 %
learn_rate:0.002160000000000
epoch = 32/100, batch_num = 782, loss = 0.020253, time = 20.357
train_total = 50000, Accuracy = 51.39600 %, test_total= 10000, Accuracy = 47.06000 %
learn_rate:0.002160000000000
epoch = 33/100, batch_num = 782, loss = 0.016212, time = 20.455
train_total = 50000, Accuracy = 48.52400 %, test_total= 10000, Accuracy = 44.42000 %
learn_rate:0.002160000000000
epoch = 34/100, batch_num = 782, loss = 0.013587, time = 20.243
train_total = 50000, Accuracy = 54.30800 %, test_total= 10000, Accuracy = 48.65000 %
learn_rate:0.002160000000000
epoch = 35/100, batch_num = 782, loss = 0.012355, time = 20.483
train_total = 50000, Accuracy = 56.43400 %, test_total= 10000, Accuracy = 51.17000 %
learn_rate:0.002160000000000
epoch = 36/100, batch_num = 782, loss = 0.013484, time = 20.341
train_total = 50000, Accuracy = 56.60800 %, test_total= 10000, Accuracy = 50.81000 %
learn_rate:0.002160000000000
epoch = 37/100, batch_num = 782, loss = 0.010007, time = 20.286
train_total = 50000, Accuracy = 50.47000 %, test_total= 10000, Accuracy = 45.95000 %
learn_rate:0.002160000000000
epoch = 38/100, batch_num = 782, loss = 0.009641, time = 20.268
train_total = 50000, Accuracy = 55.58600 %, test_total= 10000, Accuracy = 50.60000 %
learn_rate:0.002160000000000
epoch = 39/100, batch_num = 782, loss = 0.008131, time = 20.245
train_total = 50000, Accuracy = 50.35200 %, test_total= 10000, Accuracy = 46.15000 %
learn_rate:0.002160000000000
epoch = 40/100, batch_num = 782, loss = 0.009149, time = 20.268
train_total = 50000, Accuracy = 47.47200 %, test_total= 10000, Accuracy = 43.46000 %
learn_rate:0.001296000000000
epoch = 41/100, batch_num = 782, loss = 0.005920, time = 20.269
train_total = 50000, Accuracy = 51.38200 %, test_total= 10000, Accuracy = 46.52000 %
learn_rate:0.001296000000000
epoch = 42/100, batch_num = 782, loss = 0.003914, time = 20.295
train_total = 50000, Accuracy = 51.71200 %, test_total= 10000, Accuracy = 47.06000 %
learn_rate:0.001296000000000
epoch = 43/100, batch_num = 782, loss = 0.003080, time = 20.224
train_total = 50000, Accuracy = 51.05800 %, test_total= 10000, Accuracy = 46.26000 %
learn_rate:0.001296000000000
epoch = 44/100, batch_num = 782, loss = 0.002413, time = 20.320
train_total = 50000, Accuracy = 53.42600 %, test_total= 10000, Accuracy = 48.32000 %
learn_rate:0.001296000000000
epoch = 45/100, batch_num = 782, loss = 0.002489, time = 20.297
train_total = 50000, Accuracy = 54.24800 %, test_total= 10000, Accuracy = 49.09000 %
learn_rate:0.001296000000000
epoch = 46/100, batch_num = 782, loss = 0.002178, time = 20.256
train_total = 50000, Accuracy = 55.79000 %, test_total= 10000, Accuracy = 50.57000 %
learn_rate:0.001296000000000
epoch = 47/100, batch_num = 782, loss = 0.002345, time = 20.361
train_total = 50000, Accuracy = 53.50000 %, test_total= 10000, Accuracy = 48.63000 %
learn_rate:0.001296000000000
epoch = 48/100, batch_num = 782, loss = 0.001805, time = 20.261
train_total = 50000, Accuracy = 55.94000 %, test_total= 10000, Accuracy = 50.40000 %
learn_rate:0.001296000000000
epoch = 49/100, batch_num = 782, loss = 0.001786, time = 20.178
train_total = 50000, Accuracy = 54.48600 %, test_total= 10000, Accuracy = 48.75000 %
learn_rate:0.001296000000000
epoch = 50/100, batch_num = 782, loss = 0.001872, time = 20.300
train_total = 50000, Accuracy = 54.22400 %, test_total= 10000, Accuracy = 48.62000 %
learn_rate:0.000777600000000
epoch = 51/100, batch_num = 782, loss = 0.001727, time = 20.183
train_total = 50000, Accuracy = 53.55400 %, test_total= 10000, Accuracy = 48.19000 %
learn_rate:0.000777600000000
epoch = 52/100, batch_num = 782, loss = 0.001520, time = 20.245
train_total = 50000, Accuracy = 53.02800 %, test_total= 10000, Accuracy = 48.03000 %
learn_rate:0.000777600000000
epoch = 53/100, batch_num = 782, loss = 0.001509, time = 20.378
train_total = 50000, Accuracy = 52.19200 %, test_total= 10000, Accuracy = 46.78000 %
learn_rate:0.000777600000000
epoch = 54/100, batch_num = 782, loss = 0.001584, time = 20.280
train_total = 50000, Accuracy = 52.67200 %, test_total= 10000, Accuracy = 47.31000 %
learn_rate:0.000777600000000
epoch = 55/100, batch_num = 782, loss = 0.001645, time = 20.257
train_total = 50000, Accuracy = 53.00600 %, test_total= 10000, Accuracy = 47.83000 %
learn_rate:0.000777600000000
epoch = 56/100, batch_num = 782, loss = 0.001490, time = 20.254
train_total = 50000, Accuracy = 55.50400 %, test_total= 10000, Accuracy = 49.49000 %
learn_rate:0.000777600000000
epoch = 57/100, batch_num = 782, loss = 0.001473, time = 20.464
train_total = 50000, Accuracy = 53.84200 %, test_total= 10000, Accuracy = 48.34000 %
learn_rate:0.000777600000000
epoch = 58/100, batch_num = 782, loss = 0.001487, time = 20.422
train_total = 50000, Accuracy = 54.26800 %, test_total= 10000, Accuracy = 48.84000 %
learn_rate:0.000777600000000
epoch = 59/100, batch_num = 782, loss = 0.001462, time = 20.241
train_total = 50000, Accuracy = 55.90800 %, test_total= 10000, Accuracy = 50.13000 %
learn_rate:0.000777600000000
epoch = 60/100, batch_num = 782, loss = 0.001440, time = 20.214
train_total = 50000, Accuracy = 54.86600 %, test_total= 10000, Accuracy = 49.09000 %
learn_rate:0.000466560000000
epoch = 61/100, batch_num = 782, loss = 0.001482, time = 20.234
train_total = 50000, Accuracy = 54.52800 %, test_total= 10000, Accuracy = 48.95000 %
learn_rate:0.000466560000000
epoch = 62/100, batch_num = 782, loss = 0.001477, time = 20.284
train_total = 50000, Accuracy = 54.04400 %, test_total= 10000, Accuracy = 48.53000 %
learn_rate:0.000466560000000
epoch = 63/100, batch_num = 782, loss = 0.001458, time = 20.278
train_total = 50000, Accuracy = 55.12200 %, test_total= 10000, Accuracy = 49.43000 %
learn_rate:0.000466560000000
epoch = 64/100, batch_num = 782, loss = 0.001461, time = 20.281
train_total = 50000, Accuracy = 55.31400 %, test_total= 10000, Accuracy = 49.36000 %
learn_rate:0.000466560000000
epoch = 65/100, batch_num = 782, loss = 0.001469, time = 20.215
train_total = 50000, Accuracy = 54.66600 %, test_total= 10000, Accuracy = 48.85000 %
learn_rate:0.000466560000000
epoch = 66/100, batch_num = 782, loss = 0.001485, time = 20.369
train_total = 50000, Accuracy = 54.86400 %, test_total= 10000, Accuracy = 49.28000 %
learn_rate:0.000466560000000
epoch = 67/100, batch_num = 782, loss = 0.001469, time = 20.219
train_total = 50000, Accuracy = 54.04800 %, test_total= 10000, Accuracy = 48.46000 %
learn_rate:0.000466560000000
epoch = 68/100, batch_num = 782, loss = 0.001484, time = 20.315
train_total = 50000, Accuracy = 55.65400 %, test_total= 10000, Accuracy = 49.71000 %
learn_rate:0.000466560000000
epoch = 69/100, batch_num = 782, loss = 0.001469, time = 20.336
train_total = 50000, Accuracy = 54.09200 %, test_total= 10000, Accuracy = 48.69000 %
learn_rate:0.000466560000000
epoch = 70/100, batch_num = 782, loss = 0.001514, time = 20.383
train_total = 50000, Accuracy = 54.48400 %, test_total= 10000, Accuracy = 48.92000 %
learn_rate:0.000279936000000
epoch = 71/100, batch_num = 782, loss = 0.001444, time = 20.361
train_total = 50000, Accuracy = 54.71600 %, test_total= 10000, Accuracy = 49.39000 %
learn_rate:0.000279936000000
epoch = 72/100, batch_num = 782, loss = 0.001429, time = 20.252
train_total = 50000, Accuracy = 53.90800 %, test_total= 10000, Accuracy = 48.27000 %
learn_rate:0.000279936000000
epoch = 73/100, batch_num = 782, loss = 0.001452, time = 20.221
train_total = 50000, Accuracy = 55.43600 %, test_total= 10000, Accuracy = 49.66000 %
learn_rate:0.000279936000000
epoch = 74/100, batch_num = 782, loss = 0.001466, time = 20.291
train_total = 50000, Accuracy = 55.00800 %, test_total= 10000, Accuracy = 49.34000 %
learn_rate:0.000279936000000
epoch = 75/100, batch_num = 782, loss = 0.001456, time = 20.268
train_total = 50000, Accuracy = 53.05600 %, test_total= 10000, Accuracy = 47.84000 %
learn_rate:0.000279936000000
epoch = 76/100, batch_num = 782, loss = 0.001482, time = 20.403
train_total = 50000, Accuracy = 54.63400 %, test_total= 10000, Accuracy = 49.00000 %
learn_rate:0.000279936000000
epoch = 77/100, batch_num = 782, loss = 0.001481, time = 20.300
train_total = 50000, Accuracy = 54.45400 %, test_total= 10000, Accuracy = 48.95000 %
learn_rate:0.000279936000000
epoch = 78/100, batch_num = 782, loss = 0.001469, time = 20.349
train_total = 50000, Accuracy = 55.05000 %, test_total= 10000, Accuracy = 49.47000 %
learn_rate:0.000279936000000
epoch = 79/100, batch_num = 782, loss = 0.001516, time = 20.179
train_total = 50000, Accuracy = 54.71000 %, test_total= 10000, Accuracy = 48.91000 %
learn_rate:0.000279936000000
epoch = 80/100, batch_num = 782, loss = 0.001528, time = 20.335
train_total = 50000, Accuracy = 53.93200 %, test_total= 10000, Accuracy = 48.56000 %
learn_rate:0.000167961600000
epoch = 81/100, batch_num = 782, loss = 0.001489, time = 20.238
train_total = 50000, Accuracy = 55.02000 %, test_total= 10000, Accuracy = 49.48000 %
learn_rate:0.000167961600000
epoch = 82/100, batch_num = 782, loss = 0.001494, time = 20.359
train_total = 50000, Accuracy = 54.74800 %, test_total= 10000, Accuracy = 48.92000 %
learn_rate:0.000167961600000
epoch = 83/100, batch_num = 782, loss = 0.001531, time = 20.469
train_total = 50000, Accuracy = 53.87200 %, test_total= 10000, Accuracy = 48.33000 %
learn_rate:0.000167961600000
epoch = 84/100, batch_num = 782, loss = 0.001547, time = 20.333
train_total = 50000, Accuracy = 54.89000 %, test_total= 10000, Accuracy = 49.50000 %
learn_rate:0.000167961600000
epoch = 85/100, batch_num = 782, loss = 0.001479, time = 20.248
train_total = 50000, Accuracy = 52.95000 %, test_total= 10000, Accuracy = 47.83000 %
learn_rate:0.000167961600000
epoch = 86/100, batch_num = 782, loss = 0.001508, time = 20.275
train_total = 50000, Accuracy = 56.31800 %, test_total= 10000, Accuracy = 50.58000 %
learn_rate:0.000167961600000
epoch = 87/100, batch_num = 782, loss = 0.001515, time = 20.320
train_total = 50000, Accuracy = 55.44400 %, test_total= 10000, Accuracy = 49.58000 %
learn_rate:0.000167961600000
epoch = 88/100, batch_num = 782, loss = 0.001507, time = 20.327
train_total = 50000, Accuracy = 53.90000 %, test_total= 10000, Accuracy = 48.48000 %
learn_rate:0.000167961600000
epoch = 89/100, batch_num = 782, loss = 0.001480, time = 20.315
train_total = 50000, Accuracy = 56.02600 %, test_total= 10000, Accuracy = 50.01000 %
learn_rate:0.000167961600000
epoch = 90/100, batch_num = 782, loss = 0.001472, time = 20.310
train_total = 50000, Accuracy = 55.20000 %, test_total= 10000, Accuracy = 49.51000 %
learn_rate:0.000100776960000
epoch = 91/100, batch_num = 782, loss = 0.001521, time = 20.263
train_total = 50000, Accuracy = 56.23400 %, test_total= 10000, Accuracy = 50.64000 %
learn_rate:0.000100776960000
epoch = 92/100, batch_num = 782, loss = 0.001501, time = 20.237
train_total = 50000, Accuracy = 55.11600 %, test_total= 10000, Accuracy = 49.61000 %
learn_rate:0.000100776960000
epoch = 93/100, batch_num = 782, loss = 0.001574, time = 20.377
train_total = 50000, Accuracy = 54.95800 %, test_total= 10000, Accuracy = 49.18000 %
learn_rate:0.000100776960000
epoch = 94/100, batch_num = 782, loss = 0.001501, time = 20.296
train_total = 50000, Accuracy = 55.77600 %, test_total= 10000, Accuracy = 49.97000 %
learn_rate:0.000100776960000
epoch = 95/100, batch_num = 782, loss = 0.001461, time = 20.223
train_total = 50000, Accuracy = 54.73600 %, test_total= 10000, Accuracy = 49.17000 %
learn_rate:0.000100776960000
epoch = 96/100, batch_num = 782, loss = 0.001491, time = 20.399
train_total = 50000, Accuracy = 53.49400 %, test_total= 10000, Accuracy = 48.26000 %
learn_rate:0.000100776960000
epoch = 97/100, batch_num = 782, loss = 0.001490, time = 20.291
train_total = 50000, Accuracy = 54.63800 %, test_total= 10000, Accuracy = 49.04000 %
learn_rate:0.000100776960000
epoch = 98/100, batch_num = 782, loss = 0.001608, time = 20.332
train_total = 50000, Accuracy = 52.93600 %, test_total= 10000, Accuracy = 47.82000 %
learn_rate:0.000100776960000
epoch = 99/100, batch_num = 782, loss = 0.001496, time = 20.266
train_total = 50000, Accuracy = 54.14800 %, test_total= 10000, Accuracy = 48.89000 %
learn_rate:0.000100776960000
epoch = 100/100, batch_num = 782, loss = 0.001528, time = 20.385
train_total = 50000, Accuracy = 54.02000 %, test_total= 10000, Accuracy = 48.37000 %
```

Loss of Resnet18_new:

    torch.Size([64, 10])
    Load datasets...
    Files already downloaded and verified
    Files already downloaded and verified
    50000 10000
    Resnet_new train...
    Start Train...
    learn_rate:0.010000000000000
    epoch = 1/100, batch_num = 782, loss = 1.451053, time = 23.282
    train_total = 50000, Accuracy = 58.61800 %, test_total= 10000, Accuracy = 57.56000 %
    learn_rate:0.010000000000000
    epoch = 2/100, batch_num = 782, loss = 0.928293, time = 21.907
    train_total = 50000, Accuracy = 71.94400 %, test_total= 10000, Accuracy = 70.90000 %
    learn_rate:0.010000000000000
    epoch = 3/100, batch_num = 782, loss = 0.724947, time = 22.050
    train_total = 50000, Accuracy = 76.09000 %, test_total= 10000, Accuracy = 74.86000 %
    learn_rate:0.010000000000000
    epoch = 4/100, batch_num = 782, loss = 0.603777, time = 21.881
    train_total = 50000, Accuracy = 78.22600 %, test_total= 10000, Accuracy = 76.24000 %
    learn_rate:0.010000000000000
    epoch = 5/100, batch_num = 782, loss = 0.539772, time = 22.380
    train_total = 50000, Accuracy = 82.28600 %, test_total= 10000, Accuracy = 79.82000 %
    learn_rate:0.010000000000000
    epoch = 6/100, batch_num = 782, loss = 0.477935, time = 22.702
    train_total = 50000, Accuracy = 79.59600 %, test_total= 10000, Accuracy = 77.84000 %
    learn_rate:0.010000000000000
    epoch = 7/100, batch_num = 782, loss = 0.438771, time = 21.975
    train_total = 50000, Accuracy = 85.82200 %, test_total= 10000, Accuracy = 82.82000 %
    learn_rate:0.010000000000000
    epoch = 8/100, batch_num = 782, loss = 0.405585, time = 21.700
    train_total = 50000, Accuracy = 86.22400 %, test_total= 10000, Accuracy = 83.42000 %
    learn_rate:0.010000000000000
    epoch = 9/100, batch_num = 782, loss = 0.374122, time = 21.807
    train_total = 50000, Accuracy = 86.95600 %, test_total= 10000, Accuracy = 83.79000 %
    learn_rate:0.010000000000000
    epoch = 10/100, batch_num = 782, loss = 0.345220, time = 21.419
    train_total = 50000, Accuracy = 88.87400 %, test_total= 10000, Accuracy = 85.42000 %
    learn_rate:0.006000000000000
    epoch = 11/100, batch_num = 782, loss = 0.269898, time = 21.788
    train_total = 50000, Accuracy = 91.76000 %, test_total= 10000, Accuracy = 87.69000 %
    learn_rate:0.006000000000000
    epoch = 12/100, batch_num = 782, loss = 0.251359, time = 22.020
    train_total = 50000, Accuracy = 92.01600 %, test_total= 10000, Accuracy = 87.87000 %
    learn_rate:0.006000000000000
    epoch = 13/100, batch_num = 782, loss = 0.237086, time = 22.743
    train_total = 50000, Accuracy = 92.16000 %, test_total= 10000, Accuracy = 87.76000 %
    learn_rate:0.006000000000000
    epoch = 14/100, batch_num = 782, loss = 0.224472, time = 21.741
    train_total = 50000, Accuracy = 92.68000 %, test_total= 10000, Accuracy = 88.72000 %
    learn_rate:0.006000000000000
    epoch = 15/100, batch_num = 782, loss = 0.216702, time = 21.709
    train_total = 50000, Accuracy = 93.65800 %, test_total= 10000, Accuracy = 88.77000 %
    learn_rate:0.006000000000000
    epoch = 16/100, batch_num = 782, loss = 0.203758, time = 21.665
    train_total = 50000, Accuracy = 93.11800 %, test_total= 10000, Accuracy = 88.33000 %
    learn_rate:0.006000000000000
    epoch = 17/100, batch_num = 782, loss = 0.200266, time = 21.782
    train_total = 50000, Accuracy = 94.38600 %, test_total= 10000, Accuracy = 89.17000 %
    learn_rate:0.006000000000000
    epoch = 18/100, batch_num = 782, loss = 0.190930, time = 22.121
    train_total = 50000, Accuracy = 94.36000 %, test_total= 10000, Accuracy = 89.52000 %
    learn_rate:0.006000000000000
    epoch = 19/100, batch_num = 782, loss = 0.181329, time = 22.707
    train_total = 50000, Accuracy = 94.04400 %, test_total= 10000, Accuracy = 88.61000 %
    learn_rate:0.006000000000000
    epoch = 20/100, batch_num = 782, loss = 0.173605, time = 22.876
    train_total = 50000, Accuracy = 93.30000 %, test_total= 10000, Accuracy = 88.11000 %
    learn_rate:0.003600000000000
    epoch = 21/100, batch_num = 782, loss = 0.126447, time = 22.123
    train_total = 50000, Accuracy = 97.11200 %, test_total= 10000, Accuracy = 91.22000 %
    learn_rate:0.003600000000000
    epoch = 22/100, batch_num = 782, loss = 0.107373, time = 21.784
    train_total = 50000, Accuracy = 96.83000 %, test_total= 10000, Accuracy = 90.35000 %
    learn_rate:0.003600000000000
    epoch = 23/100, batch_num = 782, loss = 0.104210, time = 22.198
    train_total = 50000, Accuracy = 96.15800 %, test_total= 10000, Accuracy = 89.79000 %
    learn_rate:0.003600000000000
    epoch = 24/100, batch_num = 782, loss = 0.103576, time = 21.430
    train_total = 50000, Accuracy = 97.16000 %, test_total= 10000, Accuracy = 90.71000 %
    learn_rate:0.003600000000000
    epoch = 25/100, batch_num = 782, loss = 0.096196, time = 21.878
    train_total = 50000, Accuracy = 97.36600 %, test_total= 10000, Accuracy = 90.61000 %
    learn_rate:0.003600000000000
    epoch = 26/100, batch_num = 782, loss = 0.095333, time = 21.986
    train_total = 50000, Accuracy = 97.67800 %, test_total= 10000, Accuracy = 90.73000 %
    learn_rate:0.003600000000000
    epoch = 27/100, batch_num = 782, loss = 0.092612, time = 21.593
    train_total = 50000, Accuracy = 97.88400 %, test_total= 10000, Accuracy = 90.89000 %
    learn_rate:0.003600000000000
    epoch = 28/100, batch_num = 782, loss = 0.087495, time = 21.586
    train_total = 50000, Accuracy = 97.71600 %, test_total= 10000, Accuracy = 90.87000 %
    learn_rate:0.003600000000000
    epoch = 29/100, batch_num = 782, loss = 0.082360, time = 21.487
    train_total = 50000, Accuracy = 97.39000 %, test_total= 10000, Accuracy = 90.49000 %
    learn_rate:0.003600000000000
    epoch = 30/100, batch_num = 782, loss = 0.080648, time = 22.697
    train_total = 50000, Accuracy = 97.88200 %, test_total= 10000, Accuracy = 90.88000 %
    learn_rate:0.002160000000000
    epoch = 31/100, batch_num = 782, loss = 0.051497, time = 21.724
    train_total = 50000, Accuracy = 98.98000 %, test_total= 10000, Accuracy = 91.76000 %
    learn_rate:0.002160000000000
    epoch = 32/100, batch_num = 782, loss = 0.043576, time = 21.598
    train_total = 50000, Accuracy = 99.15200 %, test_total= 10000, Accuracy = 91.91000 %
    learn_rate:0.002160000000000
    epoch = 33/100, batch_num = 782, loss = 0.040170, time = 22.077
    train_total = 50000, Accuracy = 99.11400 %, test_total= 10000, Accuracy = 91.71000 %
    learn_rate:0.002160000000000
    epoch = 34/100, batch_num = 782, loss = 0.036510, time = 21.743
    train_total = 50000, Accuracy = 99.31000 %, test_total= 10000, Accuracy = 92.03000 %
    learn_rate:0.002160000000000
    epoch = 35/100, batch_num = 782, loss = 0.034952, time = 22.898
    train_total = 50000, Accuracy = 99.19400 %, test_total= 10000, Accuracy = 91.64000 %
    learn_rate:0.002160000000000
    epoch = 36/100, batch_num = 782, loss = 0.034848, time = 22.946
    train_total = 50000, Accuracy = 99.43800 %, test_total= 10000, Accuracy = 91.86000 %
    learn_rate:0.002160000000000
    epoch = 37/100, batch_num = 782, loss = 0.030716, time = 21.696
    train_total = 50000, Accuracy = 99.31200 %, test_total= 10000, Accuracy = 91.65000 %
    learn_rate:0.002160000000000
    epoch = 38/100, batch_num = 782, loss = 0.032617, time = 21.502
    train_total = 50000, Accuracy = 99.26400 %, test_total= 10000, Accuracy = 91.64000 %
    learn_rate:0.002160000000000
    epoch = 39/100, batch_num = 782, loss = 0.031037, time = 21.488
    train_total = 50000, Accuracy = 99.41200 %, test_total= 10000, Accuracy = 91.66000 %
    learn_rate:0.002160000000000
    epoch = 40/100, batch_num = 782, loss = 0.028539, time = 22.117
    train_total = 50000, Accuracy = 99.17400 %, test_total= 10000, Accuracy = 91.58000 %
    learn_rate:0.001296000000000
    epoch = 41/100, batch_num = 782, loss = 0.020754, time = 21.825
    train_total = 50000, Accuracy = 99.74400 %, test_total= 10000, Accuracy = 92.26000 %
    learn_rate:0.001296000000000
    epoch = 42/100, batch_num = 782, loss = 0.016894, time = 21.502
    train_total = 50000, Accuracy = 99.77600 %, test_total= 10000, Accuracy = 92.48000 %
    learn_rate:0.001296000000000
    epoch = 43/100, batch_num = 782, loss = 0.013981, time = 22.001
    train_total = 50000, Accuracy = 99.84000 %, test_total= 10000, Accuracy = 92.43000 %
    learn_rate:0.001296000000000
    epoch = 44/100, batch_num = 782, loss = 0.013949, time = 21.460
    train_total = 50000, Accuracy = 99.85800 %, test_total= 10000, Accuracy = 92.44000 %
    learn_rate:0.001296000000000
    epoch = 45/100, batch_num = 782, loss = 0.013909, time = 21.732
    train_total = 50000, Accuracy = 99.86600 %, test_total= 10000, Accuracy = 92.35000 %
    learn_rate:0.001296000000000
    epoch = 46/100, batch_num = 782, loss = 0.012369, time = 22.156
    train_total = 50000, Accuracy = 99.88600 %, test_total= 10000, Accuracy = 92.59000 %
    learn_rate:0.001296000000000
    epoch = 47/100, batch_num = 782, loss = 0.012164, time = 21.712
    train_total = 50000, Accuracy = 99.83400 %, test_total= 10000, Accuracy = 92.31000 %
    learn_rate:0.001296000000000
    epoch = 48/100, batch_num = 782, loss = 0.012542, time = 22.416
    train_total = 50000, Accuracy = 99.76000 %, test_total= 10000, Accuracy = 92.12000 %
    learn_rate:0.001296000000000
    epoch = 49/100, batch_num = 782, loss = 0.010346, time = 21.668
    train_total = 50000, Accuracy = 99.91200 %, test_total= 10000, Accuracy = 92.58000 %
    learn_rate:0.001296000000000
    epoch = 50/100, batch_num = 782, loss = 0.011311, time = 21.650
    train_total = 50000, Accuracy = 99.90800 %, test_total= 10000, Accuracy = 92.44000 %
    learn_rate:0.000777600000000
    epoch = 51/100, batch_num = 782, loss = 0.008120, time = 21.966
    train_total = 50000, Accuracy = 99.94400 %, test_total= 10000, Accuracy = 92.78000 %
    learn_rate:0.000777600000000
    epoch = 52/100, batch_num = 782, loss = 0.007178, time = 21.983
    train_total = 50000, Accuracy = 99.96200 %, test_total= 10000, Accuracy = 92.68000 %
    learn_rate:0.000777600000000
    epoch = 53/100, batch_num = 782, loss = 0.007624, time = 22.092
    train_total = 50000, Accuracy = 99.94200 %, test_total= 10000, Accuracy = 92.46000 %
    learn_rate:0.000777600000000
    epoch = 54/100, batch_num = 782, loss = 0.006125, time = 21.804
    train_total = 50000, Accuracy = 99.94400 %, test_total= 10000, Accuracy = 92.69000 %
    learn_rate:0.000777600000000
    epoch = 55/100, batch_num = 782, loss = 0.006559, time = 21.689
    train_total = 50000, Accuracy = 99.97600 %, test_total= 10000, Accuracy = 92.87000 %
    learn_rate:0.000777600000000
    epoch = 56/100, batch_num = 782, loss = 0.005900, time = 21.473
    train_total = 50000, Accuracy = 99.98200 %, test_total= 10000, Accuracy = 92.92000 %
    learn_rate:0.000777600000000
    epoch = 57/100, batch_num = 782, loss = 0.005508, time = 22.094
    train_total = 50000, Accuracy = 99.97400 %, test_total= 10000, Accuracy = 92.77000 %
    learn_rate:0.000777600000000
    epoch = 58/100, batch_num = 782, loss = 0.006126, time = 21.645
    train_total = 50000, Accuracy = 99.97600 %, test_total= 10000, Accuracy = 92.58000 %
    learn_rate:0.000777600000000
    epoch = 59/100, batch_num = 782, loss = 0.005585, time = 21.700
    train_total = 50000, Accuracy = 99.98000 %, test_total= 10000, Accuracy = 92.92000 %
    learn_rate:0.000777600000000
    epoch = 60/100, batch_num = 782, loss = 0.005175, time = 22.570
    train_total = 50000, Accuracy = 99.98800 %, test_total= 10000, Accuracy = 92.85000 %
    learn_rate:0.000466560000000
    epoch = 61/100, batch_num = 782, loss = 0.004352, time = 21.799
    train_total = 50000, Accuracy = 99.98800 %, test_total= 10000, Accuracy = 93.02000 %
    learn_rate:0.000466560000000
    epoch = 62/100, batch_num = 782, loss = 0.004305, time = 22.381
    train_total = 50000, Accuracy = 99.98600 %, test_total= 10000, Accuracy = 93.17000 %
    learn_rate:0.000466560000000
    epoch = 63/100, batch_num = 782, loss = 0.004180, time = 22.000
    train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 92.94000 %
    learn_rate:0.000466560000000
    epoch = 64/100, batch_num = 782, loss = 0.004102, time = 21.666
    train_total = 50000, Accuracy = 99.99000 %, test_total= 10000, Accuracy = 92.72000 %
    learn_rate:0.000466560000000
    epoch = 65/100, batch_num = 782, loss = 0.004598, time = 21.820
    train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 92.98000 %
    learn_rate:0.000466560000000
    epoch = 66/100, batch_num = 782, loss = 0.003746, time = 21.805
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 92.83000 %
    learn_rate:0.000466560000000
    epoch = 67/100, batch_num = 782, loss = 0.004035, time = 21.787
    train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 93.15000 %
    learn_rate:0.000466560000000
    epoch = 68/100, batch_num = 782, loss = 0.004126, time = 21.785
    train_total = 50000, Accuracy = 99.98600 %, test_total= 10000, Accuracy = 92.78000 %
    learn_rate:0.000466560000000
    epoch = 69/100, batch_num = 782, loss = 0.003450, time = 21.634
    train_total = 50000, Accuracy = 99.99000 %, test_total= 10000, Accuracy = 93.08000 %
    learn_rate:0.000466560000000
    epoch = 70/100, batch_num = 782, loss = 0.003564, time = 21.636
    train_total = 50000, Accuracy = 99.99000 %, test_total= 10000, Accuracy = 92.88000 %
    learn_rate:0.000279936000000
    epoch = 71/100, batch_num = 782, loss = 0.003281, time = 21.802
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 93.08000 %
    learn_rate:0.000279936000000
    epoch = 72/100, batch_num = 782, loss = 0.003167, time = 21.694
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 93.03000 %
    learn_rate:0.000279936000000
    epoch = 73/100, batch_num = 782, loss = 0.003314, time = 22.032
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 93.02000 %
    learn_rate:0.000279936000000
    epoch = 74/100, batch_num = 782, loss = 0.003216, time = 21.721
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 93.01000 %
    learn_rate:0.000279936000000
    epoch = 75/100, batch_num = 782, loss = 0.003373, time = 21.596
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 93.10000 %
    learn_rate:0.000279936000000
    epoch = 76/100, batch_num = 782, loss = 0.003131, time = 22.089
    train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 93.05000 %
    learn_rate:0.000279936000000
    epoch = 77/100, batch_num = 782, loss = 0.003092, time = 22.110
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 93.01000 %
    learn_rate:0.000279936000000
    epoch = 78/100, batch_num = 782, loss = 0.003060, time = 21.796
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 93.03000 %
    learn_rate:0.000279936000000
    epoch = 79/100, batch_num = 782, loss = 0.002961, time = 21.776
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 93.02000 %
    learn_rate:0.000279936000000
    epoch = 80/100, batch_num = 782, loss = 0.003169, time = 22.426
    train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.89000 %
    learn_rate:0.000167961600000
    epoch = 81/100, batch_num = 782, loss = 0.002930, time = 22.116
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 92.96000 %
    learn_rate:0.000167961600000
    epoch = 82/100, batch_num = 782, loss = 0.003191, time = 22.034
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 92.84000 %
    learn_rate:0.000167961600000
    epoch = 83/100, batch_num = 782, loss = 0.002860, time = 22.164
    train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.16000 %
    learn_rate:0.000167961600000
    epoch = 84/100, batch_num = 782, loss = 0.002700, time = 22.663
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 92.91000 %
    learn_rate:0.000167961600000
    epoch = 85/100, batch_num = 782, loss = 0.002644, time = 22.250
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 92.98000 %
    learn_rate:0.000167961600000
    epoch = 86/100, batch_num = 782, loss = 0.002656, time = 22.101
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 92.98000 %
    learn_rate:0.000167961600000
    epoch = 87/100, batch_num = 782, loss = 0.003052, time = 21.886
    train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 92.91000 %
    learn_rate:0.000167961600000
    epoch = 88/100, batch_num = 782, loss = 0.002605, time = 22.293
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 92.96000 %
    learn_rate:0.000167961600000
    epoch = 89/100, batch_num = 782, loss = 0.002603, time = 21.796
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 93.23000 %
    learn_rate:0.000167961600000
    epoch = 90/100, batch_num = 782, loss = 0.002599, time = 22.199
    train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.03000 %
    learn_rate:0.000100776960000
    epoch = 91/100, batch_num = 782, loss = 0.002655, time = 23.211
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 93.11000 %
    learn_rate:0.000100776960000
    epoch = 92/100, batch_num = 782, loss = 0.002482, time = 21.936
    train_total = 50000, Accuracy = 100.00000 %, test_total= 10000, Accuracy = 93.25000 %
    learn_rate:0.000100776960000
    epoch = 93/100, batch_num = 782, loss = 0.002680, time = 21.564
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 92.93000 %
    learn_rate:0.000100776960000
    epoch = 94/100, batch_num = 782, loss = 0.002440, time = 21.776
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 93.18000 %
    learn_rate:0.000100776960000
    epoch = 95/100, batch_num = 782, loss = 0.002384, time = 22.679
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 92.95000 %
    learn_rate:0.000100776960000
    epoch = 96/100, batch_num = 782, loss = 0.002711, time = 21.659
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 93.00000 %
    learn_rate:0.000100776960000
    epoch = 97/100, batch_num = 782, loss = 0.002881, time = 23.327
    train_total = 50000, Accuracy = 99.99600 %, test_total= 10000, Accuracy = 93.27000 %
    learn_rate:0.000100776960000
    epoch = 98/100, batch_num = 782, loss = 0.002583, time = 21.802
    train_total = 50000, Accuracy = 99.99200 %, test_total= 10000, Accuracy = 92.83000 %
    learn_rate:0.000100776960000
    epoch = 99/100, batch_num = 782, loss = 0.002490, time = 21.867
    train_total = 50000, Accuracy = 99.99400 %, test_total= 10000, Accuracy = 93.11000 %
    learn_rate:0.000100776960000
    epoch = 100/100, batch_num = 782, loss = 0.002650, time = 22.361
    train_total = 50000, Accuracy = 99.99800 %, test_total= 10000, Accuracy = 93.04000 %
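The `learn_rate` values in the log fall by a factor of 0.6 every 10 epochs, which is what a step decay produces. As a rough sketch, the schedule can be reproduced in closed form. The function name `step_lr` is hypothetical, and the starting rate of 0.01 is read off the log rather than taken from any script.

```python
def step_lr(base_lr: float, gamma: float, step_size: int, epoch: int) -> float:
    """Learning rate in effect during the 0-based `epoch` under step decay.

    The rate is multiplied by `gamma` once every `step_size` epochs.
    """
    return base_lr * gamma ** (epoch // step_size)


# Values assumed from the log: it starts at 0.01 and decays by 0.6 every 10 epochs.
for epoch in (0, 10, 20, 90):
    print("epoch %3d  learn_rate:%.15f" % (epoch + 1, step_lr(0.01, 0.6, 10, epoch)))
```

With these assumed values, the rate at epoch 91 comes out near 0.0001, the last value printed in the log.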

Figure 3-1: Accuracy of the Resnet18 model

Figure 3-2: Accuracy of the Resnet18_new model
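The accuracy figures plot per-epoch train and test accuracy. If only the console output is at hand, the same two series can be recovered from it. The sketch below assumes the log uses the exact `train_total = ..., Accuracy = ... %` line format printed above. `parse_accuracies` and `SAMPLE_LOG` are hypothetical names, and the sample holds just the first two epochs of the log.

```python
import re

# Matches the per-epoch summary line of the training log.
ACC_LINE = re.compile(
    r"train_total = \d+, Accuracy = ([\d.]+) %, "
    r"test_total= \d+, Accuracy = ([\d.]+) %"
)

# First two epochs copied from the log, used only as sample input.
SAMPLE_LOG = """\
learn_rate:0.010000000000000
epoch = 1/100, batch_num = 782, loss = 1.451053, time = 23.282
train_total = 50000, Accuracy = 58.61800 %, test_total= 10000, Accuracy = 57.56000 %
learn_rate:0.010000000000000
epoch = 2/100, batch_num = 782, loss = 0.928293, time = 21.907
train_total = 50000, Accuracy = 71.94400 %, test_total= 10000, Accuracy = 70.90000 %
"""


def parse_accuracies(log_text: str) -> list[tuple[float, float]]:
    """Return one (train_acc, test_acc) pair per epoch found in the log."""
    return [(float(tr), float(te)) for tr, te in ACC_LINE.findall(log_text)]


pairs = parse_accuracies(SAMPLE_LOG)
train_acc = [p[0] for p in pairs]
test_acc = [p[1] for p in pairs]
print(train_acc, test_acc)
```

The two lists can then be passed to `plt.plot` against the epoch index to redraw the curves.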

For improvements that raise Resnet18's accuracy on CIFAR10 to 95%, see: https://blog.csdn.net/immc1979/article/details/128324029

    import time

    import torch
    from matplotlib import pyplot as plt
    from torch import nn, optim
    from torch.utils.data import DataLoader
    from torchvision import datasets, transforms

    from Lenet5 import Lenet5_new  # only needed for the commented-out LeNet-5 variant below
    from Resnet18 import ResNet18


    def main():
        print("Load datasets...")

        # transforms.RandomHorizontalFlip(p=0.5): flip the image horizontally with probability 0.5
        # transforms.ToTensor(): reorder (H, W, C) -> (C, H, W) and scale each pixel from 0-255 to 0-1
        # transforms.Normalize: apply (x - mean) / std per channel; with these ImageNet statistics
        # the result is roughly zero-centered, not strictly confined to (-1, 1)
        transform_train = transforms.Compose([
            transforms.RandomHorizontalFlip(p=0.5),
            transforms.ToTensor(),
            transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
        ])

        transform_test = transforms.Compose([
            transforms.ToTensor(),
            transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
        ])

        # Download the dataset with the built-in helper
        train_dataset = datasets.CIFAR10(root="./data/Cifar10/", train=True,
                                         transform=transform_train, download=True)
        test_dataset = datasets.CIFAR10(root="./data/Cifar10/", train=False,
                                        transform=transform_test, download=True)

        print(len(train_dataset), len(test_dataset))

        batch_size = 64
        train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
        test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

        # Select the device
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

        # Initialize the model; swapping the model here is the only change needed
        # model = Lenet5_new().to(device)
        model = ResNet18().to(device)

        # Loss function and optimizer
        criterion = nn.CrossEntropyLoss()  # softmax cross-entropy for multi-class classification
        opt = optim.SGD(model.parameters(), lr=0.001, momentum=0.8, weight_decay=0.001)

        # Learning-rate schedule: every step_size epochs, lr = lr * gamma
        schedule = optim.lr_scheduler.StepLR(opt, step_size=10, gamma=0.6, last_epoch=-1)

        print("Start Train...")

        epochs = 100

        train_acc_list = []
        test_acc_list = []
        epochs_list = []

        for epoch in range(epochs):
            start = time.time()

            model.train()

            running_loss = 0.0
            batch_num = 0

            for inputs, labels in train_loader:
                inputs, labels = inputs.to(device), labels.to(device)

                # Forward pass
                outputs = model(inputs)
                # Compute the loss (already on the same device as the outputs)
                loss = criterion(outputs, labels)

                # Reset gradients
                opt.zero_grad()
                # Backpropagate
                loss.backward()
                # Update the parameters from the gradients
                opt.step()

                # Sum of the losses over all batches in this epoch
                running_loss += loss.item()
                batch_num += 1

            epochs_list.append(epoch)

            # Print the learning rate used in this epoch, then advance the schedule
            lr_1 = opt.param_groups[0]['lr']
            print("learn_rate:%.15f" % lr_1)
            schedule.step()

            end = time.time()
            print('epoch = %d/%d, batch_num = %d, loss = %.6f, time = %.3f'
                  % (epoch + 1, epochs, batch_num, running_loss / batch_num, end - start))

            # Evaluate on the training and test sets after every epoch
            model.eval()
            train_correct = 0.0
            train_total = 0

            test_correct = 0.0
            test_total = 0

            # Evaluation does not need gradients
            with torch.no_grad():
                for inputs, labels in train_loader:
                    inputs, labels = inputs.to(device), labels.to(device)
                    outputs = model(inputs)

                    pred = outputs.argmax(dim=1)  # index of the largest logit in each row
                    train_total += inputs.size(0)
                    train_correct += torch.eq(pred, labels).sum().item()

                for inputs, labels in test_loader:
                    inputs, labels = inputs.to(device), labels.to(device)
                    outputs = model(inputs)

                    pred = outputs.argmax(dim=1)  # index of the largest logit in each row
                    test_total += inputs.size(0)
                    test_correct += torch.eq(pred, labels).sum().item()

            print("train_total = %d, Accuracy = %.5f %%, test_total= %d, Accuracy = %.5f %%"
                  % (train_total, 100 * train_correct / train_total,
                     test_total, 100 * test_correct / test_total))

            train_acc_list.append(100 * train_correct / train_total)
            test_acc_list.append(100 * test_correct / test_total)

        # Plot train and test accuracy against the epoch index
        plt.figure(figsize=(4, 4))
        plt.plot(epochs_list, train_acc_list, label='train_acc_list')
        plt.plot(epochs_list, test_acc_list, label='test_acc_list')
        plt.legend()
        plt.title("train_test_acc")
        plt.savefig('resnet18_cc_epoch_{:04d}.png'.format(epochs))
        plt.close()


    if __name__ == "__main__":
        main()
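One detail in the script is worth checking by hand: `transforms.Normalize` computes `(x - mean) / std` per channel, and with the mean and std values used here the output does not stay inside (-1, 1). The sketch below works this out in plain Python for inputs already scaled to [0, 1] by `ToTensor`. The helper name `normalized_range` is hypothetical.

```python
# Per-channel statistics passed to transforms.Normalize in the script.
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def normalized_range(mean: float, std: float) -> tuple[float, float]:
    """Return the values that inputs 0.0 and 1.0 map to under (x - mean) / std."""
    return (0.0 - mean) / std, (1.0 - mean) / std


for channel, mean, std in zip("RGB", MEAN, STD):
    low, high = normalized_range(mean, std)
    print("%s: [%.4f, %.4f]" % (channel, low, high))
```

Each channel spans roughly -2 to a little over 2, so the normalized pixels are approximately zero-centered rather than bounded by ±1.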
