B05 - OpenStack high availability: HAProxy cluster deployment

1. Install haproxy

Install haproxy on all controller nodes; controller01 is used as the example.

[root@controller01 ~]# yum -y install haproxy

2. Configure haproxy.cfg

[root@controller01 ~]# cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.bak

[root@controller01 ~]# cat /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local0

chroot /var/lib/haproxy

daemon

group haproxy

user haproxy

maxconn 4000

pidfile /var/run/haproxy.pid

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

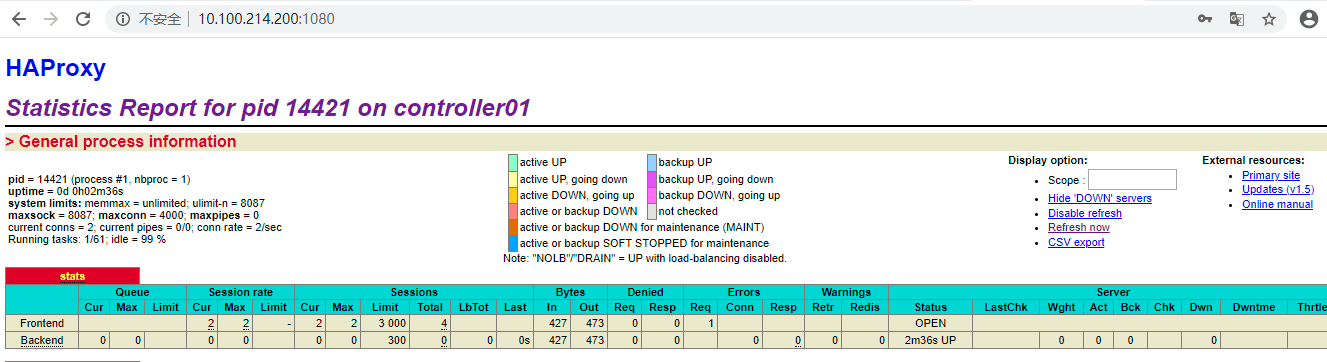

# haproxy statistics page

listen stats

bind 0.0.0.0:1080

mode http

stats enable

stats uri /

stats realm OpenStack\ Haproxy

stats auth admin:admin

stats refresh 30s

stats show-node

stats show-legends

stats hide-version

# horizon service

listen dashboard_cluster

bind 10.100.214.200:80

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.100.214.201:80 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:80 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:80 check inter 2000 rise 2 fall 5

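# mariadb service

# controller01 is the master and controller02/03 are backups; a single-master, multiple-backup layout avoids data inconsistency.

# The official example health-checks port 9200 (heartbeat). In testing, after mariadb went down on a node, the "/usr/bin/clustercheck" script could no longer detect the service, but port 9200 (managed by xinetd) still answered normally, so haproxy kept forwarding requests to the node whose mariadb was down. As a workaround, the check is made against port 3306 for now.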

listen galera_cluster

bind 10.100.214.200:3306

balance source

mode tcp

server controller01 10.100.214.201:3306 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:3306 backup check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:3306 backup check inter 2000 rise 2 fall 5

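# Provide an HA access port for the rabbitmq cluster, used by the OpenStack services.

# If the OpenStack services connect to the rabbitmq cluster nodes directly, this rabbitmq load-balancing section can be omitted.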

listen rabbitmq_cluster

bind 10.100.214.200:5673

mode tcp

option tcpka

balance roundrobin

timeout client 3h

timeout server 3h

option clitcpka

server controller01 10.100.214.201:5672 check inter 10s rise 2 fall 5

server controller02 10.100.214.202:5672 check inter 10s rise 2 fall 5

server controller03 10.100.214.203:5672 check inter 10s rise 2 fall 5

# glance_api service

listen glance_api_cluster

bind 10.100.214.200:9292

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.100.214.201:9292 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:9292 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:9292 check inter 2000 rise 2 fall 5

# glance_registry service

listen glance_registry_cluster

bind 10.100.214.200:9191

balance source

option tcpka

option tcplog

server controller01 10.100.214.201:9191 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:9191 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:9191 check inter 2000 rise 2 fall 5

# keystone_admin_internal_api service

listen keystone_admin_cluster

bind 10.100.214.200:35357

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.100.214.201:35357 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:35357 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:35357 check inter 2000 rise 2 fall 5

# keystone_public_api service

listen keystone_public_cluster

bind 10.100.214.200:5000

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.100.214.201:5000 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:5000 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:5000 check inter 2000 rise 2 fall 5

# AWS EC2-compatible API (ec2-api)

listen nova_ec2_api_cluster

bind 10.100.214.200:8773

balance source

option tcpka

option tcplog

server controller01 10.100.214.201:8773 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:8773 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:8773 check inter 2000 rise 2 fall 5

listen nova_compute_api_cluster

bind 10.100.214.200:8774

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.100.214.201:8774 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:8774 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:8774 check inter 2000 rise 2 fall 5

listen nova_placement_cluster

bind 10.100.214.200:8778

balance source

option tcpka

option tcplog

server controller01 10.100.214.201:8778 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:8778 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:8778 check inter 2000 rise 2 fall 5

listen nova_metadata_api_cluster

bind 10.100.214.200:8775

balance source

option tcpka

option tcplog

server controller01 10.100.214.201:8775 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:8775 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:8775 check inter 2000 rise 2 fall 5

listen nova_vncproxy_cluster

bind 10.100.214.200:6080

balance source

option tcpka

option tcplog

server controller01 10.100.214.201:6080 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:6080 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:6080 check inter 2000 rise 2 fall 5

listen neutron_api_cluster

bind 10.100.214.200:9696

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.100.214.201:9696 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:9696 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:9696 check inter 2000 rise 2 fall 5

listen cinder_api_cluster

bind 10.100.214.200:8776

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.100.214.201:8776 check inter 2000 rise 2 fall 5

server controller02 10.100.214.202:8776 check inter 2000 rise 2 fall 5

server controller03 10.100.214.203:8776 check inter 2000 rise 2 fall 5
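Most listen sections above differ only in name, port and `option` lines. Generating them removes most of the copy-paste work and the address slips that come with it. The following is a minimal sketch: the helper `gen_listen` and the variables `VIP` and `NODES` are hypothetical, and the addresses are copied from this config. The galera and rabbitmq sections use different settings (`mode tcp`, `backup`, longer timeouts) and are better kept hand-written.

```shell
#!/bin/sh
# Hypothetical generator for the repetitive "listen" sections above.
# VIP and controller addresses are copied from this article's config;
# adjust them for your own environment.
VIP=10.100.214.200
NODES="controller01:10.100.214.201 controller02:10.100.214.202 controller03:10.100.214.203"

# gen_listen NAME PORT [OPTION...]
# Prints a section with "balance source", one "option" line per extra
# argument, and one health-checked server line per controller.
gen_listen() {
  name=$1 port=$2
  shift 2
  printf 'listen %s\n' "$name"
  printf '  bind %s:%s\n' "$VIP" "$port"
  printf '  balance source\n'
  for opt in "$@"; do
    printf '  option %s\n' "$opt"
  done
  for node in $NODES; do
    # ${node%%:*} -> controller name, ${node#*:} -> IP address
    printf '  server %s %s:%s check inter 2000 rise 2 fall 5\n' \
      "${node%%:*}" "${node#*:}" "$port"
  done
}
```

For example, `gen_listen neutron_api_cluster 9696 tcpka httpchk tcplog` prints a section equivalent to neutron_api_cluster above.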

Copy the configuration file to the other controller nodes:

[root@controller01 ~]# scp /etc/haproxy/haproxy.cfg 10.100.214.202:/etc/haproxy/haproxy.cfg

[root@controller01 ~]# scp /etc/haproxy/haproxy.cfg 10.100.214.203:/etc/haproxy/haproxy.cfg
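The copy can be combined with a syntax check on each target node. `haproxy -c -f FILE` only parses the configuration and reports errors; it does not start the proxy or bind any ports. The following is a minimal sketch. It assumes root SSH access from controller01 to the other controllers. The function name `sync_haproxy_cfg` and the `RUN` dry-run variable are hypothetical.

```shell
#!/bin/sh
# Sketch: copy haproxy.cfg to the given nodes and validate it there.
# Node arguments are up to you (the article uses 10.100.214.202/203);
# set RUN=echo to print the commands instead of running them.
CFG=/etc/haproxy/haproxy.cfg

sync_haproxy_cfg() {
  for node in "$@"; do
    $RUN scp "$CFG" "$node:$CFG" || return 1
    # "haproxy -c" only parses the file and reports errors; it does not
    # bind any ports or touch the running service.
    $RUN ssh "$node" haproxy -c -f "$CFG" || return 1
  done
}
```

Usage: `sync_haproxy_cfg 10.100.214.202 10.100.214.203`. Running `haproxy -c -f /etc/haproxy/haproxy.cfg` locally on controller01 first catches errors before they are copied anywhere.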

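3. Configure kernel parameters

# Modify the kernel parameters on all controller nodes; controller01 is used as the example.

# net.ipv4.ip_nonlocal_bind: whether a process may bind to an IP address that is not configured on the host; this determines whether haproxy can bind to the VIP and keep working when the VIP moves between nodes.

# net.ipv4.ip_forward: whether IP forwarding is allowed.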

[root@controller01 ~]# echo "net.ipv4.ip_nonlocal_bind = 1" >>/etc/sysctl.conf

[root@controller01 ~]# echo "net.ipv4.ip_forward = 1" >>/etc/sysctl.conf

[root@controller01 ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1
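Appending with `echo ... >>` adds a duplicate line every time the step is repeated. The following is a minimal sketch of an idempotent alternative. The helper name `ensure_sysctl` is hypothetical, and GNU sed (`sed -i`) is assumed, as on the CentOS hosts used here. It updates an existing `key = value` line in place, or appends one if the key is missing.

```shell
#!/bin/sh
# Sketch: set "KEY = VALUE" in a sysctl config file without appending
# duplicates when the step is repeated. GNU sed (-i) is assumed.
ensure_sysctl() {
  key=$1 value=$2 file=$3
  # Escape the dots in the key so they match literally in the regex.
  pat=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -q "^[[:space:]]*${pat}[[:space:]]*=" "$file" 2>/dev/null; then
    # Key already present: rewrite its value in place.
    sed -i "s|^[[:space:]]*${pat}[[:space:]]*=.*|${key} = ${value}|" "$file"
  else
    # Key missing (or file absent): append it.
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}
```

Usage: run `ensure_sysctl net.ipv4.ip_nonlocal_bind 1 /etc/sysctl.conf` and `ensure_sysctl net.ipv4.ip_forward 1 /etc/sysctl.conf`, then `sysctl -p` as before.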

4. Start the service

[root@controller01 ~]# systemctl enable haproxy && systemctl restart haproxy && systemctl status haproxy
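Once haproxy is running, the stats listener from the config (port 1080, `stats uri /`, `stats auth admin:admin`) shows whether the backends pass their health checks. HAProxy returns the statistics as CSV when `;csv` is appended to the stats URI. The following sketch prints proxy, server and status for each server row; the function name `server_states` is hypothetical. It finds columns by the names in the CSV header line rather than by fixed positions.

```shell
#!/bin/sh
# Sketch: summarise server states from HAProxy's CSV statistics.
# Reads CSV on stdin; columns are located via the "# pxname,svname,..."
# header line, so their exact positions do not matter.
server_states() {
  awk -F, '
    NR == 1 {
      sub(/^# /, "")              # strip the leading "# " and re-split the header
      for (i = 1; i <= NF; i++) col[$i] = i
      next
    }
    # Skip blank lines and the aggregate FRONTEND/BACKEND rows.
    NF > 1 && $(col["svname"]) != "FRONTEND" && $(col["svname"]) != "BACKEND" {
      print $(col["pxname"]), $(col["svname"]), $(col["status"])
    }
  '
}
```

Usage: `curl -s -u admin:admin 'http://10.100.214.200:1080/;csv' | server_states`. A server listed as DOWN here is one that haproxy will not forward requests to.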

5. Configure the pcs resources

# Run this on any one controller node; controller01 is used as the example.

# Add the lb-haproxy-clone resource

[root@controller01 ~]# pcs resource create lb-haproxy systemd:haproxy --clone

[root@controller01 ~]# pcs resource

vip (ocf::heartbeat:IPaddr2): Started controller01

Clone Set: lb-haproxy-clone [lb-haproxy]

Started: [ controller01 controller02 controller03 ]

# Set the resource start order: vip first, then lb-haproxy-clone.

# The constraint configuration can be inspected with "cibadmin --query --scope constraints".

[root@controller01 ~]# pcs constraint order start vip then lb-haproxy-clone kind=Optional --force

Adding vip lb-haproxy-clone (kind: Optional) (Options: first-action=start then-action=start)
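The `cibadmin` query mentioned above can also feed a scripted check, for example in a post-deployment test. The sketch below assumes Pacemaker stores the constraint as an `rsc_order` element with `first` and `then` attributes. It also assumes `cibadmin` prints one element per line. The function name `has_order_constraint` is hypothetical.

```shell
#!/bin/sh
# Sketch: read the XML printed by `cibadmin --query --scope constraints` on
# stdin and succeed if it contains an order constraint "BEFORE then AFTER".
# Assumes each constraint element is printed on a single line.
has_order_constraint() {
  before=$1 after=$2
  grep '<rsc_order ' | grep -F "first=\"$before\"" | grep -qF "then=\"$after\""
}
```

Usage: `cibadmin --query --scope constraints | has_order_constraint vip lb-haproxy-clone && echo "order constraint present"`.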

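# The official guide recommends running the vip on a node where haproxy is active; colocating lb-haproxy-clone with vip constrains both resources to the same node.

# With this constraint in place, pcs stops haproxy (as a cluster resource) on the nodes that do not currently hold the vip.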

[root@controller01 ~]# pcs constraint colocation add lb-haproxy-clone with vip --force

[root@controller01 ~]# pcs resource

vip (ocf::heartbeat:IPaddr2): Started controller01

Clone Set: lb-haproxy-clone [lb-haproxy]

Started: [ controller01 ]

Stopped: [ controller02 controller03 ]
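The colocation result can also be checked from a script, for example after a failover test. The following is a minimal sketch; the function name `check_vip_haproxy` is hypothetical. It parses `pcs resource` output in the format shown above and also tolerates a leading `* ` bullet. Other pcs versions may format the list differently, so treat it as a starting point.

```shell
#!/bin/sh
# Sketch: read `pcs resource` output on stdin and check that an instance
# of lb-haproxy-clone is started on the node that currently holds vip.
# The parsing follows the output format shown above; other pcs versions
# may format the list differently.
check_vip_haproxy() {
  awk '
    { sub(/^[ \t]*\*[ \t]+/, "") }      # drop a leading "* " bullet, if any
    $1 == "vip" && $3 == "Started" { vipnode = $4 }
    /Clone Set: lb-haproxy-clone/ { inclone = 1; next }
    inclone && $1 == "Started:" {
      # "Started: [ controller01 ]" -> remember every listed node
      for (i = 2; i <= NF; i++) if ($i != "[" && $i != "]") started[$i] = 1
      inclone = 0
    }
    END {
      if (vipnode == "" || !(vipnode in started)) {
        print "haproxy is not started on the vip node"
        exit 1
      }
      print "ok: vip and haproxy both on " vipnode
    }
  '
}
```

Usage: `pcs resource | check_vip_haproxy`. A non-zero exit status means the vip and haproxy have ended up on different nodes.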