Prometheus Learning Series (6): Prometheus relabel Configuration

relabel_config

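Relabeling is a powerful tool for rewriting a target's label set before the target is scraped. Each scrape configuration can define multiple relabeling steps, which are applied to every target's label set in the order they appear in the configuration.

Once target relabeling has finished, labels whose names begin with __ are removed from the label set.

If a relabeling step only needs to hold a label value temporarily, as input to a later step, give that label the __tmp prefix.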
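As a rough sketch of how a temporary label might be used, the two steps below first park a combined value in a label with the __tmp prefix and then copy it to a visible label. The label names __tmp_region_zone and region_zone are chosen only for illustration, and __region_id__ and __availability_zone__ are assumed to be set on the target by service discovery, as in the test files used later in this article.

```yaml
relabel_configs:
  # Step 1: join the two source labels with "-" and park the result in a
  # temporary label. action, regex and replacement are left at their
  # defaults (replace, "(.*)" and "$1").
  - source_labels: ["__region_id__", "__availability_zone__"]
    separator: "-"
    target_label: "__tmp_region_zone"
  # Step 2: a later step reads the temporary label and writes a visible one.
  - source_labels: ["__tmp_region_zone"]
    target_label: "region_zone"
```

Because __tmp_region_zone starts with __, it is dropped once relabeling is done, and only region_zone stays on the target.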

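relabel action types

- replace: matches regex against the concatenated source_labels values and, on a match, sets target_label to replacement, with capture-group references expanded.

- keep: scrapes only the targets whose source_labels values match regex; all other targets are not scraped.

- drop: does not scrape the targets whose source_labels values match regex; all other targets are scraped.

- hashmod: sets target_label to the hash of the concatenated source_labels values, modulo modulus. It is typically used to split targets across several Prometheus servers.

- labelmap: matches regex against all label names and copies the value of each matching label to the label name given by replacement, with capture-group references expanded.

- labeldrop: matches regex against all label names and removes every label that matches.

- labelkeep: matches regex against all label names and removes every label that does not match.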
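The two less obvious actions, hashmod and labelmap, are easier to follow with an example. The sketch below is an assumption about how they might be applied to the kind of targets tested in this article, not a configuration taken from it: the modulus of 2, the shard number 0, and the label name __tmp_shard are all made up for illustration.

```yaml
relabel_configs:
  # hashmod: hash the target address and store (hash mod 2) in a
  # temporary label, so every target lands in shard 0 or shard 1.
  - source_labels: ["__address__"]
    modulus: 2
    target_label: "__tmp_shard"
    action: hashmod
  # This server keeps only shard 0; a second server would use regex "1".
  - source_labels: ["__tmp_shard"]
    regex: "0"
    action: keep
  # labelmap: copy __hostname__ to hostname and __region_id__ to region_id.
  # The capture group is the part of the label name between the underscores,
  # and the default replacement "$1" becomes the new label name.
  - regex: "__(hostname|region_id)__"
    action: labelmap
```

The labelmap regex is deliberately narrow. A broader pattern such as "__(.+)__" would also match built-in labels like __address__ and copy them to visible labels.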

Testing the common actions

Before testing, here are the configuration files used as the starting point.

    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        static_configs:
          - targets: ['localhost:9090']
      - job_name: "node"
        file_sd_configs:
          - refresh_interval: 1m
            files:
              - "/usr/local/prometheus/prometheus/conf/node*.yml"

    [root@node00 prometheus]# cat conf/node-dis.yml
    - targets:
        - "192.168.100.10:20001"
      labels:
        __hostname__: node00
        __businees_line__: "line_a"
        __region_id__: "cn-beijing"
        __availability_zone__: "a"
    - targets:
        - "192.168.100.11:20001"
      labels:
        __hostname__: node01
        __businees_line__: "line_a"
        __region_id__: "cn-beijing"
        __availability_zone__: "a"
    - targets:
        - "192.168.100.12:20001"
      labels:
        __hostname__: node02
        __businees_line__: "line_c"
        __region_id__: "cn-beijing"
        __availability_zone__: "b"

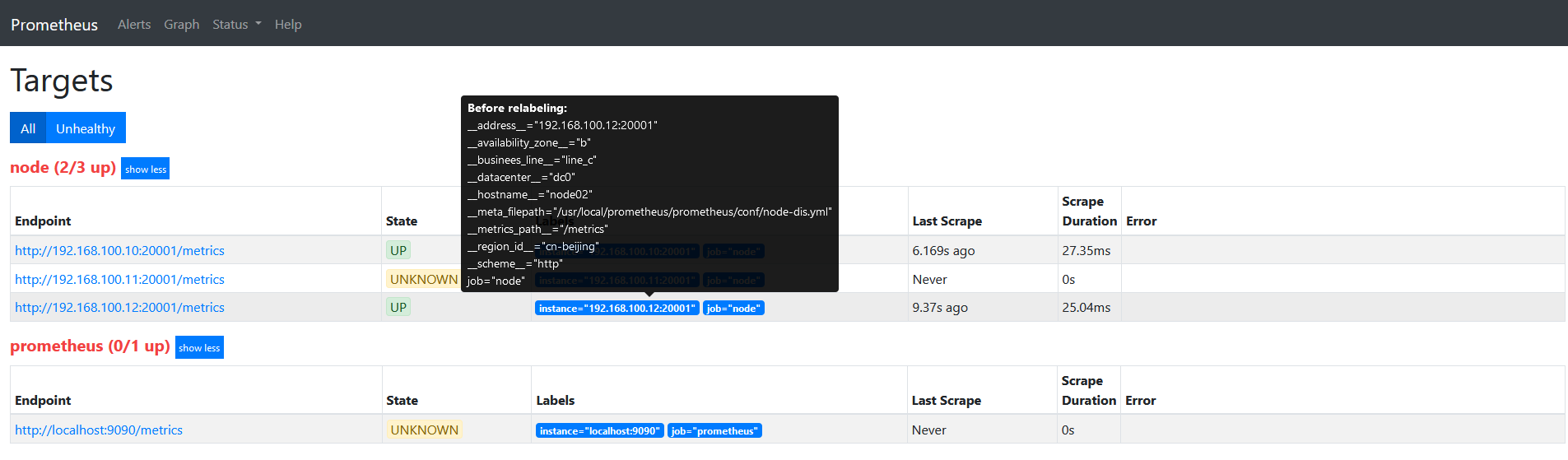

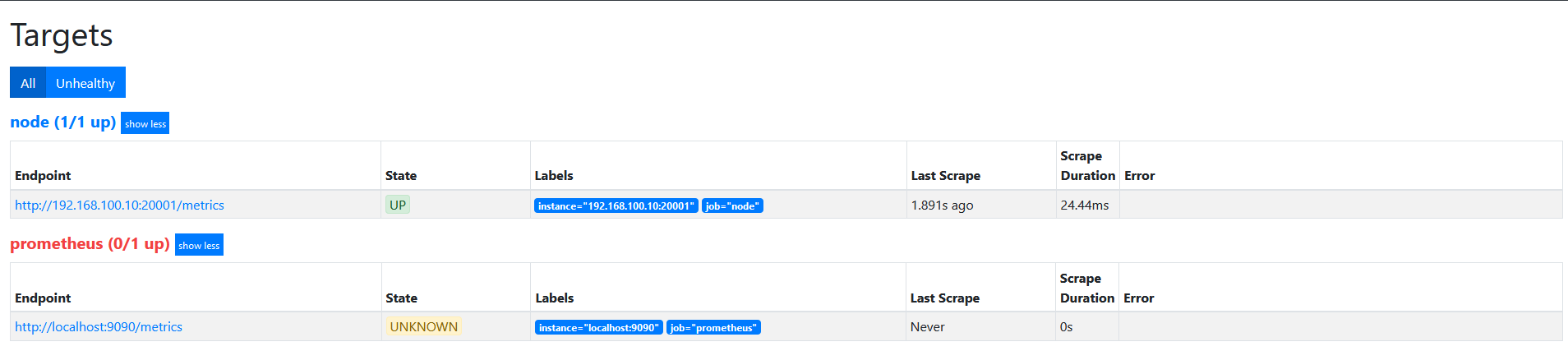

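If you check the target information at this point, it looks as shown in the figure.

All of our labels begin with __, and labels beginning with __ are removed from the label set once target relabeling has finished, so none of them show up on the targets.

A simple relabel setup

Copy the value of the __hostname__ label into a new label named nodename.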

    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        static_configs:
          - targets: ['localhost:9090']
      - job_name: "node"
        file_sd_configs:
          - refresh_interval: 1m
            files:
              - "/usr/local/prometheus/prometheus/conf/node*.yml"
        relabel_configs:
          - source_labels:
              - "__hostname__"
            regex: "(.*)"
            target_label: "nodename"
            action: replace
            replacement: "$1"

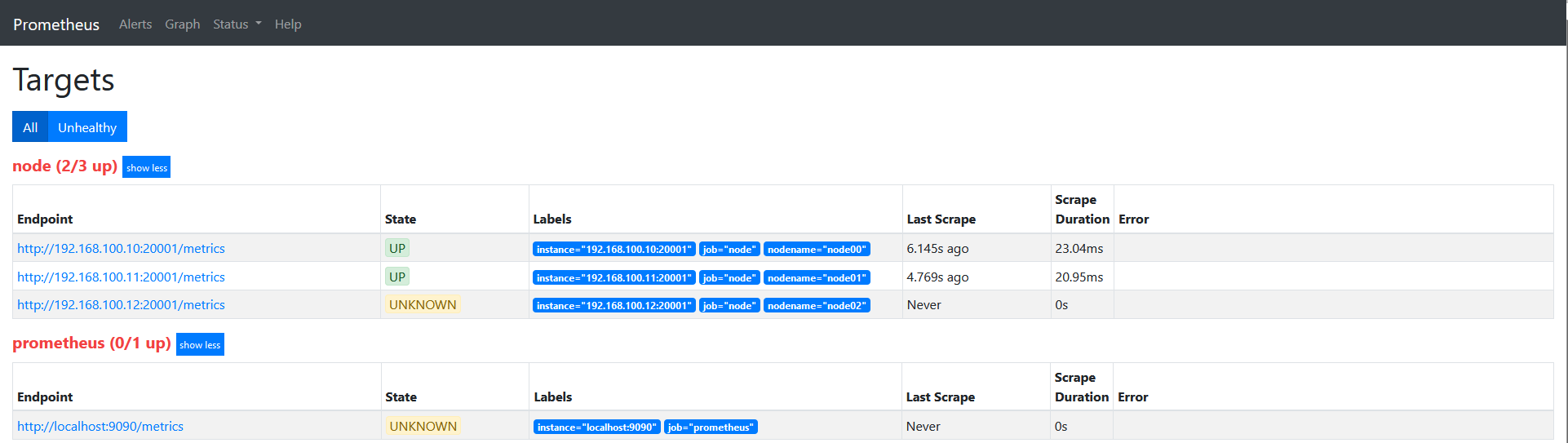

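Restart the service and check the target information; it looks as shown in the figure:

A few words on the configuration above: source_labels lists the source labels to process, target_label names the label that replace writes to, and action selects the relabel action, here replace. regex is matched against the value of the source label (__hostname__); "(.*)" matches whatever value __hostname__ holds. replacement gives the value assigned to target_label, obtained here through the capture-group reference $1.

If the regular expression above is changed to regex: "(node00)", the result is as shown in the figure: only the target whose __hostname__ is node00 receives the nodename label.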
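Since regex defaults to "(.*)", replacement defaults to "$1", and action defaults to replace, the same rule can also be written more compactly. A minimal equivalent, assuming those defaults are left untouched:

```yaml
relabel_configs:
  # Same effect as the explicit rule: copy the value of __hostname__
  # into nodename, relying on the default regex, replacement and action.
  - source_labels: ["__hostname__"]
    target_label: "nodename"
```

Spelling the fields out, as the article does, is more verbose but makes each step easier to read while learning.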
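For reference, the modified rule would look roughly like the sketch below. Relabel regexes are anchored at both ends, so "(node00)" matches only the exact value node00. When the regex does not match, replace leaves the target's labels unchanged.

```yaml
relabel_configs:
  - source_labels: ["__hostname__"]
    # Anchored match: only the exact value "node00" matches,
    # so node01 and node02 are left without a nodename label.
    regex: "(node00)"
    target_label: "nodename"
    action: replace
    replacement: "$1"
```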

keep

Modify the configuration file:

    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        static_configs:
          - targets: ['localhost:9090']
      - job_name: "node"
        file_sd_configs:
          - refresh_interval: 1m
            files:
              - "/usr/local/prometheus/prometheus/conf/node*.yml"

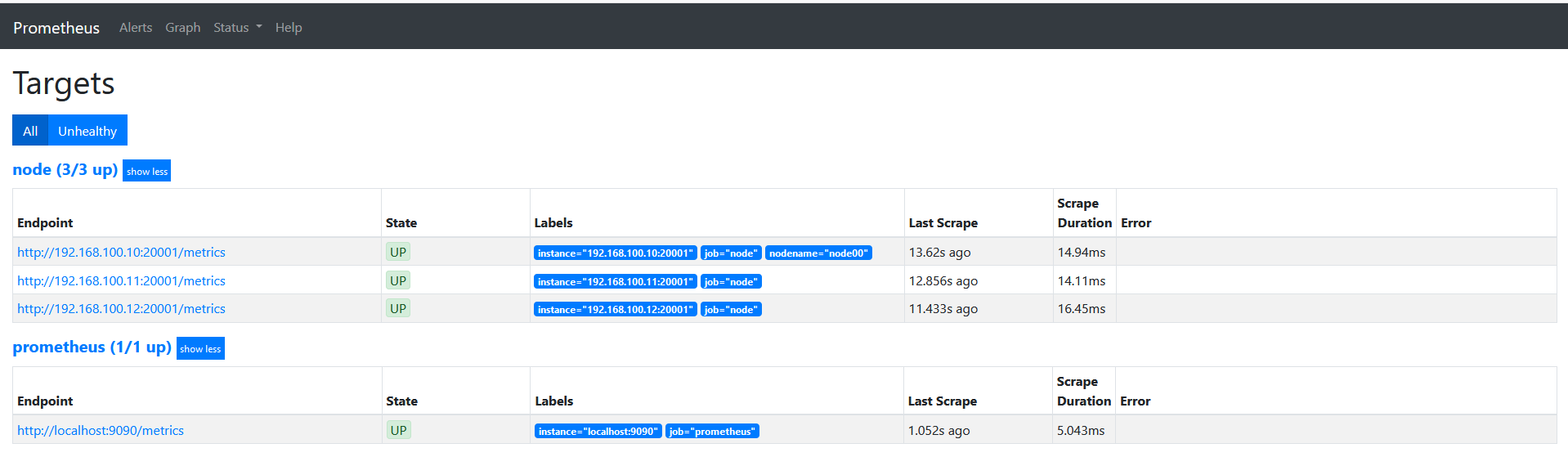

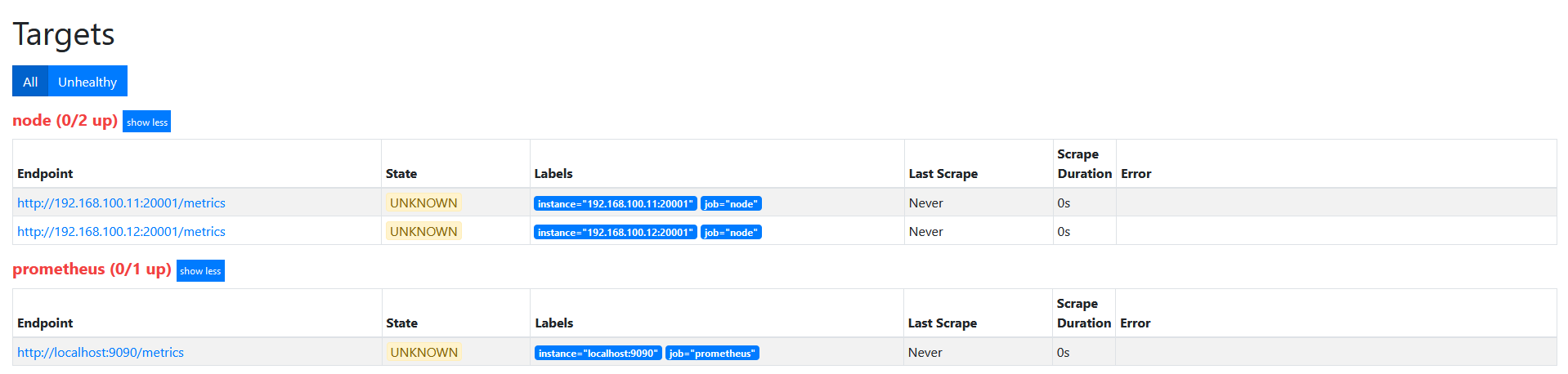

The targets look as shown in the figure.

Then modify the configuration file as follows:

    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        static_configs:
          - targets: ['localhost:9090']
      - job_name: "node"
        file_sd_configs:
          - refresh_interval: 1m
            files:
              - "/usr/local/prometheus/prometheus/conf/node*.yml"
        relabel_configs:
          - source_labels:
              - "__hostname__"
            regex: "node00"
            action: keep

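The targets now look as shown in the figure.

With action set to keep, only targets whose source_labels value matches regex (node00) are scraped. All other targets are not scraped.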
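keep can also test several labels at once, because the source_labels values are joined with the separator (";" by default) before the regex is applied. A small sketch, assuming the __businees_line__ and __availability_zone__ labels from the test file in this article; the chosen values are only an example:

```yaml
relabel_configs:
  # The joined value for node00 is "line_a;a". Only targets whose
  # business line is line_a and whose zone is a are kept.
  - source_labels: ["__businees_line__", "__availability_zone__"]
    separator: ";"
    regex: "line_a;a"
    action: keep
```

With the three test targets, this would keep node00 and node01 and stop scraping node02.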

drop

Starting from the configuration above, change action to drop.

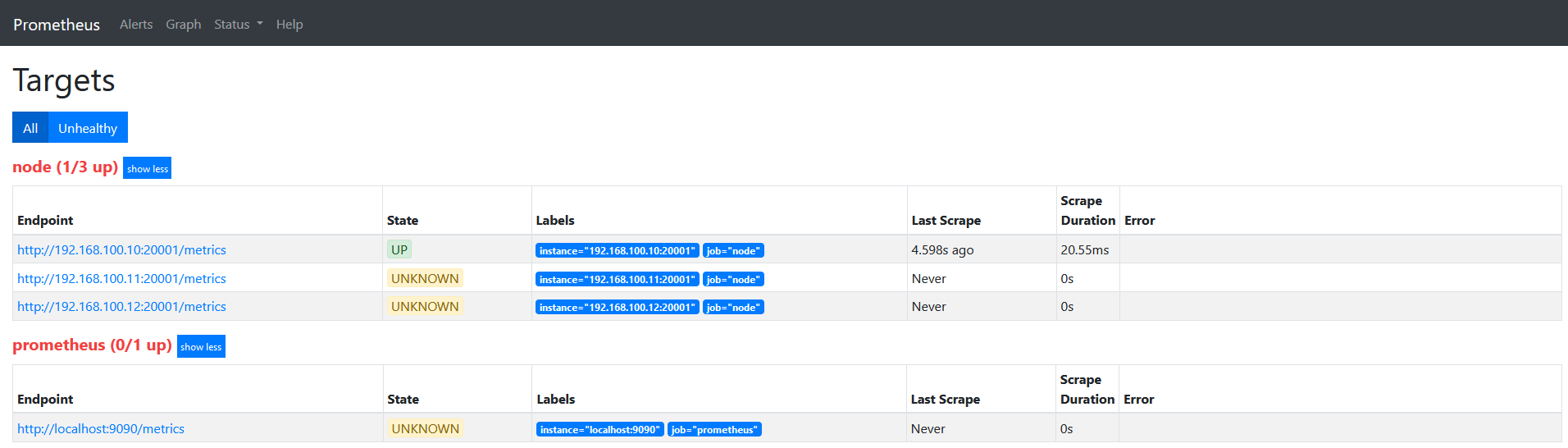

The targets look as shown in the figure.

The drop action works like keep in reverse: targets whose source_labels value matches regex (node00) are not scraped, and all other targets are.
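Because regex is an ordinary regular expression, a single drop rule can exclude several targets. The following sketch, again assuming the __hostname__ values from the test file, would drop node01 and node02 and leave node00:

```yaml
relabel_configs:
  # "node0[12]" matches node01 and node02; both are dropped.
  - source_labels: ["__hostname__"]
    regex: "node0[12]"
    action: drop
```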

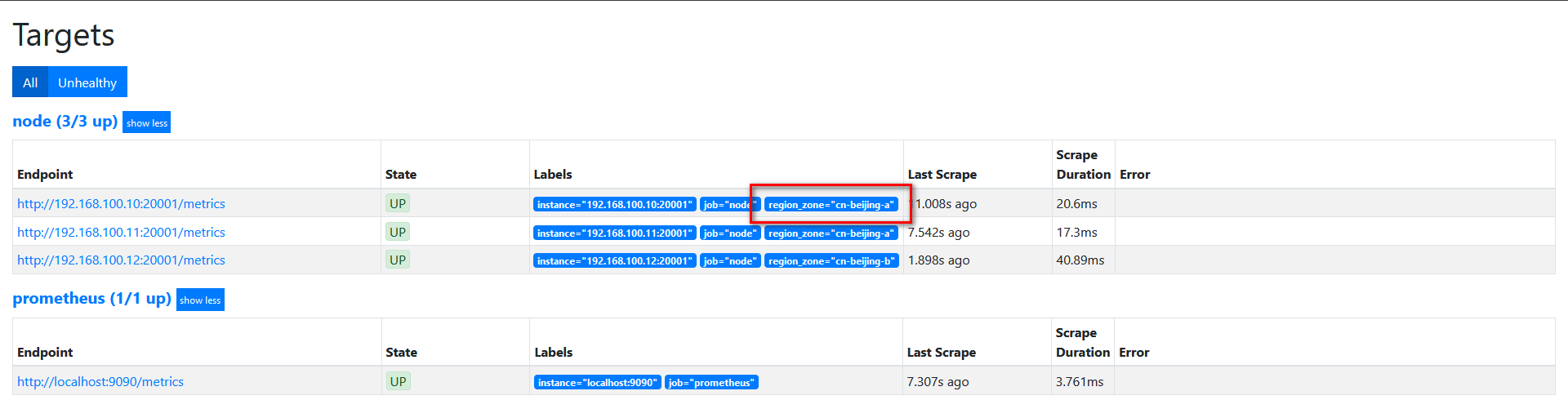

replace

Our base data contains __region_id__ and __availability_zone__, and I want to merge the two fields into one label. replace can do that.

Modify the configuration as follows:

    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        static_configs:
          - targets: ['localhost:9090']
      - job_name: "node"
        file_sd_configs:
          - refresh_interval: 1m
            files:
              - "/usr/local/prometheus/prometheus/conf/node*.yml"
        relabel_configs:
          - source_labels:
              - "__region_id__"
              - "__availability_zone__"
            separator: "-"
            regex: "(.*)"
            target_label: "region_zone"
            action: replace
            replacement: "$1"

The targets look as shown in the figure:
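replace becomes more useful when regex captures only part of the value. As an illustration that is not part of the original test, the sketch below splits the built-in __address__ label into host and port. The target label names host_ip and port are hypothetical.

```yaml
relabel_configs:
  # For the address "192.168.100.10:20001", $1 is the host part.
  - source_labels: ["__address__"]
    regex: '([^:]+):(\d+)'
    target_label: "host_ip"
    replacement: "$1"
  # $2 is the port part of the same address.
  - source_labels: ["__address__"]
    regex: '([^:]+):(\d+)'
    target_label: "port"
    replacement: "$2"
```

The regex is single-quoted so that YAML passes the backslash in \d through unchanged.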

labeldrop

The configuration file is as follows:

    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        static_configs:
          - targets: ['localhost:9090']
      - job_name: "node"
        file_sd_configs:
          - refresh_interval: 1m
            files:
              - "/usr/local/prometheus/prometheus/conf/node*.yml"
        relabel_configs:
          - source_labels:
              - "__hostname__"
            regex: "(.*)"
            target_label: "nodename"
            action: replace
            replacement: "$1"
          - source_labels:
              - "__businees_line__"
            regex: "(.*)"
            target_label: "businees_line"
            action: replace
            replacement: "$1"
          - source_labels:
              - "__datacenter__"
            regex: "(.*)"
            target_label: "datacenter"
            action: replace
            replacement: "$1"

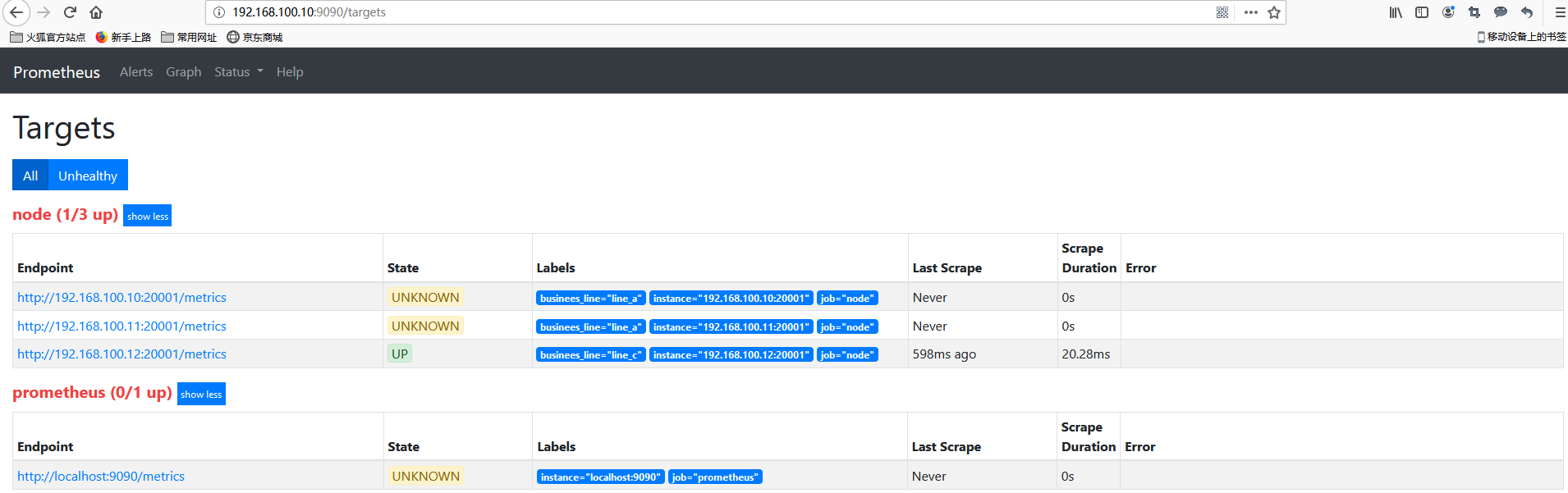

The targets look as shown in the figure.

Then modify the configuration file as follows:

    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        static_configs:
          - targets: ['localhost:9090']
      - job_name: "node"
        file_sd_configs:
          - refresh_interval: 1m
            files:
              - "/usr/local/prometheus/prometheus/conf/node*.yml"
        relabel_configs:
          - source_labels:
              - "__hostname__"
            regex: "(.*)"
            target_label: "nodename"
            action: replace
            replacement: "$1"
          - source_labels:
              - "__businees_line__"
            regex: "(.*)"
            target_label: "businees_line"
            action: replace
            replacement: "$1"
          - source_labels:
              - "__datacenter__"
            regex: "(.*)"
            target_label: "datacenter"
            action: replace
            replacement: "$1"
          - regex: "(nodename|datacenter)"
            action: labeldrop

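The labeldrop rule removes every label whose name matches (nodename|datacenter), so the targets look as shown in the figure.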
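labelkeep is the inverse of labeldrop: it removes every label whose name does not match. In relabel_configs that includes internal labels such as __address__, which Prometheus still needs after relabeling, so the regex has to let them through. A cautious sketch, assuming the labels produced by the replace rules in the configuration above:

```yaml
relabel_configs:
  # Keep all internal labels (names starting with __), plus job and
  # businees_line. Every other label, such as nodename, is removed.
  - regex: "__.*|job|businees_line"
    action: labelkeep
```

For the test targets this should leave the same visible labels as the labeldrop rule, but it is stricter: any label added later is removed unless the regex is extended to cover it.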

posted on 2019-09-26 17:51 LinuxPanda
