k8s 1.3: Cluster Environment Test Configuration

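1. Deploy a Kubernetes cluster with 3 master hosts and 2 worker nodes

Each master node needs at least 2 CPU cores and 4 GB of memory; each worker node needs at least 1 core and 2 GB of memory.

Lab environment:

192.168.213.3 master1
192.168.213.4 master2
192.168.213.7 master3
192.168.213.5 work1
192.168.213.6 work2

2. Configure hosts resolution on all five machines, and set up passwordless SSH login from the master node 192.168.213.3 to the other machines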

[root@master1 ~]# ssh-keygen
[root@master1 ~]# for i in 4 5 6 7  # loop over the other four hosts
> do
> ssh-copy-id 192.168.213.$i
> done
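Step 2 mentions hosts resolution without showing the entries. The following is a minimal sketch, assuming the host names and addresses from the lab environment list above; the helper name `print_cluster_hosts` is illustrative only.

```shell
#!/bin/bash
# Print /etc/hosts entries for the five lab machines listed in step 1.
# print_cluster_hosts is an illustrative helper name, not part of any tool.
print_cluster_hosts() {
    cat <<'EOF'
192.168.213.3 master1
192.168.213.4 master2
192.168.213.7 master3
192.168.213.5 work1
192.168.213.6 work2
EOF
}

# Preview the entries. To apply them, run this on each machine as root:
#   print_cluster_hosts >> /etc/hosts
print_cluster_hosts
```

Printing to standard output first makes it easy to review the entries before appending them to /etc/hosts.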

3. Upgrade the operating system kernel on all hosts, because an old kernel version causes problems

Import the ELRepo GPG key

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

4. Install the ELRepo repository. It is also available from the Alibaba Cloud mirror, which has a tutorial on its ELRepo mirror page (aliyun.com)

[root@master1 ~]# yum install -y https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

5. Install the kernel-ml package. ml is the mainline (latest stable) kernel; lt is the long-term support kernel

yum --enablerepo="elrepo-kernel" -y install kernel-ml.x86_64

[root@master1 ~]# grub2-set-default 0  # set the default grub2 boot entry to 0
[root@master1 ~]# grub2-mkconfig -o /boot/grub2/grub.cfg  # regenerate the grub2 boot configuration
Generating grub configuration file ...
Found linux image: /boot/vmlinuz-5.18.12-1.el7.elrepo.x86_64
Found initrd image: /boot/initramfs-5.18.12-1.el7.elrepo.x86_64.img
Found linux image: /boot/vmlinuz-3.10.0-1160.el7.x86_64
Found initrd image: /boot/initramfs-3.10.0-1160.el7.x86_64.img
Found linux image: /boot/vmlinuz-0-rescue-aaad051bb3b24087b8a673a0a9e140d1
Found initrd image: /boot/initramfs-0-rescue-aaad051bb3b24087b8a673a0a9e140d1.img
done

5. Add the configuration file for bridge network filtering and kernel IP forwarding

cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF

6. Load the br_netfilter module

[root@master1 ~]# modprobe br_netfilter

Check that it is loaded

[root@master1 ~]# lsmod | grep br_netfilter
br_netfilter 22256 0
bridge 151336 1 br_netfilter

7. Reload the bridge filtering and kernel forwarding configuration file (the file created in step 5)

[root@master1 ~]# sysctl -p /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
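Before reloading, it can help to confirm that the file really declares the expected values. The following is a minimal sketch that only parses the file and does not touch the running kernel; the helper name `conf_has_setting` is an assumption for illustration.

```shell
#!/bin/bash
# Return 0 if the given sysctl-style file sets key to the expected value.
# conf_has_setting is an illustrative helper, not a standard command.
conf_has_setting() {
    local file=$1 key=$2 expected=$3
    # Split each line on "=", trim whitespace around key and value, then compare.
    awk -F= -v k="$key" -v v="$expected" '
        { gsub(/^[ \t]+|[ \t]+$/, "", $1); gsub(/^[ \t]+|[ \t]+$/, "", $2) }
        $1 == k && $2 == v { found = 1 }
        END { exit(found ? 0 : 1) }
    ' "$file"
}

# Example, using the path from step 5:
#   conf_has_setting /etc/sysctl.d/k8s.conf net.ipv4.ip_forward 1 && echo ok
```

The same check works for any of the four keys written in step 5.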

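8. Configure ipvs: install ipset and ipvsadm

[root@master2 ~]# yum install -y ipset ipvsadm

Add the modules that need to be loaded. Note that from kernel 4.19 on, nf_conntrack_ipv4 is merged into nf_conntrack, so on the upgraded 5.18 kernel the last line should load nf_conntrack instead.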

[root@master2 ~]# cat /etc/sysconfig/modules/ipvs.modules

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

Make the script executable, run it, and check that the modules are loaded

[root@master2 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules

[root@master2 ~]# cd /etc/sysconfig/modules

[root@master2 modules]# ls

ipvs.modules

[root@master2 modules]# ./ipvs.modules

[root@master2 modules]# lsmod | grep -e ip_vs -e nf_conntrack  # check whether ip_vs is loaded

nf_conntrack_netlink 36396 0
nfnetlink 14519 2 nf_conntrack_netlink
nf_conntrack_ipv4 15053 3
nf_defrag_ipv4 12729 1 nf_conntrack_ipv4
ip_vs_sh 12688 0
ip_vs_wrr 12697 0
ip_vs_rr 12600 0
ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 139264 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4
libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack
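The conntrack module name depends on the kernel: upstream kernels from 4.19 on ship only nf_conntrack, while the stock 3.10 kernel still has nf_conntrack_ipv4. The following sketch picks the name from a kernel release string. The helper name `conntrack_module_for` is an assumption, and distribution kernels with backports may not follow the upstream version boundary.

```shell
#!/bin/bash
# Print the conntrack module name to load for a given kernel release string.
# Assumes upstream behaviour: nf_conntrack_ipv4 exists only before kernel 4.19.
# conntrack_module_for is an illustrative helper name.
conntrack_module_for() {
    local release=$1 major minor
    major=${release%%.*}    # "5.18.12-1.el7..." -> "5"
    minor=${release#*.}     # -> "18.12-1.el7..."
    minor=${minor%%.*}      # -> "18"
    if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
}

# Example (as root): modprobe -- "$(conntrack_module_for "$(uname -r)")"
```

Using this in ipvs.modules instead of a hard-coded name keeps the script working both before and after the kernel upgrade.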

9. Disable the swap partition

Temporarily: swapoff -a

Permanently: open /etc/fstab with vim and comment out the swap line
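The permanent change can also be scripted instead of edited by hand. The following is a minimal sketch, assuming a standard fstab layout where the swap entry has `swap` as a whitespace-separated field; the helper name `comment_out_swap` is illustrative.

```shell
#!/bin/bash
# Comment out every active swap entry in an fstab-style file.
# A backup copy is kept next to the file as <file>.bak.
# comment_out_swap is an illustrative helper name.
comment_out_swap() {
    local fstab=$1
    # Match uncommented lines that contain "swap" as a separate field,
    # and prefix them with "#".
    sed -E -i.bak '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Example (as root): comment_out_swap /etc/fstab && swapoff -a
```

Lines that are already commented out are left untouched, so running the function twice does no harm.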

10. Reboot so that the settings take effect: reboot

11. Verify that the kernel has changed with uname -r

[root@master1 ~]# uname -r
5.18.12-1.el7.elrepo.x86_64

12. Disable SELinux
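The original does not show the commands for this step. One common approach, sketched here under the assumption that `disabled` is the intended mode, is to switch to permissive mode for the current boot and edit the SELINUX= line in the config file. The helper name `set_selinux_mode` is illustrative.

```shell
#!/bin/bash
# Set the SELINUX= line in an SELinux config file to the given mode.
# set_selinux_mode is an illustrative helper; "disabled" is an assumed default.
set_selinux_mode() {
    local config=$1 mode=${2:-disabled}
    # Only the SELINUX= line is rewritten; SELINUXTYPE= is left alone.
    sed -E -i.bak "s/^SELINUX=.*/SELINUX=${mode}/" "$config"
}

# Example (as root):
#   setenforce 0                                    # permissive until the next boot
#   set_selinux_mode /etc/selinux/config disabled   # takes effect after a reboot
```

The config file change only applies after the next reboot, which is why `setenforce 0` is usually run alongside it.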

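13. Add the Docker repository on all hosts (available from the Alibaba Cloud mirror) and install Docker

14. Configure Docker to use the systemd cgroup driver

cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

15. Restart Docker and enable it at boot

16. Install keepalived and haproxy. haproxy does the load balancing; it suits very high-traffic web sites and is often considered a stronger load balancer than nginx. keepalived guards against a single point of failure and keeps the cluster highly available.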

yum install -y keepalived haproxy

Look at the configuration files of both services; both are under /etc

1.(1) First, haproxy

[root@master1 ~]# vim /etc/haproxy/haproxy.cfg

global
    maxconn 2000
    ulimit-n 16384
    log 127.0.0.1 local0 err
    stats timeout 30s

defaults
    log global
    mode http
    option httplog
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    timeout http-request 15s
    timeout http-keep-alive 15s

frontend monitor-in
    bind *:33305            # port for monitoring HAProxy status
    mode http               # protocol
    option httplog
    monitor-uri /monitor    # monitoring path

frontend k8s-master
    bind 0.0.0.0:16443
    bind 127.0.0.1:16443
    mode tcp
    option tcplog
    tcp-request inspect-delay 5s
    default_backend k8s-master

backend k8s-master          # backend server group
    mode tcp
    option tcplog
    option tcp-check
    balance roundrobin
    default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
    server master1 192.168.213.3:6443 check    # change the IP to match your environment
    server master2 192.168.213.4:6443 check    # change the IP to match your environment
    server master3 192.168.213.7:6443 check    # change the IP to match your environment

(2) Start haproxy and enable it at boot

[root@master1 ~]# systemctl start haproxy && systemctl enable haproxy

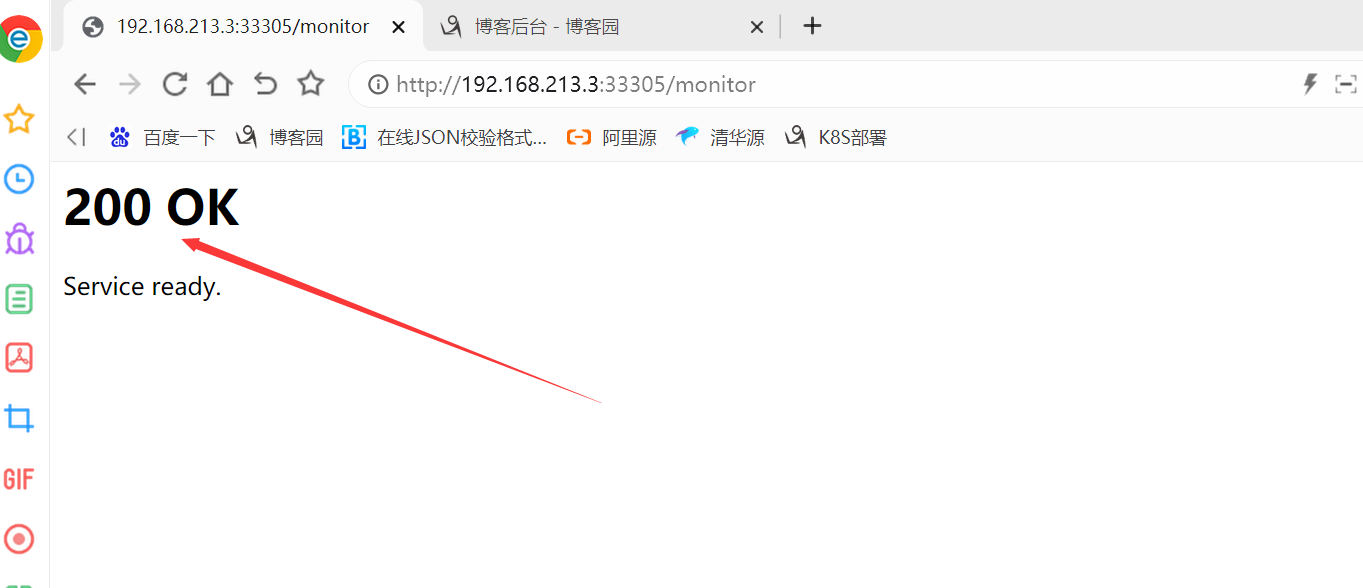

(3) Open http://<ip>:33305/monitor in a browser (the ip can be that of any host running haproxy)

2. Configure keepalived

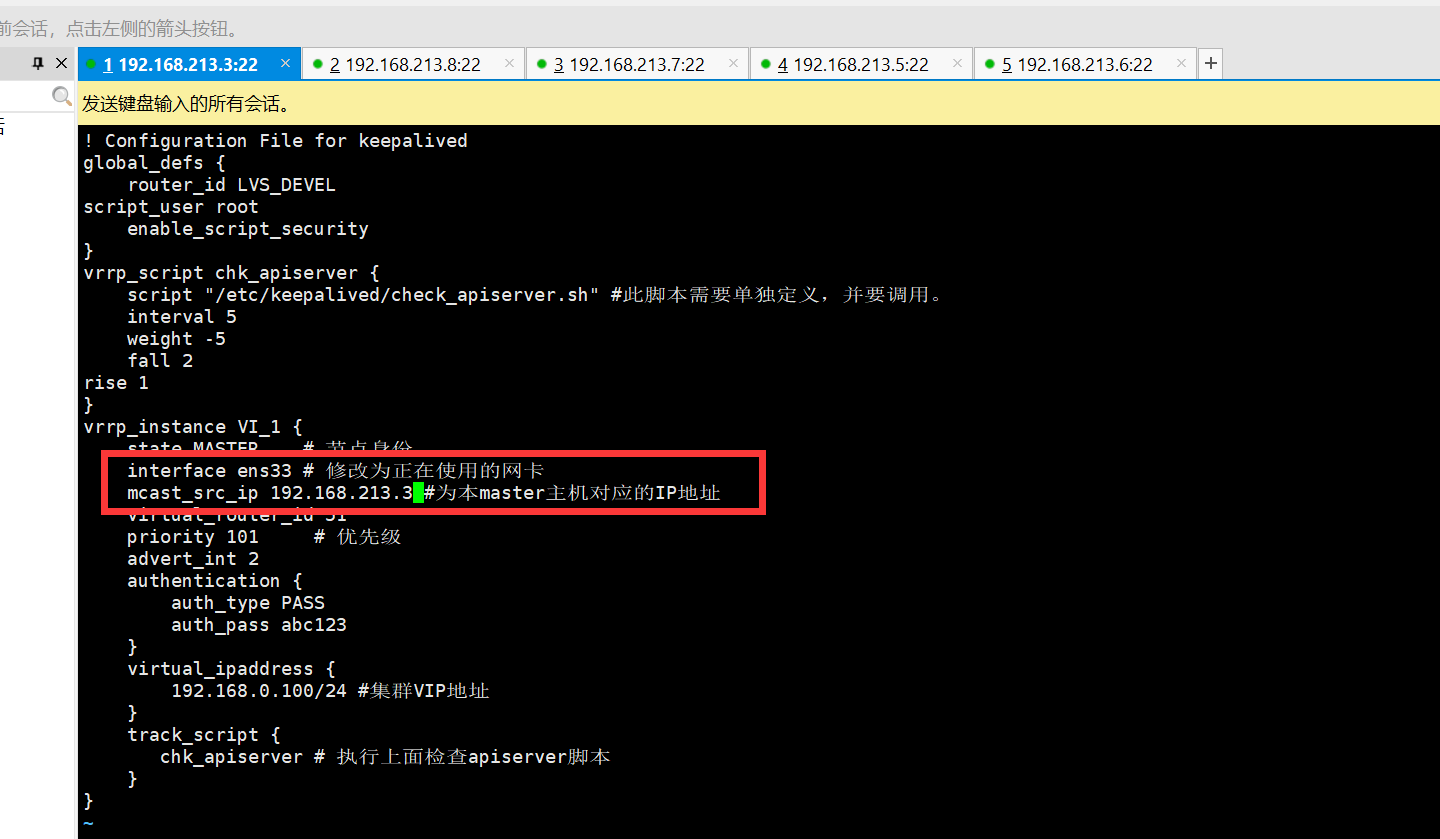

(1) [root@master1 ~]# vim /etc/keepalived/keepalived.conf

Set the network interface name and the local IP address. On the primary node, change the IP address and the interface name.

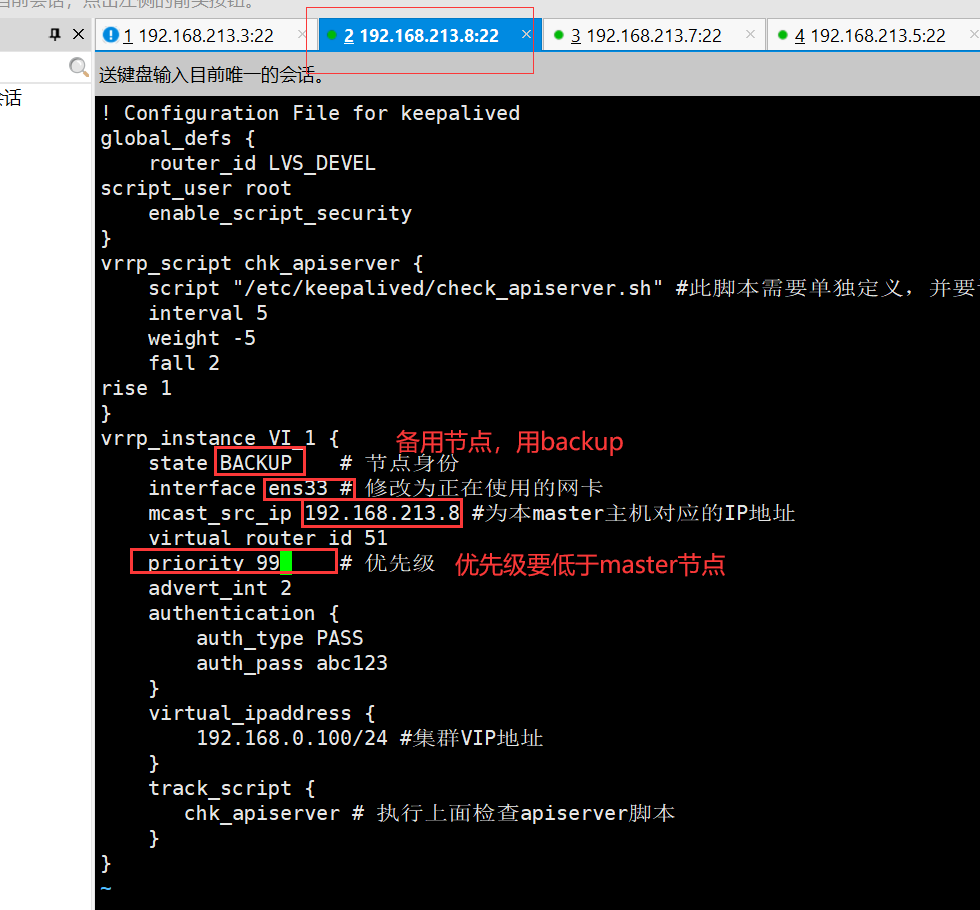

On the second master (the backup node), change the node state, the interface name, the IP address, and the priority
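The keepalived configuration itself appears only in the screenshots, so the following is a hedged sketch of what a per-node file might look like, not the original file. The helper name `render_keepalived_conf`, the instance name `VI_1`, the script name `chk_apiserver`, the router id 51, and the interval, fall and rise values are all assumptions; the script path comes from step (2), and the interface and VIP are the ones visible in the output of step 17.

```shell
#!/bin/bash
# Print a keepalived.conf sketch for one node.
# Arguments: state (MASTER or BACKUP), interface name, priority, virtual IP.
# render_keepalived_conf, VI_1, chk_apiserver, router id 51 and the
# interval/fall/rise values are illustrative assumptions.
render_keepalived_conf() {
    local state=$1 iface=$2 priority=$3 vip=$4
    cat <<EOF
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    fall 2
    rise 1
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        chk_apiserver
    }
}
EOF
}

# Primary node, with assumed priority 100:
render_keepalived_conf MASTER ens33 100 192.168.0.100
# A backup node would differ only in state and priority, for example:
#   render_keepalived_conf BACKUP ens33 90 192.168.0.100
```

Generating the file from four parameters makes the per-node differences explicit: only the state and priority change between the primary and the backups, while virtual_router_id and the VIP must be identical on every node.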

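(2) Create the apiserver check script. Its path must match the script path configured in keepalived.

[root@master1 ~]# cat /etc/keepalived/check_apiserver.sh
#!/bin/bash
# Check up to three times, one second apart, whether haproxy is running.
err=0
for k in $(seq 1 3)
do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$(expr $err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done

# If haproxy was never found, stop keepalived so the VIP moves to another node.
if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi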

(3) Make the script executable

[root@master2 ~]# chmod u+x /etc/keepalived/check_apiserver.sh

(4) Start keepalived and enable it at boot

[root@master1 ~]# systemctl start keepalived && systemctl enable keepalived

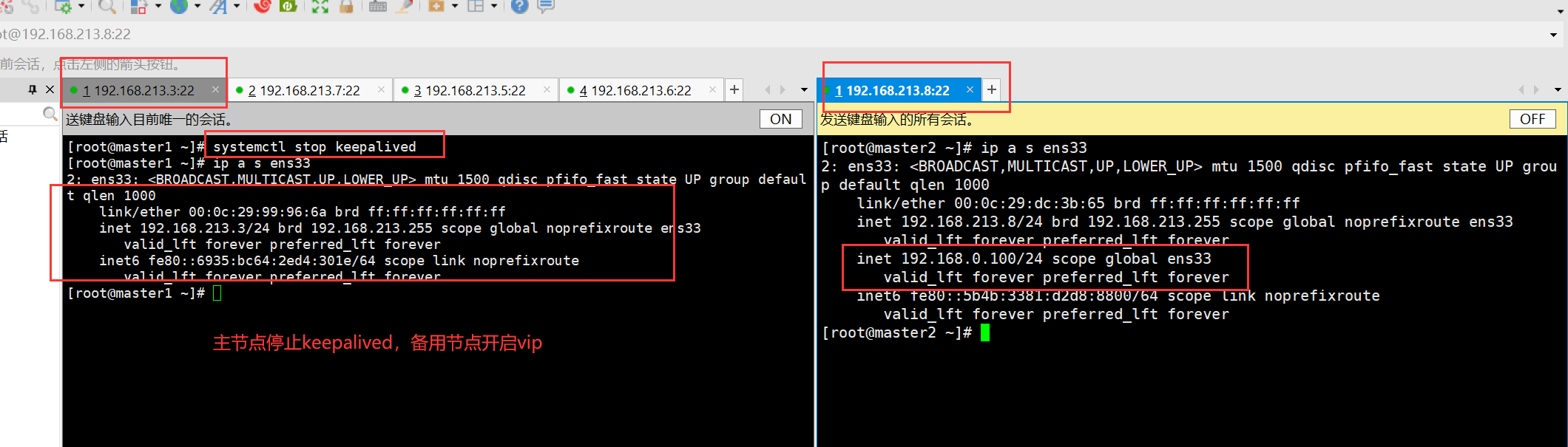

17. Verify the high availability of the cluster

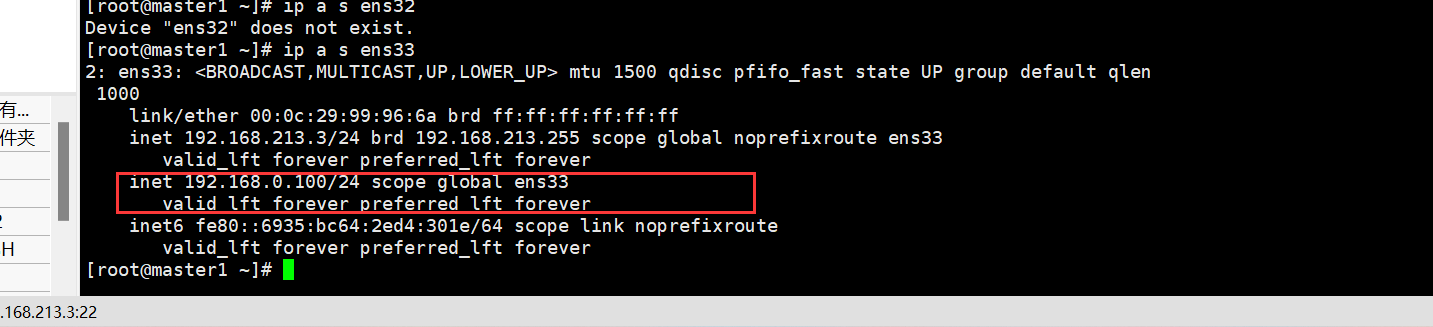

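Check whether the VIP is up. When keepalived starts, only the primary master holds the VIP; the backup nodes do not. When the keepalived service on the primary node stops, a backup node takes over the VIP automatically.

In the output below, 192.168.213.3 (master1) holds the VIP address 192.168.0.100, and master2 does not.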

[root@master1 ~]# ip a s ens33
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:99:96:6a brd ff:ff:ff:ff:ff:ff
    inet 192.168.213.3/24 brd 192.168.213.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet 192.168.0.100/24 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::6935:bc64:2ed4:301e/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

[root@master2 ~]# ip a s ens33
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:dc:3b:65 brd ff:ff:ff:ff:ff:ff
    inet 192.168.213.8/24 brd 192.168.213.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::5b4b:3381:d2d8:8800/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
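Reading the address list by eye gets tedious when repeating the failover test. The following is a minimal sketch of a check that reads `ip a s` output on standard input; the helper name `has_address` is an assumption for illustration.

```shell
#!/bin/bash
# Read `ip a s` output on stdin; succeed if the given IPv4 address is assigned.
# has_address is an illustrative helper name.
has_address() {
    local addr=$1
    # Match "inet <addr>/" as a fixed string so dots are not treated as wildcards
    # and 192.168.0.10 does not match 192.168.0.100.
    grep -qF "inet ${addr}/"
}

# Example: ip a s ens33 | has_address 192.168.0.100 && echo "VIP is on this node"
```

To test failover, stop keepalived on master1 and run the example on a backup node; it should start reporting the VIP there.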

18. Check the haproxy ports

[root@master1 ~]# ss -anptul|grep haproxy
udp UNCONN 0 0 *:51430 *:* users:(("haproxy",pid=27990,fd=1))
udp UNCONN 0 0 *:43310 *:* users:(("haproxy",pid=28744,fd=1))
udp UNCONN 0 0 *:56083 *:* users:(("haproxy",pid=32594,fd=1))
tcp LISTEN 0 2000 *:33305 *:* users:(("haproxy",pid=32594,fd=5))
tcp LISTEN 0 2000 *:33305 *:* users:(("haproxy",pid=28744,fd=5))
tcp LISTEN 0 2000 *:33305 *:* users:(("haproxy",pid=27990,fd=5))
tcp LISTEN 0 2000 127.0.0.1:16443 *:* users:(("haproxy",pid=32594,fd=7))
tcp LISTEN 0 2000 *:16443 *:* users:(("haproxy",pid=32594,fd=6))
tcp LISTEN 0 2000 127.0.0.1:16443 *:* users:(("haproxy",pid=28744,fd=7))
tcp LISTEN 0 2000 *:16443 *:* users:(("haproxy",pid=28744,fd=6))
tcp LISTEN 0 2000 127.0.0.1:16443 *:* users:(("haproxy",pid=27990,fd=7))
tcp LISTEN 0 2000 *:16443 *:* users:(("haproxy",pid=27990,fd=6
