Big Data: Flink Installation and Deployment


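1 Local Mode

Flink supports several deployment modes:

- Local: single-machine local mode, used for learning and testing

- Standalone: standalone cluster mode using Flink's built-in cluster, used in development and test environments

- StandaloneHA: high-availability standalone cluster mode, also Flink's built-in cluster, used in development and test environments

- On Yarn: compute resources are managed centrally by Hadoop YARN; used in production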

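1.1 How It Works

- A Flink program is submitted through the JobClient.

- The JobClient submits the job to the JobManager.

- The JobManager coordinates resource allocation and job execution. Once resources are allocated, the tasks are submitted to the corresponding TaskManagers.

- Each TaskManager starts threads to execute its tasks and reports state changes to the JobManager, such as started, in progress, or finished.

- When the job finishes, the result is sent back to the client (JobClient).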

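1.2 Steps

1. Download the installation package

https://archive.apache.org/dist/flink/

2. Upload flink-1.12.0-bin-scala_2.12.tgz to the installation directory on node1 (/export/server, as used by the commands in this guide)

3. Extract it in that directory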

tar -zxvf flink-1.12.0-bin-scala_2.12.tgz

4. If you run into permission problems, change the owner:

chown -R root:root /export/server/flink-1.12.0

5. Rename the directory or create a symbolic link (do one of the two):

mv flink-1.12.0 flink

or

ln -s /export/server/flink-1.12.0 /export/server/flink
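Steps 1 to 5 can also be scripted. A minimal sketch, assuming the release is fetched with wget from the archive URL above and unpacked under /export/server; the helper names flink_archive_url and install_flink are hypothetical, and the URL layout is the one used by the 1.12.0 release on archive.apache.org:

```shell
#!/usr/bin/env bash
# Build the archive URL of a Flink binary release.
# Assumed layout: <base>/flink-<ver>/flink-<ver>-bin-scala_<scala>.tgz
flink_archive_url() {
  local version="$1" scala="${2:-2.12}"
  local base="https://archive.apache.org/dist/flink"
  printf '%s/flink-%s/flink-%s-bin-scala_%s.tgz\n' \
    "$base" "$version" "$version" "$scala"
}

# Download, extract and symlink a release (hypothetical helper; assumes wget
# is installed and the target directory exists). Defining it runs nothing.
install_flink() {
  local version="$1" target="${2:-/export/server}"
  local url tgz
  url="$(flink_archive_url "$version")"
  tgz="${url##*/}"
  # subshell keeps the caller's working directory unchanged;
  # ln -sfn replaces an existing link instead of creating one inside it
  ( cd "$target" && wget -q "$url" && tar -zxf "$tgz" \
      && ln -sfn "$target/flink-$version" "$target/flink" )
}
```

Usage: `install_flink 1.12.0` on node1. If you have already uploaded the tarball manually, skip the wget step and only extract and link.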

1.3 Testing

1. Prepare the file /root/words.txt

vim /root/words.txt

hello me you her

hello me you

hello me

hello

2. Start the local Flink "cluster"

/export/server/flink/bin/start-cluster.sh

3. jps should now show the following two processes:

- TaskManagerRunner

- StandaloneSessionClusterEntrypoint

4. Open the Flink Web UI

http://node1:8081/#/overview

In Flink, a slot can be thought of as a resource group: Flink runs a program in parallel by splitting tasks into subtasks and assigning those subtasks to slots.

5. Run the official example

/export/server/flink/bin/flink run /export/server/flink/examples/batch/WordCount.jar --input /root/words.txt --output /root/out
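To check the result in /root/out, the expected counts can be computed independently with standard Unix tools. A sketch, assuming words are separated only by spaces (as in words.txt); expected_word_counts is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Print "word count" pairs for a space-separated text file, sorted by word.
expected_word_counts() {
  local file="$1"
  # split on spaces (one word per line), drop empty lines, count each word,
  # then print "word count" sorted by word
  tr -s ' ' '\n' < "$file" \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ print $2, $1 }' \
    | LC_ALL=C sort
}
```

For the sample file, `expected_word_counts /root/words.txt` prints hello 4, her 1, me 3 and you 2, one pair per line. Compare these pairs with `cat /root/out`; the line order written by Flink may differ.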

6. Stop Flink

/export/server/flink/bin/stop-cluster.sh

Start the interactive Scala shell (note: none of the Scala 2.12 packages currently support the Scala Shell, so this needs a Scala 2.11 build)

/export/server/flink/bin/start-scala-shell.sh local

Run the following command:

benv.readTextFile("/root/words.txt").flatMap(_.split(" ")).map((_,1)).groupBy(0).sum(1).print()

Exit the shell:

:quit

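2 Standalone Cluster Mode

2.1 How It Works

- The client submits the job to the JobManager.

- The JobManager requests the resources the job needs and manages both the tasks and the resources.

- The JobManager distributes the tasks to the TaskManagers for execution.

- The TaskManagers periodically report their status to the JobManager.

2.2 Steps

1. Cluster plan: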

- Server node1 (Master + Slave): JobManager + TaskManager

- Server node2 (Slave): TaskManager

- Server node3 (Slave): TaskManager

2. Edit flink-conf.yaml

vim /export/server/flink/conf/flink-conf.yaml

jobmanager.rpc.address: node1

taskmanager.numberOfTaskSlots: 2

web.submit.enable: true

# History server

jobmanager.archive.fs.dir: hdfs://node1:8020/flink/completed-jobs/

historyserver.web.address: node1

historyserver.web.port: 8082

historyserver.archive.fs.dir: hdfs://node1:8020/flink/completed-jobs/
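Editing flink-conf.yaml by hand on several nodes is error-prone. A minimal sketch of an idempotent setter, assuming the simple `key: value` layout that flink-conf.yaml uses; set_conf_key is a hypothetical helper name, and values containing backslashes are not handled:

```shell
#!/usr/bin/env bash
# Set "key: value" in a flink-conf.yaml style file.
# - replaces the first uncommented "key:" line and drops later duplicates
# - appends "key: value" when the key is not set yet
# Commented-out lines ("#key: ...") are left untouched.
set_conf_key() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)" || return 1
  awk -v k="$key" -v v="$value" '
    index($0, k ":") == 1 { if (!done) print k ": " v; done = 1; next }
    { print }
    END { if (!done) print k ": " v }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # write back in place so the original file permissions are kept
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, `set_conf_key /export/server/flink/conf/flink-conf.yaml taskmanager.numberOfTaskSlots 2` sets one of the values above; running it again with the same arguments leaves the file unchanged.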

3. Edit masters

vim /export/server/flink/conf/masters

node1:8081

4. Edit workers (this file was called slaves in older Flink versions)

vim /export/server/flink/conf/workers

node1

node2

node3

5. Add the HADOOP_CONF_DIR environment variable

vim /etc/profile

export HADOOP_CONF_DIR=/export/server/hadoop/etc/hadoop

6. Distribute to the other nodes

scp -r /export/server/flink node2:/export/server/flink

scp -r /export/server/flink node3:/export/server/flink

scp /etc/profile node2:/etc/profile

scp /etc/profile node3:/etc/profile

Or, from /export/server (this loop copies only the flink directory):

for i in {2..3}; do scp -r flink node$i:$PWD; done

7. Reload the profile on every node

source /etc/profile
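The copy commands above can be wrapped in a small loop that sends any set of paths to the other nodes. A sketch, assuming passwordless SSH from node1 and the node names from the cluster plan; distribute, run and the NODES/DRY_RUN variables are hypothetical, and DRY_RUN=1 only prints the commands:

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Copy each given path into the same parent directory on every node in
# $NODES (default: node2 node3, as in the cluster plan).
distribute() {
  local nodes node path
  read -r -a nodes <<< "${NODES:-node2 node3}"
  for node in "${nodes[@]}"; do
    for path in "$@"; do
      run scp -r "$path" "$node:$(dirname "$path")/"
    done
  done
}
```

Preview with `DRY_RUN=1 distribute /export/server/flink /etc/profile`, then run it without DRY_RUN and source /etc/profile on each node as above.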

2.3 Testing

1. Start the cluster; on node1 run:

/export/server/flink/bin/start-cluster.sh

Or start the daemons individually:

/export/server/flink/bin/jobmanager.sh ((start|start-foreground) cluster)|stop|stop-all

/export/server/flink/bin/taskmanager.sh start|start-foreground|stop|stop-all

2. Start the history server

/export/server/flink/bin/historyserver.sh start

3. Open the Flink UI (port 8081) and the history server UI (port 8082), or check with jps

http://node1:8081/#/overview

http://node1:8082/#/overview

TaskManager page: shows how many TaskManagers the Flink cluster currently has, and the number of slots, memory, and CPU cores of each one.
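The total slot count shown in the UI should equal the number of hosts in conf/workers multiplied by taskmanager.numberOfTaskSlots. A sketch that derives this number from the config files, assuming the file layout used in this section; total_slots is a hypothetical helper, and a missing key falls back to Flink's default of 1 slot:

```shell
#!/usr/bin/env bash
# Expected total slots = (#hosts in conf/workers) * taskmanager.numberOfTaskSlots
total_slots() {
  local conf_dir="$1" workers slots
  # count lines that are neither empty nor comments
  workers="$(grep -cvE '^[[:space:]]*(#|$)' "$conf_dir/workers")"
  # last uncommented value of the key (empty if the key is absent)
  slots="$(awk -F': *' '$1 == "taskmanager.numberOfTaskSlots" { v = $2 } END { print v }' \
    "$conf_dir/flink-conf.yaml")"
  echo $(( workers * ${slots:-1} ))
}
```

With the plan above (three workers, two slots each), `total_slots /export/server/flink/conf` prints 6.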

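4. Run the official example (the input file must already be in HDFS, e.g. created with hdfs dfs -mkdir -p /wordcount/input and hdfs dfs -put /root/words.txt /wordcount/input/)

/export/server/flink/bin/flink run /export/server/flink/examples/batch/WordCount.jar --input hdfs://node1:8020/wordcount/input/words.txt --output hdfs://node1:8020/wordcount/output/result.txt --parallelism 2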

5. View the job history

http://node1:50070/explorer.html#/flink/completed-jobs

http://node1:8082/#/overview

6. Stop the Flink cluster

/export/server/flink/bin/stop-cluster.sh

3 Standalone-HA High-Availability Cluster Mode

3.1 How It Works

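The architecture above clearly shows that the JobManager is a single point of failure (SPOF). The JobManager is responsible for task scheduling and resource allocation, so if it fails unexpectedly, the cluster can no longer schedule or run jobs.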

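With ZooKeeper, a standalone Flink cluster can run several JobManagers at the same time: only one is active and the others are on standby. When the active JobManager is lost (for example, its machine goes down or the process crashes), ZooKeeper elects one of the standby JobManagers to take over the Flink cluster.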

3.2 Steps

1. Cluster plan

- Server node1 (Master + Slave): JobManager + TaskManager

- Server node2 (Master + Slave): JobManager + TaskManager

- Server node3 (Slave): TaskManager

2. Start ZooKeeper (check its status first and restart it if needed)

zkServer.sh status

zkServer.sh stop

zkServer.sh start

3. Start HDFS

/export/server/hadoop/sbin/start-dfs.sh

4. Stop the Flink cluster

/export/server/flink/bin/stop-cluster.sh

5. Edit flink-conf.yaml

vim /export/server/flink/conf/flink-conf.yaml

Add the following settings:

state.backend: filesystem

state.backend.fs.checkpointdir: hdfs://node1:8020/flink-checkpoints

high-availability: zookeeper

high-availability.storageDir: hdfs://node1:8020/flink/ha/

high-availability.zookeeper.quorum: node1:2181,node2:2181,node3:2181

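What the settings mean:

# Use the filesystem state backend (state snapshots are written to a file system); this does not by itself enable HA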

state.backend: filesystem

# Directory on HDFS where checkpoints (snapshots) are stored; newer Flink docs name this key state.checkpoints.dir

state.backend.fs.checkpointdir: hdfs://node1:8020/flink-checkpoints

# Use ZooKeeper for high availability

high-availability: zookeeper

# Store the JobManager metadata on HDFS

high-availability.storageDir: hdfs://node1:8020/flink/ha/

# ZooKeeper quorum addresses

high-availability.zookeeper.quorum: node1:2181,node2:2181,node3:2181
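Every JobManager node needs the same HA settings, so a quick check after syncing can catch a missed copy. A minimal sketch; check_ha_conf is a hypothetical helper that only tests whether each key is present and uncommented, not whether its value is valid:

```shell
#!/usr/bin/env bash
# Report HA keys that are missing (or commented out) in a flink-conf.yaml.
# Returns 0 when all keys are present, 1 otherwise.
check_ha_conf() {
  local file="$1" key missing=0
  for key in high-availability high-availability.storageDir \
             high-availability.zookeeper.quorum; do
    if ! grep -q "^${key}:" "$file"; then
      echo "missing: $key"
      missing=1
    fi
  done
  return "$missing"
}
```

Run `check_ha_conf /export/server/flink/conf/flink-conf.yaml` on each node; no output means all three keys are set.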

6. Edit masters

vim /export/server/flink/conf/masters

node1:8081

node2:8081

7. Sync the files to the other nodes

scp -r /export/server/flink/conf/flink-conf.yaml node2:/export/server/flink/conf/

scp -r /export/server/flink/conf/flink-conf.yaml node3:/export/server/flink/conf/

scp -r /export/server/flink/conf/masters node2:/export/server/flink/conf/

scp -r /export/server/flink/conf/masters node3:/export/server/flink/conf/

8. On node2, edit flink-conf.yaml

vim /export/server/flink/conf/flink-conf.yaml

jobmanager.rpc.address: node2

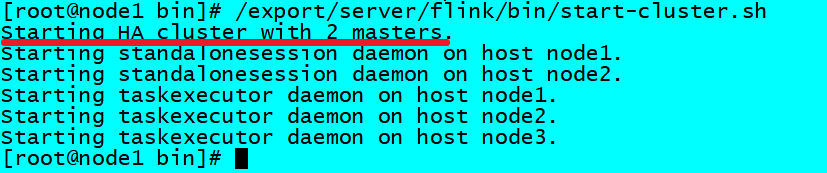

9. Restart the Flink cluster; on node1 run:

/export/server/flink/bin/stop-cluster.sh

/export/server/flink/bin/start-cluster.sh

10. Check with jps

No Flink processes have been started.

11. Check the log

cat /export/server/flink/log/flink-root-standalonesession-0-node1.log

The log reports an error. The cause: since Flink 1.8, the official binary packages no longer bundle the Hadoop (HDFS) jars.

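12. Download the pre-bundled (shaded) Hadoop jar, put it into Flink's lib directory, and distribute it to every node so that Flink can work with Hadoop

Download page:

https://flink.apache.org/downloads.html

Put it into the lib directory:

cd /export/server/flink/lib

Distribute it: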

for i in {2..3}; do scp -r flink-shaded-hadoop-2-uber-2.7.5-10.0.jar node$i:$PWD; done

13. Restart the Flink cluster; on node1 run:

/export/server/flink/bin/start-cluster.sh

14. Check with jps: the Flink processes are now running on all three machines.

3.3 Testing

1. Open the Web UI

http://node1:8081/#/job-manager/config

http://node2:8081/#/job-manager/config

2. Run the WordCount example

/export/server/flink/bin/flink run /export/server/flink/examples/batch/WordCount.jar

3. Kill one of the masters (its JobManager process)

4. Run WordCount again; it still completes normally

/export/server/flink/bin/flink run /export/server/flink/examples/batch/WordCount.jar

5. Stop the cluster

/export/server/flink/bin/stop-cluster.sh
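For step 3, the JobManager process can be found in jps output under the name StandaloneSessionClusterEntrypoint (listed in section 1.3). A sketch, assuming jps is on the PATH and one JobManager runs per node; jobmanager_pid and kill_jobmanager are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Print the PID of the standalone JobManager from `jps` output on stdin.
jobmanager_pid() {
  awk '$2 == "StandaloneSessionClusterEntrypoint" { print $1 }'
}

# Kill the local JobManager, if one is running.
kill_jobmanager() {
  local pid
  pid="$(jps | jobmanager_pid)"
  if [ -z "$pid" ]; then
    echo "no JobManager running on $(hostname)" >&2
    return 1
  fi
  kill "$pid"
}
```

Run `kill_jobmanager` on node1 or node2 before step 4; killing the currently active JobManager is what actually exercises the failover.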

4 Flink on YARN Mode

4.1 How It Works

4.1.1 Why Use Flink on YARN?

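In practice, Flink is most often deployed in Flink on YARN mode, for the following reasons:

1. YARN allocates resources on demand, which improves cluster resource utilization.

2. YARN jobs have priorities, and jobs run according to their priority.

3. The YARN scheduler handles failover of every role automatically:

○ Both the JobManager process and the TaskManager processes are monitored by the YARN NodeManager.

○ If the JobManager process exits abnormally, the YARN ResourceManager reschedules the JobManager onto another machine.

○ If a TaskManager process exits abnormally, the JobManager is notified, requests resources from the YARN ResourceManager again, and restarts the TaskManager.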

4.1.2 Flink如何和Yarn进行交互?

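1. The client uploads the jar and configuration files to HDFS.

2. The client submits the job to the YARN ResourceManager and requests resources.

3. The ResourceManager allocates a container and starts the ApplicationMaster in it; the ApplicationMaster loads Flink's jar and configuration, builds the environment, and starts the JobManager.

The JobManager and the ApplicationMaster run in the same container.

Once both have started, the ApplicationMaster knows the JobManager's address (its own host).

It then generates a new Flink configuration file for the TaskManagers so that they can connect to the JobManager.

This configuration file is also uploaded to HDFS.

The ApplicationMaster container also serves Flink's web interface.

All ports allocated by YARN are ephemeral, which lets users run several Flink instances in parallel.

4. The ApplicationMaster requests worker resources from the ResourceManager; the NodeManagers load Flink's jar and configuration, build the environment, and start the TaskManagers.

5. Once started, each TaskManager sends heartbeats to the JobManager and waits for tasks to be assigned.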

4.1.3 Two Modes

4.1.3.1 Session Mode

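Characteristics: resources are requested in advance, and the JobManager and TaskManagers are started up front

Advantage: jobs reuse resources that have already been allocated instead of requesting them on every submission, which improves efficiency

Disadvantage: resources are not released after jobs finish, so they stay occupied

Use cases: frequent job submissions and many small jobs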

4.1.3.2 Per-Job Mode

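Characteristics: resources are requested for every job submission

Advantage: resources are released as soon as the job finishes, so they are not held indefinitely

Disadvantage: every submission has to request resources, which takes time and slows execution

Use cases: few jobs, or large jobs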

4.2 Steps

1. Disable YARN's memory checks

vim /export/server/hadoop/etc/hadoop/yarn-site.xml

Add:

<!-- Disable YARN memory checks -->

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

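Explanation:

These settings control whether YARN starts a thread that checks how much physical (pmem) and virtual (vmem) memory each task uses and kills any task that exceeds its allocation; both default to true.

We disable them because Flink on YARN easily exceeds these limits, in which case YARN would kill the job.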

2. Sync the file to the other nodes

scp -r /export/server/hadoop/etc/hadoop/yarn-site.xml node2:/export/server/hadoop/etc/hadoop/yarn-site.xml

scp -r /export/server/hadoop/etc/hadoop/yarn-site.xml node3:/export/server/hadoop/etc/hadoop/yarn-site.xml

3. Restart YARN

/export/server/hadoop/sbin/stop-yarn.sh

/export/server/hadoop/sbin/start-yarn.sh

4.3 Testing

4.3.1 Session Mode

yarn-session.sh (allocates resources) + flink run (submits jobs)

1. Start a Flink session on YARN; on node1 run:

/export/server/flink/bin/yarn-session.sh -n 2 -tm 800 -s 1 -d

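Explanation:

This requests 2 CPUs and 1600 MB of memory in total (2 TaskManagers × 800 MB, 1 slot each). Since Flink 1.5, TaskManagers in a session are normally started on demand when jobs are submitted, so the actual allocation may differ.

# -n: number of containers to request, i.e. the number of TaskManagers

# -tm: memory per TaskManager (MB)

# -s: number of slots per TaskManager

# -d: run in the background (detached)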
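The resource figures above come from multiplying the per-TaskManager settings by the TaskManager count. A sketch of that arithmetic; session_resources is hypothetical, JobManager memory is not included, and the vcore figure assumes Flink's default of one vcore per slot for each YARN container (yarn.containers.vcores):

```shell
#!/usr/bin/env bash
# Totals for a yarn-session with N TaskManagers (-n), TM memory in MB (-tm)
# and slots per TaskManager (-s). JobManager memory is not included.
# vcores assumes one vcore per slot (Flink's default for yarn.containers.vcores).
session_resources() {
  local n="$1" tm_mb="$2" slots="$3"
  echo "taskmanagers=$n memory_mb=$(( n * tm_mb )) vcores=$(( n * slots )) slots=$(( n * slots ))"
}
```

`session_resources 2 800 1` reproduces the 2 CPUs and 1600 MB stated above.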

Note:

The following warning can be ignored:

WARN org.apache.hadoop.hdfs.DFSClient - Caught exception

java.lang.InterruptedException

2. Check the YARN UI

3. Submit a job with flink run:

/export/server/flink/bin/flink run /export/server/flink/examples/batch/WordCount.jar

When it finishes, you can keep submitting other small jobs to the same session:

/export/server/flink/bin/flink run /export/server/flink/examples/batch/WordCount.jar

4. Click the ApplicationMaster link in the YARN UI to open the Flink web UI

5. Shut down the yarn-session (replace the application ID with your own):

yarn application -kill application_1599402747874_0001

rm -rf /tmp/.yarn-properties-root
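Instead of copying the application ID from the YARN UI, it can be read from the properties file that yarn-session.sh leaves in /tmp. A sketch, assuming the file is named after the current user and stores the ID as `applicationID=<id>` (check your file with cat before relying on it); yarn_props_file, session_app_id and kill_session are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Assumed location of the properties file written by yarn-session.sh
# (this guide shows /tmp/.yarn-properties-root for the root user).
yarn_props_file() {
  echo "/tmp/.yarn-properties-${USER:-$(id -un)}"
}

# Print the session's YARN application ID.
# Assumption: the file stores it as "applicationID=<id>".
session_app_id() {
  local props="${1:-$(yarn_props_file)}"
  sed -n 's/^applicationID=//p' "$props"
}

# Kill the session and remove the stale properties file.
kill_session() {
  local props="${1:-$(yarn_props_file)}" app_id
  app_id="$(session_app_id "$props")"
  if [ -z "$app_id" ]; then
    echo "no application ID found in $props" >&2
    return 1
  fi
  yarn application -kill "$app_id" && rm -f "$props"
}
```

Running `kill_session` as root replaces the two commands above in one step.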

4.3.2 Per-Job Detached Mode

1. Submit the job directly

/export/server/flink/bin/flink run -m yarn-cluster -yjm 1024 -ytm 1024 /export/server/flink/examples/batch/WordCount.jar

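# -m: JobManager address; yarn-cluster starts a new per-job cluster on YARN

# -yjm 1024: JobManager memory (MB)

# -ytm 1024: TaskManager memory (MB)

# add -d to run detached, so the client returns right after submission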
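The help output in section 4.4 also lists the generic -t and -D options. A sketch of the same submission with -t yarn-per-job, assuming -yjm/-ytm correspond to the jobmanager.memory.process.size and taskmanager.memory.process.size keys of the Flink 1.12 memory model; run_per_job, FLINK_HOME and DRY_RUN are hypothetical names here:

```shell
#!/usr/bin/env bash
# Submit a jar as a YARN per-job cluster with the generic CLI options.
# Args: JobManager MB, TaskManager MB, jar path, then program arguments.
# DRY_RUN=1 only prints the command.
run_per_job() {
  local jm_mb="$1" tm_mb="$2" jar="$3"
  shift 3
  local flink="${FLINK_HOME:-/export/server/flink}/bin/flink"
  local cmd=("$flink" run -t yarn-per-job
    "-Djobmanager.memory.process.size=${jm_mb}m"
    "-Dtaskmanager.memory.process.size=${tm_mb}m"
    "$jar" "$@")
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "${cmd[*]}"; else "${cmd[@]}"; fi
}
```

`DRY_RUN=1 run_per_job 1024 1024 /export/server/flink/examples/batch/WordCount.jar` prints the command without submitting it, which is a cheap way to compare it against the -m yarn-cluster form above.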

2. Check the UI

http://node1:8088/cluster

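3. Note:

In earlier versions, if you have used Flink on YARN and get an error when switching back to standalone mode, delete /tmp/.yarn-properties-root:

rm -rf /tmp/.yarn-properties-root

This is because by default the client looks for the JobManager of the yarn-session recorded in that file, i.e. an existing session on the YARN cluster.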

4.4 Parameter Summary

[root@node1 bin]# /export/server/flink/bin/flink --help

./flink <ACTION> [OPTIONS] [ARGUMENTS]

The following actions are available:

Action "run" compiles and runs a program.

Syntax: run [OPTIONS] <jar-file> <arguments>

"run" action options:

-c,--class <classname> Class with the program entry point

("main()" method). Only needed if the

JAR file does not specify the class in

its manifest.

-C,--classpath <url> Adds a URL to each user code

classloader on all nodes in the

cluster. The paths must specify a

protocol (e.g. file://) and be

accessible on all nodes (e.g. by means

of a NFS share). You can use this

option multiple times for specifying

more than one URL. The protocol must

be supported by the {@link

java.net.URLClassLoader}.

-d,--detached If present, runs the job in detached

mode

-n,--allowNonRestoredState Allow to skip savepoint state that

cannot be restored. You need to allow

this if you removed an operator from

your program that was part of the

program when the savepoint was

triggered.

-p,--parallelism <parallelism> The parallelism with which to run the

program. Optional flag to override the

default value specified in the

configuration.

-py,--python <pythonFile> Python script with the program entry

point. The dependent resources can be

configured with the `--pyFiles`

option.

-pyarch,--pyArchives <arg> Add python archive files for job. The

archive files will be extracted to the

working directory of python UDF

worker. Currently only zip-format is

supported. For each archive file, a

target directory be specified. If the

target directory name is specified,

the archive file will be extracted to

a name can directory with the

specified name. Otherwise, the archive

file will be extracted to a directory

with the same name of the archive

file. The files uploaded via this

option are accessible via relative

path. '#' could be used as the

separator of the archive file path and

the target directory name. Comma (',')

could be used as the separator to

specify multiple archive files. This

option can be used to upload the

virtual environment, the data files

used in Python UDF (e.g.: --pyArchives

file:///tmp/py37.zip,file:///tmp/data.

zip#data --pyExecutable

py37.zip/py37/bin/python). The data

files could be accessed in Python UDF,

e.g.: f = open('data/data.txt', 'r').

-pyexec,--pyExecutable <arg> Specify the path of the python

interpreter used to execute the python

UDF worker (e.g.: --pyExecutable

/usr/local/bin/python3). The python

UDF worker depends on Python 3.5+,

Apache Beam (version == 2.23.0), Pip

(version >= 7.1.0) and SetupTools

(version >= 37.0.0). Please ensure

that the specified environment meets

the above requirements.

-pyfs,--pyFiles <pythonFiles> Attach custom python files for job.

These files will be added to the

PYTHONPATH of both the local client

and the remote python UDF worker. The

standard python resource file suffixes

such as .py/.egg/.zip or directory are

all supported. Comma (',') could be

used as the separator to specify

multiple files (e.g.: --pyFiles

file:///tmp/myresource.zip,hdfs:///$na

menode_address/myresource2.zip).

-pym,--pyModule <pythonModule> Python module with the program entry

point. This option must be used in

conjunction with `--pyFiles`.

-pyreq,--pyRequirements <arg> Specify a requirements.txt file which

defines the third-party dependencies.

These dependencies will be installed

and added to the PYTHONPATH of the

python UDF worker. A directory which

contains the installation packages of

these dependencies could be specified

optionally. Use '#' as the separator

if the optional parameter exists

(e.g.: --pyRequirements

file:///tmp/requirements.txt#file:///t

mp/cached_dir).

-s,--fromSavepoint <savepointPath> Path to a savepoint to restore the job

from (for example

hdfs:///flink/savepoint-1537).

-sae,--shutdownOnAttachedExit If the job is submitted in attached

mode, perform a best-effort cluster

shutdown when the CLI is terminated

abruptly, e.g., in response to a user

interrupt, such as typing Ctrl + C.

Options for Generic CLI mode:

-D <property=value> Allows specifying multiple generic configuration

options. The available options can be found at

https://ci.apache.org/projects/flink/flink-docs-stabl

e/ops/config.html

-e,--executor <arg> DEPRECATED: Please use the -t option instead which is

also available with the "Application Mode".

The name of the executor to be used for executing the

given job, which is equivalent to the

"execution.target" config option. The currently

available executors are: "remote", "local",

"kubernetes-session", "yarn-per-job", "yarn-session".

-t,--target <arg> The deployment target for the given application,

which is equivalent to the "execution.target" config

option. For the "run" action the currently available

targets are: "remote", "local", "kubernetes-session",

"yarn-per-job", "yarn-session". For the

"run-application" action the currently available

targets are: "kubernetes-application",

"yarn-application".

Options for yarn-cluster mode:

-d,--detached If present, runs the job in detached

mode

-m,--jobmanager <arg> Set to yarn-cluster to use YARN

execution mode.

-yat,--yarnapplicationType <arg> Set a custom application type for the

application on YARN

-yD <property=value> use value for given property

-yd,--yarndetached If present, runs the job in detached

mode (deprecated; use non-YARN

specific option instead)

-yh,--yarnhelp Help for the Yarn session CLI.

-yid,--yarnapplicationId <arg> Attach to running YARN session

-yj,--yarnjar <arg> Path to Flink jar file

-yjm,--yarnjobManagerMemory <arg> Memory for JobManager Container with

optional unit (default: MB)

-ynl,--yarnnodeLabel <arg> Specify YARN node label for the YARN

application

-ynm,--yarnname <arg> Set a custom name for the application

on YARN

-yq,--yarnquery Display available YARN resources

(memory, cores)

-yqu,--yarnqueue <arg> Specify YARN queue.

-ys,--yarnslots <arg> Number of slots per TaskManager

-yt,--yarnship <arg> Ship files in the specified directory

(t for transfer)

-ytm,--yarntaskManagerMemory <arg> Memory per TaskManager Container with

optional unit (default: MB)

-yz,--yarnzookeeperNamespace <arg> Namespace to create the Zookeeper

sub-paths for high availability mode

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper

sub-paths for high availability mode

Options for default mode:

-D <property=value> Allows specifying multiple generic

configuration options. The available

options can be found at

https://ci.apache.org/projects/flink/flink-

docs-stable/ops/config.html

-m,--jobmanager <arg> Address of the JobManager to which to

connect. Use this flag to connect to a

different JobManager than the one specified

in the configuration. Attention: This

option is respected only if the

high-availability configuration is NONE.

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper sub-paths

for high availability mode

Action "run-application" runs an application in Application Mode.

Syntax: run-application [OPTIONS] <jar-file> <arguments>

Options for Generic CLI mode:

-D <property=value> Allows specifying multiple generic configuration

options. The available options can be found at

https://ci.apache.org/projects/flink/flink-docs-stabl

e/ops/config.html

-e,--executor <arg> DEPRECATED: Please use the -t option instead which is

also available with the "Application Mode".

The name of the executor to be used for executing the

given job, which is equivalent to the

"execution.target" config option. The currently

available executors are: "remote", "local",

"kubernetes-session", "yarn-per-job", "yarn-session".

-t,--target <arg> The deployment target for the given application,

which is equivalent to the "execution.target" config

option. For the "run" action the currently available

targets are: "remote", "local", "kubernetes-session",

"yarn-per-job", "yarn-session". For the

"run-application" action the currently available

targets are: "kubernetes-application",

"yarn-application".

Action "info" shows the optimized execution plan of the program (JSON).

Syntax: info [OPTIONS] <jar-file> <arguments>

"info" action options:

-c,--class <classname> Class with the program entry point

("main()" method). Only needed if the JAR

file does not specify the class in its

manifest.

-p,--parallelism <parallelism> The parallelism with which to run the

program. Optional flag to override the

default value specified in the

configuration.

Action "list" lists running and scheduled programs.

Syntax: list [OPTIONS]

"list" action options:

-a,--all Show all programs and their JobIDs

-r,--running Show only running programs and their JobIDs

-s,--scheduled Show only scheduled programs and their JobIDs

Options for Generic CLI mode:

-D <property=value> Allows specifying multiple generic configuration

options. The available options can be found at

https://ci.apache.org/projects/flink/flink-docs-stabl

e/ops/config.html

-e,--executor <arg> DEPRECATED: Please use the -t option instead which is

also available with the "Application Mode".

The name of the executor to be used for executing the

given job, which is equivalent to the

"execution.target" config option. The currently

available executors are: "remote", "local",

"kubernetes-session", "yarn-per-job", "yarn-session".

-t,--target <arg> The deployment target for the given application,

which is equivalent to the "execution.target" config

option. For the "run" action the currently available

targets are: "remote", "local", "kubernetes-session",

"yarn-per-job", "yarn-session". For the

"run-application" action the currently available

targets are: "kubernetes-application",

"yarn-application".

Options for yarn-cluster mode:

-m,--jobmanager <arg> Set to yarn-cluster to use YARN execution

mode.

-yid,--yarnapplicationId <arg> Attach to running YARN session

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper

sub-paths for high availability mode

Options for default mode:

-D <property=value> Allows specifying multiple generic

configuration options. The available

options can be found at

https://ci.apache.org/projects/flink/flink-

docs-stable/ops/config.html

-m,--jobmanager <arg> Address of the JobManager to which to

connect. Use this flag to connect to a

different JobManager than the one specified

in the configuration. Attention: This

option is respected only if the

high-availability configuration is NONE.

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper sub-paths

for high availability mode

Action "stop" stops a running program with a savepoint (streaming jobs only).

Syntax: stop [OPTIONS] <Job ID>

"stop" action options:

-d,--drain Send MAX_WATERMARK before taking the

savepoint and stopping the pipelne.

-p,--savepointPath <savepointPath> Path to the savepoint (for example

hdfs:///flink/savepoint-1537). If no

directory is specified, the configured

default will be used

("state.savepoints.dir").

Options for Generic CLI mode:

-D <property=value> Allows specifying multiple generic configuration

options. The available options can be found at

https://ci.apache.org/projects/flink/flink-docs-stabl

e/ops/config.html

-e,--executor <arg> DEPRECATED: Please use the -t option instead which is

also available with the "Application Mode".

The name of the executor to be used for executing the

given job, which is equivalent to the

"execution.target" config option. The currently

available executors are: "remote", "local",

"kubernetes-session", "yarn-per-job", "yarn-session".

-t,--target <arg> The deployment target for the given application,

which is equivalent to the "execution.target" config

option. For the "run" action the currently available

targets are: "remote", "local", "kubernetes-session",

"yarn-per-job", "yarn-session". For the

"run-application" action the currently available

targets are: "kubernetes-application",

"yarn-application".

Options for yarn-cluster mode:

-m,--jobmanager <arg> Set to yarn-cluster to use YARN execution

mode.

-yid,--yarnapplicationId <arg> Attach to running YARN session

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper

sub-paths for high availability mode

Options for default mode:

-D <property=value> Allows specifying multiple generic

configuration options. The available

options can be found at

https://ci.apache.org/projects/flink/flink-

docs-stable/ops/config.html

-m,--jobmanager <arg> Address of the JobManager to which to

connect. Use this flag to connect to a

different JobManager than the one specified

in the configuration. Attention: This

option is respected only if the

high-availability configuration is NONE.

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper sub-paths

for high availability mode

Action "cancel" cancels a running program.

Syntax: cancel [OPTIONS] <Job ID>

"cancel" action options:

-s,--withSavepoint <targetDirectory> **DEPRECATION WARNING**: Cancelling

a job with savepoint is deprecated.

Use "stop" instead.

Trigger savepoint and cancel job.

The target directory is optional. If

no directory is specified, the

configured default directory

(state.savepoints.dir) is used.

Options for Generic CLI mode:

-D <property=value> Allows specifying multiple generic configuration

options. The available options can be found at

https://ci.apache.org/projects/flink/flink-docs-stabl

e/ops/config.html

-e,--executor <arg> DEPRECATED: Please use the -t option instead which is

also available with the "Application Mode".

The name of the executor to be used for executing the

given job, which is equivalent to the

"execution.target" config option. The currently

available executors are: "remote", "local",

"kubernetes-session", "yarn-per-job", "yarn-session".

-t,--target <arg> The deployment target for the given application,

which is equivalent to the "execution.target" config

option. For the "run" action the currently available

targets are: "remote", "local", "kubernetes-session",

"yarn-per-job", "yarn-session". For the

"run-application" action the currently available

targets are: "kubernetes-application",

"yarn-application".

Options for yarn-cluster mode:

-m,--jobmanager <arg> Set to yarn-cluster to use YARN execution

mode.

-yid,--yarnapplicationId <arg> Attach to running YARN session

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper

sub-paths for high availability mode

Options for default mode:

-D <property=value> Allows specifying multiple generic

configuration options. The available

options can be found at

https://ci.apache.org/projects/flink/flink-

docs-stable/ops/config.html

-m,--jobmanager <arg> Address of the JobManager to which to

connect. Use this flag to connect to a

different JobManager than the one specified

in the configuration. Attention: This

option is respected only if the

high-availability configuration is NONE.

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper sub-paths

for high availability mode

Action "savepoint" triggers savepoints for a running job or disposes existing ones.

Syntax: savepoint [OPTIONS] <Job ID> [<target directory>]

"savepoint" action options:

-d,--dispose <arg> Path of savepoint to dispose.

-j,--jarfile <jarfile> Flink program JAR file.

Options for Generic CLI mode:

-D <property=value> Allows specifying multiple generic configuration

options. The available options can be found at

https://ci.apache.org/projects/flink/flink-docs-stabl

e/ops/config.html

-e,--executor <arg> DEPRECATED: Please use the -t option instead which is

also available with the "Application Mode".

The name of the executor to be used for executing the

given job, which is equivalent to the

"execution.target" config option. The currently

available executors are: "remote", "local",

"kubernetes-session", "yarn-per-job", "yarn-session".

-t,--target <arg> The deployment target for the given application,

which is equivalent to the "execution.target" config

option. For the "run" action the currently available

targets are: "remote", "local", "kubernetes-session",

"yarn-per-job", "yarn-session". For the

"run-application" action the currently available

targets are: "kubernetes-application",

"yarn-application".

Options for yarn-cluster mode:

-m,--jobmanager <arg> Set to yarn-cluster to use YARN execution

mode.

-yid,--yarnapplicationId <arg> Attach to running YARN session

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper

sub-paths for high availability mode

Options for default mode:

-D <property=value> Allows specifying multiple generic

configuration options. The available

options can be found at

https://ci.apache.org/projects/flink/flink-

docs-stable/ops/config.html

-m,--jobmanager <arg> Address of the JobManager to which to

connect. Use this flag to connect to a

different JobManager than the one specified

in the configuration. Attention: This

option is respected only if the

high-availability configuration is NONE.

-z,--zookeeperNamespace <arg> Namespace to create the Zookeeper sub-paths

for high availability mode