Deploying Kubernetes Container Cluster Node Components (Part 5)

Download the Kubernetes components on the master:

wget https://storage.googleapis.com/kubernetes-release/release/v1.9.2/kubernetes-server-linux-amd64.tar.gz

Download the Kubernetes node components on the nodes:

wget https://dl.k8s.io/v1.9.2/kubernetes-node-linux-amd64.tar.gz
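Both downloads differ only in the release version and the role in the file name, so the URL can be built from two parameters. The sketch below is illustrative: the function name `k8s_tarball_url` is hypothetical, and it assumes the server tarball is published under the same `dl.k8s.io` path pattern as the node tarball shown above.

```shell
#!/bin/bash
# Build a download URL for a Kubernetes release tarball.
# Assumption: server and node tarballs share the dl.k8s.io path pattern.
k8s_tarball_url() {
  version=$1   # release version, e.g. v1.9.2
  role=$2      # "server" or "node"
  printf 'https://dl.k8s.io/%s/kubernetes-%s-linux-amd64.tar.gz\n' "$version" "$role"
}

K8S_VERSION="v1.9.2"
k8s_tarball_url "$K8S_VERSION" server
k8s_tarball_url "$K8S_VERSION" node
```

On a node this could be used as `wget "$(k8s_tarball_url v1.9.2 node)"`, which keeps the version in one place when the same steps are repeated on several machines.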

Deploying the master components

On the master:

Copy the binaries into the bin directory

[root@master bin]# pwd
/root/master_pkg/kubernetes/server/bin
[root@master bin]# cp kube-controller-manager kube-scheduler kube-apiserver /opt/kubernetes/bin/
[root@master bin]# chmod +x /opt/kubernetes/bin/*

Add the apiserver.sh script

#!/bin/bash

# Arguments: master IP address, comma-separated etcd endpoints
MASTER_ADDRESS=${1:-"192.168.1.195"}
ETCD_SERVERS=${2:-"http://127.0.0.1:2379"}

# Write the options file read by the systemd unit
cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \\
--v=4 \\
--etcd-servers=${ETCD_SERVERS} \\
--insecure-bind-address=127.0.0.1 \\
--bind-address=${MASTER_ADDRESS} \\
--insecure-port=8080 \\
--secure-port=6443 \\
--advertise-address=${MASTER_ADDRESS} \\
--allow-privileged=true \\
--service-cluster-ip-range=10.10.10.0/24 \\
--admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--kubelet-https=true \\
--enable-bootstrap-token-auth \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-50000 \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/kubernetes/ssl/ca.pem \\
--etcd-certfile=/opt/kubernetes/ssl/server.pem \\
--etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"
EOF

# Write the systemd unit
cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver
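The script relies on how an unquoted heredoc handles escapes: `${VAR}` is expanded when the script runs, `\\` is written to the file as a single `\`, and `\$` is written as a literal `$` so that systemd, not the shell, resolves the variable later. The following is a minimal sketch of that behaviour only; `DEMO_FILE`, `DEMO_ADDRESS` and `DEMO_OPTS` are hypothetical names and the output goes to a scratch file rather than a real configuration path.

```shell
#!/bin/bash
# Show what an unquoted heredoc does with ${VAR}, \\ and \$.
DEMO_FILE=$(mktemp)            # hypothetical scratch file
DEMO_ADDRESS="192.168.1.101"   # sample value

cat <<EOF >"$DEMO_FILE"
DEMO_OPTS="--bind-address=${DEMO_ADDRESS} \\
--secure-port=6443"
ExecStart=/bin/true \$DEMO_OPTS
EOF

# The file now holds the expanded address, a single trailing backslash,
# and an unexpanded $DEMO_OPTS.
cat "$DEMO_FILE"
```

Inspecting the generated file this way before restarting a service is a cheap check that the options file contains what was intended.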

Run the apiserver.sh script, passing the master IP and the etcd endpoints:

[root@master bin]# ./apiserver.sh 192.168.1.101 https://192.168.1.101:2379,https://192.168.1.102:2379,https://192.168.1.103:2379
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

Place token.csv in the cfg directory

cp /opt/kubernetes/ssl/token.csv /opt/kubernetes/cfg/
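The file passed to `--token-auth-file` is a CSV with one entry per line in the form `token,user,uid,"group"`. The sketch below only illustrates that format. The user name `kubelet-bootstrap` matches the one that appears later in this article; the uid `10001`, the group `system:kubelet-bootstrap`, and the helper names are assumptions. The token actually used must be the same one embedded in bootstrap.kubeconfig, so this is not meant to replace the existing file.

```shell
#!/bin/bash
# Generate a random token: 16 random bytes rendered as 32 hex characters.
gen_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Format one static-token entry: token,user,uid,"group"
token_csv_line() {
  printf '%s,%s,%s,"%s"\n' "$1" "$2" "$3" "$4"
}

BOOTSTRAP_TOKEN=$(gen_token)
# uid and group below are illustrative values, not taken from the article
token_csv_line "$BOOTSTRAP_TOKEN" kubelet-bootstrap 10001 system:kubelet-bootstrap
```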

Start kube-apiserver

[root@master bin]# systemctl start kube-apiserver

Add the controller-manager.sh script

#!/bin/bash

# Argument: address of the API server's insecure port
MASTER_ADDRESS=${1:-"127.0.0.1"}

cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\
--v=4 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect=true \\
--address=127.0.0.1 \\
--service-cluster-ip-range=10.10.10.0/24 \\
--cluster-name=kubernetes \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager

Run the script:

[root@master bin]# ./controller-manager.sh 127.0.0.1

Check that the service has started

[root@master bin]# ps -ef | grep controller-manager
root 16464 1 10 14:34 ? 00:00:01 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.10.10.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --root-ca-file=/opt/kubernetes/ssl/ca.pem

Add the scheduler.sh script

#!/bin/bash

# Argument: address of the API server's insecure port
MASTER_ADDRESS=${1:-"127.0.0.1"}

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \\
--v=4 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-scheduler
systemctl restart kube-scheduler

Run the script

[root@master bin]# ./scheduler.sh 127.0.0.1

Check that the service has started

[root@master bin]# ps -ef | grep scheduler
root 16531 1 4 14:37 ? 00:00:00 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

Check the status of the cluster components

[root@master bin]# kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health": "true"}
etcd-2               Healthy   {"health": "true"}
etcd-1               Healthy   {"health": "true"}
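When this check is repeated often, it can help to filter the table down to the components that are not Healthy. The sketch below assumes the column layout shown above (NAME first, STATUS second); the function name `unhealthy_components` and the sample input with an unhealthy scheduler are made up for illustration.

```shell
#!/bin/bash
# Read `kubectl get cs` output on stdin and print the names of
# components whose STATUS column is not "Healthy".
unhealthy_components() {
  awk 'NR > 1 && $2 != "Healthy" { print $1 }'
}

# Hypothetical sample input; on a real master this would be:
#   kubectl get cs | unhealthy_components
unhealthy_components <<'EOF'
NAME                 STATUS      MESSAGE              ERROR
controller-manager   Healthy     ok
scheduler            Unhealthy   connection refused
etcd-0               Healthy     {"health": "true"}
EOF
```

An empty result means every listed component reported Healthy.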

Deploying the nodes

Copy the kubeconfig files generated on the master to the cfg directory on both nodes

[root@master ssl]# pwd
/opt/kubernetes/ssl
[root@master ssl]# scp *kubeconfig root@192.168.1.102:/opt/kubernetes/cfg/
[root@master ssl]# scp *kubeconfig root@192.168.1.103:/opt/kubernetes/cfg/

On node1:

Extract the kubernetes-node-linux-amd64.tar.gz package

[root@node1 node_pkg]# tar xvf kubernetes-node-linux-amd64.tar.gz

Copy the extracted binaries into the bin directory

[root@node1 bin]# cp kubelet kube-proxy /opt/kubernetes/bin/

[root@node1 bin]# chmod +x /opt/kubernetes/bin/*

Add the kubelet.sh script

#!/bin/bash

# Arguments: this node's IP address, cluster DNS service IP
NODE_ADDRESS=${1:-"192.168.1.196"}
DNS_SERVER_IP=${2:-"10.10.10.2"}

cat <<EOF >/opt/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \\
--v=4 \\
--address=${NODE_ADDRESS} \\
--hostname-override=${NODE_ADDRESS} \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--cert-dir=/opt/kubernetes/ssl \\
--allow-privileged=true \\
--cluster-dns=${DNS_SERVER_IP} \\
--cluster-domain=cluster.local \\
--fail-swap-on=false \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kubelet
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet

Run the script

[root@node1 bin]# ./kubelet.sh 192.168.1.102 10.10.10.2
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Note: 192.168.1.102 is the IP of the current node, and 10.10.10.2 is the cluster DNS address.
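The DNS address given to kubelet.sh will be used as the cluster IP of the DNS service, so it should fall inside the `--service-cluster-ip-range` configured in apiserver.sh (10.10.10.0/24 in this article). A minimal sketch of that check in plain shell arithmetic follows; the helper names `ip_to_int` and `ip_in_cidr` are hypothetical and only IPv4 is handled.

```shell
#!/bin/bash
# Convert a dotted-quad IPv4 address to an integer.
# The function body runs in a subshell so the IFS change stays local.
ip_to_int() (
  IFS=.
  set -- $1   # split the address into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed if address $1 lies inside CIDR block $2 (e.g. 10.10.10.0/24).
ip_in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

DNS_SERVER_IP="10.10.10.2"
SERVICE_CIDR="10.10.10.0/24"
if ip_in_cidr "$DNS_SERVER_IP" "$SERVICE_CIDR"; then
  echo "$DNS_SERVER_IP is inside $SERVICE_CIDR"
else
  echo "$DNS_SERVER_IP is outside $SERVICE_CIDR" >&2
fi
```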

Check whether kubelet has started

The log shows an error: permission was denied when creating the certificate signing request

error: failed to run Kubelet: cannot create certificate signing request: certificatesigningrequests.certificates.k8s.io is forbidden: User "kubelet-bootstrap" cannot create certificatesigningrequests.certificates.k8s.io at the cluster scope: clusterrole.rbac.authorization.k8s.io "system:node-bootstrap" not found

Solution

On the master, create a cluster role binding that grants the kubelet-bootstrap user the required permission

[root@master ssl]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

Start kubelet again on the node (for example, with systemctl restart kubelet)

Create the proxy.sh script

#!/bin/bash

# Argument: this node's IP address
NODE_ADDRESS=${1:-"192.168.1.200"}

# A single backslash before a newline is consumed by the unquoted heredoc,
# so the options end up on one line in the generated file
cat <<EOF >/opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=${NODE_ADDRESS} \
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy

Run the script

[root@node1 ssl]# ./proxy.sh 192.168.1.102

Note: 192.168.1.102 is the address of the current node.

On the master, list the certificate signing requests from the nodes:

[root@master ssl]# kubectl get csr
NAME                                                   AGE       REQUESTOR           CONDITION
node-csr-iVbj9CKPaWhh7VAQfqK16Xz9in4-Byb_XZaDJLz3zfw   11m       kubelet-bootstrap   Pending

Approve the certificate signing request

[root@master ssl]# kubectl certificate approve node-csr-iVbj9CKPaWhh7VAQfqK16Xz9in4-Byb_XZaDJLz3zfw

List the requests again:

[root@master ssl]# kubectl get csr
NAME                                                   AGE       REQUESTOR           CONDITION
node-csr-iVbj9CKPaWhh7VAQfqK16Xz9in4-Byb_XZaDJLz3zfw   14m       kubelet-bootstrap   Approved,Issued
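Copying the long request name by hand is error-prone, so the pending names can be pulled out of the table instead. This is a sketch that assumes the four-column layout shown above, with CONDITION as the last column; the function name `pending_csrs` is hypothetical.

```shell
#!/bin/bash
# Read `kubectl get csr` output on stdin and print the names of
# requests whose CONDITION (last column) is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Sample input in the same layout as the kubectl output in this article.
pending_csrs <<'EOF'
NAME                                                   AGE       REQUESTOR           CONDITION
node-csr-OPWss8__QdJqP6QmudtkaVQWeDh278BxzP35hdeAkZI   17s       kubelet-bootstrap   Pending
node-csr-iVbj9CKPaWhh7VAQfqK16Xz9in4-Byb_XZaDJLz3zfw   28m       kubelet-bootstrap   Approved,Issued
EOF
```

On the master, each printed name could then be passed to `kubectl certificate approve`. Approving requests unseen is only reasonable in a test cluster where every bootstrapping node is known.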

Confirm that the node is in the Ready state

[root@master ssl]# kubectl get node
NAME            STATUS    ROLES     AGE       VERSION
192.168.1.102   Ready     <none>    1m        v1.9.2

On node2:

Copy the files from node1 to node2, or repeat the node1 steps

[root@node1 ssl]# scp -r /opt/kubernetes/bin root@192.168.1.103:/opt/kubernetes
[root@node1 ssl]# scp -r /opt/kubernetes/cfg root@192.168.1.103:/opt/kubernetes

[root@node1 ssl]# scp /usr/lib/systemd/system/kubelet.service root@192.168.1.103:/usr/lib/systemd/system/

[root@node1 ssl]# scp /usr/lib/systemd/system/kube-proxy.service root@192.168.1.103:/usr/lib/systemd/system/

In the kubelet configuration file under cfg on node2, change the IP to the current node's IP

KUBELET_OPTS="--logtostderr=true \
--v=4 \
--address=192.168.1.103 \
--hostname-override=192.168.1.103 \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--cert-dir=/opt/kubernetes/ssl \
--allow-privileged=true \
--cluster-dns=10.10.10.2 \
--cluster-domain=cluster.local \
--fail-swap-on=false \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

In the kube-proxy configuration file under cfg on node2, change the IP to the current node's IP

KUBE_PROXY_OPTS="--logtostderr=true --v=4 --hostname-override=192.168.1.103 --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
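Both edits replace node1's address with node2's in the `--address` and `--hostname-override` options, which can be scripted. The sketch below is one possible way to do it with `sed`; the function name `set_node_ip` is hypothetical, and the demonstration runs against a scratch copy rather than the real files under /opt/kubernetes/cfg.

```shell
#!/bin/bash
# Replace the node IP in --address= and --hostname-override= options.
# Usage: set_node_ip OLD_IP NEW_IP FILE
set_node_ip() {
  old_ip=$1; new_ip=$2; file=$3
  # Escape the dots so they match literally in the sed pattern
  old_re=$(printf '%s' "$old_ip" | sed 's/\./\\./g')
  tmp=$(mktemp)
  sed -e "s/\(--address=\)${old_re}/\1${new_ip}/" \
      -e "s/\(--hostname-override=\)${old_re}/\1${new_ip}/" \
      "$file" >"$tmp"
  # Write back in place so the file keeps its owner and permissions
  cat "$tmp" >"$file"
  rm -f "$tmp"
}

# Demonstration on a scratch file with a shortened kubelet configuration
DEMO_CFG=$(mktemp)
cat <<'EOF' >"$DEMO_CFG"
KUBELET_OPTS="--logtostderr=true \
--address=192.168.1.102 \
--hostname-override=192.168.1.102 \
--cluster-dns=10.10.10.2"
EOF

set_node_ip 192.168.1.102 192.168.1.103 "$DEMO_CFG"
cat "$DEMO_CFG"
```

Restricting the substitution to the two option names leaves other addresses in the file, such as the cluster DNS IP, untouched.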

Start the services

[root@node2 cfg]# systemctl start kubelet

[root@node2 cfg]# systemctl start kube-proxy

On the master, check whether a new request has arrived

[root@master ssl]# kubectl get csr
NAME                                                   AGE       REQUESTOR           CONDITION
node-csr-OPWss8__QdJqP6QmudtkaVQWeDh278BxzP35hdeAkZI   17s       kubelet-bootstrap   Pending
node-csr-iVbj9CKPaWhh7VAQfqK16Xz9in4-Byb_XZaDJLz3zfw   28m       kubelet-bootstrap   Approved,Issued

Approve the certificate signing request

[root@master ssl]# kubectl certificate approve node-csr-OPWss8__QdJqP6QmudtkaVQWeDh278BxzP35hdeAkZI

List the nodes

[root@master ssl]# kubectl get node
NAME            STATUS    ROLES     AGE       VERSION
192.168.1.102   Ready     <none>    15m       v1.9.2
192.168.1.103   Ready     <none>    12s       v1.9.2

Test example

Create an nginx instance:

[root@master ssl]# kubectl run nginx --image=nginx --replicas=3

List the pods

[root@master ssl]# kubectl get pod
NAME                   READY     STATUS              RESTARTS   AGE
nginx-8586cf59-7r4zq   0/1       ContainerCreating   0          10s
nginx-8586cf59-9wpwr   0/1       ContainerCreating   0          10s
nginx-8586cf59-h2n5h   0/1       ContainerCreating   0          10s

List all resource objects

[root@master ssl]# kubectl get all
NAME                       READY     STATUS              RESTARTS   AGE
pod/nginx-8586cf59-7r4zq   0/1       ContainerCreating   0          1m
pod/nginx-8586cf59-9wpwr   0/1       ContainerCreating   0          1m
pod/nginx-8586cf59-h2n5h   0/1       ContainerCreating   0          1m

NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.10.10.1   <none>        443/TCP   1h

NAME                          DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.extensions/nginx   3         3         3            0           1m

NAME                                   DESIRED   CURRENT   READY     AGE
replicaset.extensions/nginx-8586cf59   3         3         0         1m

NAME                    DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx   3         3         3            0           1m

NAME                             DESIRED   CURRENT   READY     AGE
replicaset.apps/nginx-8586cf59   3         3         0         1m

See which node each container is running on

[root@master ssl]# kubectl get pod -o wide
NAME                   READY     STATUS             RESTARTS   AGE       IP            NODE
nginx-8586cf59-7r4zq   0/1       ImagePullBackOff   0          7m        172.17.47.2   192.168.1.103
nginx-8586cf59-9wpwr   1/1       Running            0          7m        172.17.47.3   192.168.1.103
nginx-8586cf59-h2n5h   1/1       Running            0          7m        172.17.45.2   192.168.1.102

Expose a service externally

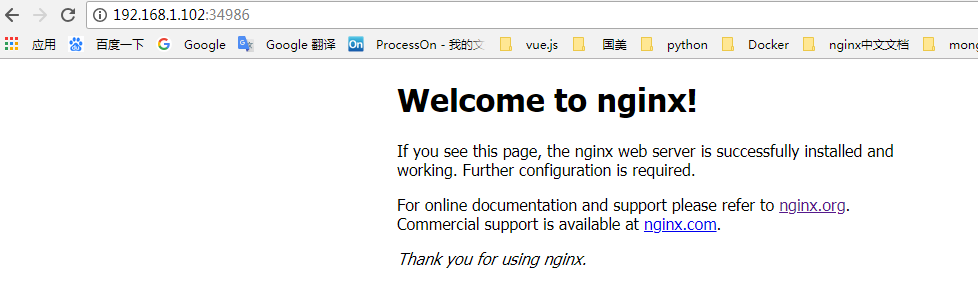

[root@master ssl]# kubectl expose deployment nginx --port=88 --target-port=80 --type=NodePort

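[root@master ssl]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.10.10.1     <none>        443/TCP        2h
nginx        NodePort    10.10.10.130   <none>        88:34986/TCP   13s

Note: port 88 is the service port on the cluster IP, reachable from the nodes.

34986 is the randomly assigned node port, used for access from outside the cluster.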
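The PORT(S) value `88:34986/TCP` packs both numbers into one field: the service port before the colon and the node port after it. The node port is drawn from the `--service-node-port-range` set in apiserver.sh. A small sketch for splitting the field follows; the function names `service_port` and `node_port` are hypothetical.

```shell
#!/bin/bash
# Extract the service port (before the colon) from a PORT(S) value.
service_port() {
  printf '%s\n' "${1%%:*}"
}

# Extract the node port (between the colon and the slash).
node_port() {
  p=${1#*:}
  printf '%s\n' "${p%%/*}"
}

PORTS="88:34986/TCP"
echo "service port: $(service_port "$PORTS")"
echo "node port:    $(node_port "$PORTS")"
```

From a machine outside the cluster, the service would then be reached through a node address and the node port, for example `curl -I http://192.168.1.102:34986`.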

Access port 88 from a node

[root@node1 ssl]# curl -I 10.10.10.130:88
HTTP/1.1 200 OK
Server: nginx/1.15.2
Date: Wed, 08 Aug 2018 08:54:09 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Tue, 24 Jul 2018 13:02:29 GMT
Connection: keep-alive
ETag: "5b572365-264"
Accept-Ranges: bytes