1. Download Spark 3.4.1

2. Download Java JDK 17

3. Set up a Python 3.11.9 virtual environment
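Steps 1 and 3 can be scripted. The following is a minimal sketch that assumes Spark is fetched from the Apache archive (archive.apache.org), `python3.11` is already installed, and the directories match the .bashrc below. The JDK download is left manual because the notes do not say where it came from. The function names are hypothetical, and nothing runs until a function is called.

```shell
#!/usr/bin/env bash
# Sketch only: paths mirror the notes; the archive URL layout is an assumption.
sfw="$HOME/Downloads/sfw"
zpy="$HOME/venvs/zpy311"

# Print the Apache archive URL of a Spark build pre-packaged for Hadoop 3.
spark_tgz_url() {
  printf 'https://archive.apache.org/dist/spark/spark-%s/spark-%s-bin-hadoop3.tgz\n' "$1" "$1"
}

# Download and unpack Spark into $sfw (creates $sfw/spark-<version>-bin-hadoop3).
install_spark() {
  mkdir -p "$sfw"
  curl -fL "$(spark_tgz_url "$1")" | tar -xz -C "$sfw"
}

# Create the Python 3.11 virtual environment used in the rest of the notes.
create_venv() {
  python3.11 -m venv "$zpy"
}

# Usage:
#   install_spark 3.4.1
#   create_venv
```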

.bashrc:

sfw=~/Downloads/sfw

zpy=~/venvs/zpy311

export JAVA_HOME=$sfw/jdk-17.0.12

PATH=$JAVA_HOME/bin:$PATH

export SPARK_HOME=$sfw/spark-3.4.1-bin-hadoop3

PATH=$PATH:$SPARK_HOME/sbin:$SPARK_HOME/bin

export PATH=$zpy/bin:$PATH
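After `source ~/.bashrc`, it is worth confirming that the Java and Spark directories actually landed on PATH before launching pyspark. This is a small sketch that assumes JAVA_HOME and SPARK_HOME are set exactly as in the .bashrc above. The helper names are hypothetical, and only standard shell builtins are used for the check itself.

```shell
#!/usr/bin/env bash
# Sanity check for the .bashrc settings (run after `source ~/.bashrc`).
# Assumption: JAVA_HOME and SPARK_HOME are set as in that file.

# Succeed if directory $1 is one of the colon-separated entries of $PATH.
path_contains() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Report each expected bin directory; return non-zero if any is missing.
check_env() {
  local status=0 dir
  for dir in "$JAVA_HOME/bin" "$SPARK_HOME/bin" "$SPARK_HOME/sbin"; do
    if path_contains "$dir"; then
      echo "ok:      $dir"
    else
      echo "missing: $dir" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage:
#   check_env && java -version && spark-submit --version
```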

zzh@ZZHPC:~$ pyspark

Python 3.11.9 (main, Feb 3 2025, 17:07:54) [GCC 11.4.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

25/02/03 17:33:15 WARN Utils: Your hostname, ZZHPC resolves to a loopback address: 127.0.1.1; using 192.168.1.16 instead (on interface wlo1)

25/02/03 17:33:15 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

25/02/03 17:33:16 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/__ / .__/\_,_/_/ /_/\_\ version 3.4.1

/_/

Using Python version 3.11.9 (main, Feb 3 2025 17:07:54)

Spark context Web UI available at http://192.168.1.16:4040

Spark context available as 'sc' (master = local[*], app id = local-1738575196912).

SparkSession available as 'spark'.

>>>

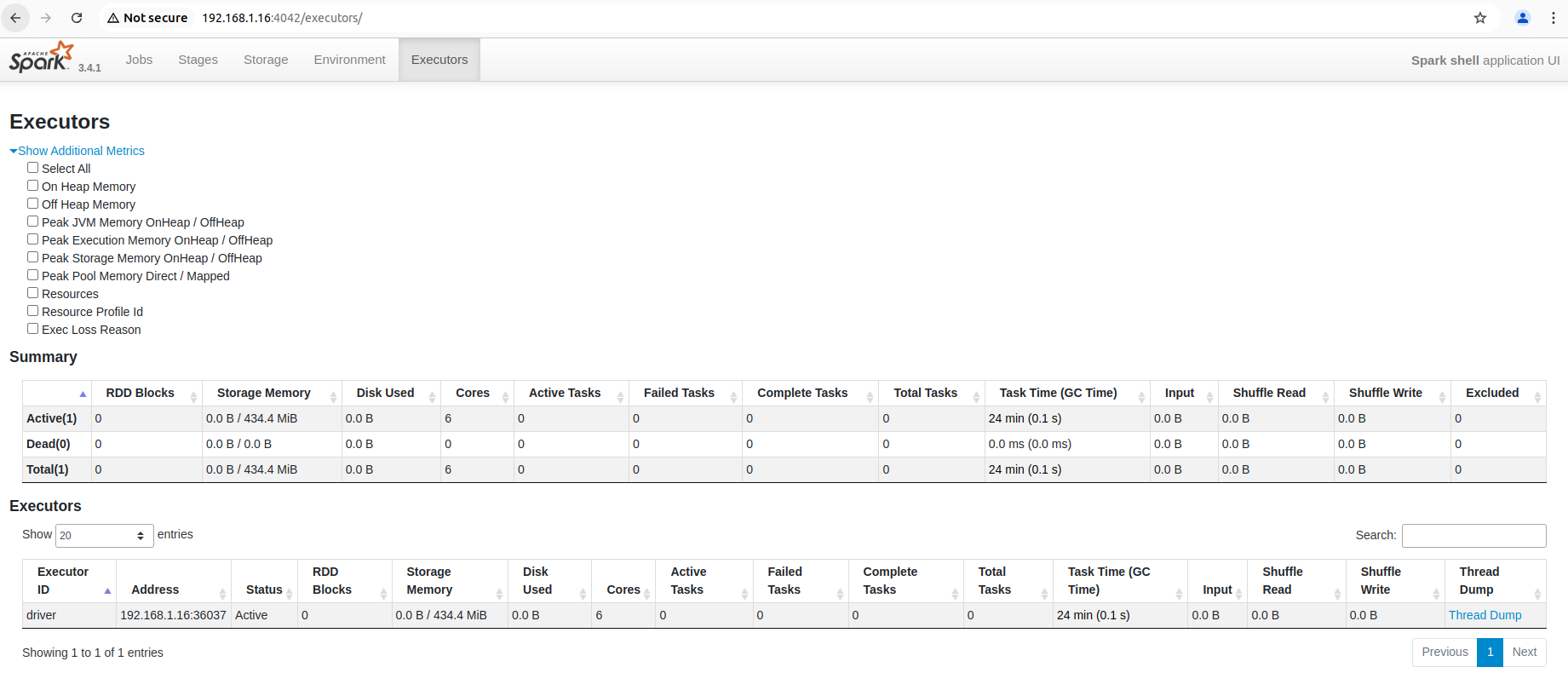

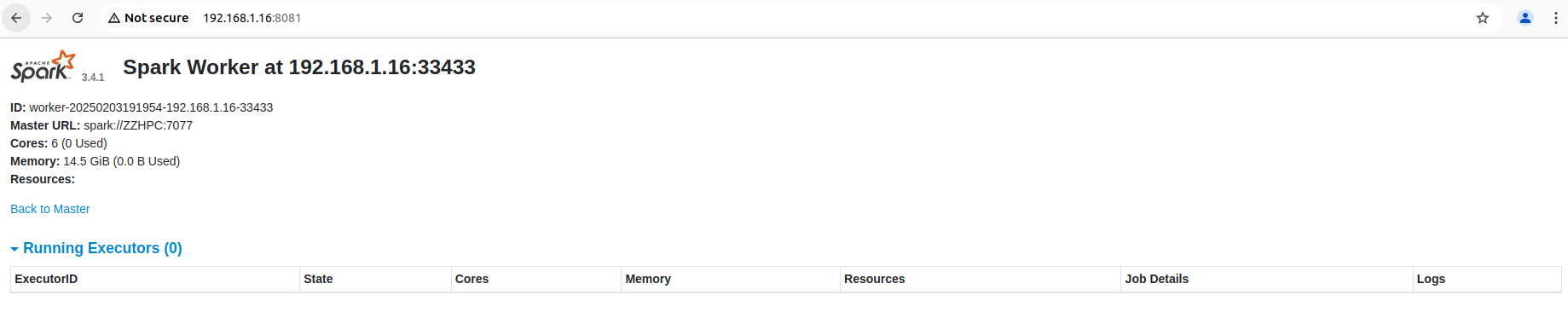

4. Start the master and a worker:

zzh@ZZHPC:~$ start-master.sh

zzh@ZZHPC:~$ start-worker.sh spark://ZZHPC:7077
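The worker needs the master URL in the form spark://HOST:PORT. The master's web UI (port 8080 by default) shows that URL and lists the registered workers. Note that the pyspark session above reported master = local[*], so it ran in-process rather than on this cluster. A shell only uses the cluster when it is given --master. Below is a small helper for building the URL. It assumes the master runs on this machine on Spark's default port 7077, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Build the standalone master URL; 7077 is Spark's default master port.
spark_master_url() {
  # $1 = master host (default: this machine's hostname), $2 = port (default 7077)
  printf 'spark://%s:%s\n' "${1:-$(hostname)}" "${2:-7077}"
}

# Usage (commented out so the sketch has no side effects):
#   start-master.sh
#   start-worker.sh "$(spark_master_url)"
#   pyspark --master "$(spark_master_url)"   # a shell on the cluster instead of local[*]
#   stop-worker.sh && stop-master.sh         # shut both down again
```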

5. Install needed Python packages:

zzh@ZZHPC:~$ which pip

/home/zzh/venvs/zpy311/bin/pip

zzh@ZZHPC:~$ pip install --upgrade pip

zzh@ZZHPC:~$ pip install delta-spark==2.4.0

zzh@ZZHPC:~$ pip install deltalake==0.10.0

zzh@ZZHPC:~$ pip install pandas==2.0.1

zzh@ZZHPC:~$ pip install pyspark==3.4.1

zzh@ZZHPC:~$ pip install jupyterlab==4.0.2

zzh@ZZHPC:~$ pip install sparksql-magic==0.0.3

zzh@ZZHPC:~$ pip install kafka-python==2.0.2

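pandas 2.0.1 did not work: running 'import pandas as pd' failed with:

ValueError: numpy.dtype size changed, may indicate binary incompatibility. Expected 96 from C header, got 88 from PyObject

This is a NumPy binary (ABI) mismatch. The pandas 2.0.1 wheel was compiled against NumPy 1.x, but the environment already had NumPy 2.2.2 (see the pip output below). Uninstall pandas 2.0.1 and install the latest version instead: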

zzh@ZZHPC:~$ pip uninstall pandas

Found existing installation: pandas 2.0.1

Uninstalling pandas-2.0.1:

Would remove:

/home/zzh/venvs/zpy311/lib/python3.11/site-packages/pandas-2.0.1.dist-info/*

/home/zzh/venvs/zpy311/lib/python3.11/site-packages/pandas/*

Proceed (Y/n)? Y

Successfully uninstalled pandas-2.0.1

zzh@ZZHPC:~$ pip install pandas

Collecting pandas

Downloading pandas-2.2.3-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (89 kB)

Requirement already satisfied: numpy>=1.23.2 in ./venvs/zpy311/lib/python3.11/site-packages (from pandas) (2.2.2)

Requirement already satisfied: python-dateutil>=2.8.2 in ./venvs/zpy311/lib/python3.11/site-packages (from pandas) (2.9.0.post0)

Requirement already satisfied: pytz>=2020.1 in ./venvs/zpy311/lib/python3.11/site-packages (from pandas) (2025.1)

Requirement already satisfied: tzdata>=2022.7 in ./venvs/zpy311/lib/python3.11/site-packages (from pandas) (2025.1)

Requirement already satisfied: six>=1.5 in ./venvs/zpy311/lib/python3.11/site-packages (from python-dateutil>=2.8.2->pandas) (1.17.0)

Downloading pandas-2.2.3-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (13.1 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 13.1/13.1 MB 698.3 kB/s eta 0:00:00

Installing collected packages: pandas

Successfully installed pandas-2.2.3

The error is gone.
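The mismatch can be detected before anything imports pandas by reading the installed versions from package metadata. The following is a sketch that assumes pandas 2.2.2 is the first release built to run on NumPy 2.x and that GNU `sort -V` is available. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: flag the pandas/NumPy ABI mismatch without importing pandas.
# Assumption: pandas 2.2.2 is the first release built to run on NumPy 2.x.

# Succeed if version $1 >= version $2 (GNU `sort -V` orders version strings).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Print an installed distribution's version from its metadata (no import needed).
pkg_version() {
  python -c 'import sys, importlib.metadata as m; print(m.version(sys.argv[1]))' "$1"
}

check_pandas_numpy() {
  local np pd
  np=$(pkg_version numpy) || return 1
  pd=$(pkg_version pandas) || return 1
  if version_ge "$np" 2.0.0 && ! version_ge "$pd" 2.2.2; then
    echo "pandas $pd predates NumPy 2 support, but numpy $np is installed" >&2
    return 1
  fi
  echo "pandas $pd with numpy $np"
}

# Usage (inside the activated venv):
#   check_pandas_numpy
```

If pandas had to stay at 2.0.1, the alternative would be to pin NumPy below 2 (for example `pip install "numpy<2"`). Upgrading pandas, as done above, avoids holding NumPy back.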

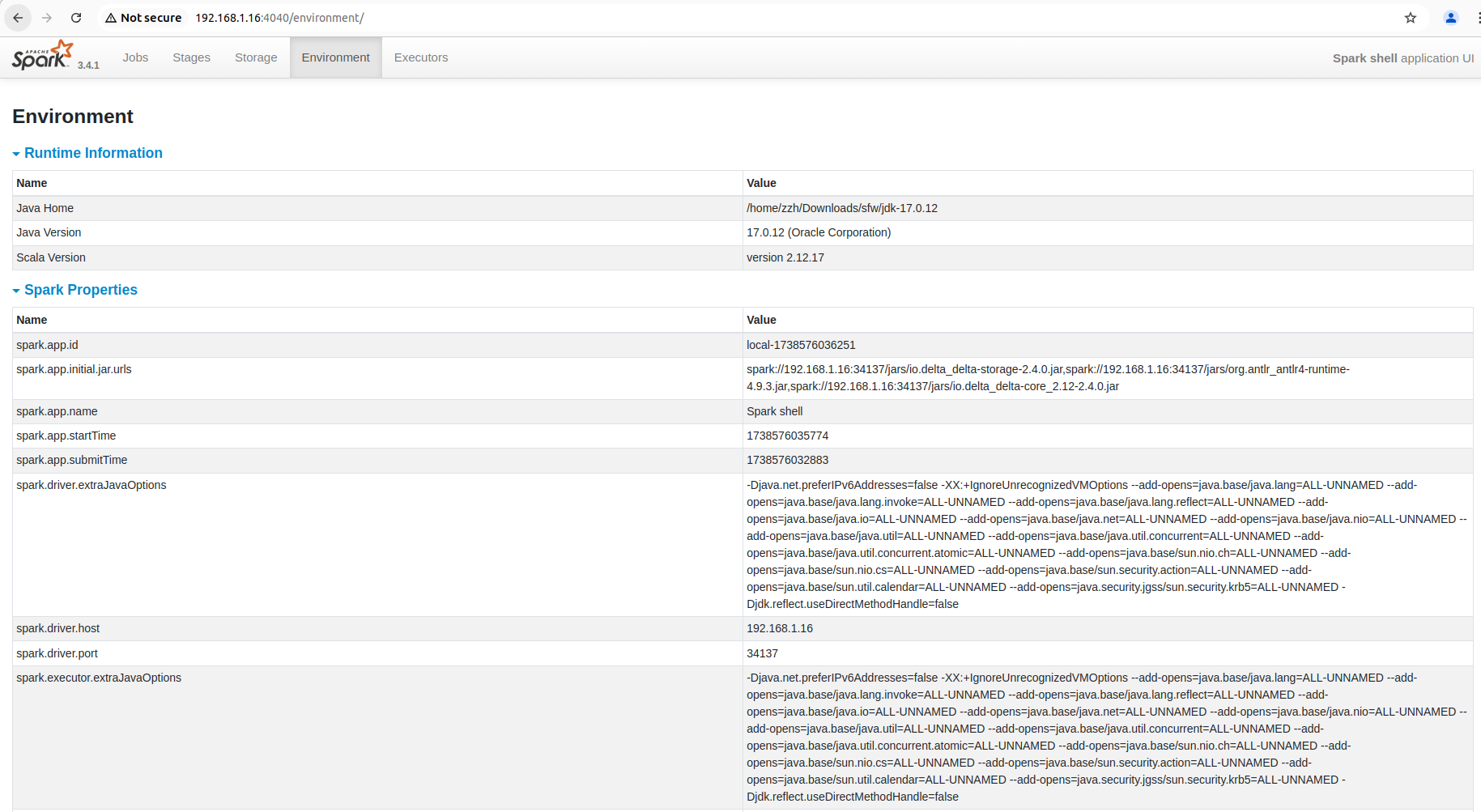

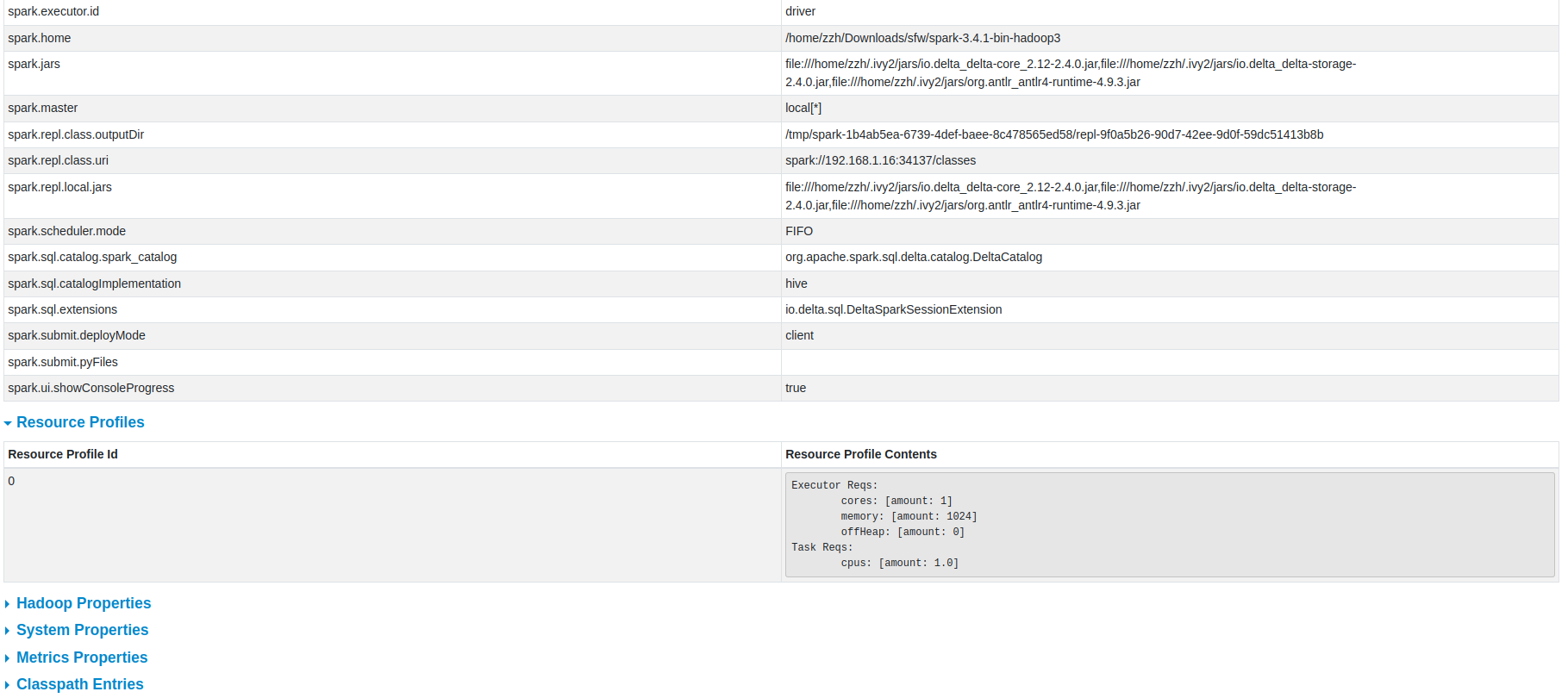

6. Try spark-shell (optional):

zzh@ZZHPC:~$ delta_package_version="delta-core_2.12:2.4.0"

zzh@ZZHPC:~$ spark-shell --packages io.delta:${delta_package_version} \

--conf "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension" \

--conf "spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog"

25/02/03 17:46:57 WARN Utils: Your hostname, ZZHPC resolves to a loopback address: 127.0.1.1; using 192.168.1.16 instead (on interface wlo1)

25/02/03 17:46:57 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

:: loading settings :: url = jar:file:/home/zzh/Downloads/sfw/spark-3.4.1-bin-hadoop3/jars/ivy-2.5.1.jar!/org/apache/ivy/core/settings/ivysettings.xml

Ivy Default Cache set to: /home/zzh/.ivy2/cache

The jars for the packages stored in: /home/zzh/.ivy2/jars

io.delta#delta-core_2.12 added as a dependency

:: resolving dependencies :: org.apache.spark#spark-submit-parent-1268c890-4724-4533-a38f-7a2b1c64aa10;1.0

confs: [default]

found io.delta#delta-core_2.12;2.4.0 in central

found io.delta#delta-storage;2.4.0 in central

found org.antlr#antlr4-runtime;4.9.3 in central

downloading https://repo1.maven.org/maven2/io/delta/delta-core_2.12/2.4.0/delta-core_2.12-2.4.0.jar ...

[SUCCESSFUL ] io.delta#delta-core_2.12;2.4.0!delta-core_2.12.jar (7595ms)

downloading https://repo1.maven.org/maven2/io/delta/delta-storage/2.4.0/delta-storage-2.4.0.jar ...

[SUCCESSFUL ] io.delta#delta-storage;2.4.0!delta-storage.jar (624ms)

downloading https://repo1.maven.org/maven2/org/antlr/antlr4-runtime/4.9.3/antlr4-runtime-4.9.3.jar ...

[SUCCESSFUL ] org.antlr#antlr4-runtime;4.9.3!antlr4-runtime.jar (742ms)

:: resolution report :: resolve 6365ms :: artifacts dl 8966ms

:: modules in use:

io.delta#delta-core_2.12;2.4.0 from central in [default]

io.delta#delta-storage;2.4.0 from central in [default]

org.antlr#antlr4-runtime;4.9.3 from central in [default]

---------------------------------------------------------------------

| | modules || artifacts |

| conf | number| search|dwnlded|evicted|| number|dwnlded|

---------------------------------------------------------------------

| default | 3 | 3 | 3 | 0 || 3 | 3 |

---------------------------------------------------------------------

:: retrieving :: org.apache.spark#spark-submit-parent-1268c890-4724-4533-a38f-7a2b1c64aa10

confs: [default]

3 artifacts copied, 0 already retrieved (4537kB/8ms)

25/02/03 17:47:12 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://192.168.1.16:4040

Spark context available as 'sc' (master = local[*], app id = local-1738576036251).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 3.4.1

/_/

Using Scala version 2.12.17 (Java HotSpot(TM) 64-Bit Server VM, Java 17.0.12)

Type in expressions to have them evaluated.

Type :help for more information.

scala>
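pyspark accepts the same --packages and --conf options as spark-shell, so the Delta settings above can be reused for a Python shell. delta-core 2.4.0 matches the delta-spark==2.4.0 pip package installed in step 5. The following is a sketch that wraps the options in a hypothetical function. Any extra arguments, such as --master, are passed through unchanged.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: start pyspark with the same Delta Lake options as the
# spark-shell command above. Extra arguments are passed through to pyspark.
delta_package_version="${delta_package_version:-delta-core_2.12:2.4.0}"

pyspark_delta() {
  pyspark --packages "io.delta:${delta_package_version}" \
    --conf "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension" \
    --conf "spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog" \
    "$@"
}

# Usage:
#   pyspark_delta                               # local[*], as in the transcripts
#   pyspark_delta --master spark://ZZHPC:7077   # on the standalone cluster
```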

zzh@ZZHPC:~$ spark_xml_package_version="spark-xml_2.12:0.16.0"

zzh@ZZHPC:~$ spark-shell --packages com.databricks:${spark_xml_package_version}

25/02/03 17:52:23 WARN Utils: Your hostname, ZZHPC resolves to a loopback address: 127.0.1.1; using 192.168.1.16 instead (on interface wlo1)

25/02/03 17:52:23 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

:: loading settings :: url = jar:file:/home/zzh/Downloads/sfw/spark-3.4.1-bin-hadoop3/jars/ivy-2.5.1.jar!/org/apache/ivy/core/settings/ivysettings.xml

Ivy Default Cache set to: /home/zzh/.ivy2/cache

The jars for the packages stored in: /home/zzh/.ivy2/jars

com.databricks#spark-xml_2.12 added as a dependency

:: resolving dependencies :: org.apache.spark#spark-submit-parent-41f9f806-d163-4743-8375-877e292e5d4e;1.0

confs: [default]

found com.databricks#spark-xml_2.12;0.16.0 in central

found commons-io#commons-io;2.11.0 in central

found org.glassfish.jaxb#txw2;3.0.2 in central

found org.apache.ws.xmlschema#xmlschema-core;2.3.0 in central

found org.scala-lang.modules#scala-collection-compat_2.12;2.9.0 in central

downloading https://repo1.maven.org/maven2/com/databricks/spark-xml_2.12/0.16.0/spark-xml_2.12-0.16.0.jar ...

[SUCCESSFUL ] com.databricks#spark-xml_2.12;0.16.0!spark-xml_2.12.jar (732ms)

downloading https://repo1.maven.org/maven2/commons-io/commons-io/2.11.0/commons-io-2.11.0.jar ...

[SUCCESSFUL ] commons-io#commons-io;2.11.0!commons-io.jar (1110ms)

downloading https://repo1.maven.org/maven2/org/glassfish/jaxb/txw2/3.0.2/txw2-3.0.2.jar ...

[SUCCESSFUL ] org.glassfish.jaxb#txw2;3.0.2!txw2.jar (592ms)

downloading https://repo1.maven.org/maven2/org/apache/ws/xmlschema/xmlschema-core/2.3.0/xmlschema-core-2.3.0.jar ...

[SUCCESSFUL ] org.apache.ws.xmlschema#xmlschema-core;2.3.0!xmlschema-core.jar(bundle) (726ms)

downloading https://repo1.maven.org/maven2/org/scala-lang/modules/scala-collection-compat_2.12/2.9.0/scala-collection-compat_2.12-2.9.0.jar ...

[SUCCESSFUL ] org.scala-lang.modules#scala-collection-compat_2.12;2.9.0!scala-collection-compat_2.12.jar (901ms)

:: resolution report :: resolve 14676ms :: artifacts dl 4064ms

:: modules in use:

com.databricks#spark-xml_2.12;0.16.0 from central in [default]

commons-io#commons-io;2.11.0 from central in [default]

org.apache.ws.xmlschema#xmlschema-core;2.3.0 from central in [default]

org.glassfish.jaxb#txw2;3.0.2 from central in [default]

org.scala-lang.modules#scala-collection-compat_2.12;2.9.0 from central in [default]

---------------------------------------------------------------------

| | modules || artifacts |

| conf | number| search|dwnlded|evicted|| number|dwnlded|

---------------------------------------------------------------------

| default | 5 | 5 | 5 | 0 || 5 | 5 |

---------------------------------------------------------------------

:: retrieving :: org.apache.spark#spark-submit-parent-41f9f806-d163-4743-8375-877e292e5d4e

confs: [default]

5 artifacts copied, 0 already retrieved (986kB/6ms)

25/02/03 17:52:42 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

25/02/03 17:52:46 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

Spark context Web UI available at http://192.168.1.16:4041

Spark context available as 'sc' (master = local[*], app id = local-1738576366109).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 3.4.1

/_/

Using Scala version 2.12.17 (Java HotSpot(TM) 64-Bit Server VM, Java 17.0.12)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

zzh@ZZHPC:~$ spark-shell --packages org.apache.spark:spark-sql-kafka-0-10_2.12:3.4.1

25/02/03 17:54:37 WARN Utils: Your hostname, ZZHPC resolves to a loopback address: 127.0.1.1; using 192.168.1.16 instead (on interface wlo1)

25/02/03 17:54:37 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

:: loading settings :: url = jar:file:/home/zzh/Downloads/sfw/spark-3.4.1-bin-hadoop3/jars/ivy-2.5.1.jar!/org/apache/ivy/core/settings/ivysettings.xml

Ivy Default Cache set to: /home/zzh/.ivy2/cache

The jars for the packages stored in: /home/zzh/.ivy2/jars

org.apache.spark#spark-sql-kafka-0-10_2.12 added as a dependency

:: resolving dependencies :: org.apache.spark#spark-submit-parent-15c91cf7-abef-4798-b5a5-3fc13e65227b;1.0

confs: [default]

found org.apache.spark#spark-sql-kafka-0-10_2.12;3.4.1 in central

found org.apache.spark#spark-token-provider-kafka-0-10_2.12;3.4.1 in central

found org.apache.kafka#kafka-clients;3.3.2 in central

found org.lz4#lz4-java;1.8.0 in central

found org.xerial.snappy#snappy-java;1.1.10.1 in central

found org.slf4j#slf4j-api;2.0.6 in central

found org.apache.hadoop#hadoop-client-runtime;3.3.4 in central

found org.apache.hadoop#hadoop-client-api;3.3.4 in central

found commons-logging#commons-logging;1.1.3 in central

found com.google.code.findbugs#jsr305;3.0.0 in central

found org.apache.commons#commons-pool2;2.11.1 in central

downloading https://repo1.maven.org/maven2/org/apache/spark/spark-sql-kafka-0-10_2.12/3.4.1/spark-sql-kafka-0-10_2.12-3.4.1.jar ...

[SUCCESSFUL ] org.apache.spark#spark-sql-kafka-0-10_2.12;3.4.1!spark-sql-kafka-0-10_2.12.jar (1199ms)

downloading https://repo1.maven.org/maven2/org/apache/spark/spark-token-provider-kafka-0-10_2.12/3.4.1/spark-token-provider-kafka-0-10_2.12-3.4.1.jar ...

[SUCCESSFUL ] org.apache.spark#spark-token-provider-kafka-0-10_2.12;3.4.1!spark-token-provider-kafka-0-10_2.12.jar (774ms)

downloading https://repo1.maven.org/maven2/org/apache/kafka/kafka-clients/3.3.2/kafka-clients-3.3.2.jar ...

[SUCCESSFUL ] org.apache.kafka#kafka-clients;3.3.2!kafka-clients.jar (5153ms)

downloading https://repo1.maven.org/maven2/com/google/code/findbugs/jsr305/3.0.0/jsr305-3.0.0.jar ...

[SUCCESSFUL ] com.google.code.findbugs#jsr305;3.0.0!jsr305.jar (547ms)

downloading https://repo1.maven.org/maven2/org/apache/commons/commons-pool2/2.11.1/commons-pool2-2.11.1.jar ...

[SUCCESSFUL ] org.apache.commons#commons-pool2;2.11.1!commons-pool2.jar (708ms)

downloading https://repo1.maven.org/maven2/org/apache/hadoop/hadoop-client-runtime/3.3.4/hadoop-client-runtime-3.3.4.jar ...

[SUCCESSFUL ] org.apache.hadoop#hadoop-client-runtime;3.3.4!hadoop-client-runtime.jar (15369ms)

downloading https://repo1.maven.org/maven2/org/lz4/lz4-java/1.8.0/lz4-java-1.8.0.jar ...

[SUCCESSFUL ] org.lz4#lz4-java;1.8.0!lz4-java.jar (898ms)

downloading https://repo1.maven.org/maven2/org/xerial/snappy/snappy-java/1.1.10.1/snappy-java-1.1.10.1.jar ...

[SUCCESSFUL ] org.xerial.snappy#snappy-java;1.1.10.1!snappy-java.jar(bundle) (1353ms)

downloading https://repo1.maven.org/maven2/org/slf4j/slf4j-api/2.0.6/slf4j-api-2.0.6.jar ...

[SUCCESSFUL ] org.slf4j#slf4j-api;2.0.6!slf4j-api.jar (551ms)

downloading https://repo1.maven.org/maven2/org/apache/hadoop/hadoop-client-api/3.3.4/hadoop-client-api-3.3.4.jar ...

[SUCCESSFUL ] org.apache.hadoop#hadoop-client-api;3.3.4!hadoop-client-api.jar (9740ms)

downloading https://repo1.maven.org/maven2/commons-logging/commons-logging/1.1.3/commons-logging-1.1.3.jar ...

[SUCCESSFUL ] commons-logging#commons-logging;1.1.3!commons-logging.jar (504ms)

:: resolution report :: resolve 20068ms :: artifacts dl 36802ms

:: modules in use:

com.google.code.findbugs#jsr305;3.0.0 from central in [default]

commons-logging#commons-logging;1.1.3 from central in [default]

org.apache.commons#commons-pool2;2.11.1 from central in [default]

org.apache.hadoop#hadoop-client-api;3.3.4 from central in [default]

org.apache.hadoop#hadoop-client-runtime;3.3.4 from central in [default]

org.apache.kafka#kafka-clients;3.3.2 from central in [default]

org.apache.spark#spark-sql-kafka-0-10_2.12;3.4.1 from central in [default]

org.apache.spark#spark-token-provider-kafka-0-10_2.12;3.4.1 from central in [default]

org.lz4#lz4-java;1.8.0 from central in [default]

org.slf4j#slf4j-api;2.0.6 from central in [default]

org.xerial.snappy#snappy-java;1.1.10.1 from central in [default]

---------------------------------------------------------------------

| | modules || artifacts |

| conf | number| search|dwnlded|evicted|| number|dwnlded|

---------------------------------------------------------------------

| default | 11 | 11 | 11 | 0 || 11 | 11 |

---------------------------------------------------------------------

:: retrieving :: org.apache.spark#spark-submit-parent-15c91cf7-abef-4798-b5a5-3fc13e65227b

confs: [default]

11 artifacts copied, 0 already retrieved (56445kB/48ms)

25/02/03 17:55:34 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

25/02/03 17:55:37 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

25/02/03 17:55:37 WARN Utils: Service 'SparkUI' could not bind on port 4041. Attempting port 4042.

Spark context Web UI available at http://192.168.1.16:4042

Spark context available as 'sc' (master = local[*], app id = local-1738576537573).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 3.4.1

/_/

Using Scala version 2.12.17 (Java HotSpot(TM) 64-Bit Server VM, Java 17.0.12)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

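After exiting these spark-shell sessions, the Web UI URLs above became unavailable. Each UI is served by its own running application and stops when that shell exits. While the earlier shells were still open, the later ones could not bind port 4040, so they fell back to ports 4041 and 4042.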

Load multiple packages at once:

zzh@ZZHPC:~$ delta_package_version="delta-core_2.12:2.4.0"

zzh@ZZHPC:~$ spark_xml_package_version="spark-xml_2.12:0.16.0"

zzh@ZZHPC:~$ spark-shell --packages io.delta:${delta_package_version},com.databricks:${spark_xml_package_version},org.apache.spark:spark-sql-kafka-0-10_2.12:3.4.1 \

--conf "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension" \

--conf "spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog"

25/02/03 18:35:30 WARN Utils: Your hostname, ZZHPC resolves to a loopback address: 127.0.1.1; using 192.168.1.16 instead (on interface wlo1)

25/02/03 18:35:30 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

:: loading settings :: url = jar:file:/home/zzh/Downloads/sfw/spark-3.4.1-bin-hadoop3/jars/ivy-2.5.1.jar!/org/apache/ivy/core/settings/ivysettings.xml

Ivy Default Cache set to: /home/zzh/.ivy2/cache

The jars for the packages stored in: /home/zzh/.ivy2/jars

io.delta#delta-core_2.12 added as a dependency

com.databricks#spark-xml_2.12 added as a dependency

org.apache.spark#spark-sql-kafka-0-10_2.12 added as a dependency

:: resolving dependencies :: org.apache.spark#spark-submit-parent-0cd3c8ec-497c-4202-8686-2f7496ddc31a;1.0

confs: [default]

found io.delta#delta-core_2.12;2.4.0 in central

found io.delta#delta-storage;2.4.0 in central

found org.antlr#antlr4-runtime;4.9.3 in central

found com.databricks#spark-xml_2.12;0.16.0 in central

found commons-io#commons-io;2.11.0 in central

found org.glassfish.jaxb#txw2;3.0.2 in central

found org.apache.ws.xmlschema#xmlschema-core;2.3.0 in central

found org.scala-lang.modules#scala-collection-compat_2.12;2.9.0 in central

found org.apache.spark#spark-sql-kafka-0-10_2.12;3.4.1 in central

found org.apache.spark#spark-token-provider-kafka-0-10_2.12;3.4.1 in central

found org.apache.kafka#kafka-clients;3.3.2 in central

found org.lz4#lz4-java;1.8.0 in central

found org.xerial.snappy#snappy-java;1.1.10.1 in central

found org.slf4j#slf4j-api;2.0.6 in central

found org.apache.hadoop#hadoop-client-runtime;3.3.4 in central

found org.apache.hadoop#hadoop-client-api;3.3.4 in central

found commons-logging#commons-logging;1.1.3 in central

found com.google.code.findbugs#jsr305;3.0.0 in central

found org.apache.commons#commons-pool2;2.11.1 in central

:: resolution report :: resolve 411ms :: artifacts dl 11ms

:: modules in use:

com.databricks#spark-xml_2.12;0.16.0 from central in [default]

com.google.code.findbugs#jsr305;3.0.0 from central in [default]

commons-io#commons-io;2.11.0 from central in [default]

commons-logging#commons-logging;1.1.3 from central in [default]

io.delta#delta-core_2.12;2.4.0 from central in [default]

io.delta#delta-storage;2.4.0 from central in [default]

org.antlr#antlr4-runtime;4.9.3 from central in [default]

org.apache.commons#commons-pool2;2.11.1 from central in [default]

org.apache.hadoop#hadoop-client-api;3.3.4 from central in [default]

org.apache.hadoop#hadoop-client-runtime;3.3.4 from central in [default]

org.apache.kafka#kafka-clients;3.3.2 from central in [default]

org.apache.spark#spark-sql-kafka-0-10_2.12;3.4.1 from central in [default]

org.apache.spark#spark-token-provider-kafka-0-10_2.12;3.4.1 from central in [default]

org.apache.ws.xmlschema#xmlschema-core;2.3.0 from central in [default]

org.glassfish.jaxb#txw2;3.0.2 from central in [default]

org.lz4#lz4-java;1.8.0 from central in [default]

org.scala-lang.modules#scala-collection-compat_2.12;2.9.0 from central in [default]

org.slf4j#slf4j-api;2.0.6 from central in [default]

org.xerial.snappy#snappy-java;1.1.10.1 from central in [default]

---------------------------------------------------------------------

| | modules || artifacts |

| conf | number| search|dwnlded|evicted|| number|dwnlded|

---------------------------------------------------------------------

| default | 19 | 0 | 0 | 0 || 19 | 0 |

---------------------------------------------------------------------

:: retrieving :: org.apache.spark#spark-submit-parent-0cd3c8ec-497c-4202-8686-2f7496ddc31a

confs: [default]

0 artifacts copied, 19 already retrieved (0kB/7ms)

25/02/03 18:35:30 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://192.168.1.16:4040

Spark context available as 'sc' (master = local[*], app id = local-1738578934459).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 3.4.1

/_/

Using Scala version 2.12.17 (Java HotSpot(TM) 64-Bit Server VM, Java 17.0.12)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

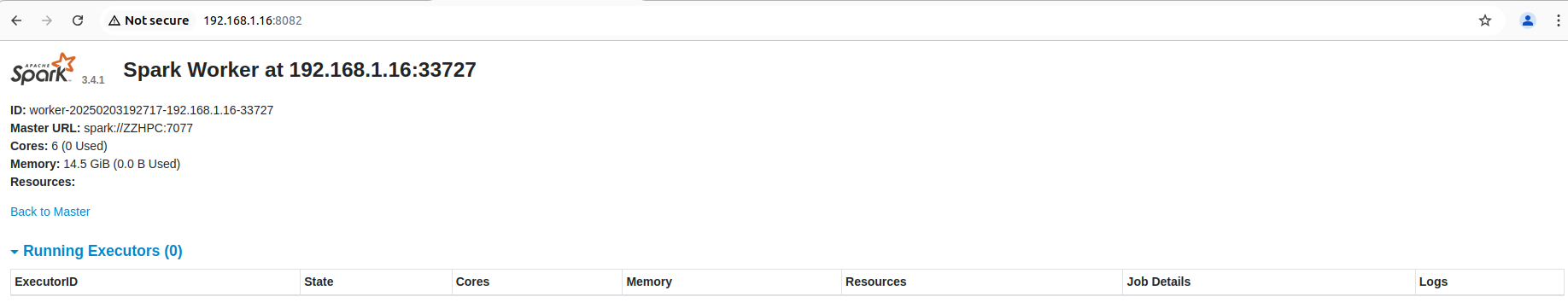
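--packages takes a single comma-separated list of Maven coordinates (group:artifact:version). The _2.12 suffix has to match the Scala version that Spark reports (2.12.17 in the banner). This run resolved all 19 modules from the local Ivy cache (0 artifacts copied, 19 already retrieved), so relaunching is fast. The following is a sketch of a hypothetical helper that builds that list.

```shell
#!/usr/bin/env bash
# Join Maven coordinates (group:artifact:version) with commas, the format
# that --packages expects. The helper name is hypothetical.
join_packages() {
  local IFS=,
  printf '%s\n' "$*"
}

# Usage, reproducing the three packages loaded above:
#   packages=$(join_packages \
#     io.delta:delta-core_2.12:2.4.0 \
#     com.databricks:spark-xml_2.12:0.16.0 \
#     org.apache.spark:spark-sql-kafka-0-10_2.12:3.4.1)
#   spark-shell --packages "$packages" \
#     --conf "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension" \
#     --conf "spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog"
```

To load the same packages in every shell without repeating the flag, the list can instead go into the spark.jars.packages property in $SPARK_HOME/conf/spark-defaults.conf.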

7. Run spark-class to register another worker:

zzh@ZZHPC:~$ spark-class org.apache.spark.deploy.worker.Worker spark://ZZHPC:7077 1>/home/zzh/Downloads/sfw/spark-3.4.1-bin-hadoop3/logs/spark-zzh-org.apache.spark.deploy.worker.Worker-2-ZZHPC.out 2>&1 &

[1] 159296

zzh@ZZHPC:~$ cat /home/zzh/Downloads/sfw/spark-3.4.1-bin-hadoop3/logs/spark-zzh-org.apache.spark.deploy.worker.Worker-2-ZZHPC.out

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

25/02/03 19:27:06 INFO Worker: Started daemon with process name: 159296@ZZHPC

25/02/03 19:27:06 INFO SignalUtils: Registering signal handler for TERM

25/02/03 19:27:06 INFO SignalUtils: Registering signal handler for HUP

25/02/03 19:27:06 INFO SignalUtils: Registering signal handler for INT

25/02/03 19:27:06 WARN Utils: Your hostname, ZZHPC resolves to a loopback address: 127.0.1.1; using 192.168.1.16 instead (on interface wlo1)

25/02/03 19:27:06 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

25/02/03 19:27:17 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

25/02/03 19:27:17 INFO SecurityManager: Changing view acls to: zzh

25/02/03 19:27:17 INFO SecurityManager: Changing modify acls to: zzh

25/02/03 19:27:17 INFO SecurityManager: Changing view acls groups to:

25/02/03 19:27:17 INFO SecurityManager: Changing modify acls groups to:

25/02/03 19:27:17 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: zzh; groups with view permissions: EMPTY; users with modify permissions: zzh; groups with modify permissions: EMPTY

25/02/03 19:27:17 INFO Utils: Successfully started service 'sparkWorker' on port 33727.

25/02/03 19:27:17 INFO Worker: Worker decommissioning not enabled.

25/02/03 19:27:17 INFO Worker: Starting Spark worker 192.168.1.16:33727 with 6 cores, 14.5 GiB RAM

25/02/03 19:27:17 INFO Worker: Running Spark version 3.4.1

25/02/03 19:27:17 INFO Worker: Spark home: /home/zzh/Downloads/sfw/spark-3.4.1-bin-hadoop3

25/02/03 19:27:17 INFO ResourceUtils: ==============================================================

25/02/03 19:27:17 INFO ResourceUtils: No custom resources configured for spark.worker.

25/02/03 19:27:17 INFO ResourceUtils: ==============================================================

25/02/03 19:27:17 INFO JettyUtils: Start Jetty 0.0.0.0:8081 for WorkerUI

25/02/03 19:27:17 WARN Utils: Service 'WorkerUI' could not bind on port 8081. Attempting port 8082.

25/02/03 19:27:17 INFO Utils: Successfully started service 'WorkerUI' on port 8082.

25/02/03 19:27:17 INFO WorkerWebUI: Bound WorkerWebUI to 0.0.0.0, and started at http://192.168.1.16:8082

25/02/03 19:27:17 INFO Worker: Connecting to master ZZHPC:7077...

25/02/03 19:27:17 INFO TransportClientFactory: Successfully created connection to ZZHPC/127.0.1.1:7077 after 26 ms (0 ms spent in bootstraps)

25/02/03 19:27:17 INFO Worker: Successfully registered with master spark://ZZHPC:7077

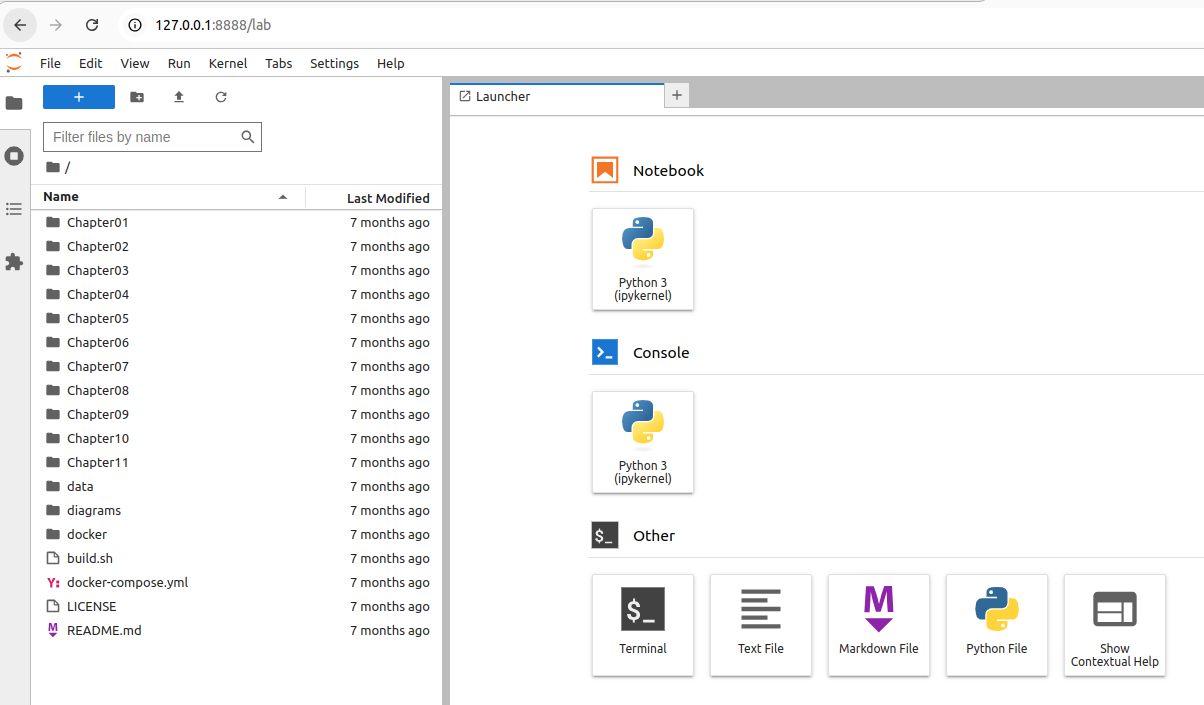
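The worker from step 4 already held web UI port 8081, which is why this one moved to 8082. The log file name follows the pattern Spark's sbin scripts use, with this worker as instance 2. The log also shows that each worker offers every core and all the memory it detects (6 cores, 14.5 GiB), so two workers on one machine advertise twice its real capacity. The following is a sketch of starting an extra worker with an explicit cap. It assumes the Worker's --cores and --memory options, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: start an additional standalone worker as in step 7, with a resource cap.
# Function names are hypothetical.

# Log path following the sbin scripts' pattern:
#   $SPARK_HOME/logs/spark-<user>-org.apache.spark.deploy.worker.Worker-<n>-<host>.out
worker_log() {
  printf '%s/logs/spark-%s-org.apache.spark.deploy.worker.Worker-%s-%s.out\n' \
    "$SPARK_HOME" "${USER:-$(id -un)}" "$1" "${2:-$(hostname)}"
}

# $1 = instance number, $2 = master URL; remaining args go to the Worker
# (for example --cores 2 --memory 4g).
start_extra_worker() {
  local n=$1 master=$2 log
  shift 2
  log=$(worker_log "$n")
  nohup spark-class org.apache.spark.deploy.worker.Worker "$@" "$master" >"$log" 2>&1 &
  echo "worker $n started as PID $!, logging to $log"
}

# Usage:
#   start_extra_worker 3 spark://ZZHPC:7077 --cores 2 --memory 4g
```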

8. Start jupyter-lab:

(zpy311) zzh@ZZHPC:~/zd/Github/Data-Engineering-with-Databricks-Cookbook-main$ jupyter-lab

[I 2025-02-03 19:23:32.547 ServerApp] jupyter_lsp | extension was successfully linked.

[I 2025-02-03 19:23:32.550 ServerApp] jupyter_server_terminals | extension was successfully linked.

[I 2025-02-03 19:23:32.554 ServerApp] jupyterlab | extension was successfully linked.

[I 2025-02-03 19:23:32.726 ServerApp] notebook_shim | extension was successfully linked.

[I 2025-02-03 19:23:32.739 ServerApp] notebook_shim | extension was successfully loaded.

[I 2025-02-03 19:23:32.741 ServerApp] jupyter_lsp | extension was successfully loaded.

[I 2025-02-03 19:23:32.742 ServerApp] jupyter_server_terminals | extension was successfully loaded.

[I 2025-02-03 19:23:32.743 LabApp] JupyterLab extension loaded from /home/zzh/venvs/zpy311/lib/python3.11/site-packages/jupyterlab

[I 2025-02-03 19:23:32.743 LabApp] JupyterLab application directory is /home/zzh/venvs/zpy311/share/jupyter/lab

[I 2025-02-03 19:23:32.743 LabApp] Extension Manager is 'pypi'.

[I 2025-02-03 19:23:32.746 ServerApp] jupyterlab | extension was successfully loaded.

[I 2025-02-03 19:23:32.747 ServerApp] Serving notebooks from local directory: /zdata/Github/Data-Engineering-with-Databricks-Cookbook-main

[I 2025-02-03 19:23:32.747 ServerApp] Jupyter Server 2.15.0 is running at:

[I 2025-02-03 19:23:32.747 ServerApp] http://localhost:8888/lab?token=34c55f9bc2c8add7b1ef631da5cedbaa07bbc220a08c7661

[I 2025-02-03 19:23:32.747 ServerApp] http://127.0.0.1:8888/lab?token=34c55f9bc2c8add7b1ef631da5cedbaa07bbc220a08c7661

[I 2025-02-03 19:23:32.747 ServerApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).
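When JupyterLab is started this way, each notebook has to build its own SparkSession. An alternative, which these notes do not use, is to let pyspark launch JupyterLab as its driver front end through the PYSPARK_DRIVER_PYTHON and PYSPARK_DRIVER_PYTHON_OPTS environment variables. That way, options such as --master and --packages are given once on the command line and should reach the sessions the notebooks create. The following is a sketch that assumes the standalone master from step 4. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run JupyterLab as the PySpark driver front end.
# $1 = master URL (default: a standalone master on this host, port 7077);
# remaining arguments go to pyspark (e.g. --packages ...).
pyspark_lab() {
  local master="${1:-spark://$(hostname):7077}"
  if [ "$#" -gt 0 ]; then shift; fi
  PYSPARK_DRIVER_PYTHON=jupyter PYSPARK_DRIVER_PYTHON_OPTS=lab \
    pyspark --master "$master" "$@"
}

# Usage, from the notebook directory:
#   pyspark_lab spark://ZZHPC:7077
# then, in a notebook cell:
#   from pyspark.sql import SparkSession
#   spark = SparkSession.builder.getOrCreate()
```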

9. Download Kafka 3.5.1 from https://kafka.apache.org/downloads and update .bashrc so that the Spark lines also put Kafka's bin directory on PATH:

export SPARK_HOME=$sfw/spark-3.4.1-bin-hadoop3

kafka_home=$sfw/kafka_2.13-3.5.1

PATH=$PATH:$SPARK_HOME/sbin:$SPARK_HOME/bin:$kafka_home/bin
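The _2.13 in kafka_2.13-3.5.1 is the Scala version the Kafka broker was built with. It is independent of the _2.12 suffix on the Spark Kafka connector, which must match Spark's own Scala version. The following is a sketch of the download step. It assumes the archive.apache.org URL layout and the $sfw directory from .bashrc, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: fetch a Kafka binary release into $sfw. The archive.apache.org
# URL layout is an assumption.
sfw="${sfw:-$HOME/Downloads/sfw}"

# $1 = Kafka version, $2 = Scala version of the build (default 2.13).
kafka_tgz_url() {
  printf 'https://archive.apache.org/dist/kafka/%s/kafka_%s-%s.tgz\n' "$1" "${2:-2.13}" "$1"
}

# Download and unpack, creating $sfw/kafka_<scala>-<version>.
install_kafka() {
  mkdir -p "$sfw"
  curl -fL "$(kafka_tgz_url "$1" "$2")" | tar -xz -C "$sfw"
}

# Usage:
#   install_kafka 3.5.1
```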

10. Start ZooKeeper. In ZooKeeper mode, Kafka uses it to manage cluster metadata such as broker registration and leader election. ZooKeeper is being replaced by KRaft.
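ZooKeeper listens on port 2181 (the clientPortAddress in its log), and a Kafka broker started before that port is open can fail to connect. The following is a minimal sketch for waiting on the port. It assumes bash, because it relies on bash's /dev/tcp redirection, and the function name is hypothetical. The -daemon start shown in the usage comments is an alternative to the foreground run in these notes. kafka-server-start.sh with config/server.properties is the usual next step in Kafka's quickstart, but it is not shown here.

```shell
#!/usr/bin/env bash
# Wait until a TCP port accepts connections, polling once per second.
# $1 = host, $2 = port, $3 = number of attempts (default 30).
wait_for_port() {
  local host=$1 port=$2 tries=${3:-30} i=0
  while [ "$i" -lt "$tries" ]; do
    # Opening /dev/tcp/HOST/PORT in a subshell attempts a TCP connect (bash only).
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Usage:
#   zookeeper-server-start.sh -daemon "$kafka_home/config/zookeeper.properties"
#   wait_for_port localhost 2181 && \
#     kafka-server-start.sh -daemon "$kafka_home/config/server.properties"
```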

zzh@ZZHPC:~$ zookeeper-server-start.sh $kafka_home/config/zookeeper.properties

[2025-02-05 19:10:11,213] INFO Reading configuration from: /home/zzh/Downloads/sfw/kafka_2.13-3.5.1/config/zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,216] INFO clientPortAddress is 0.0.0.0:2181 (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,216] INFO secureClientPort is not set (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,216] INFO observerMasterPort is not set (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,216] INFO metricsProvider.className is org.apache.zookeeper.metrics.impl.DefaultMetricsProvider (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,217] INFO autopurge.snapRetainCount set to 3 (org.apache.zookeeper.server.DatadirCleanupManager)

[2025-02-05 19:10:11,218] INFO autopurge.purgeInterval set to 0 (org.apache.zookeeper.server.DatadirCleanupManager)

[2025-02-05 19:10:11,218] INFO Purge task is not scheduled. (org.apache.zookeeper.server.DatadirCleanupManager)

[2025-02-05 19:10:11,218] WARN Either no config or no quorum defined in config, running in standalone mode (org.apache.zookeeper.server.quorum.QuorumPeerMain)

[2025-02-05 19:10:11,219] INFO Log4j 1.2 jmx support not found; jmx disabled. (org.apache.zookeeper.jmx.ManagedUtil)

[2025-02-05 19:10:11,219] INFO Reading configuration from: /home/zzh/Downloads/sfw/kafka_2.13-3.5.1/config/zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,219] INFO clientPortAddress is 0.0.0.0:2181 (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,219] INFO secureClientPort is not set (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,219] INFO observerMasterPort is not set (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,219] INFO metricsProvider.className is org.apache.zookeeper.metrics.impl.DefaultMetricsProvider (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-05 19:10:11,220] INFO Starting server (org.apache.zookeeper.server.ZooKeeperServerMain)

[2025-02-05 19:10:11,229] INFO ServerMetrics initialized with provider org.apache.zookeeper.metrics.impl.DefaultMetricsProvider@3ec300f1 (org.apache.zookeeper.server.ServerMetrics)

[2025-02-05 19:10:11,232] INFO zookeeper.snapshot.trust.empty : false (org.apache.zookeeper.server.persistence.FileTxnSnapLog)

[2025-02-05 19:10:11,239] INFO (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,239] INFO ______ _ (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,239] INFO |___ / | | (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,239] INFO / / ___ ___ | | __ ___ ___ _ __ ___ _ __ (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,239] INFO / / / _ \ / _ \ | |/ / / _ \ / _ \ | '_ \ / _ \ | '__| (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,239] INFO / /__ | (_) | | (_) | | < | __/ | __/ | |_) | | __/ | | (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,239] INFO /_____| \___/ \___/ |_|\_\ \___| \___| | .__/ \___| |_| (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,240] INFO | | (org.apache.zookeeper.server.ZooKeeperServer)
[2025-02-05 19:10:11,240] INFO |_| (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,240] INFO (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,241] INFO Server environment:zookeeper.version=3.6.4--d65253dcf68e9097c6e95a126463fd5fdeb4521c, built on 12/18/2022 18:10 GMT (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,241] INFO Server environment:host.name=ZZHPC (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,241] INFO Server environment:java.version=17.0.12 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,241] INFO Server environment:java.vendor=Oracle Corporation (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,241] INFO Server environment:java.home=/home/zzh/Downloads/sfw/jdk-17.0.12 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-05 19:10:11,241] INFO Server environment:java.class.path=/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/activation-1.1.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/aopalliance-repackaged-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/argparse4j-0.7.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/audience-annotations-0.13.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/commons-cli-1.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/commons-lang3-3.8.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-api-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-basic-auth-extension-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-json-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-mirror-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-mirror-client-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-runtime-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-transforms-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/hk2-api-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/hk2-locator-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/hk2-utils-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-annotations-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-core-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-databind-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-dataformat-csv-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-datatype-jdk8-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-jaxrs-base-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-jaxrs-json-provider-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-module-jaxb-annotations-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-module-scala_2.13-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.activation-api-1.2.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.annotation-api-1.3.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.inject-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.validation-api-2.0.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.ws.rs-api-2.1.6.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.xml.bind-api-2.3.3.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javassist-3.29.2-GA.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javax.activation-api-1.2.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javax.annotation-api-1.3.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javax.servlet-api-3.1.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javax.ws.rs-api-2.1.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jaxb-api-2.3.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-client-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-common-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-container-servlet-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-container-servlet-core-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-hk2-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-server-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-client-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-continuation-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-http-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-io-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-security-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-server-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-servlet-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-servlets-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-util-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-util-ajax-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jline-3.22.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jopt-simple-5.0.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jose4j-0.9.3.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka_2.13-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-clients-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-group-coordinator-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-log4j-appender-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-metadata-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-raft-3.5.1.jar:/home/zzh/Downloads
/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-server-common-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-shell-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-storage-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-storage-api-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-streams-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-streams-examples-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-streams-scala_2.13-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-streams-test-utils-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-tools-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-tools-api-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/lz4-java-1.8.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/maven-artifact-3.8.8.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/metrics-core-2.2.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/metrics-core-4.1.12.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-buffer-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-codec-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-common-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-handler-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-resolver-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-transport-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-transport-classes-epoll-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-transport-native-epoll-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-transport-native-unix-common-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/osgi-resource-locator-1.0.3.jar:/home/zzh/Dow
nloads/sfw/kafka_2.13-3.5.1/bin/../libs/paranamer-2.8.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/plexus-utils-3.3.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/reflections-0.9.12.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/reload4j-1.2.25.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/rocksdbjni-7.1.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-collection-compat_2.13-2.10.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-java8-compat_2.13-1.0.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-library-2.13.10.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-logging_2.13-3.9.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-reflect-2.13.10.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/slf4j-api-1.7.36.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/slf4j-reload4j-1.7.36.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/snappy-java-1.1.10.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/swagger-annotations-2.2.8.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/trogdor-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/zookeeper-3.6.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/zookeeper-jute-3.6.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/zstd-jni-1.5.5-1.jar (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:java.library.path=/usr/java/packages/lib:/usr/lib64:/lib64:/lib:/usr/lib (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:java.io.tmpdir=/tmp (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:java.compiler=<NA> (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:os.name=Linux (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server 
environment:os.arch=amd64 (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:os.version=6.8.0-52-generic (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:user.name=zzh (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:user.home=/home/zzh (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:user.dir=/home/zzh (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:os.memory.free=495MB (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:os.memory.max=512MB (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO Server environment:os.memory.total=512MB (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO zookeeper.enableEagerACLCheck = false (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO zookeeper.digest.enabled = true (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO zookeeper.closeSessionTxn.enabled = true (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,242] INFO zookeeper.flushDelay=0 (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,243] INFO zookeeper.maxWriteQueuePollTime=0 (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,243] INFO zookeeper.maxBatchSize=1000 (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,243] INFO zookeeper.intBufferStartingSizeBytes = 1024 (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,245] INFO Weighed connection throttling is disabled (org.apache.zookeeper.server.BlueThrottle) [2025-02-05 19:10:11,246] INFO minSessionTimeout set to 6000 (org.apache.zookeeper.server.ZooKeeperServer) [2025-02-05 19:10:11,246] INFO maxSessionTimeout set to 60000 (org.apache.zookeeper.server.ZooKeeperServer) 
[2025-02-05 19:10:11,247] INFO Response cache size is initialized with value 400. (org.apache.zookeeper.server.ResponseCache)
[2025-02-05 19:10:11,247] INFO Response cache size is initialized with value 400. (org.apache.zookeeper.server.ResponseCache)
[2025-02-05 19:10:11,247] INFO zookeeper.pathStats.slotCapacity = 60 (org.apache.zookeeper.server.util.RequestPathMetricsCollector)
[2025-02-05 19:10:11,247] INFO zookeeper.pathStats.slotDuration = 15 (org.apache.zookeeper.server.util.RequestPathMetricsCollector)
[2025-02-05 19:10:11,247] INFO zookeeper.pathStats.maxDepth = 6 (org.apache.zookeeper.server.util.RequestPathMetricsCollector)
[2025-02-05 19:10:11,247] INFO zookeeper.pathStats.initialDelay = 5 (org.apache.zookeeper.server.util.RequestPathMetricsCollector)
[2025-02-05 19:10:11,247] INFO zookeeper.pathStats.delay = 5 (org.apache.zookeeper.server.util.RequestPathMetricsCollector)
[2025-02-05 19:10:11,248] INFO zookeeper.pathStats.enabled = false (org.apache.zookeeper.server.util.RequestPathMetricsCollector)
[2025-02-05 19:10:11,249] INFO The max bytes for all large requests are set to 104857600 (org.apache.zookeeper.server.ZooKeeperServer)
[2025-02-05 19:10:11,249] INFO The large request threshold is set to -1 (org.apache.zookeeper.server.ZooKeeperServer)
[2025-02-05 19:10:11,249] INFO Created server with tickTime 3000 minSessionTimeout 6000 maxSessionTimeout 60000 clientPortListenBacklog -1 datadir /tmp/zookeeper/version-2 snapdir /tmp/zookeeper/version-2 (org.apache.zookeeper.server.ZooKeeperServer)
[2025-02-05 19:10:11,256] INFO Using org.apache.zookeeper.server.NIOServerCnxnFactory as server connection factory (org.apache.zookeeper.server.ServerCnxnFactory)
[2025-02-05 19:10:11,257] WARN maxCnxns is not configured, using default value 0. (org.apache.zookeeper.server.ServerCnxnFactory)
[2025-02-05 19:10:11,258] INFO Configuring NIO connection handler with 10s sessionless connection timeout, 1 selector thread(s), 12 worker threads, and 64 kB direct buffers. (org.apache.zookeeper.server.NIOServerCnxnFactory)
[2025-02-05 19:10:11,261] INFO binding to port 0.0.0.0/0.0.0.0:2181 (org.apache.zookeeper.server.NIOServerCnxnFactory)
[2025-02-05 19:10:11,271] INFO Using org.apache.zookeeper.server.watch.WatchManager as watch manager (org.apache.zookeeper.server.watch.WatchManagerFactory)
[2025-02-05 19:10:11,271] INFO Using org.apache.zookeeper.server.watch.WatchManager as watch manager (org.apache.zookeeper.server.watch.WatchManagerFactory)
[2025-02-05 19:10:11,272] INFO zookeeper.snapshotSizeFactor = 0.33 (org.apache.zookeeper.server.ZKDatabase)
[2025-02-05 19:10:11,272] INFO zookeeper.commitLogCount=500 (org.apache.zookeeper.server.ZKDatabase)
[2025-02-05 19:10:11,280] INFO zookeeper.snapshot.compression.method = CHECKED (org.apache.zookeeper.server.persistence.SnapStream)
[2025-02-05 19:10:11,280] INFO Snapshotting: 0x0 to /tmp/zookeeper/version-2/snapshot.0 (org.apache.zookeeper.server.persistence.FileTxnSnapLog)
[2025-02-05 19:10:11,282] INFO Snapshot loaded in 10 ms, highest zxid is 0x0, digest is 1371985504 (org.apache.zookeeper.server.ZKDatabase)
[2025-02-05 19:10:11,283] INFO Snapshotting: 0x0 to /tmp/zookeeper/version-2/snapshot.0 (org.apache.zookeeper.server.persistence.FileTxnSnapLog)
[2025-02-05 19:10:11,283] INFO Snapshot taken in 0 ms (org.apache.zookeeper.server.ZooKeeperServer)
[2025-02-05 19:10:11,290] INFO PrepRequestProcessor (sid:0) started, reconfigEnabled=false (org.apache.zookeeper.server.PrepRequestProcessor)
[2025-02-05 19:10:11,290] INFO zookeeper.request_throttler.shutdownTimeout = 10000 (org.apache.zookeeper.server.RequestThrottler)
[2025-02-05 19:10:11,300] INFO Using checkIntervalMs=60000 maxPerMinute=10000 maxNeverUsedIntervalMs=0 (org.apache.zookeeper.server.ContainerManager)
[2025-02-05 19:10:11,301] INFO ZooKeeper audit is disabled. (org.apache.zookeeper.audit.ZKAuditProvider)
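
Before starting the broker, it can help to confirm that ZooKeeper is really accepting connections on port 2181 (the log above reports `binding to port 0.0.0.0/0.0.0.0:2181`). Below is a minimal sketch using only Python's standard `socket` module. The helper name `is_port_open` is my own (not part of Kafka or ZooKeeper), and it only checks that a TCP connection succeeds; it does not verify that ZooKeeper is healthy.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within `timeout` seconds.

    Hypothetical helper: it only proves that something is listening on the port;
    it does not speak the ZooKeeper protocol.
    """
    try:
        # create_connection tries every address `host` resolves to (IPv4 and IPv6).
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and name-resolution failures.
        return False


if __name__ == "__main__":
    # 2181 is the client port ZooKeeper binds to in the log above.
    print("ZooKeeper port 2181 open:", is_port_open("localhost", 2181))
```

Once the broker is running, the same check against port 9092 (the `listeners = PLAINTEXT://:9092` value in the broker's KafkaConfig output) gives a quick sanity test for Kafka as well.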

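The ZooKeeper log reports `tickTime 3000`, `minSessionTimeout 6000` and `maxSessionTimeout 60000`. According to the ZooKeeper documentation, the min and max session timeouts default to 2 × and 20 × `tickTime`, and the server clamps each client's requested session timeout into that range. The small function below is my own illustration of that arithmetic, not ZooKeeper code. With Kafka's `zookeeper.session.timeout.ms = 18000` it yields 18000, which agrees with the `negotiated timeout = 18000` line in the broker log.

```python
from typing import Optional


def negotiated_session_timeout(requested_ms: int, tick_time_ms: int,
                               min_ms: Optional[int] = None,
                               max_ms: Optional[int] = None) -> int:
    """Clamp a client's requested session timeout the way a ZooKeeper server does.

    Illustrative sketch: min/max fall back to ZooKeeper's documented defaults
    (2 * tickTime and 20 * tickTime) when they are not configured explicitly.
    """
    if min_ms is None:
        min_ms = 2 * tick_time_ms
    if max_ms is None:
        max_ms = 20 * tick_time_ms
    return max(min_ms, min(requested_ms, max_ms))


if __name__ == "__main__":
    tick = 3000  # tickTime from "Created server with tickTime 3000 ..."
    print(2 * tick, 20 * tick)                       # 6000 60000
    print(negotiated_session_timeout(18000, tick))   # 18000 (Kafka's request, unchanged)
    print(negotiated_session_timeout(1000, tick))    # 6000 (raised to the minimum)
    print(negotiated_session_timeout(120000, tick))  # 60000 (capped at the maximum)
```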
11. Start Kafka Broker:

zzh@ZZHPC:~$ kafka-server-start.sh $kafka_home/config/server.properties
[2025-02-05 19:12:58,730] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)
[2025-02-05 19:12:58,912] INFO Setting -D jdk.tls.rejectClientInitiatedRenegotiation=true to disable client-initiated TLS renegotiation (org.apache.zookeeper.common.X509Util)
[2025-02-05 19:12:58,968] INFO Registered signal handlers for TERM, INT, HUP (org.apache.kafka.common.utils.LoggingSignalHandler)
[2025-02-05 19:12:58,970] INFO starting (kafka.server.KafkaServer)
[2025-02-05 19:12:58,972] INFO Connecting to zookeeper on localhost:2181 (kafka.server.KafkaServer)
[2025-02-05 19:12:58,985] INFO [ZooKeeperClient Kafka server] Initializing a new session to localhost:2181. (kafka.zookeeper.ZooKeeperClient)
[2025-02-05 19:12:58,989] INFO Client environment:zookeeper.version=3.6.4--d65253dcf68e9097c6e95a126463fd5fdeb4521c, built on 12/18/2022 18:10 GMT (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:host.name=ZZHPC (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:java.version=17.0.12 (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:java.vendor=Oracle Corporation (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:java.home=/home/zzh/Downloads/sfw/jdk-17.0.12 (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:java.class.path=/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/activation-1.1.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/aopalliance-repackaged-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/argparse4j-0.7.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/audience-annotations-0.13.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/commons-cli-1.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/commons-lang3-3.8.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-api-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-basic-auth-extension-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-json-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-mirror-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-mirror-client-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-runtime-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/connect-transforms-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/hk2-api-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/hk2-locator-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/hk2-utils-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-annotations-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-core-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-databind-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-dataformat-csv-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-datatype-jdk8-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-jaxrs-base-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-jaxrs-json-provider-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-module-jaxb-annotations-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jackson-module-scala_2.13-2.13.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.activation-api-1.2.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.annotation-api-1.3.5.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.inject-2.6.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.validation-api-2.0.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.ws.rs-api-2.1.6.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jakarta.xml.bind-api-2.3.3.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javassist-3.29.2-GA.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javax.activation-api-1.2.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javax.annotation-api-1.3.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javax.servlet-api-3.1.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/javax.ws.rs-api-2.1.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jaxb-api-2.3.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-client-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-common-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-container-servlet-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-container-servlet-core-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-hk2-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jersey-server-2.39.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-client-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-continuation-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-http-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-io-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-security-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-server-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-servlet-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-servlets-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-util-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jetty-util-ajax-9.4.51.v20230217.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jline-3.22.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jopt-simple-5.0.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/jose4j-0.9.3.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka_2.13-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-clients-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-group-coordinator-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-log4j-appender-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-metadata-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-raft-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-server-common-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-shell-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-storage-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-storage-api-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-streams-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-streams-examples-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-streams-scala_2.13-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-streams-test-utils-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-tools-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/kafka-tools-api-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/lz4-java-1.8.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/maven-artifact-3.8.8.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/metrics-core-2.2.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/metrics-core-4.1.12.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-buffer-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-codec-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-common-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-handler-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-resolver-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-transport-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-transport-classes-epoll-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-transport-native-epoll-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/netty-transport-native-unix-common-4.1.94.Final.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/osgi-resource-locator-1.0.3.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/paranamer-2.8.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/plexus-utils-3.3.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/reflections-0.9.12.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/reload4j-1.2.25.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/rocksdbjni-7.1.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-collection-compat_2.13-2.10.0.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-java8-compat_2.13-1.0.2.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-library-2.13.10.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-logging_2.13-3.9.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/scala-reflect-2.13.10.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/slf4j-api-1.7.36.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/slf4j-reload4j-1.7.36.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/snappy-java-1.1.10.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/swagger-annotations-2.2.8.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/trogdor-3.5.1.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/zookeeper-3.6.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/zookeeper-jute-3.6.4.jar:/home/zzh/Downloads/sfw/kafka_2.13-3.5.1/bin/../libs/zstd-jni-1.5.5-1.jar (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:java.library.path=/usr/java/packages/lib:/usr/lib64:/lib64:/lib:/usr/lib (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:java.io.tmpdir=/tmp (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:java.compiler=<NA> (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:os.name=Linux (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,989] INFO Client environment:os.arch=amd64 (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,990] INFO Client environment:os.version=6.8.0-52-generic (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,990] INFO Client environment:user.name=zzh (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,990] INFO Client environment:user.home=/home/zzh (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,990] INFO Client environment:user.dir=/home/zzh (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,990] INFO Client environment:os.memory.free=988MB (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,990] INFO Client environment:os.memory.max=1024MB (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,990] INFO Client environment:os.memory.total=1024MB (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,991] INFO Initiating client connection, connectString=localhost:2181 sessionTimeout=18000 watcher=kafka.zookeeper.ZooKeeperClient$ZooKeeperClientWatcher$@64ec96c6 (org.apache.zookeeper.ZooKeeper)
[2025-02-05 19:12:58,995] INFO jute.maxbuffer value is 4194304 Bytes (org.apache.zookeeper.ClientCnxnSocket)
[2025-02-05 19:12:58,998] INFO zookeeper.request.timeout value is 0. feature enabled=false (org.apache.zookeeper.ClientCnxn)
[2025-02-05 19:12:58,999] INFO [ZooKeeperClient Kafka server] Waiting until connected. (kafka.zookeeper.ZooKeeperClient)
[2025-02-05 19:12:59,000] INFO Opening socket connection to server localhost/127.0.0.1:2181. (org.apache.zookeeper.ClientCnxn)
[2025-02-05 19:12:59,002] INFO Socket connection established, initiating session, client: /127.0.0.1:34888, server: localhost/127.0.0.1:2181 (org.apache.zookeeper.ClientCnxn)
[2025-02-05 19:12:59,017] INFO Session establishment complete on server localhost/127.0.0.1:2181, session id = 0x100022175d60000, negotiated timeout = 18000 (org.apache.zookeeper.ClientCnxn)
[2025-02-05 19:12:59,019] INFO [ZooKeeperClient Kafka server] Connected. (kafka.zookeeper.ZooKeeperClient)
[2025-02-05 19:12:59,193] INFO Cluster ID = bz5tLPeSREKQY2I-qy378w (kafka.server.KafkaServer)
[2025-02-05 19:12:59,196] WARN No meta.properties file under dir /tmp/kafka-logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)
[2025-02-05 19:12:59,234] INFO KafkaConfig values:
    advertised.listeners = null
    alter.config.policy.class.name = null
    alter.log.dirs.replication.quota.window.num = 11
    alter.log.dirs.replication.quota.window.size.seconds = 1
    authorizer.class.name =
    auto.create.topics.enable = true
    auto.include.jmx.reporter = true
    auto.leader.rebalance.enable = true
    background.threads = 10
    broker.heartbeat.interval.ms = 2000
    broker.id = 0
    broker.id.generation.enable = true
    broker.rack = null
    broker.session.timeout.ms = 9000
    client.quota.callback.class = null
    compression.type = producer
    connection.failed.authentication.delay.ms = 100
    connections.max.idle.ms = 600000
    connections.max.reauth.ms = 0
    control.plane.listener.name = null
    controlled.shutdown.enable = true
    controlled.shutdown.max.retries = 3
    controlled.shutdown.retry.backoff.ms = 5000
    controller.listener.names = null
    controller.quorum.append.linger.ms = 25
    controller.quorum.election.backoff.max.ms = 1000
    controller.quorum.election.timeout.ms = 1000
    controller.quorum.fetch.timeout.ms = 2000
    controller.quorum.request.timeout.ms = 2000
    controller.quorum.retry.backoff.ms = 20
    controller.quorum.voters = []
    controller.quota.window.num = 11
    controller.quota.window.size.seconds = 1
    controller.socket.timeout.ms = 30000
    create.topic.policy.class.name = null
    default.replication.factor = 1
    delegation.token.expiry.check.interval.ms = 3600000
    delegation.token.expiry.time.ms = 86400000
    delegation.token.master.key = null
    delegation.token.max.lifetime.ms = 604800000
    delegation.token.secret.key = null
    delete.records.purgatory.purge.interval.requests = 1
    delete.topic.enable = true
    early.start.listeners = null
    fetch.max.bytes = 57671680
    fetch.purgatory.purge.interval.requests = 1000
    group.consumer.assignors = []
    group.consumer.heartbeat.interval.ms = 5000
    group.consumer.max.heartbeat.interval.ms = 15000
    group.consumer.max.session.timeout.ms = 60000
    group.consumer.max.size = 2147483647
    group.consumer.min.heartbeat.interval.ms = 5000
    group.consumer.min.session.timeout.ms = 45000
    group.consumer.session.timeout.ms = 45000
    group.coordinator.new.enable = false
    group.coordinator.threads = 1
    group.initial.rebalance.delay.ms = 0
    group.max.session.timeout.ms = 1800000
    group.max.size = 2147483647
    group.min.session.timeout.ms = 6000
    initial.broker.registration.timeout.ms = 60000
    inter.broker.listener.name = null
    inter.broker.protocol.version = 3.5-IV2
    kafka.metrics.polling.interval.secs = 10
    kafka.metrics.reporters = []
    leader.imbalance.check.interval.seconds = 300
    leader.imbalance.per.broker.percentage = 10
    listener.security.protocol.map = PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL
    listeners = PLAINTEXT://:9092
    log.cleaner.backoff.ms = 15000
    log.cleaner.dedupe.buffer.size = 134217728
    log.cleaner.delete.retention.ms = 86400000
    log.cleaner.enable = true
    log.cleaner.io.buffer.load.factor = 0.9
    log.cleaner.io.buffer.size = 524288
    log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308
    log.cleaner.max.compaction.lag.ms = 9223372036854775807
    log.cleaner.min.cleanable.ratio = 0.5
    log.cleaner.min.compaction.lag.ms = 0
    log.cleaner.threads = 1
    log.cleanup.policy = [delete]
    log.dir = /tmp/kafka-logs
    log.dirs = /tmp/kafka-logs
    log.flush.interval.messages = 9223372036854775807
    log.flush.interval.ms = null
    log.flush.offset.checkpoint.interval.ms = 60000
    log.flush.scheduler.interval.ms = 9223372036854775807
    log.flush.start.offset.checkpoint.interval.ms = 60000
    log.index.interval.bytes = 4096
    log.index.size.max.bytes = 10485760
    log.message.downconversion.enable = true
    log.message.format.version = 3.0-IV1
    log.message.timestamp.difference.max.ms = 9223372036854775807
    log.message.timestamp.type = CreateTime
    log.preallocate = false
    log.retention.bytes = -1
    log.retention.check.interval.ms = 300000
    log.retention.hours = 168
    log.retention.minutes = null
    log.retention.ms = null
    log.roll.hours = 168
    log.roll.jitter.hours = 0
    log.roll.jitter.ms = null
    log.roll.ms = null
    log.segment.bytes = 1073741824
    log.segment.delete.delay.ms = 60000
    max.connection.creation.rate = 2147483647
    max.connections = 2147483647
    max.connections.per.ip = 2147483647
    max.connections.per.ip.overrides =
    max.incremental.fetch.session.cache.slots = 1000
    message.max.bytes = 1048588
    metadata.log.dir = null
    metadata.log.max.record.bytes.between.snapshots = 20971520
    metadata.log.max.snapshot.interval.ms = 3600000
    metadata.log.segment.bytes = 1073741824
    metadata.log.segment.min.bytes = 8388608
    metadata.log.segment.ms = 604800000
    metadata.max.idle.interval.ms = 500
    metadata.max.retention.bytes = 104857600
    metadata.max.retention.ms = 604800000
    metric.reporters = []
    metrics.num.samples = 2
    metrics.recording.level = INFO
    metrics.sample.window.ms = 30000
    min.insync.replicas = 1
    node.id = 0
    num.io.threads = 8
    num.network.threads = 3
    num.partitions = 1
    num.recovery.threads.per.data.dir = 1
    num.replica.alter.log.dirs.threads = null
    num.replica.fetchers = 1
    offset.metadata.max.bytes = 4096
    offsets.commit.required.acks = -1
    offsets.commit.timeout.ms = 5000
    offsets.load.buffer.size = 5242880
    offsets.retention.check.interval.ms = 600000
    offsets.retention.minutes = 10080
    offsets.topic.compression.codec = 0
    offsets.topic.num.partitions = 50
    offsets.topic.replication.factor = 1
    offsets.topic.segment.bytes = 104857600
    password.encoder.cipher.algorithm = AES/CBC/PKCS5Padding
    password.encoder.iterations = 4096
    password.encoder.key.length = 128
    password.encoder.keyfactory.algorithm = null
    password.encoder.old.secret = null
    password.encoder.secret = null
    principal.builder.class = class org.apache.kafka.common.security.authenticator.DefaultKafkaPrincipalBuilder
    process.roles = []
    producer.id.expiration.check.interval.ms = 600000
    producer.id.expiration.ms = 86400000
    producer.purgatory.purge.interval.requests = 1000
    queued.max.request.bytes = -1
    queued.max.requests = 500
    quota.window.num = 11
    quota.window.size.seconds = 1
    remote.log.index.file.cache.total.size.bytes = 1073741824
    remote.log.manager.task.interval.ms = 30000
    remote.log.manager.task.retry.backoff.max.ms = 30000
    remote.log.manager.task.retry.backoff.ms = 500
    remote.log.manager.task.retry.jitter = 0.2
    remote.log.manager.thread.pool.size = 10
    remote.log.metadata.manager.class.name = null
    remote.log.metadata.manager.class.path = null
    remote.log.metadata.manager.impl.prefix = null
    remote.log.metadata.manager.listener.name = null
    remote.log.reader.max.pending.tasks = 100
    remote.log.reader.threads = 10
    remote.log.storage.manager.class.name = null
    remote.log.storage.manager.class.path = null
    remote.log.storage.manager.impl.prefix = null
    remote.log.storage.system.enable = false
    replica.fetch.backoff.ms = 1000
    replica.fetch.max.bytes = 1048576
    replica.fetch.min.bytes = 1
    replica.fetch.response.max.bytes = 10485760
    replica.fetch.wait.max.ms = 500
    replica.high.watermark.checkpoint.interval.ms = 5000
    replica.lag.time.max.ms = 30000
    replica.selector.class = null
    replica.socket.receive.buffer.bytes = 65536
    replica.socket.timeout.ms = 30000
    replication.quota.window.num = 11
    replication.quota.window.size.seconds = 1
    request.timeout.ms = 30000
    reserved.broker.max.id = 1000
    sasl.client.callback.handler.class = null
    sasl.enabled.mechanisms = [GSSAPI]
    sasl.jaas.config = null
    sasl.kerberos.kinit.cmd = /usr/bin/kinit
    sasl.kerberos.min.time.before.relogin = 60000
    sasl.kerberos.principal.to.local.rules = [DEFAULT]
    sasl.kerberos.service.name = null
    sasl.kerberos.ticket.renew.jitter = 0.05
    sasl.kerberos.ticket.renew.window.factor = 0.8
    sasl.login.callback.handler.class = null
    sasl.login.class = null
    sasl.login.connect.timeout.ms = null
    sasl.login.read.timeout.ms = null
    sasl.login.refresh.buffer.seconds = 300
    sasl.login.refresh.min.period.seconds = 60
    sasl.login.refresh.window.factor = 0.8
    sasl.login.refresh.window.jitter = 0.05
    sasl.login.retry.backoff.max.ms = 10000
    sasl.login.retry.backoff.ms = 100
    sasl.mechanism.controller.protocol = GSSAPI
    sasl.mechanism.inter.broker.protocol = GSSAPI
    sasl.oauthbearer.clock.skew.seconds = 30
    sasl.oauthbearer.expected.audience = null
    sasl.oauthbearer.expected.issuer = null
    sasl.oauthbearer.jwks.endpoint.refresh.ms = 3600000
    sasl.oauthbearer.jwks.endpoint.retry.backoff.max.ms = 10000
    sasl.oauthbearer.jwks.endpoint.retry.backoff.ms = 100
    sasl.oauthbearer.jwks.endpoint.url = null
    sasl.oauthbearer.scope.claim.name = scope
    sasl.oauthbearer.sub.claim.name = sub
    sasl.oauthbearer.token.endpoint.url = null
    sasl.server.callback.handler.class = null
    sasl.server.max.receive.size = 524288
    security.inter.broker.protocol = PLAINTEXT
    security.providers = null
    server.max.startup.time.ms = 9223372036854775807
    socket.connection.setup.timeout.max.ms = 30000
    socket.connection.setup.timeout.ms = 10000
    socket.listen.backlog.size = 50
socket.receive.buffer.bytes = 102400 socket.request.max.bytes = 104857600 socket.send.buffer.bytes = 102400 ssl.cipher.suites = [] ssl.client.auth = none ssl.enabled.protocols = [TLSv1.2, TLSv1.3] ssl.endpoint.identification.algorithm = https ssl.engine.factory.class = null ssl.key.password = null ssl.keymanager.algorithm = SunX509 ssl.keystore.certificate.chain = null ssl.keystore.key = null ssl.keystore.location = null ssl.keystore.password = null ssl.keystore.type = JKS ssl.principal.mapping.rules = DEFAULT ssl.protocol = TLSv1.3 ssl.provider = null ssl.secure.random.implementation = null ssl.trustmanager.algorithm = PKIX ssl.truststore.certificates = null ssl.truststore.location = null ssl.truststore.password = null ssl.truststore.type = JKS transaction.abort.timed.out.transaction.cleanup.interval.ms = 10000 transaction.max.timeout.ms = 900000 transaction.remove.expired.transaction.cleanup.interval.ms = 3600000 transaction.state.log.load.buffer.size = 5242880 transaction.state.log.min.isr = 1 transaction.state.log.num.partitions = 50 transaction.state.log.replication.factor = 1 transaction.state.log.segment.bytes = 104857600 transactional.id.expiration.ms = 604800000 unclean.leader.election.enable = false unstable.api.versions.enable = false zookeeper.clientCnxnSocket = null zookeeper.connect = localhost:2181 zookeeper.connection.timeout.ms = 18000 zookeeper.max.in.flight.requests = 10 zookeeper.metadata.migration.enable = false zookeeper.session.timeout.ms = 18000 zookeeper.set.acl = false zookeeper.ssl.cipher.suites = null zookeeper.ssl.client.enable = false zookeeper.ssl.crl.enable = false zookeeper.ssl.enabled.protocols = null zookeeper.ssl.endpoint.identification.algorithm = HTTPS zookeeper.ssl.keystore.location = null zookeeper.ssl.keystore.password = null zookeeper.ssl.keystore.type = null zookeeper.ssl.ocsp.enable = false zookeeper.ssl.protocol = TLSv1.2 zookeeper.ssl.truststore.location = null zookeeper.ssl.truststore.password = null 
zookeeper.ssl.truststore.type = null
(kafka.server.KafkaConfig)
[2025-02-05 19:12:59,259] INFO [ThrottledChannelReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)
[2025-02-05 19:12:59,259] INFO [ThrottledChannelReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)
[2025-02-05 19:12:59,260] INFO [ThrottledChannelReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)
[2025-02-05 19:12:59,262] INFO [ThrottledChannelReaper-ControllerMutation]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)
[2025-02-05 19:12:59,271] INFO Log directory /tmp/kafka-logs not found, creating it. (kafka.log.LogManager)
[2025-02-05 19:12:59,281] INFO Loading logs from log dirs ArraySeq(/tmp/kafka-logs) (kafka.log.LogManager)
[2025-02-05 19:12:59,285] INFO No logs found to be loaded in /tmp/kafka-logs (kafka.log.LogManager)
[2025-02-05 19:12:59,292] INFO Loaded 0 logs in 10ms (kafka.log.LogManager)
[2025-02-05 19:12:59,293] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)
[2025-02-05 19:12:59,293] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)
[2025-02-05 19:12:59,360] INFO [kafka-log-cleaner-thread-0]: Starting (kafka.log.LogCleaner$CleanerThread)
[2025-02-05 19:12:59,369] INFO [feature-zk-node-event-process-thread]: Starting (kafka.server.FinalizedFeatureChangeListener$ChangeNotificationProcessorThread)
[2025-02-05 19:12:59,376] INFO Feature ZK node at path: /feature does not exist (kafka.server.FinalizedFeatureChangeListener)
[2025-02-05 19:12:59,393] INFO [zk-broker-0-to-controller-forwarding-channel-manager]: Starting (kafka.server.BrokerToControllerRequestThread)
[2025-02-05 19:12:59,593] INFO Updated connection-accept-rate max connection creation rate to 2147483647 (kafka.network.ConnectionQuotas)
[2025-02-05 19:12:59,607] INFO [SocketServer listenerType=ZK_BROKER, nodeId=0] Created data-plane acceptor and processors for endpoint : ListenerName(PLAINTEXT) (kafka.network.SocketServer)
[2025-02-05 19:12:59,610] INFO [zk-broker-0-to-controller-alter-partition-channel-manager]: Starting (kafka.server.BrokerToControllerRequestThread)
[2025-02-05 19:12:59,624] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2025-02-05 19:12:59,625] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2025-02-05 19:12:59,626] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2025-02-05 19:12:59,626] INFO [ExpirationReaper-0-ElectLeader]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2025-02-05 19:12:59,634] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)
[2025-02-05 19:12:59,649] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)
[2025-02-05 19:12:59,665] INFO Stat of the created znode at /brokers/ids/0 is: 25,25,1738753979660,1738753979660,1,0,0,72059936772063232,194,0,25 (kafka.zk.KafkaZkClient)
[2025-02-05 19:12:59,665] INFO Registered broker 0 at path /brokers/ids/0 with addresses: PLAINTEXT://ZZHPC:9092, czxid (broker epoch): 25 (kafka.zk.KafkaZkClient)
[2025-02-05 19:12:59,714] INFO [ExpirationReaper-0-topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2025-02-05 19:12:59,720] INFO [ExpirationReaper-0-Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2025-02-05 19:12:59,721] INFO [ExpirationReaper-0-Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2025-02-05 19:12:59,721] INFO Successfully created /controller_epoch with initial epoch 0 (kafka.zk.KafkaZkClient)
[2025-02-05 19:12:59,732] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.group.GroupCoordinator)
[2025-02-05 19:12:59,734] INFO Feature ZK node created at path: /feature (kafka.server.FinalizedFeatureChangeListener)
[2025-02-05 19:12:59,740] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.group.GroupCoordinator)
[2025-02-05 19:12:59,750] INFO [TransactionCoordinator id=0] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)
[2025-02-05 19:12:59,755] INFO [TxnMarkerSenderThread-0]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)
[2025-02-05 19:12:59,756] INFO [TransactionCoordinator id=0] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)
[2025-02-05 19:12:59,761] INFO [MetadataCache brokerId=0] Updated cache from existing <empty> to latest FinalizedFeaturesAndEpoch(features=Map(), epoch=0). (kafka.server.metadata.ZkMetadataCache)
[2025-02-05 19:12:59,781] INFO [ExpirationReaper-0-AlterAcls]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[2025-02-05 19:12:59,798] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)
[2025-02-05 19:12:59,815] INFO [SocketServer listenerType=ZK_BROKER, nodeId=0] Enabling request processing. (kafka.network.SocketServer)
[2025-02-05 19:12:59,816] INFO [Controller id=0, targetBrokerId=0] Node 0 disconnected. (org.apache.kafka.clients.NetworkClient)
[2025-02-05 19:12:59,824] INFO Awaiting socket connections on 0.0.0.0:9092. (kafka.network.DataPlaneAcceptor)
[2025-02-05 19:12:59,825] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (ZZHPC/127.0.1.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)
[2025-02-05 19:12:59,827] INFO [Controller id=0, targetBrokerId=0] Client requested connection close from node 0 (org.apache.kafka.clients.NetworkClient)
[2025-02-05 19:12:59,831] INFO Kafka version: 3.5.1 (org.apache.kafka.common.utils.AppInfoParser)
[2025-02-05 19:12:59,831] INFO Kafka commitId: 2c6fb6c54472e90a (org.apache.kafka.common.utils.AppInfoParser)
[2025-02-05 19:12:59,831] INFO Kafka startTimeMs: 1738753979828 (org.apache.kafka.common.utils.AppInfoParser)
[2025-02-05 19:12:59,832] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)
[2025-02-05 19:13:00,005] INFO [zk-broker-0-to-controller-forwarding-channel-manager]: Recorded new controller, from now on will use node ZZHPC:9092 (id: 0 rack: null) (kafka.server.BrokerToControllerRequestThread)
[2025-02-05 19:13:00,011] INFO [zk-broker-0-to-controller-alter-partition-channel-manager]: Recorded new controller, from now on will use node ZZHPC:9092 (id: 0 rack: null) (kafka.server.BrokerToControllerRequestThread)
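The log ends with "Awaiting socket connections on 0.0.0.0:9092" and "[KafkaServer id=0] started". The single WARN about connecting to ZZHPC/127.0.1.1:9092 is logged a few milliseconds before the "started" line and is followed by "Recorded new controller", so it appears to be a startup-ordering message rather than a failure. A quick way to confirm the broker is accepting connections before moving on is a plain TCP check. This is a minimal sketch using only the standard library; the function name `broker_reachable` is hypothetical, and a successful connect only shows that something is listening on the port, not that it speaks the Kafka protocol.

```python
import socket


def broker_reachable(host: str = "localhost", port: int = 9092, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    Hypothetical helper: it only proves a listener exists on the port; it does
    not talk the Kafka protocol or check broker health.
    """
    try:
        # create_connection resolves the host name and tries each address it gets
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and name-resolution errors
        return False
```

For example, `broker_reachable()` should return `True` while the broker from the log above is running, and `broker_reachable("ZZHPC")` exercises the same hostname the broker registered itself under.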

12. Create a Kafka Topic named 'users':

zzh@ZZHPC:~$ kafka-topics.sh --create --topic users --bootstrap-server localhost:9092 --partitions 3 --replication-factor 1
Created topic users.
zzh@ZZHPC:~$ kafka-topics.sh --list --bootstrap-server localhost:9092
users
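The same topic can also be created from Python with kafka-python (version 2.0.2 was installed in step 5). The sketch below assumes kafka-python's `KafkaAdminClient` and `NewTopic`; the helper names `is_valid_topic_name` and `create_topic` are hypothetical. The name check mirrors Kafka's topic naming rule (ASCII letters, digits, `.`, `_`, `-`, at most 249 characters, and not `.` or `..`), so obviously bad names fail before any network call.

```python
import re

# Kafka's legal topic characters; "." and ".." are rejected separately
_LEGAL_TOPIC = re.compile(r"[a-zA-Z0-9._-]+")
MAX_TOPIC_LENGTH = 249


def is_valid_topic_name(name: str) -> bool:
    """Hypothetical helper: check a name against Kafka's topic naming rule."""
    if name in (".", "..") or len(name) > MAX_TOPIC_LENGTH:
        return False
    return _LEGAL_TOPIC.fullmatch(name) is not None


def create_topic(name: str = "users", partitions: int = 3,
                 replication_factor: int = 1,
                 bootstrap: str = "localhost:9092") -> bool:
    """Hypothetical helper: create the topic; return False if it already exists."""
    if not is_valid_topic_name(name):
        raise ValueError(f"illegal Kafka topic name: {name!r}")
    if partitions < 1 or replication_factor < 1:
        raise ValueError("partitions and replication_factor must be >= 1")

    # Imported lazily so the validation above works without kafka-python installed
    from kafka.admin import KafkaAdminClient, NewTopic

    admin = KafkaAdminClient(bootstrap_servers=bootstrap)
    try:
        if name in admin.list_topics():
            return False
        admin.create_topics([NewTopic(name=name,
                                      num_partitions=partitions,
                                      replication_factor=replication_factor)])
        return True
    finally:
        admin.close()
```

With the broker running, `create_topic()` would return `False` here because `users` already exists; a single-broker setup like this one can only use `replication_factor=1`.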

13. Produce messages (each line typed at the > prompt is sent as one message; press Ctrl+C to exit):

zzh@ZZHPC:~$ kafka-console-producer.sh --topic users --bootstrap-server localhost:9092
>zzh
>
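A rough Python equivalent of the console producer, assuming kafka-python's `KafkaProducer` with a `value_serializer`. The helper names `encode_value` and `produce` are hypothetical. Messages are sent without a key, as in the console session, so the client's partitioner decides which of the 3 partitions each record lands on; `produce` waits for each acknowledgement and returns the `(partition, offset)` pairs the broker reported.

```python
def encode_value(text: str) -> bytes:
    """Hypothetical serializer: encode a str message value as UTF-8 bytes."""
    return text.encode("utf-8")


def produce(messages, topic: str = "users", bootstrap: str = "localhost:9092",
            timeout_s: float = 10.0) -> list:
    """Hypothetical helper: send each message (no key) and return (partition, offset) pairs."""
    # Imported lazily so encode_value is usable without kafka-python installed
    from kafka import KafkaProducer

    producer = KafkaProducer(bootstrap_servers=bootstrap, value_serializer=encode_value)
    try:
        futures = [producer.send(topic, value=m) for m in messages]
        producer.flush()  # block until all buffered records have been sent
        # Each future resolves to RecordMetadata once the broker acknowledges the write
        return [(md.partition, md.offset) for md in (f.get(timeout=timeout_s) for f in futures)]
    finally:
        producer.close()
```

For example, `produce(["zzh"])` with the broker running should return one `(partition, offset)` pair; which partition it is is not something to rely on.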

14. Consume messages (--from-beginning reads the topic from its earliest available offset instead of only new messages):

zzh@ZZHPC:~$ kafka-console-consumer.sh --topic users --bootstrap-server localhost:9092 --from-beginning
zzh
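A Python sketch of the same read, assuming kafka-python's `KafkaConsumer`. Here `auto_offset_reset="earliest"` with no consumer group (`group_id=None`, so there are no committed offsets) plays the role of `--from-beginning`, and `consumer_timeout_ms` ends the loop after a quiet period instead of blocking forever like the console consumer. The helper names `decode_value` and `consume_from_beginning` are hypothetical. With 3 partitions, order is only guaranteed within a partition, not across the whole topic.

```python
from typing import List, Optional


def decode_value(raw: Optional[bytes]) -> Optional[str]:
    """Hypothetical deserializer: UTF-8 decode, passing None (empty value) through."""
    return None if raw is None else raw.decode("utf-8")


def consume_from_beginning(topic: str = "users", bootstrap: str = "localhost:9092",
                           idle_ms: int = 5000) -> List[Optional[str]]:
    """Hypothetical helper: read every retained record, stop after idle_ms without new ones."""
    # Imported lazily so decode_value is usable without kafka-python installed
    from kafka import KafkaConsumer

    consumer = KafkaConsumer(
        topic,
        bootstrap_servers=bootstrap,
        group_id=None,                 # no committed offsets, so the reset policy applies
        auto_offset_reset="earliest",  # start at the oldest retained record
        consumer_timeout_ms=idle_ms,   # stop iterating after this long with no records
        value_deserializer=decode_value,
    )
    try:
        return [record.value for record in consumer]
    finally:
        consumer.close()
```

After the console session above, `consume_from_beginning()` would be expected to include `"zzh"` among the values it returns.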
