1. Download:

https://spark.apache.org/downloads.html

2. Install:

(base) zzh@ZZHPC:~/Downloads/sfw$ tar -xvzf spark-3.5.4-bin-hadoop3.tgz
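To script step 2, Python's standard `tarfile` module can do the same extraction as `tar -xvzf`. Below is a minimal sketch. `extract_spark` is a hypothetical helper name. The sketch assumes the archive unpacks into a single top-level directory, which the `SPARK_HOME` path in step 3 suggests is true for `spark-3.5.4-bin-hadoop3.tgz`. The returned path is the directory that step 3 uses as `SPARK_HOME`.

```python
import tarfile
from pathlib import Path, PurePosixPath


def extract_spark(archive: str, dest: str) -> Path:
    """Extract a Spark .tgz (like `tar -xvzf`) and return its top-level directory."""
    dest_path = Path(dest)
    with tarfile.open(archive, "r:gz") as tar:
        # Collect first path components, e.g. "spark-3.5.4-bin-hadoop3".
        roots = set()
        for member in tar.getmembers():
            parts = PurePosixPath(member.name).parts
            if parts:
                roots.add(parts[0])
        # Use the safer "data" extraction filter when this Python supports it.
        if hasattr(tarfile, "data_filter"):
            tar.extractall(dest_path, filter="data")
        else:
            tar.extractall(dest_path)
    if len(roots) != 1:
        raise ValueError(f"expected one top-level directory, found {sorted(roots)}")
    return dest_path / roots.pop()
```

For example, `extract_spark("spark-3.5.4-bin-hadoop3.tgz", ".")` run from `~/Downloads/sfw` should return the same directory that the `tar` command creates.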

3. Set environment variables:

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64
export PATH=$PATH:$JAVA_HOME/bin
export SPARK_HOME=~/Downloads/sfw/spark-3.5.4-bin-hadoop3
export PATH=$PATH:$SPARK_HOME/sbin:$SPARK_HOME/bin
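Before starting any daemons, you can check that these variables took effect. The sketch below is a minimal example and assumes Linux, where `PATH` is colon-separated. `check_spark_env` is a hypothetical helper. It verifies that `JAVA_HOME` and `SPARK_HOME` are set and that `$JAVA_HOME/bin`, `$SPARK_HOME/bin` (where `pyspark` lives) and `$SPARK_HOME/sbin` (where `start-master.sh` and `start-worker.sh` live) are on `PATH`.

```python
import os
import posixpath


def _norm(path: str) -> str:
    # Expand "~" (the shell already expanded it in SPARK_HOME) and drop trailing slashes.
    return posixpath.normpath(os.path.expanduser(path))


def check_spark_env(env: dict) -> list:
    """Return problems with the step-3 variables; an empty list means all good."""
    problems = []
    # Assumes a Linux-style, colon-separated PATH; empty entries are skipped.
    on_path = {_norm(p) for p in env.get("PATH", "").split(":") if p}
    for var, subdirs in (("JAVA_HOME", ("bin",)), ("SPARK_HOME", ("bin", "sbin"))):
        home = env.get(var)
        if not home:
            problems.append(f"{var} is not set")
            continue
        for sub in subdirs:
            wanted = _norm(posixpath.join(home, sub))
            if wanted not in on_path:
                problems.append(f"{wanted} is not on PATH")
    return problems


if __name__ == "__main__":
    # Check the current session's environment.
    for problem in check_spark_env(dict(os.environ)) or ["environment looks OK"]:
        print(problem)
```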

4. Start the master:

(base) zzh@ZZHPC:~$ start-master.sh
starting org.apache.spark.deploy.master.Master, logging to /home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3/logs/spark-zzh-org.apache.spark.deploy.master.Master-1-ZZHPC.out
(base) zzh@ZZHPC:~$ cat /home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3/logs/spark-zzh-org.apache.spark.deploy.master.Master-1-ZZHPC.out
Spark Command: /usr/lib/jvm/java-11-openjdk-amd64/bin/java -cp /home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3/conf/:/home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3/jars/* -Xmx1g org.apache.spark.deploy.master.Master --host ZZHPC --port 7077 --webui-port 8080
========================================
Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties
25/01/26 12:13:59 INFO Master: Started daemon with process name: 9307@ZZHPC
25/01/26 12:13:59 INFO SignalUtils: Registering signal handler for TERM
25/01/26 12:13:59 INFO SignalUtils: Registering signal handler for HUP
25/01/26 12:13:59 INFO SignalUtils: Registering signal handler for INT
25/01/26 12:13:59 WARN Utils: Your hostname, ZZHPC resolves to a loopback address: 127.0.1.1; using 192.168.1.16 instead (on interface wlo1)
25/01/26 12:13:59 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address
25/01/26 12:14:00 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
25/01/26 12:14:00 INFO SecurityManager: Changing view acls to: zzh
25/01/26 12:14:00 INFO SecurityManager: Changing modify acls to: zzh
25/01/26 12:14:00 INFO SecurityManager: Changing view acls groups to: 
25/01/26 12:14:00 INFO SecurityManager: Changing modify acls groups to: 
25/01/26 12:14:00 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: zzh; groups with view permissions: EMPTY; users with modify permissions: zzh; groups with modify permissions: EMPTY
25/01/26 12:14:00 INFO Utils: Successfully started service 'sparkMaster' on port 7077.
25/01/26 12:14:00 INFO Master: Starting Spark master at spark://ZZHPC:7077
25/01/26 12:14:00 INFO Master: Running Spark version 3.5.4
25/01/26 12:14:00 INFO JettyUtils: Start Jetty 0.0.0.0:8080 for MasterUI
25/01/26 12:14:00 INFO Utils: Successfully started service 'MasterUI' on port 8080.
25/01/26 12:14:00 INFO MasterWebUI: Bound MasterWebUI to 0.0.0.0, and started at http://192.168.1.16:8080
25/01/26 12:14:00 INFO Master: I have been elected leader! New state: ALIVE

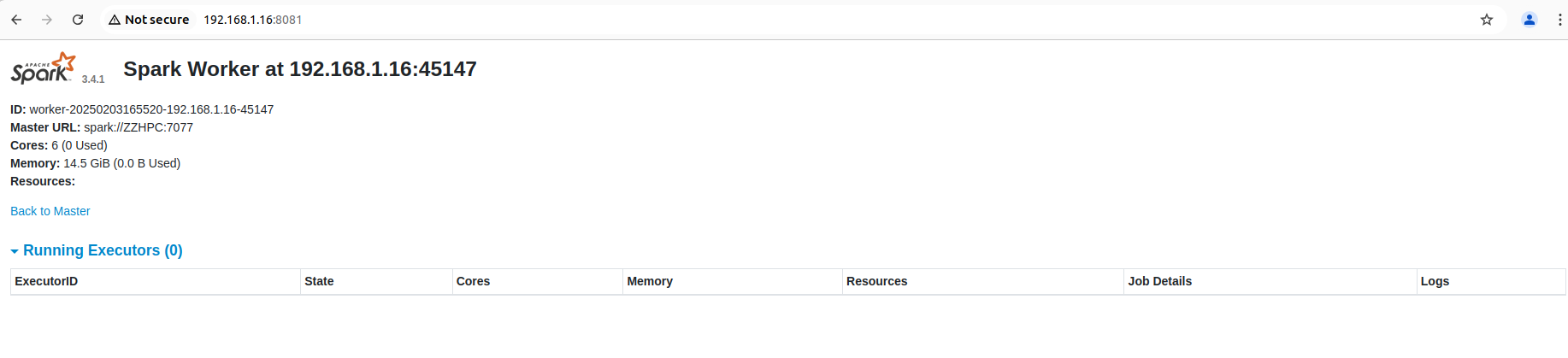
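The two lines worth looking for in this log are the master URL (`spark://ZZHPC:7077`, which step 5 passes to `start-worker.sh`) and the web UI address. The sketch below pulls both out of the log text. `master_endpoints` is a hypothetical helper, and its regular expressions are matched only against the log format shown above. Other Spark versions may word these lines differently.

```python
import re
from typing import Optional, Tuple

# Patterns for two lines the master writes to its .out log (format as in the log above).
MASTER_URL = re.compile(r"Starting Spark master at (spark://\S+)")
MASTER_UI = re.compile(r"MasterWebUI: .*?started at (https?://\S+)")


def master_endpoints(log_text: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (master URL, web UI URL) from a master log; None for any missing one."""
    url = MASTER_URL.search(log_text)
    ui = MASTER_UI.search(log_text)
    return (url.group(1) if url else None, ui.group(1) if ui else None)
```

For example, passing the contents of the `.out` file from `cat` above should give `("spark://ZZHPC:7077", "http://192.168.1.16:8080")`.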

5. Start a worker:

(base) zzh@ZZHPC:~$ start-worker.sh spark://ZZHPC:7077
starting org.apache.spark.deploy.worker.Worker, logging to /home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3/logs/spark-zzh-org.apache.spark.deploy.worker.Worker-1-ZZHPC.out
(base) zzh@ZZHPC:~$ cat /home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3/logs/spark-zzh-org.apache.spark.deploy.worker.Worker-1-ZZHPC.out
Spark Command: /usr/lib/jvm/java-11-openjdk-amd64/bin/java -cp /home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3/conf/:/home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3/jars/* -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://ZZHPC:7077
========================================
Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties
25/01/26 12:19:46 INFO Worker: Started daemon with process name: 9684@ZZHPC
25/01/26 12:19:46 INFO SignalUtils: Registering signal handler for TERM
25/01/26 12:19:46 INFO SignalUtils: Registering signal handler for HUP
25/01/26 12:19:46 INFO SignalUtils: Registering signal handler for INT
25/01/26 12:19:46 WARN Utils: Your hostname, ZZHPC resolves to a loopback address: 127.0.1.1; using 192.168.1.16 instead (on interface wlo1)
25/01/26 12:19:46 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address
25/01/26 12:19:46 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
25/01/26 12:19:47 INFO SecurityManager: Changing view acls to: zzh
25/01/26 12:19:47 INFO SecurityManager: Changing modify acls to: zzh
25/01/26 12:19:47 INFO SecurityManager: Changing view acls groups to: 
25/01/26 12:19:47 INFO SecurityManager: Changing modify acls groups to: 
25/01/26 12:19:47 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: zzh; groups with view permissions: EMPTY; users with modify permissions: zzh; groups with modify permissions: EMPTY
25/01/26 12:19:47 INFO Utils: Successfully started service 'sparkWorker' on port 44003.
25/01/26 12:19:47 INFO Worker: Worker decommissioning not enabled.
25/01/26 12:19:47 INFO Worker: Starting Spark worker 192.168.1.16:44003 with 6 cores, 14.5 GiB RAM
25/01/26 12:19:47 INFO Worker: Running Spark version 3.5.4
25/01/26 12:19:47 INFO Worker: Spark home: /home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3
25/01/26 12:19:47 INFO ResourceUtils: ==============================================================
25/01/26 12:19:47 INFO ResourceUtils: No custom resources configured for spark.worker.
25/01/26 12:19:47 INFO ResourceUtils: ==============================================================
25/01/26 12:19:47 INFO JettyUtils: Start Jetty 0.0.0.0:8081 for WorkerUI
25/01/26 12:19:47 INFO Utils: Successfully started service 'WorkerUI' on port 8081.
25/01/26 12:19:47 INFO WorkerWebUI: Bound WorkerWebUI to 0.0.0.0, and started at http://192.168.1.16:8081
25/01/26 12:19:47 INFO Worker: Connecting to master ZZHPC:7077...
25/01/26 12:19:47 INFO TransportClientFactory: Successfully created connection to ZZHPC/127.0.1.1:7077 after 23 ms (0 ms spent in bootstraps)
25/01/26 12:19:47 INFO Worker: Successfully registered with master spark://ZZHPC:7077

(The screenshot for this step was taken with a different version of Spark.)
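The worker log confirms two things: the resources the worker offers (6 cores and 14.5 GiB RAM here) and whether it registered with the master. The sketch below extracts both. `worker_summary` is a hypothetical helper, and its patterns are written against the log lines shown above only.

```python
import re
from typing import Optional

# Patterns for two lines of the worker's .out log (format as in the log above).
WORKER_START = re.compile(
    r"Starting Spark worker (\S+) with (\d+) cores, ([\d.]+) (\w+) RAM")
REGISTERED = re.compile(r"Successfully registered with master (spark://\S+)")


def worker_summary(log_text: str) -> dict:
    """Summarize a worker log: address, cores, memory, and the master it joined."""
    start = WORKER_START.search(log_text)
    reg = REGISTERED.search(log_text)
    master: Optional[str] = reg.group(1) if reg else None  # None: not registered yet
    return {
        "address": start.group(1) if start else None,
        "cores": int(start.group(2)) if start else None,
        "memory": f"{start.group(3)} {start.group(4)}" if start else None,
        "master": master,
    }
```

A `None` under `"master"` is a hint to check the `Connecting to master` lines for errors.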

6. Use pyspark:

(base) zzh@ZZHPC:~$ which pyspark
/home/zzh/Downloads/sfw/spark-3.5.4-bin-hadoop3/bin/pyspark

pyspark is launched from the Spark home directory, so the relative path README.md passed to spark.read.text resolves to $SPARK_HOME/README.md.

(base) zzh@ZZHPC:~/Downloads/sfw/spark-3.5.4-bin-hadoop3$ pyspark
Python 3.12.7 | packaged by Anaconda, Inc. | (main, Oct 4 2024, 13:27:36) [GCC 11.2.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
25/01/26 19:58:24 WARN Utils: Your hostname, ZZHPC resolves to a loopback address: 127.0.1.1; using 192.168.1.16 instead (on interface wlo1)
25/01/26 19:58:24 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
25/01/26 19:58:25 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 3.5.4
      /_/

Using Python version 3.12.7 (main, Oct 4 2024 13:27:36)
Spark context Web UI available at http://192.168.1.16:4040
Spark context available as 'sc' (master = local[*], app id = local-1737892706076).
SparkSession available as 'spark'.
>>> textFile = spark.read.text("README.md")
>>> textFile.count()
125
>>> textFile.first()
Row(value='# Apache Spark')
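`spark.read.text` returns a DataFrame with one row per line of the file and a single string column named `value`, so `count()` is the number of lines and `first()` is the first line. Note also that the banner reports `master = local[*]`, which means this shell ran locally rather than on the standalone master from step 4. Starting it as `pyspark --master spark://ZZHPC:7077` attaches it to that master instead. To sanity-check the result without Spark, the sketch below rebuilds the same rows in plain Python. `text_rows` is a hypothetical helper. It assumes a UTF-8 file and relies on Python's universal-newline mode (`\n`, `\r\n`, `\r`) to approximate Spark's line splitting, so the two should agree for an ordinary text file but are not guaranteed to match on unusual inputs.

```python
def text_rows(path: str) -> list:
    """Plain-Python stand-in for spark.read.text(path): one string per line."""
    # Universal-newline mode turns \r\n and \r into \n; strip that trailing newline.
    with open(path, encoding="utf-8") as f:
        return [line.rstrip("\n") for line in f]
```

Run from the Spark home, `len(text_rows("README.md"))` should give the same number as `textFile.count()`, and `text_rows("README.md")[0]` the same string as `textFile.first().value`.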
