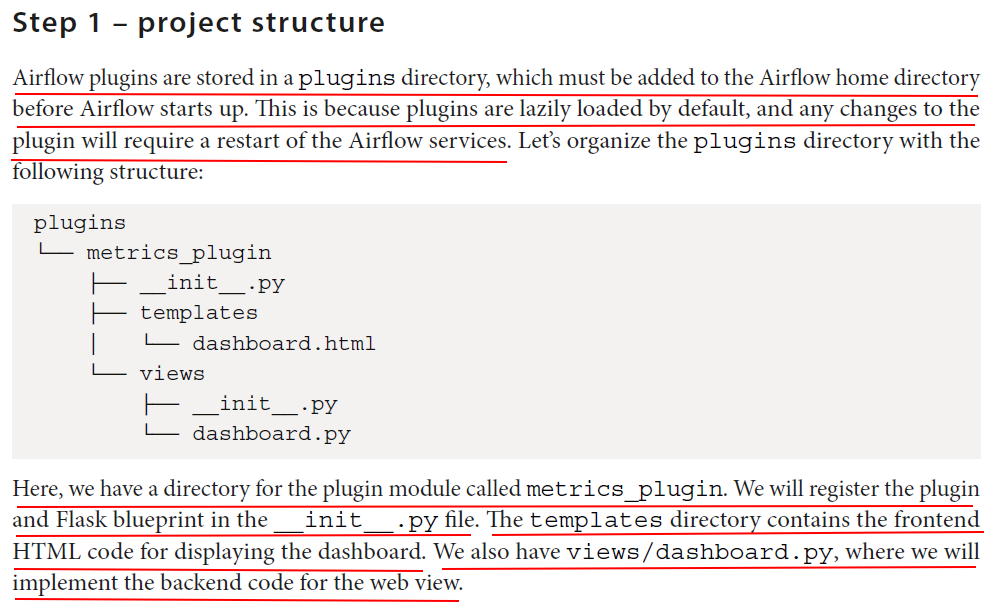

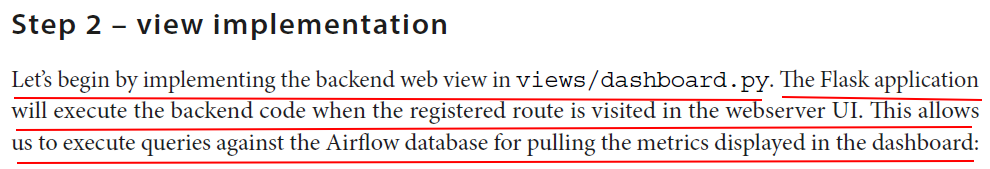

1. First, we will set up the imports that are required for the dashboard view:

from __future__ import annotations from typing import TYPE_CHECKING from airflow.auth.managers.models.resource_details import AccessView from airflow.utils.session import NEW_SESSION, provide_session from airflow.www.auth import has_access_view from flask_appbuilder import BaseView, expose from sqlalchemy import text if TYPE_CHECKING: from sqlalchemy.orm import Session

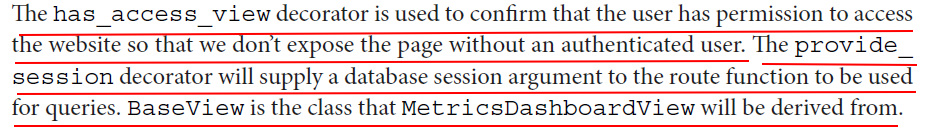

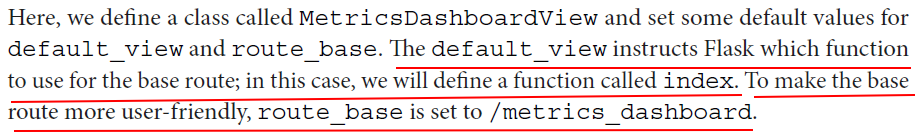

2. Let’s set up the MetricsDashboardView class to define the route for the web view:

class MetricsDashboardView(BaseView): """A Flask-AppBuilder View for a metrics dashboard""" default_view = "index" route_base = "/metrics_dashboard"

3. Finally, the index function will execute the queries we need to run against the Airflow database to provide our metrics:

from __future__ import annotations from typing import TYPE_CHECKING from airflow.auth.managers.models.resource_details import AccessView from airflow.utils.session import NEW_SESSION, provide_session from airflow.www.auth import has_access_view from flask_appbuilder import BaseView, expose from sqlalchemy import text if TYPE_CHECKING: from sqlalchemy.orm import Session class MetricsDashboardView(BaseView): """A Flask-AppBuilder View for a metrics dashboard""" default_view = "index" route_base = "/metrics_dashboard" @provide_session @expose("/") @has_access_view(AccessView.PLUGINS) def index(self, session: Session = NEW_SESSION): """Create dashboard view""" def interval(n: int): return f"now() - interval '{n} days'" dag_run_query = text( f""" SELECT dr.dag_id, SUM(CASE WHEN dr.state = 'success' AND dr.start_date > {interval(1)} THEN 1 ELSE 0 END) AS "1_day_success", SUM(CASE WHEN dr.state = 'failed' AND dr.start_date > {interval(1)} THEN 1 ELSE 0 END) AS "1_day_failed", SUM(CASE WHEN dr.state = 'success' AND dr.start_date > {interval(7)} THEN 1 ELSE 0 END) AS "7_days_success", SUM(CASE WHEN dr.state = 'failed' AND dr.start_date > {interval(7)} THEN 1 ELSE 0 END) AS "7_days_failed", SUM(CASE WHEN dr.state = 'success' AND dr.start_date > {interval(30)} THEN 1 ELSE 0 END) AS "30_days_success", SUM(CASE WHEN dr.state = 'failed' AND dr.start_date > {interval(30)} THEN 1 ELSE 0 END) AS "30_days_failed" FROM dag_run AS dr JOIN dag AS d ON dr.dag_id = d.dag_id WHERE d.is_paused != true GROUP BY dr.dag_id """ ) dag_run_stats = [dict(result) for result in session.execute(dag_run_query)] return self.render_template( "dashboard.html", title="Metrics Dashboard", dag_run_stats=dag_run_stats, )

{% extends base_template %}

{% block title %}

{{ title }}

{% endblock %}

{% block head_meta %}

{{ super() }}

{% endblock %}

Now we need to define the content block where the charts will be displayed. The HTML required for the content block is very simple since we will be rendering the charts with JavaScript:

{% block content %} <h2>{{ title }}</h2> <div class="container-fluid"> <div class="row"> <div class="col-lg-6 col-md-12"> <canvas id="successChart"></canvas> </div> <div class="col-lg-6 col-md-12"> <canvas id="failedChart"></canvas> </div> </div> </div> {% endblock %}

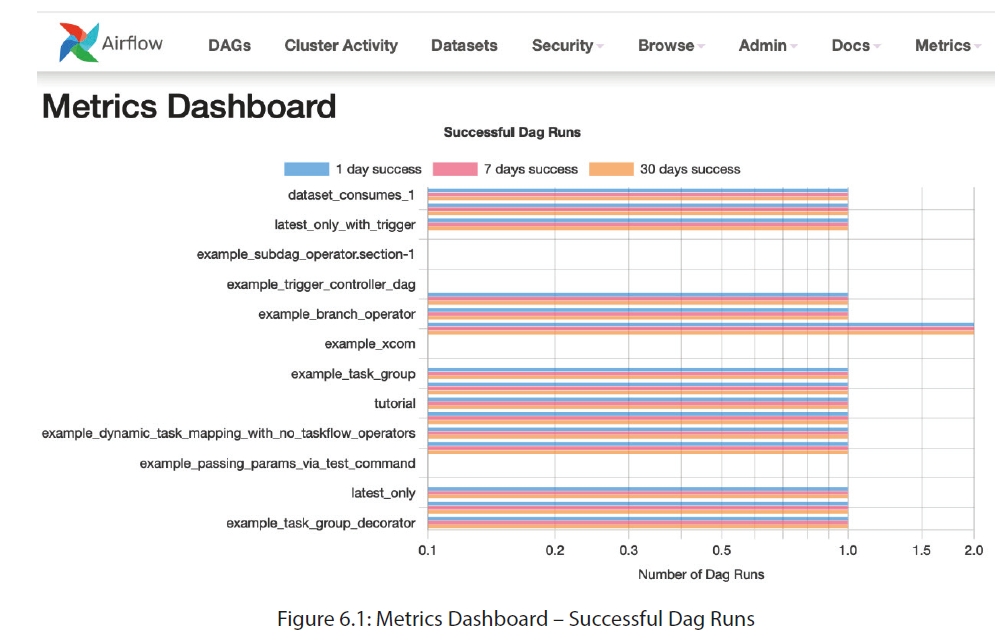

{% block tail %} {{ super() }} <script src="https://cdn.jsdelivr.net/npm/chart.js"></script> <script> const data = {{ dag_run_stats | tojson }}; new Chart( document.getElementById('successChart'), { type: 'bar', title: "Successful Dag Runs", data: { labels: data.map(row => row.dag_id), datasets: [ { label: "1 day success", data: data.map(row => row["1_day_success"]) }, { label: "7 days success", data: data.map(row => row["7_days_success"]) }, { label: "30 days success", data: data.map(row => row["30_days_success"]) } ] }, options: { responsive: true, indexAxis: 'y', scales: { x: { type: 'logarithmic', display: true, title: { display: true, text: "Number of Dag Runs" } } }, plugins: { title: { display: true, text: "Successful Dag Runs" } } } } ); new Chart( document.getElementById('failedChart'), { type: 'bar', data: { labels: data.map(row => row.dag_id), datasets: [ { label: "1 day failed", data: data.map(row => row["1_day_failed"]) }, { label: "7 days failed", data: data.map(row => row["7_days_failed"]) }, { label: "30 days failed", data: data.map(row => row["30_days_failed"]) } ] }, options: { responsive: true, indexAxis: "y", scales: { x: { type: "logarithmic", display: true, title: { display: true, text: "Number of Dag Runs" } } }, plugins: { title: { display: true, text: "Failed Dag Runs" } } } } ); </script> {% endblock %}

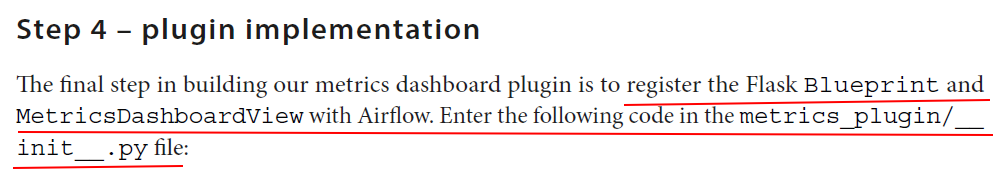

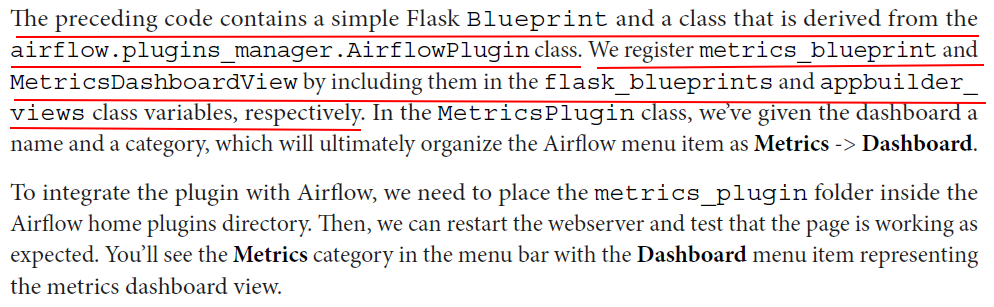

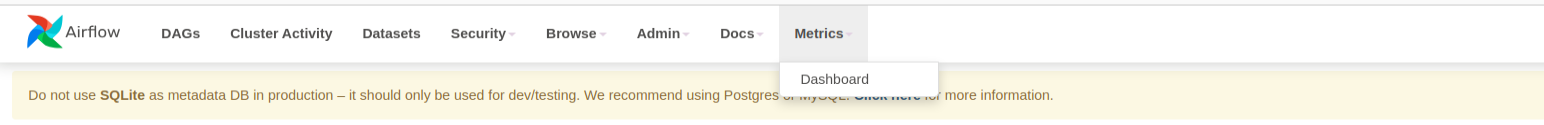

from __future__ import annotations from airflow.plugins_manager import AirflowPlugin from flask import Blueprint from plugins.metrics_plugin.views.dashboard import MetricsDashboardView # Creating a flask blueprint metrics_blueprint = Blueprint( "Metrics", __name__, template_folder="templates", static_folder="static", static_url_path="/static", ) class MetricsPlugin(AirflowPlugin): """Defining the plugin class""" name = "Metrics Dashboard Plugin" flask_blueprints = [metrics_blueprint] appbuilder_views = [ {"name": "Dashboard", "category": "Metrics", "view": MetricsDashboardView()} ]

Error messages:

webserver | return connection._execute_clauseelement(

webserver | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

webserver | File "/home/frank/venvs/my_airflow_project/.venv/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 1577, in _execute_clauseelement

webserver | ret = self._execute_context(

webserver | ^^^^^^^^^^^^^^^^^^^^^^

webserver | File "/home/frank/venvs/my_airflow_project/.venv/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 1953, in _execute_context

webserver | self._handle_dbapi_exception(

webserver | File "/home/frank/venvs/my_airflow_project/.venv/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 2134, in _handle_dbapi_exception

webserver | util.raise_(

webserver | File "/home/frank/venvs/my_airflow_project/.venv/lib/python3.12/site-packages/sqlalchemy/util/compat.py", line 211, in raise_

webserver | raise exception

webserver | File "/home/frank/venvs/my_airflow_project/.venv/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 1910, in _execute_context

webserver | self.dialect.do_execute(

webserver | File "/home/frank/venvs/my_airflow_project/.venv/lib/python3.12/site-packages/sqlalchemy/engine/default.py", line 736, in do_execute

webserver | cursor.execute(statement, parameters)

webserver | sqlalchemy.exc.OperationalError: (sqlite3.OperationalError) near "'1 days'": syntax error

webserver | [SQL:

webserver | SELECT

webserver | dr.dag_id,

webserver | SUM(CASE WHEN dr.state = 'success' AND dr.start_date > now() - interval '1 days' THEN 1 ELSE 0 END) AS "1_day_success",

webserver | SUM(CASE WHEN dr.state = 'failed' AND dr.start_date > now() - interval '1 days' THEN 1 ELSE 0 END) AS "1_day_failed",

webserver | SUM(CASE WHEN dr.state = 'success' AND dr.start_date > now() - interval '7 days' THEN 1 ELSE 0 END) AS "7_days_success",

webserver | SUM(CASE WHEN dr.state = 'failed' AND dr.start_date > now() - interval '7 days' THEN 1 ELSE 0 END) AS "7_days_failed",

webserver | SUM(CASE WHEN dr.state = 'success' AND dr.start_date > now() - interval '30 days' THEN 1 ELSE 0 END) AS "30_days_success",

webserver | SUM(CASE WHEN dr.state = 'failed' AND dr.start_date > now() - interval '30 days' THEN 1 ELSE 0 END) AS "30_days_failed"

webserver | FROM dag_run AS dr

webserver | JOIN dag AS d ON dr.dag_id = d.dag_id

webserver | WHERE d.is_paused != true

webserver | GROUP BY dr.dag_id

webserver | ]

webserver | (Background on this error at: https://sqlalche.me/e/14/e3q8)

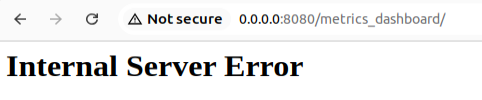

This is becuase the query was written with PostgreSQL syntax (dr.start_date > now() - interval '1 days'), but the default metadata database of Airflow is SQLite.

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

For Airflow's metadata database, the default engine is SQLite. This is because, by default, Airflow uses SQLite for local development or testing environments, unless explicitly configured to use another database backend like PostgreSQL or MySQL.

In the case of Airflow, if you don't specify a connection string in the airflow.cfg file, Airflow will use SQLite as the default.

The relevant setting is in the sql_alchemy_conn configuration key in airflow.cfg. If no database connection is configured, Airflow will fall back to the default SQLite engine, and the connection string will look something like:

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

To fix it, change the interval function using SQLite syntax as below:

def interval(n: int): return f"datetime('now', '-{n} days')"

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 震惊!C++程序真的从main开始吗?99%的程序员都答错了

· 【硬核科普】Trae如何「偷看」你的代码?零基础破解AI编程运行原理

· 单元测试从入门到精通

· 上周热点回顾(3.3-3.9)

· winform 绘制太阳,地球,月球 运作规律