```shell
rank@ZZHUBT:~$ pip install airflowctl

rank@ZZHUBT:~$ airflowctl init my_airflow_project --build-start
......
ebserver | [2025-01-18 20:46:08 +0800] [19140] [INFO] Starting gunicorn 23.0.0
webserver | [2025-01-18 20:46:08 +0800] [19140] [INFO] Listening at: http://0.0.0.0:8080 (19140)
webserver | [2025-01-18 20:46:08 +0800] [19140] [INFO] Using worker: sync
webserver | [2025-01-18 20:46:08 +0800] [19152] [INFO] Booting worker with pid: 19152
webserver | [2025-01-18 20:46:08 +0800] [19153] [INFO] Booting worker with pid: 19153
standalone | Airflow is ready
standalone | Login with username: admin  password: aszEnNbvzC8dPmV4
......
```
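Once the log prints "Airflow is ready", the webserver is listening on port 8080 and you can log in with the printed admin credentials. To confirm from a script that the components are up, here is a minimal sketch. It assumes the Airflow 2.x webserver's `/health` endpoint, which returns JSON with a per-component `status` field such as `"healthy"`; `fetch_health` and `unhealthy_components` are hypothetical helper names, not part of airflowctl or Airflow.

```python
import json
import urllib.request


def fetch_health(base_url: str = "http://localhost:8080") -> dict:
    """GET the webserver's /health endpoint (assumed Airflow 2.x) and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/health", timeout=5) as resp:
        return json.load(resp)


def unhealthy_components(payload: dict) -> list[str]:
    """Return the sorted names of components whose status is not 'healthy'."""
    return sorted(
        name
        for name, info in payload.items()
        if isinstance(info, dict) and info.get("status") != "healthy"
    )
```

With the standalone instance running, `unhealthy_components(fetch_health())` returning an empty list means every reported component (for example the metadata database and the scheduler) is healthy.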

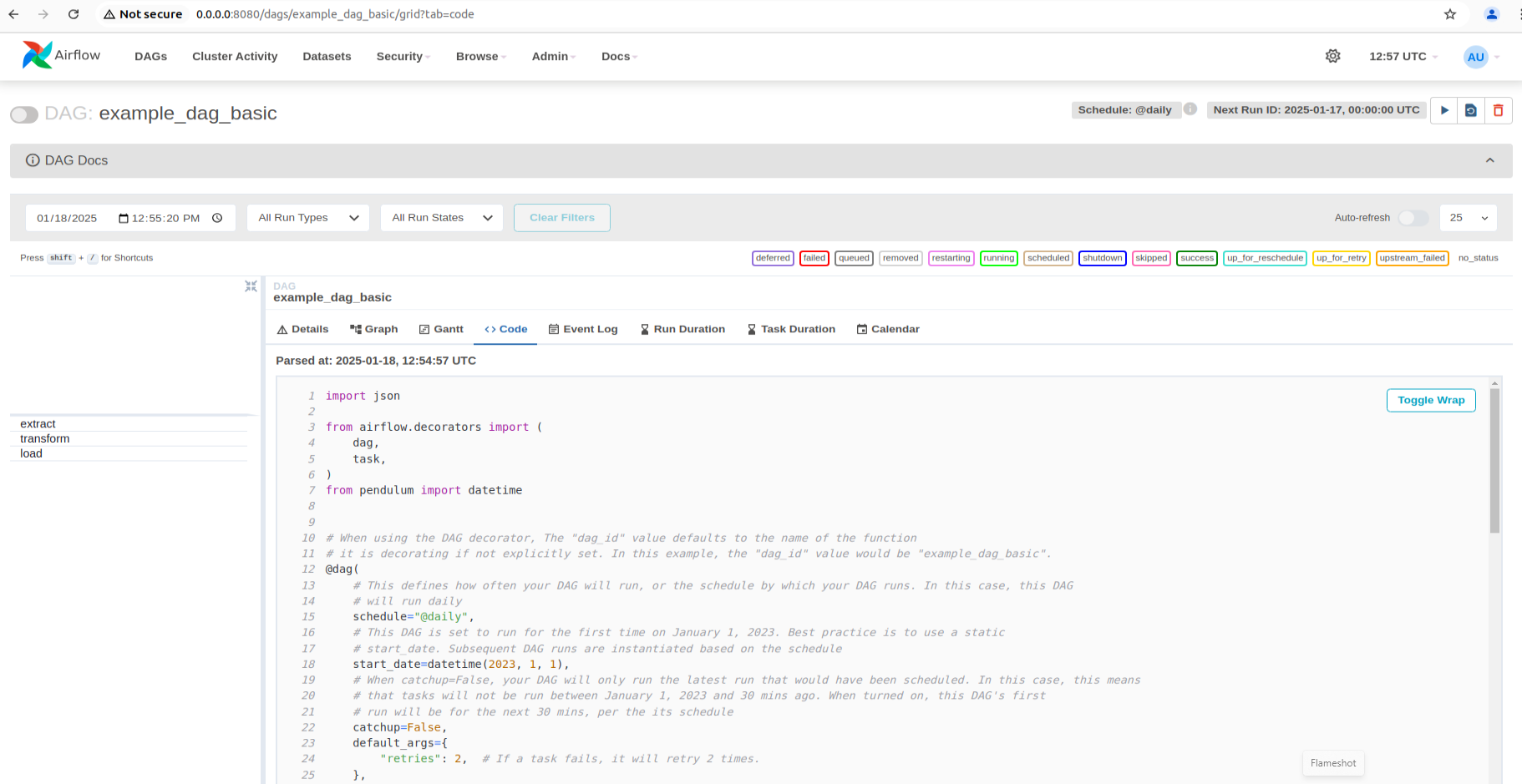

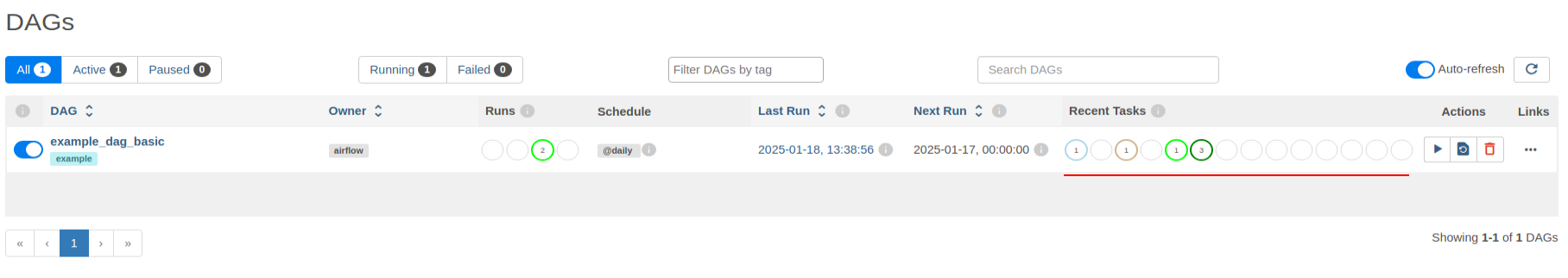

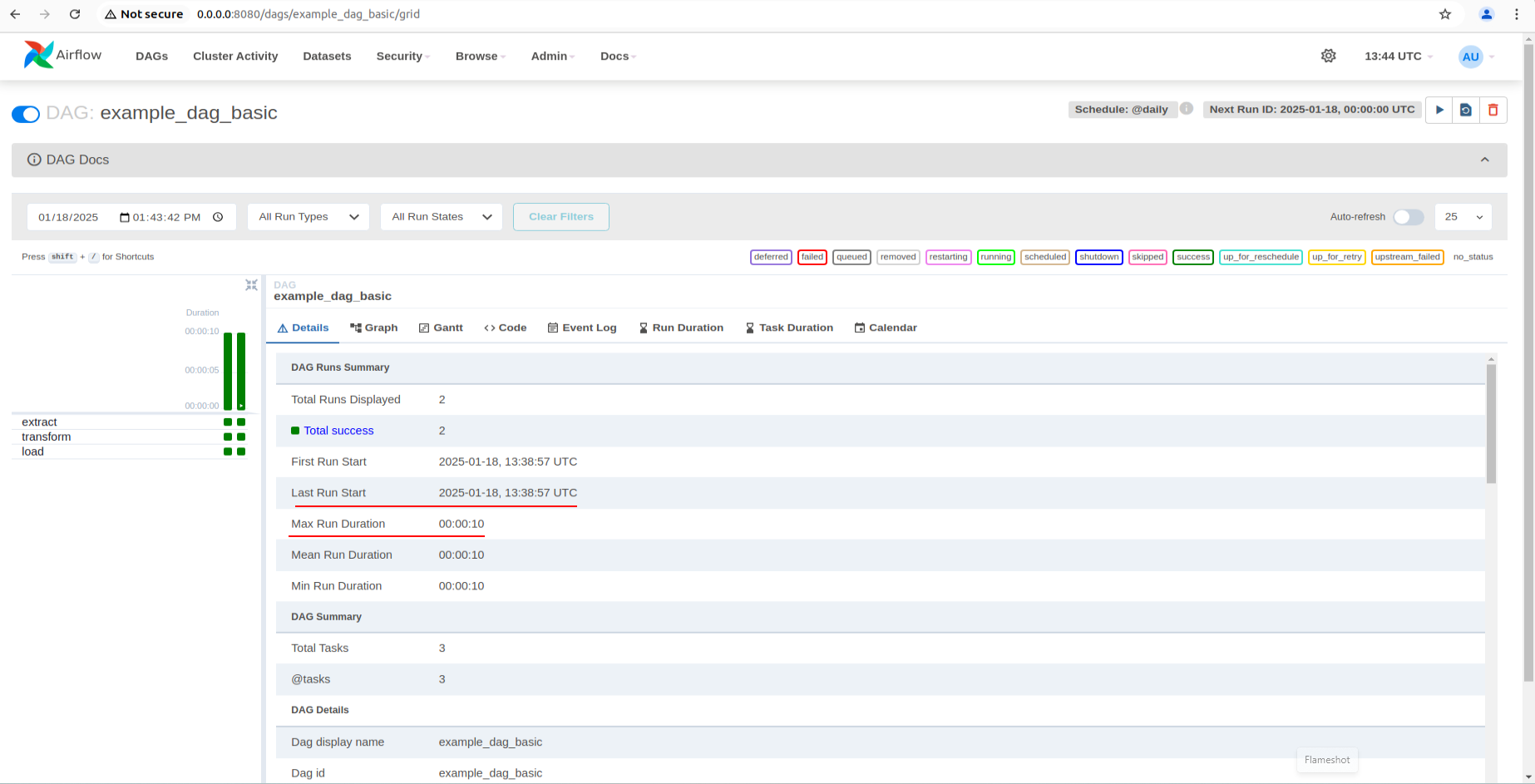

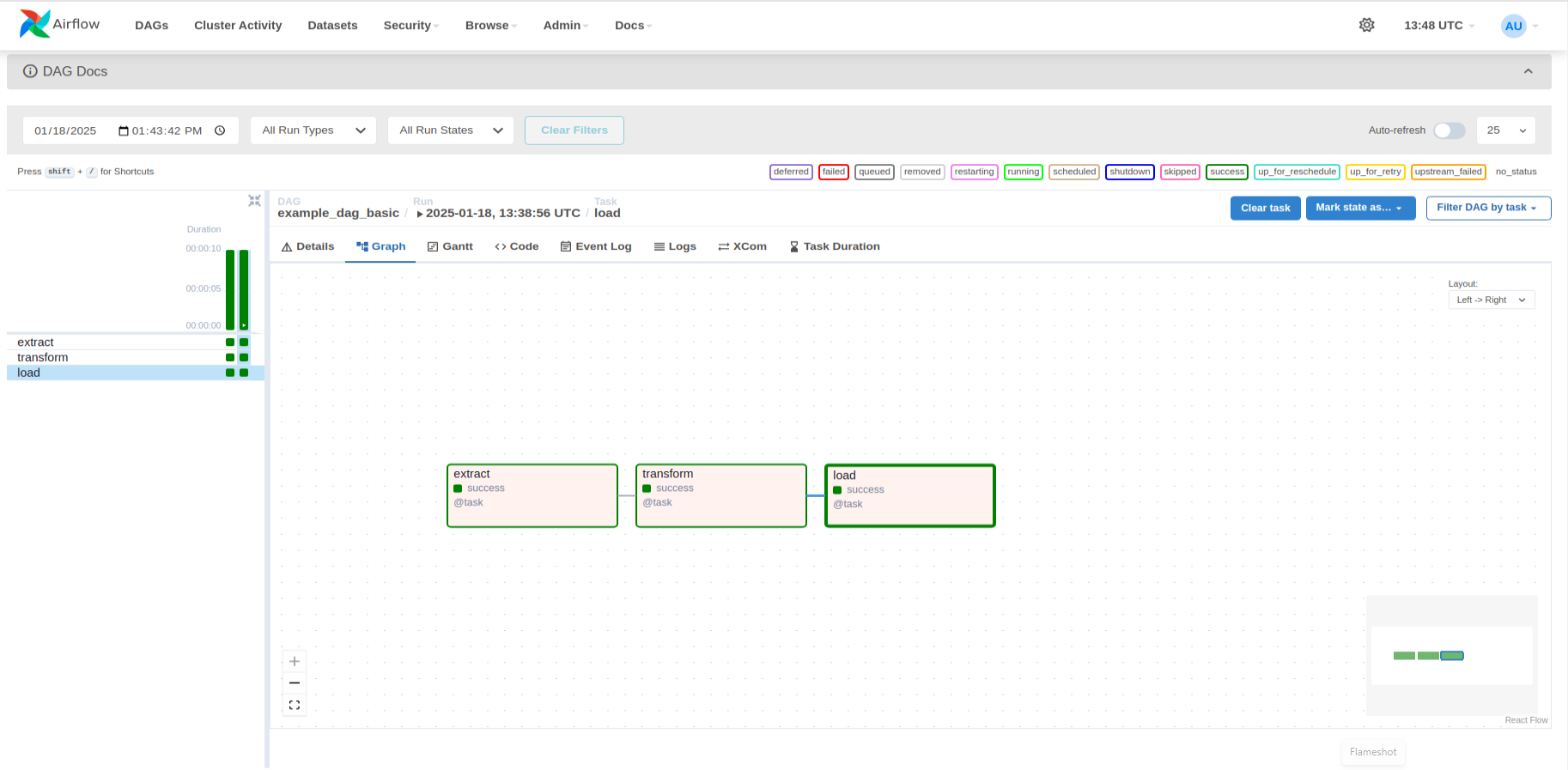

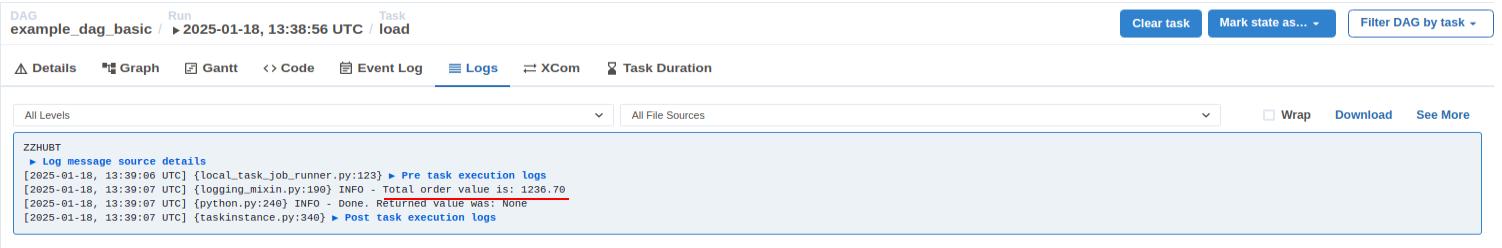

```python
import json

from airflow.decorators import (
    dag,
    task,
)
from pendulum import datetime


# When using the DAG decorator, the "dag_id" value defaults to the name of the function
# it is decorating if not explicitly set. In this example, the "dag_id" value would be "example_dag_basic".
@dag(
    # This defines how often your DAG will run, or the schedule by which your DAG runs. In this case,
    # this DAG will run daily.
    schedule="@daily",
    # This DAG is set to run for the first time on January 1, 2023. Best practice is to use a static
    # start_date. Subsequent DAG runs are instantiated based on the schedule.
    start_date=datetime(2023, 1, 1),
    # When catchup=False, the scheduler only creates a run for the most recent daily interval, so the
    # intervals between January 1, 2023 and that one are not backfilled. With catchup=True, a run would
    # be created for every missed daily interval since start_date.
    catchup=False,
    default_args={
        "retries": 2,  # If a task fails, it will retry 2 times.
    },
    tags=["example"],  # If set, this tag is shown in the DAG view of the Airflow UI.
)
def example_dag_basic():
    """
    ### Basic ETL Dag
    This is a simple ETL data pipeline example that demonstrates the use of
    the TaskFlow API using three simple tasks for extract, transform, and load.
    For more information on Airflow's TaskFlow API, reference documentation here:
    https://airflow.apache.org/docs/apache-airflow/stable/tutorial_taskflow_api.html
    """

    @task()
    def extract():
        """
        #### Extract task
        A simple "extract" task to get data ready for the rest of the
        pipeline. In this case, getting data is simulated by reading from a
        hardcoded JSON string.
        """
        data_string = '{"1001": 301.27, "1002": 433.21, "1003": 502.22}'

        order_data_dict = json.loads(data_string)
        return order_data_dict

    @task(multiple_outputs=True)  # multiple_outputs=True unrolls dictionaries into separate XCom values
    def transform(order_data_dict: dict):
        """
        #### Transform task
        A simple "transform" task which takes in the collection of order data and
        computes the total order value.
        """
        total_order_value = 0

        for value in order_data_dict.values():
            total_order_value += value

        return {"total_order_value": total_order_value}

    @task()
    def load(total_order_value: float):
        """
        #### Load task
        A simple "load" task that takes in the result of the "transform" task and
        prints it out, instead of saving it for end-user review.
        """
        print(f"Total order value is: {total_order_value:.2f}")

    order_data = extract()
    order_summary = transform(order_data)
    load(order_summary["total_order_value"])


example_dag_basic()
```

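The `catchup` setting is easier to picture with concrete dates. The sketch below is a simplified model, assuming Airflow 2.x data-interval semantics for a `@daily` schedule and ignoring timezones: each run covers `[logical_date, logical_date + 1 day)` and is only created after that interval has ended. `daily_logical_dates` is a hypothetical helper for illustration, not an Airflow API.

```python
from datetime import datetime, timedelta


def daily_logical_dates(start: datetime, now: datetime, catchup: bool) -> list[datetime]:
    """Simplified model of which @daily runs the scheduler creates when the DAG is enabled at `now`.

    Each run covers the data interval [logical_date, logical_date + 1 day)
    and is only created once that interval has fully ended.
    """
    interval = timedelta(days=1)
    complete: list[datetime] = []
    logical = start
    # Collect every daily interval whose end is not in the future.
    while logical + interval <= now:
        complete.append(logical)
        logical += interval
    if catchup:
        return complete  # backfill every missed interval since start_date
    return complete[-1:]  # only the most recent complete interval (empty if none)


if __name__ == "__main__":
    start = datetime(2023, 1, 1)
    now = datetime(2023, 1, 4, 12)
    print(daily_logical_dates(start, now, catchup=True))
    print(daily_logical_dates(start, now, catchup=False))
```

For a DAG with `start_date` 2023-01-01 that is first enabled at 2023-01-04 12:00, `catchup=True` yields runs for January 1, 2 and 3, whereas `catchup=False` yields only the January 3 run. In both cases later runs then follow the daily schedule.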
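Next, let's declare our own DAG. It sets the same DAG-level fields as the example above (schedule, start_date, catchup, default_args and tags), reuses the three ETL tasks, and wraps the extract step in a task group together with an EmptyOperator: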

```python
import json

from airflow.decorators import (
    dag,
    task,
    task_group,
)
from airflow.operators.empty import EmptyOperator
from pendulum import datetime


@dag(
    schedule="@daily",
    start_date=datetime(2023, 1, 1),
    catchup=False,
    default_args={
        "retries": 2,
    },
    tags=["zzh"],
)
def zzh_dag():
    @task()
    def extract():
        data_string = '{"1001": 301.27, "1002": 433.21, "1003": 502.22}'
        order_data_dict = json.loads(data_string)
        return order_data_dict

    @task(multiple_outputs=True)  # multiple_outputs=True unrolls dictionaries into separate XCom values
    def transform(order_data_dict: dict):
        total_order_value = 0
        for value in order_data_dict.values():
            total_order_value += value
        return {"total_order_value": total_order_value}

    @task()
    def load(total_order_value: float):
        print(f"Total order value is: {total_order_value:.2f}")

    # Tasks created inside the group get the group id as a prefix,
    # e.g. "extraction_task_group.extract" and "extraction_task_group.task_2".
    @task_group(group_id='extraction_task_group')
    def tg1():
        t1 = extract()
        t2 = EmptyOperator(task_id='task_2')
        t1 >> t2
        # Returning t1 makes tg1() return extract's output (an XComArg),
        # so it can be passed straight into transform below.
        return t1

    order_data = tg1()
    order_summary = transform(order_data)
    # These dependencies are already implied by passing task outputs as arguments;
    # the explicit >> chain only makes them visible.
    order_data >> order_summary >> load(order_summary["total_order_value"])


zzh_dag()
```
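To see which graph the `zzh_dag` wiring produces, here is a plain-Python model that needs no Airflow install. `Graph` and `Node` are hypothetical classes, not Airflow APIs; they only mimic the fact that Airflow's `>>` records a downstream dependency and returns the right-hand operand, which is what makes chains like `a >> b >> c` work. The task ids assume Airflow's default behaviour of prefixing tasks inside a task group with the group id.

```python
from graphlib import TopologicalSorter


class Graph:
    """Hypothetical stand-in for a DAG: maps each task_id to its upstream task_ids."""

    def __init__(self) -> None:
        self.upstream: dict[str, set[str]] = {}

    def task(self, task_id: str) -> "Node":
        self.upstream.setdefault(task_id, set())
        return Node(self, task_id)


class Node:
    """Hypothetical stand-in for a task; it only models the >> operator."""

    def __init__(self, graph: Graph, task_id: str) -> None:
        self.graph = graph
        self.task_id = task_id

    def __rshift__(self, other: "Node") -> "Node":
        # Record self as upstream of other, then return other so that
        # a >> b >> c evaluates as (a >> b) >> c, i.e. a -> b and b -> c.
        self.graph.upstream.setdefault(other.task_id, set()).add(self.task_id)
        return other


def build_zzh_graph() -> Graph:
    g = Graph()
    group = "extraction_task_group"
    extract = g.task(f"{group}.extract")  # group id is prefixed to the task id
    task_2 = g.task(f"{group}.task_2")
    transform = g.task("transform")
    load = g.task("load")

    extract >> task_2  # t1 >> t2 inside tg1
    extract >> transform >> load  # the data-passing dependencies
    return g


if __name__ == "__main__":
    graph = build_zzh_graph()
    # static_order() yields every task only after all of its upstream tasks.
    print(list(TopologicalSorter(graph.upstream).static_order()))
```

The model makes one detail of the DAG visible: `task_2` ends up as a leaf, because `transform` depends only on `extract` and therefore does not wait for `task_2`.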
