frank@ZZHUBT:~$ docker pull jupyter/pyspark-notebook

docker run --name pyspark-notebook -p 8888:8888 -v ~/dkvols/pyspark-notebook/:/home/jovyan/work/ -d jupyter/pyspark-notebook:latest

frank@ZZHUBT:~$ docker logs pyspark-notebook

Entered start.sh with args: jupyter lab

Running hooks in: /usr/local/bin/start-notebook.d as uid: 1000 gid: 100

Done running hooks in: /usr/local/bin/start-notebook.d

Running hooks in: /usr/local/bin/before-notebook.d as uid: 1000 gid: 100

Sourcing shell script: /usr/local/bin/before-notebook.d/spark-config.sh

Done running hooks in: /usr/local/bin/before-notebook.d

Executing the command: jupyter lab

[I 2025-01-17 12:26:41.343 ServerApp] Package jupyterlab took 0.0000s to import

[I 2025-01-17 12:26:41.349 ServerApp] Package jupyter_lsp took 0.0053s to import

[W 2025-01-17 12:26:41.349 ServerApp] A `_jupyter_server_extension_points` function was not found in jupyter_lsp. Instead, a `_jupyter_server_extension_paths` function was found and will be used for now. This function name will be deprecated in future releases of Jupyter Server.

[I 2025-01-17 12:26:41.349 ServerApp] Package jupyter_server_mathjax took 0.0006s to import

[I 2025-01-17 12:26:41.352 ServerApp] Package jupyter_server_terminals took 0.0025s to import

[I 2025-01-17 12:26:41.368 ServerApp] Package jupyterlab_git took 0.0152s to import

[I 2025-01-17 12:26:41.369 ServerApp] Package nbclassic took 0.0013s to import

[W 2025-01-17 12:26:41.370 ServerApp] A `_jupyter_server_extension_points` function was not found in nbclassic. Instead, a `_jupyter_server_extension_paths` function was found and will be used for now. This function name will be deprecated in future releases of Jupyter Server.

[I 2025-01-17 12:26:41.371 ServerApp] Package nbdime took 0.0000s to import

[I 2025-01-17 12:26:41.371 ServerApp] Package notebook took 0.0000s to import

[I 2025-01-17 12:26:41.372 ServerApp] Package notebook_shim took 0.0000s to import

[W 2025-01-17 12:26:41.372 ServerApp] A `_jupyter_server_extension_points` function was not found in notebook_shim. Instead, a `_jupyter_server_extension_paths` function was found and will be used for now. This function name will be deprecated in future releases of Jupyter Server.

[I 2025-01-17 12:26:41.373 ServerApp] jupyter_lsp | extension was successfully linked.

[I 2025-01-17 12:26:41.376 ServerApp] jupyter_server_mathjax | extension was successfully linked.

[I 2025-01-17 12:26:41.380 ServerApp] jupyter_server_terminals | extension was successfully linked.

[I 2025-01-17 12:26:41.383 ServerApp] jupyterlab | extension was successfully linked.

[I 2025-01-17 12:26:41.383 ServerApp] jupyterlab_git | extension was successfully linked.

[I 2025-01-17 12:26:41.385 ServerApp] nbclassic | extension was successfully linked.

[I 2025-01-17 12:26:41.385 ServerApp] nbdime | extension was successfully linked.

[I 2025-01-17 12:26:41.388 ServerApp] notebook | extension was successfully linked.

[I 2025-01-17 12:26:41.389 ServerApp] Writing Jupyter server cookie secret to /home/jovyan/.local/share/jupyter/runtime/jupyter_cookie_secret

[I 2025-01-17 12:26:41.508 ServerApp] notebook_shim | extension was successfully linked.

[I 2025-01-17 12:26:41.544 ServerApp] notebook_shim | extension was successfully loaded.

[I 2025-01-17 12:26:41.546 ServerApp] jupyter_lsp | extension was successfully loaded.

[I 2025-01-17 12:26:41.546 ServerApp] jupyter_server_mathjax | extension was successfully loaded.

[I 2025-01-17 12:26:41.547 ServerApp] jupyter_server_terminals | extension was successfully loaded.

[I 2025-01-17 12:26:41.548 LabApp] JupyterLab extension loaded from /opt/conda/lib/python3.11/site-packages/jupyterlab

[I 2025-01-17 12:26:41.548 LabApp] JupyterLab application directory is /opt/conda/share/jupyter/lab

[I 2025-01-17 12:26:41.549 LabApp] Extension Manager is 'pypi'.

[I 2025-01-17 12:26:41.551 ServerApp] jupyterlab | extension was successfully loaded.

[I 2025-01-17 12:26:41.553 ServerApp] jupyterlab_git | extension was successfully loaded.

[I 2025-01-17 12:26:41.555 ServerApp] nbclassic | extension was successfully loaded.

[I 2025-01-17 12:26:41.587 ServerApp] nbdime | extension was successfully loaded.

[I 2025-01-17 12:26:41.589 ServerApp] notebook | extension was successfully loaded.

[I 2025-01-17 12:26:41.589 ServerApp] Serving notebooks from local directory: /home/jovyan

[I 2025-01-17 12:26:41.589 ServerApp] Jupyter Server 2.8.0 is running at:

[I 2025-01-17 12:26:41.589 ServerApp] http://620453e8d667:8888/lab?token=3c8b85083b99572408a3f51d6f23eb8d591c3434130c5f3c

[I 2025-01-17 12:26:41.589 ServerApp] http://127.0.0.1:8888/lab?token=3c8b85083b99572408a3f51d6f23eb8d591c3434130c5f3c

[I 2025-01-17 12:26:41.589 ServerApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[C 2025-01-17 12:26:41.591 ServerApp]

To access the server, open this file in a browser:

file:///home/jovyan/.local/share/jupyter/runtime/jpserver-7-open.html

Or copy and paste one of these URLs:

http://620453e8d667:8888/lab?token=3c8b85083b99572408a3f51d6f23eb8d591c3434130c5f3c

http://127.0.0.1:8888/lab?token=3c8b85083b99572408a3f51d6f23eb8d591c3434130c5f3c

[I 2025-01-17 12:26:41.872 ServerApp] Skipped non-installed server(s): bash-language-server, dockerfile-language-server-nodejs, javascript-typescript-langserver, jedi-language-server, julia-language-server, pyright, python-language-server, python-lsp-server, r-languageserver, sql-language-server, texlab, typescript-language-server, unified-language-server, vscode-css-languageserver-bin, vscode-html-languageserver-bin, vscode-json-languageserver-bin, yaml-language-server
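The log above contains the tokenized URL needed to open JupyterLab from the host (the `127.0.0.1:8888` one, since port 8888 is published). As a minimal sketch, the URL can be pulled out of the log text with a regular expression. The helper name `find_login_url` and the pattern are illustrative assumptions, not part of Jupyter or Docker; the sample text is copied from the log above.

```python
import re

# Assumed pattern: matches the localhost JupyterLab URL with a hex token,
# as it appears in the log output above.
URL_PATTERN = re.compile(r"http://127\.0\.0\.1:\d+/lab\?token=[0-9a-f]+")


def find_login_url(log_text):
    """Return the first tokenized localhost URL in log_text, or None."""
    match = URL_PATTERN.search(log_text)
    return match.group(0) if match else None


# Two lines copied from the container log shown above.
sample_log = (
    "[I 2025-01-17 12:26:41.589 ServerApp] "
    "http://620453e8d667:8888/lab?token=3c8b85083b99572408a3f51d6f23eb8d591c3434130c5f3c\n"
    "[I 2025-01-17 12:26:41.589 ServerApp] "
    "http://127.0.0.1:8888/lab?token=3c8b85083b99572408a3f51d6f23eb8d591c3434130c5f3c\n"
)

print(find_login_url(sample_log))
```

In practice the text could come from running `docker logs pyspark-notebook` through `subprocess.run(..., capture_output=True, text=True)`. It is probably safest to search both the captured stdout and stderr, since Jupyter typically writes its log messages to stderr.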

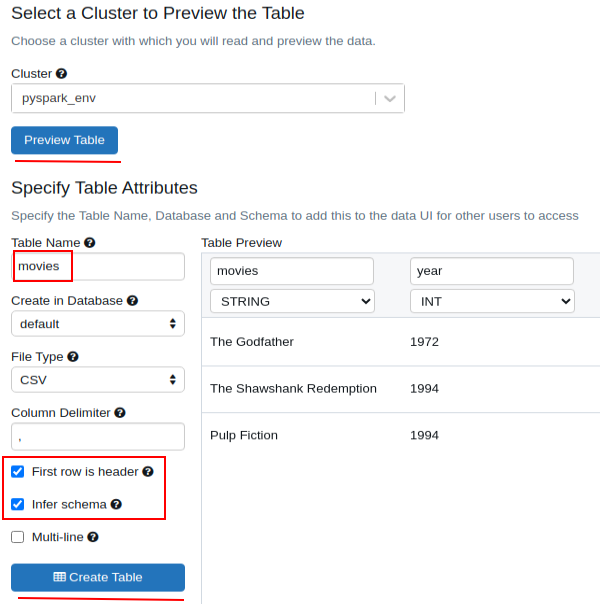

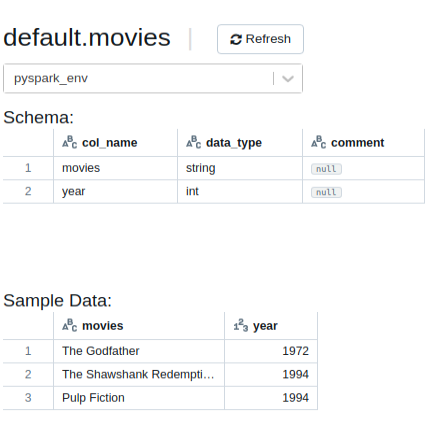

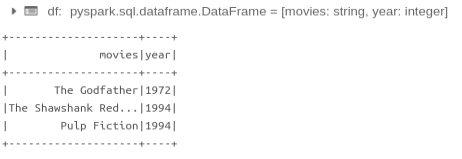

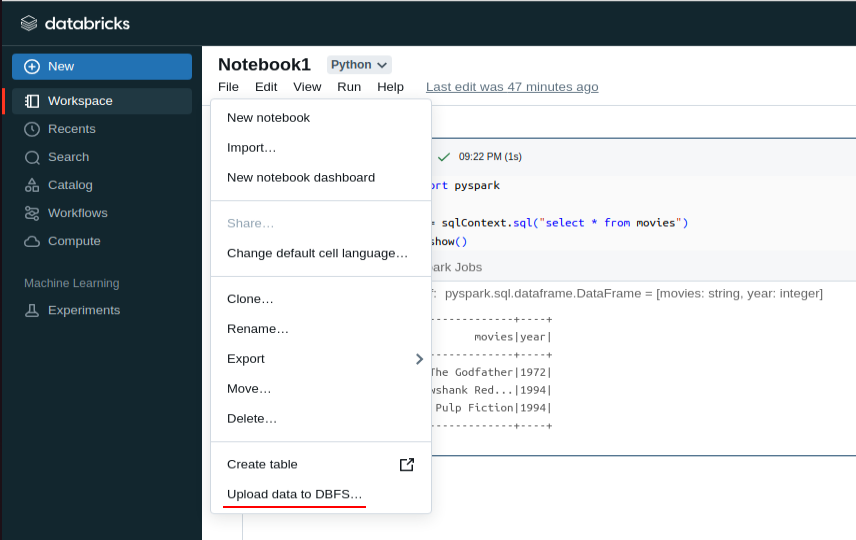

    import pyspark

    # Assumes `sqlContext` and a table or view named `movies` already exist in this session.
    df = sqlContext.sql("select * from movies")
    df.show()

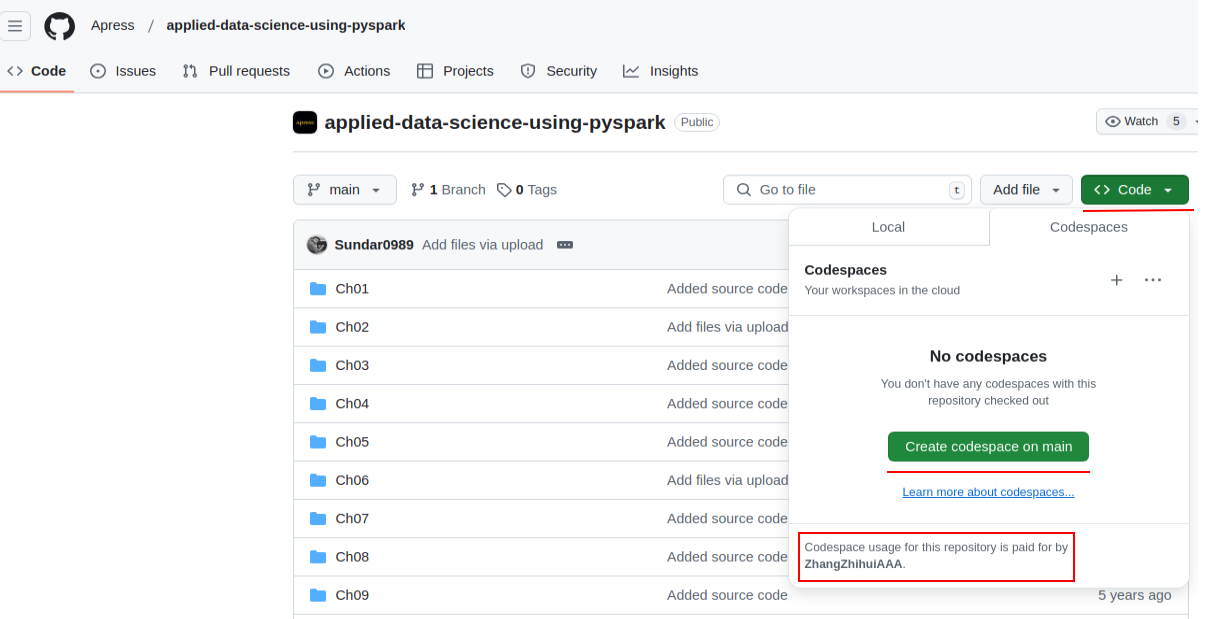

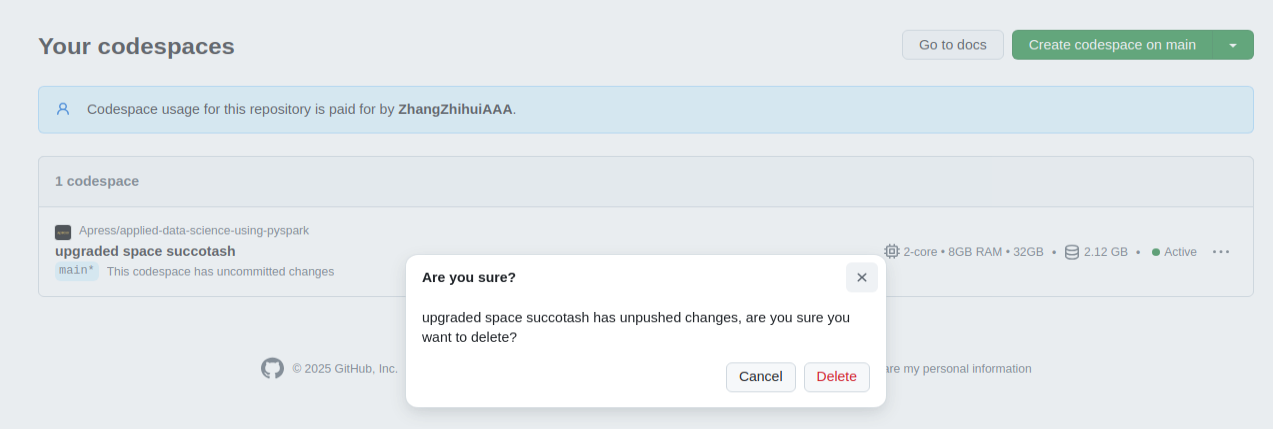

Codespaces are ready-to-use, cloud-based development environments offered by GitHub. With a few clicks, code hosted on GitHub can be run in a browser-based Visual Studio Code (VS Code) editor. Personal GitHub accounts can use Codespaces for free, subject to usage quotas. For details, see https://docs.github.com/en/codespaces/overview.

Sign up for a GitHub account (if you don’t have one) and log in. Navigate to the GitHub repository associated with this book: https://github.com/Apress/applied-data-science-using-pyspark.

Select "Create codespace on main" to launch VS Code in the browser:

    from pyspark.sql import SparkSession

    spark = SparkSession.builder.getOrCreate()
    df = spark.read.csv("data/C01/movies.csv", header=True, sep=',')
    df.show()

    +--------------------+----+
    |              movies|year|
    +--------------------+----+
    |       The Godfather|1972|
    |The Shawshank Red...|1994|
    |        Pulp Fiction|1994|
    +--------------------+----+

    import random

    from pyspark import SparkContext

    sc = SparkContext.getOrCreate()
    NUM_SAMPLES = 100000000

    # Function to check if a random point in the unit square lies inside the unit circle
    # (the argument p is only the sample index and is not used)
    def inside(p):
        x, y = random.random(), random.random()
        return x * x + y * y < 1

    # parallelize the computation
    count = sc.parallelize(range(0, NUM_SAMPLES)).filter(inside).count()

    # calculate the estimated value of pi
    pi = 4 * count / NUM_SAMPLES
    print("Pi is roughly", pi)

Output:

Pi is roughly 3.14128788
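The estimate works because a point drawn uniformly from the unit square lands inside the quarter circle x² + y² < 1 with probability π/4, so 4 × (hits / samples) approaches π as the sample count grows. The following is a minimal single-process sketch of the same idea without Spark, useful for checking the logic on a small sample. The function name `estimate_pi` and the `seed` parameter are illustrative assumptions, not part of the original example.

```python
import random


def estimate_pi(num_samples, seed=0):
    """Monte Carlo estimate of pi from num_samples random points."""
    rng = random.Random(seed)  # seeded generator so runs are repeatable
    hits = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        # Count points that fall inside the quarter circle of radius 1.
        if x * x + y * y < 1:
            hits += 1
    # The hit fraction approximates pi / 4.
    return 4 * hits / num_samples


print("Pi is roughly", estimate_pi(100_000))
```

The Spark version distributes the same counting step across workers with `parallelize(...).filter(inside).count()`. The sketch above simply runs that loop in one process, so it is only practical for much smaller sample counts.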
