K-Nearest Neighbors with scikit-learn: MNIST Handwritten Digit Recognition with Over 97% Accuracy

1. Import the required libraries

```python
from sklearn.datasets import fetch_openml
import numpy as np
from sklearn.neighbors import KNeighborsClassifier
from sklearn.metrics import accuracy_score
```

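2. Set a random seed so that results are reproducible. (k-NN training and prediction are themselves deterministic, so this is a precaution rather than a requirement.)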

```python
np.random.seed(42)
```

3. Fetch the MNIST dataset and convert its labels from strings to integers

```python
mnist = fetch_openml("mnist_784", version=1, as_frame=False)
X, y = mnist['data'], mnist['target']
y = y.astype(np.uint8)  # labels arrive as strings such as '5'
```
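fetch_openml returns the MNIST labels as strings, which is why the cast to an integer type is needed. A tiny illustration of what that cast does; the five labels below are made up for the example, not read from MNIST:

```python
import numpy as np

# Hypothetical sample mimicking fetch_openml's MNIST targets,
# which arrive as strings such as '5' in an object array.
y_str = np.array(['5', '0', '4', '1', '9'], dtype=object)

# astype(np.uint8) parses each string into an unsigned 8-bit integer;
# digits 0-9 fit comfortably in the 0-255 range.
y = y_str.astype(np.uint8)

print(y.dtype)  # uint8
print(y)        # [5 0 4 1 9]
```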

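4. Split the data into training and test sets (MNIST's first 60,000 images are the standard training set and the last 10,000 the test set)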

```python
X_train, X_test, y_train, y_test = X[:60000], X[60000:], y[:60000], y[60000:]
```

5. Train the model and evaluate it

```python
knn_clf = KNeighborsClassifier()  # defaults: n_neighbors=5, weights='uniform'
knn_clf.fit(X_train, y_train)
y_test_pred = knn_clf.predict(X_test)
print(accuracy_score(y_test, y_test_pred))
```

The model reaches an accuracy of 0.9688 on the test set, just short of the 97% target.
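The fit/predict calls hide how k-NN actually classifies a digit: find the k training images closest to the query and take a majority vote of their labels. A minimal sketch of that idea, assuming uniform weights and Euclidean distance (the KNeighborsClassifier defaults). knn_predict is a hypothetical helper, not part of scikit-learn, and the sketch runs on scikit-learn's small bundled load_digits dataset instead of MNIST so it finishes in seconds:

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.neighbors import KNeighborsClassifier


def knn_predict(X_train, y_train, X_query, k=5):
    """Hypothetical helper: uniform-weight k-NN with Euclidean distance."""
    # Squared Euclidean distance from every query row to every training row,
    # expanded as |q|^2 - 2 q.x + |x|^2 to avoid an explicit double loop.
    d2 = (
        (X_query ** 2).sum(axis=1)[:, None]
        - 2.0 * X_query @ X_train.T
        + (X_train ** 2).sum(axis=1)[None, :]
    )
    # Column indices of the k smallest distances in each row.
    nearest = np.argsort(d2, axis=1, kind="stable")[:, :k]
    # Majority vote over the neighbors' labels; argmax picks the smallest label on ties.
    return np.array([np.bincount(y_train[row]).argmax() for row in nearest])


# load_digits: 1,797 8x8 digit images, used here as a stand-in for MNIST.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = X[:1500], X[1500:], y[:1500], y[1500:]

ours = knn_predict(X_train, y_train, X_test, k=5)
sk = KNeighborsClassifier(n_neighbors=5).fit(X_train, y_train).predict(X_test)

print("our accuracy:    ", np.mean(ours == y_test))
print("sklearn accuracy:", np.mean(sk == y_test))
print("agreement:       ", np.mean(ours == sk))
```

np.mean(pred == y_test) is the same quantity accuracy_score reports. The agreement with scikit-learn may fall slightly below 1, because when several training points lie at exactly the same distance, the two implementations can order them differently.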

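6. Tune the hyperparameters weights (default 'uniform') and n_neighbors (default 5). Because accuracy tends to change smoothly as n_neighbors varies, it makes sense to search values close to the default, so the candidate values for n_neighbors are 3, 4 and 6.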
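The two weights settings differ in how neighbors vote: with 'uniform' every neighbor counts once, while with 'distance' each vote is weighted by the inverse of its distance, so a very close neighbor can outvote several distant ones. A toy 1-D example (the points are invented for illustration) where the two settings disagree:

```python
from sklearn.neighbors import KNeighborsClassifier

# Toy 1-D training set: one class-0 point very close to the query,
# two class-1 points farther away.
X_train = [[0.1], [1.0], [1.1]]
y_train = [0, 1, 1]
query = [[0.0]]

preds = {}
for weights in ("uniform", "distance"):
    clf = KNeighborsClassifier(n_neighbors=3, weights=weights).fit(X_train, y_train)
    preds[weights] = int(clf.predict(query)[0])

print(preds)  # {'uniform': 1, 'distance': 0}
# uniform: one vote per neighbor, so class 1 wins 2 to 1.
# distance: votes weighted by 1/d, so class 0 gets 1/0.1 = 10 and
# class 1 gets 1/1.0 + 1/1.1 ≈ 1.91.
```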

```python
from sklearn.datasets import fetch_openml
import numpy as np
from sklearn.neighbors import KNeighborsClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.metrics import accuracy_score

np.random.seed(42)
mnist = fetch_openml("mnist_784", version=1, as_frame=False)
X, y = mnist['data'], mnist['target']
y = y.astype(np.uint8)
X_train, X_test, y_train, y_test = X[:60000], X[60000:], y[:60000], y[60000:]

# 2 weighting schemes x 3 neighbor counts = 6 candidates, each scored with 5-fold CV
param_grid = [{'weights': ["uniform", "distance"], 'n_neighbors': [3, 4, 6]}]
knn_clf = KNeighborsClassifier()
grid_search = GridSearchCV(knn_clf, param_grid, cv=5, verbose=3)
grid_search.fit(X_train, y_train)

# predict() uses the best candidate, refitted on the full training set
y_pred = grid_search.predict(X_test)
print(accuracy_score(y_test, y_pred))
```

As the figure shows, the tuned model reaches 97.14% accuracy on the test set, meeting the 97% target.

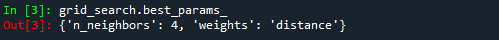
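Running this search on full MNIST is slow, since it fits 6 candidates on 5 folds each. To experiment with the same grid in seconds, here is a sketch that swaps in scikit-learn's bundled load_digits dataset (1,797 8×8 images) as a stand-in; the 1500/297 split is an arbitrary choice, and the resulting accuracy is not comparable with the MNIST figure above:

```python
from sklearn.datasets import load_digits
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier

# Small bundled dataset standing in for MNIST (no download needed).
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = X[:1500], X[1500:], y[:1500], y[1500:]

# Same grid as in the MNIST search: 2 weightings x 3 neighbor counts = 6 candidates.
param_grid = [{"weights": ["uniform", "distance"], "n_neighbors": [3, 4, 6]}]

# For a classifier, cv=5 means stratified 5-fold splits: 30 fits in total,
# plus one final refit of the best candidate on all training data (refit=True by default).
grid_search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=5)
grid_search.fit(X_train, y_train)

# predict() delegates to the refitted best estimator.
y_pred = grid_search.predict(X_test)
print(accuracy_score(y_test, y_pred))
```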

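The best hyperparameters selected by the search, together with the best score it achieved on the cross-validation (validation) folds, can be obtained with the following commands: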