Kubernetes Introduction and Deployment

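Containers are built on kernel namespaces:

Mount: mount points and filesystems

Network: network devices, network stack, ports, etc.

User: users and groups

UTS: hostname and domain name

IPC: semaphores, message queues

PID: process IDs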
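To see these namespaces on a running Linux host, note that the kernel exposes one symbolic link per namespace under /proc/<pid>/ns. The sketch below lists the six namespaces named above for the current shell. The function name list_namespaces is only illustrative, and the identifiers printed differ from host to host.

```shell
#!/bin/sh
# Print the namespace identifiers of a process (default: this shell).
# list_namespaces is a hypothetical helper name used for illustration.
list_namespaces() {
    pid="${1:-$$}"
    # Kernel names for the Mount, Network, User, UTS, IPC and PID namespaces
    for ns in mnt net user uts ipc pid; do
        link="/proc/$pid/ns/$ns"
        if [ -L "$link" ]; then
            # Each link resolves to something like "pid:[4026531836]"
            printf '%-5s %s\n' "$ns" "$(readlink "$link")"
        else
            printf '%-5s %s\n' "$ns" "unavailable"
        fi
    done
}

list_namespaces
```

Two processes inside the same container report the same identifiers, while a process in a different container reports different ones. Inspecting another user's process this way usually requires root.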

Supported container objects

container, image, network, volume

Scheduler

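Network models:

bridge: Layer 2 virtual bridge

host: shares the host's network stack

container: shares another container's network namespace

none: no network interface (other than loopback)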

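What is a Pod?

A Pod is a collection of one or more containers (also called a container group); it is the atomic unit that Kubernetes schedules, deploys, and runs.

What it encapsulates: the storage resources, network protocol stack, and container runtime control policies shared by that group of containers.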
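The shared network stack and shared storage can be illustrated with a two-container Pod. The script below is a minimal sketch that writes such a manifest to a file: both containers mount the same emptyDir volume, and the sidecar reaches the application over localhost. The names shared-demo and shared-data, the busybox sidecar, and its polling command are all assumptions made for this example; ikubernetes/demoapp:v1.0 is the image used later in these notes.

```shell
#!/bin/sh
# Write an illustrative two-container Pod manifest to a file.
# All names (shared-demo, shared-data, the busybox sidecar) are assumptions.
manifest="${1:-${TMPDIR:-/tmp}/pod-shared-demo.yaml}"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-demo
spec:
  volumes:
  - name: shared-data        # storage shared by both containers
    emptyDir: {}
  containers:
  - name: app
    image: ikubernetes/demoapp:v1.0
    volumeMounts:
    - name: shared-data
      mountPath: /data
  - name: sidecar
    image: busybox
    # Containers in one Pod share a network stack, so localhost reaches "app"
    command: ["sh", "-c", "while true; do wget -qO- http://127.0.0.1:80 > /data/last-response; sleep 10; done"]
    volumeMounts:
    - name: shared-data
      mountPath: /data
EOF

echo "wrote $manifest"
```

Once the cluster described below is running, the file could be applied with kubectl apply -f followed by the path printed by the script.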

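Kubernetes cluster architecture

The master consists of three components, the API server, the controller manager, and the scheduler, plus etcd, which stores the cluster state; together they form the control plane of the cluster.

Each Node mainly runs three components: kubelet, kube-proxy, and a container runtime; together they host and run the various application containers.

kubelet: runs on every node in the cluster and is responsible for starting Pods and containers.

kubeadm: used to initialize the cluster.

kubectl: the Kubernetes command-line tool; with kubectl you can deploy and manage applications, inspect resources, and create, delete, and update components.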

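API Server:

The API gateway for the whole cluster; the corresponding program is kube-apiserver.

Declarative API

Cluster Store

The storage system for cluster state data; this usually means etcd.

It interacts only with the API server.

Controller Manager

Responsible for driving the cluster toward the desired state that clients declare through the API; the corresponding program is kube-controller-manager.

kubelet

The Kubernetes agent on each worker node; the corresponding program is kubelet.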

Cluster component run modes

Standalone component mode

Apart from add-ons, each component is deployed on the nodes as a binary and runs as a daemon.

#Deployment environment

OS: Ubuntu 20.04.4 LTS (Focal Fossa)

Kubernetes:v1.24.3

Container Runtime: Docker version 20.10.17, build 100c701

CRI: cri-dockerd 0.2.1 (HEAD)

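The Kubernetes cluster consists of one master and three worker nodes. Run the matching command on each host (its IP address is shown in the comment):

hostnamectl set-hostname master01.magedu.com   # 10.0.0.10

hostnamectl set-hostname node01.magedu.com     # 10.0.0.20

hostnamectl set-hostname node02.magedu.com     # 10.0.0.30

hostnamectl set-hostname node03.magedu.com     # 10.0.0.40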

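(1) Use the chronyd service (package name chrony) to keep time accurately synchronized on all nodes;

(2) Resolve the hostnames of all nodes through DNS;

(3) Disable all swap devices on every node;

(4) Disable the default iptables-based firewall service on every node;

#Hostname resolution through the hosts file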

[root@master01 ~]#cat /etc/hosts

10.0.0.10 master01.magedu.com master01 kubeapi.magedu.com kubeapi

10.0.0.20 node01.magedu.com node01

10.0.0.30 node02.magedu.com node02

10.0.0.40 node03.magedu.com node03
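Because the same entries have to exist on every node, it can help to add them idempotently instead of editing the file by hand. The following is a minimal sketch; ensure_host_entry is a hypothetical helper name, and by default the script works on a scratch file so that it does not touch the real /etc/hosts.

```shell
#!/bin/sh
# Append "IP names..." to a hosts file only if that exact line is missing.
# ensure_host_entry is a hypothetical helper, not part of any package.
ensure_host_entry() {
    file="$1"; shift
    entry="$*"
    # -x: match the whole line, -F: treat the entry as a fixed string
    grep -qxF "$entry" "$file" 2>/dev/null || printf '%s\n' "$entry" >> "$file"
}

# Demonstrate on a scratch file; pass /etc/hosts (as root) on a real node.
hosts_file="${1:-$(mktemp)}"
ensure_host_entry "$hosts_file" 10.0.0.10 master01.magedu.com master01 kubeapi.magedu.com kubeapi
ensure_host_entry "$hosts_file" 10.0.0.20 node01.magedu.com node01
ensure_host_entry "$hosts_file" 10.0.0.30 node02.magedu.com node02
ensure_host_entry "$hosts_file" 10.0.0.40 node03.magedu.com node03
cat "$hosts_file"
```

Running the script a second time against the same file leaves it unchanged, which makes it safe to repeat on every node.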

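#Disable swap

When deploying a cluster, kubeadm checks by default whether swap is disabled on the host and aborts the deployment if it is not; otherwise, extra options must be passed to kubeadm init and kubeadm join to ignore the error.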

swapoff -a

systemctl --type swap
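Note that swapoff -a only affects the running system: swap entries in /etc/fstab are activated again at the next boot. One common way to make the change persistent is to comment those entries out. The sketch below shows the idea with a hypothetical helper, comment_out_swap, which prints the modified content instead of editing the file in place and is demonstrated on a sample file rather than the real /etc/fstab.

```shell
#!/bin/sh
# Print an fstab with every active swap entry commented out.
# comment_out_swap is a hypothetical helper; it never edits the file in place.
comment_out_swap() {
    # Field 3 of an fstab line is the filesystem type
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$1"
}

# Demonstrate on a sample file instead of the real /etc/fstab.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
UUID=1111-2222 / ext4 defaults 0 1
/swap.img none swap sw 0 0
EOF

comment_out_swap "$sample"
```

On a real node, the output for /etc/fstab would be reviewed first and only then written back, keeping a copy of the original file.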

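#Set the hostname

hostnamectl set-hostname master01.magedu.com

#Synchronize time

apt install chrony

systemctl start chrony.service

#Disable the default firewall service

ufw status

ufw disable

#Install and start docker-ce

Set up the package repository for docker-ce, using the Alibaba Cloud mirror as an example: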

apt -y install apt-transport-https ca-certificates curl software-properties-common

curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | apt-key add -

add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

apt update

apt install docker-ce

kubelet requires the Docker container engine to use systemd as its cgroup driver (Docker's default is cgroupfs); registry-mirrors lists the registry mirror services to use.

cat /etc/docker/daemon.json

{

"registry-mirrors": [

],

"exec-opts": ["native.cgroupdriver=systemd"]

}

#After configuration, restart the docker service so the new settings take effect, and enable it at boot

systemctl daemon-reload

systemctl restart docker.service

systemctl enable docker.service

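Configure a proxy for Docker (optional)

When kubeadm deploys a Kubernetes cluster, it pulls images by default from Google's registry k8s.gcr.io, which is not reachable from mainland China; a proxy is needed to fetch those images.

Alternatively, download the images from Docker Hub and retag them locally. To configure the proxy, edit /lib/systemd/system/docker.service and add the proxy settings to the [Service] section;

After that, reload systemd and restart the docker service.

On master01, load the previously downloaded tar archives: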

docker load < k8s-master-components.tar

docker load < calico-components.tar

Load the images on the three worker nodes:

docker load < k8s-worker-components.tar

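Install cri-dockerd

CRI (Container Runtime Interface): every Kubernetes node relies on an underlying piece of software called the "container runtime", which is responsible for starting containers; the CRI is the interface the kubelet uses to talk to it.

What is cri-dockerd? It is a project split out of the removed dockershim, intended to solve the legacy problem of upgrading Kubernetes on nodes that still use Docker.

Kubernetes removed dockershim in v1.24, and Docker Engine does not support the CRI specification natively, so the two can no longer be integrated directly. For this reason, Mirantis and Docker jointly created the cri-dockerd project, which provides a shim that gives Docker Engine CRI support, so that Kubernetes can control Docker through the CRI.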

apt install ./cri-dockerd_0.2.2.3-0.ubuntu-focal_amd64.deb

scp cri-dockerd_0.2.2.3-0.ubuntu-focal_amd64.deb 10.0.0.20:

scp cri-dockerd_0.2.2.3-0.ubuntu-focal_amd64.deb 10.0.0.30:

scp cri-dockerd_0.2.2.3-0.ubuntu-focal_amd64.deb 10.0.0.40:

After installation, the cri-docker.service unit starts automatically.

Install kubelet, kubeadm, and kubectl

On every host, set up the package repository from the Alibaba Cloud mirror:

apt update && apt install -y apt-transport-https curl

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

apt update

apt install -y kubelet kubeadm kubectl

systemctl enable kubelet

scp -r /etc/docker/daemon.json 10.0.0.20:/etc/docker/

scp -r /etc/docker/daemon.json 10.0.0.30:/etc/docker/

scp -r /etc/docker/daemon.json 10.0.0.40:/etc/docker/

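After installation, confirm the version of kubeadm and the related programs; this is the version number that must be specified explicitly when the Kubernetes cluster is initialized later.

Integrate kubelet with cri-dockerd (the shim added to support Docker, which lets Kubernetes control Docker through the CRI)

Configure cri-dockerd so that it loads the CNI plugins correctly: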

cat /usr/lib/systemd/system/cri-docker.service

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --cni-bin-dir=/opt/cni/bin --cni-cache-dir=/var/lib/cni/cache --cni-conf-dir=/etc/cni/net.d

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

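--network-plugin: the type of network plugin specification to use; CNI is required here;

--cni-bin-dir: the directory searched for CNI plugin binaries;

--cni-cache-dir: the cache directory used by CNI plugins;

--cni-conf-dir: the directory from which CNI plugin configuration files are loaded;

After configuration, reload systemd and restart cri-docker.service: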

systemctl daemon-reload && systemctl restart cri-docker.service

Configure kubelet

Specify the path of the Unix socket file that cri-docker.service opens locally:

cat /etc/sysconfig/kubelet

KUBELET_KUBEADM_ARGS="--container-runtime=remote --container-runtime-endpoint=/run/cri-dockerd.sock"

Note: /etc/sysconfig/kubelet is the location used on RPM-based systems. On Debian/Ubuntu, the kubelet package's systemd drop-in reads extra flags from /etc/default/kubelet (variable KUBELET_EXTRA_ARGS), so check which file your kubelet unit actually loads.

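Initialize the first control-plane node

The images required by the core cluster components (kube-apiserver, kube-controller-manager, kube-scheduler, etcd, and so on) come by default from the k8s.gcr.io registry, which is not reachable from mainland China.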

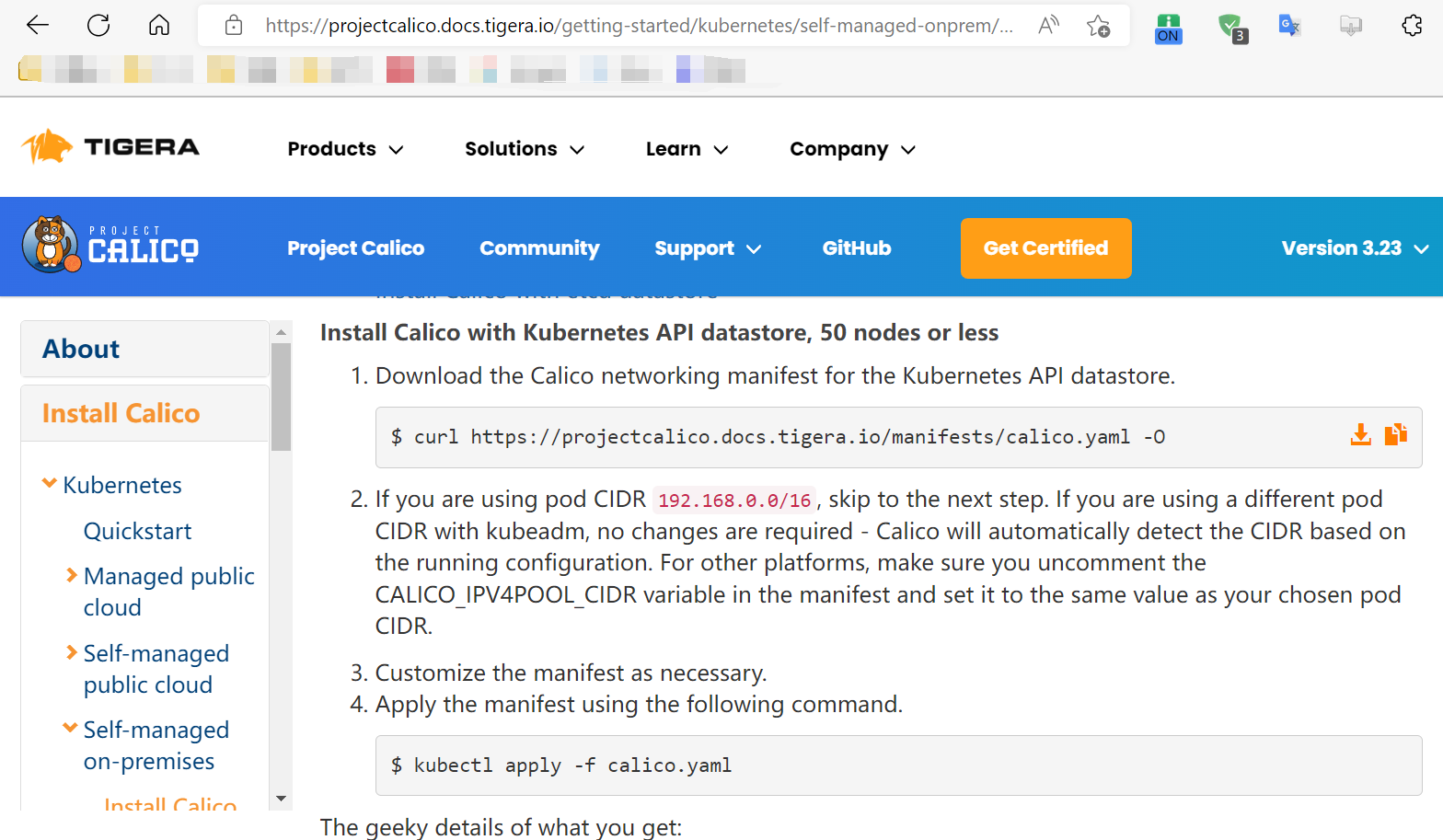

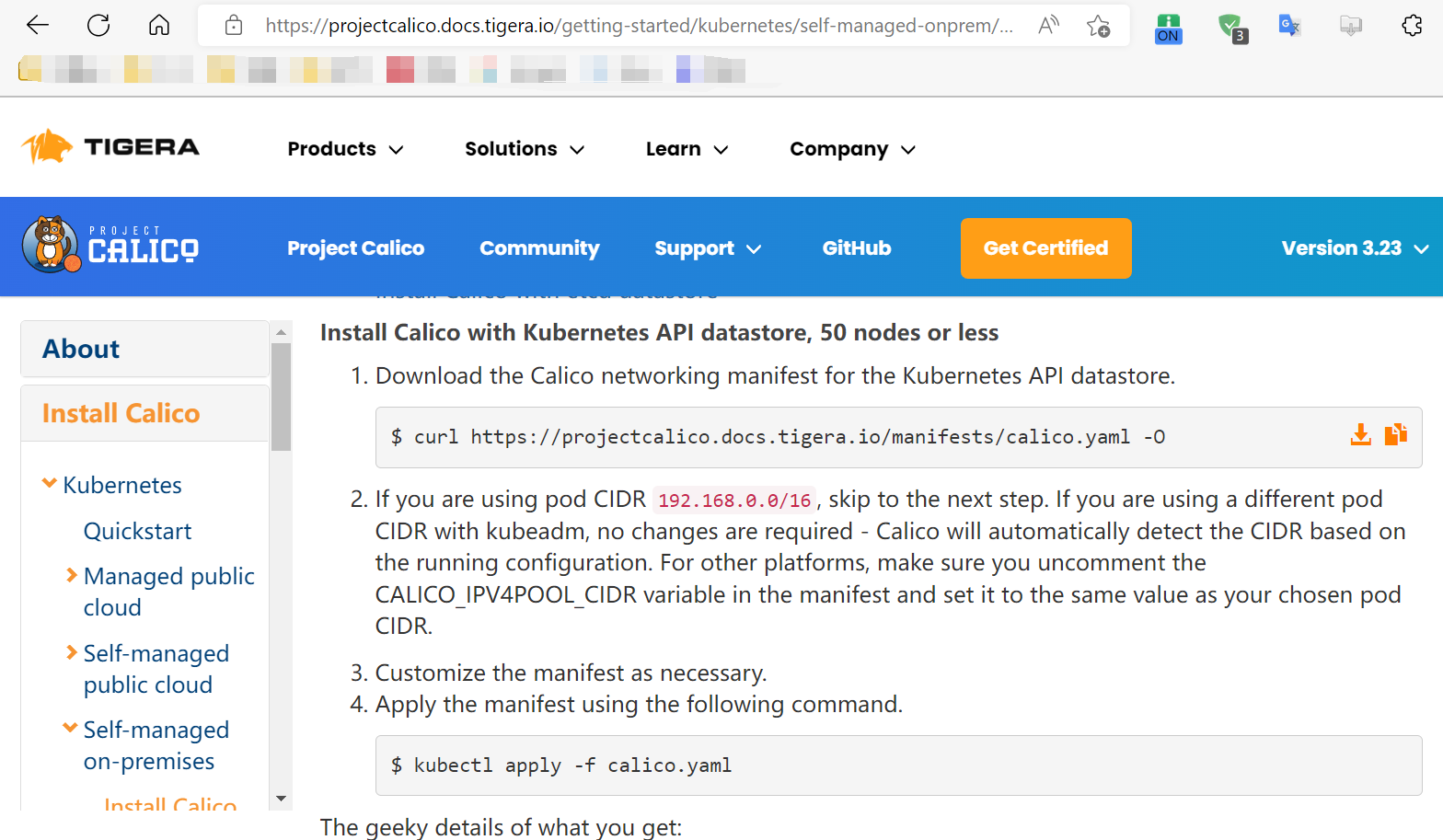

kubeadm init --control-plane-endpoint kubeapi.magedu.com --kubernetes-version=v1.24.3 --pod-network-cidr=192.168.0.0/16 --service-cidr=10.96.0.0/12 --token-ttl=0 --cri-socket unix:///run/cri-dockerd.sock

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join kubeapi.magedu.com:6443 --token ybt28e.g7zfu56bic3mj2ss \
    --discovery-token-ca-cert-hash sha256:62f74ed09f374b72a51cb7db3165b77e3317e6ca00093fb72d5c948ffdf9646a \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.magedu.com:6443 --token ybt28e.g7zfu56bic3mj2ss \
    --discovery-token-ca-cert-hash sha256:62f74ed09f374b72a51cb7db3165b77e3317e6ca00093fb72d5c948ffdf9646a

#Follow the instructions above on master01

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

#Deploy a Pod network on the cluster by installing the Calico network plugin

#Download the Calico networking manifest for the Kubernetes API datastore

#Apply the Calico manifest

kubectl apply -f calico.yaml

#Add the worker nodes to the cluster

kubeadm join kubeapi.magedu.com:6443 --token ybt28e.g7zfu56bic3mj2ss --discovery-token-ca-cert-hash sha256:62f74ed09f374b72a51cb7db3165b77e3317e6ca00093fb72d5c948ffdf9646a --cri-socket unix:///run/cri-dockerd.sock
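The value passed to --discovery-token-ca-cert-hash is the SHA-256 digest of the cluster CA's public key, so it can be recomputed on the control-plane node if the original kubeadm init output is no longer available. The sketch below wraps the openssl pipeline described in the kubeadm documentation in a hypothetical helper, ca_cert_hash. It assumes an RSA CA key and the default kubeadm certificate path /etc/kubernetes/pki/ca.crt.

```shell
#!/bin/sh
# Compute the sha256 hash kubeadm expects for --discovery-token-ca-cert-hash.
# ca_cert_hash is a hypothetical wrapper; it assumes an RSA CA key.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Default CA location on a kubeadm control-plane node.
ca="${1:-/etc/kubernetes/pki/ca.crt}"
if [ -r "$ca" ]; then
    echo "sha256:$(ca_cert_hash "$ca")"
else
    echo "CA certificate $ca not readable; run this on the control-plane node" >&2
fi
```

If the token itself is lost, kubeadm token create --print-join-command on the control-plane node prints a fresh join command; the --cri-socket option shown above still has to be appended by hand.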

#Verify the result

[root@master01 ~]#kubectl get nodes

NAME                  STATUS   ROLES           AGE     VERSION
master01.magedu.com   Ready    control-plane   5h19m   v1.24.3
node01.magedu.com     Ready    <none>          63m     v1.24.3
node02.magedu.com     Ready    <none>          63m     v1.24.3
node03.magedu.com     Ready    <none>          63m     v1.24.3

#Create 3 demoapp instances

docker load -i demoapp.tar

[root@master01 ~]#kubectl create deployment demoapp --image=ikubernetes/demoapp:v1.0 --replicas=3

deployment.apps/demoapp created

[root@master01 ~]#kubectl get pods

[root@master01 ~]#kubectl get pods -o wide

NAME                       READY   STATUS    RESTARTS   AGE    IP                NODE                NOMINATED NODE   READINESS GATES
demoapp-78b49597cf-brn5h   1/1     Running   0          101s   192.168.100.2     node01.magedu.com   <none>           <none>
demoapp-78b49597cf-h8kvb   1/1     Running   0          101s   192.168.213.65    node02.magedu.com   <none>           <none>
demoapp-78b49597cf-w6dqj   1/1     Running   0          101s   192.168.135.193   node03.magedu.com   <none>           <none>

[root@master01 ~]#curl 192.168.100.2

iKubernetes demoapp v1.0 !! ClientIP: 192.168.120.192, ServerName: demoapp-78b49597cf-brn5h, ServerIP: 192.168.100.2!

[root@master01 ~]#curl 192.168.213.65

iKubernetes demoapp v1.0 !! ClientIP: 192.168.120.192, ServerName: demoapp-78b49597cf-h8kvb, ServerIP: 192.168.213.65!

[root@master01 ~]#curl 192.168.135.193

iKubernetes demoapp v1.0 !! ClientIP: 192.168.120.192, ServerName: demoapp-78b49597cf-w6dqj, ServerIP: 192.168.135.193!

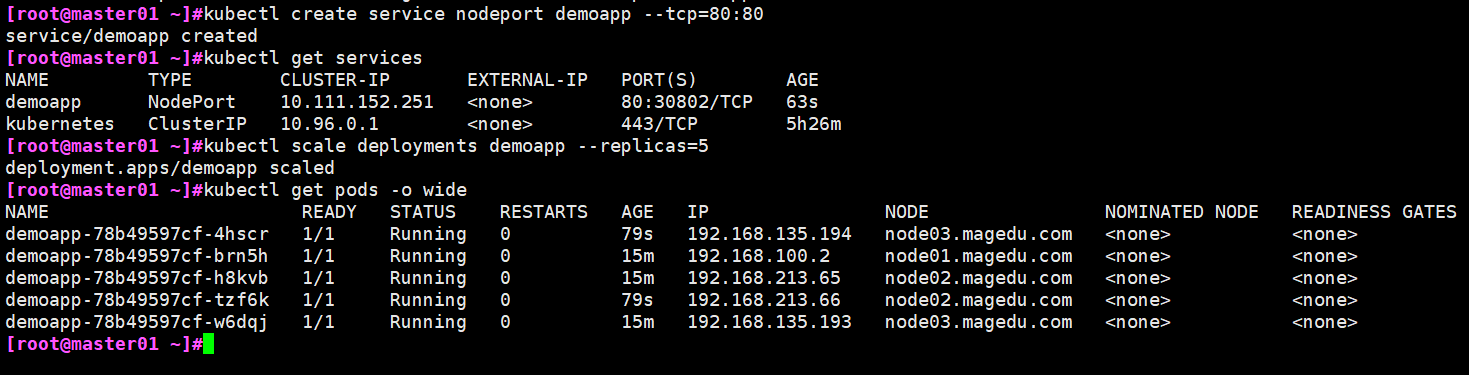

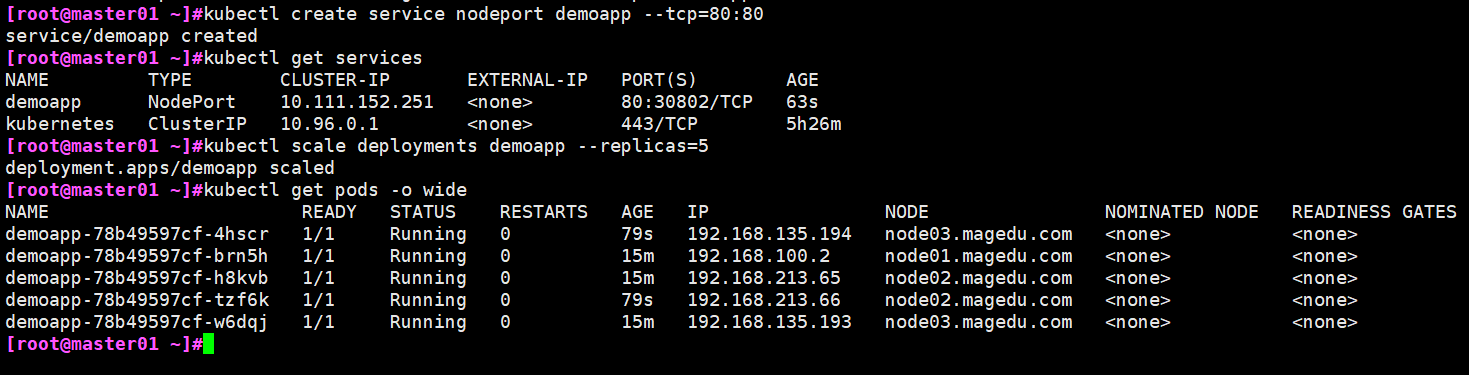

[root@master01 ~]#kubectl create service nodeport demoapp --tcp=80:80

service/demoapp created

[root@master01 ~]#kubectl get services

NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
demoapp      NodePort    10.111.152.251   <none>        80:30802/TCP   63s
kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP        5h26m

#Test the result

[root@node01 ~]#while :;do curl 10.111.152.251;sleep 2;done

iKubernetes demoapp v1.0 !! ClientIP: 192.168.100.0, ServerName: demoapp-78b49597cf-w6dqj, ServerIP: 192.168.135.193!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.100.0, ServerName: demoapp-78b49597cf-w6dqj, ServerIP: 192.168.135.193!

iKubernetes demoapp v1.0 !! ClientIP: 10.0.0.20, ServerName: demoapp-78b49597cf-brn5h, ServerIP: 192.168.100.2!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.100.0, ServerName: demoapp-78b49597cf-h8kvb, ServerIP: 192.168.213.65!

iKubernetes demoapp v1.0 !! ClientIP: 10.0.0.20, ServerName: demoapp-78b49597cf-brn5h, ServerIP: 192.168.100.2!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.100.0, ServerName: demoapp-78b49597cf-w6dqj, ServerIP: 192.168.135.193!

iKubernetes demoapp v1.0 !! ClientIP: 10.0.0.20, ServerName: demoapp-78b49597cf-brn5h, ServerIP: 192.168.100.2!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.100.0, ServerName: demoapp-78b49597cf-h8kvb, ServerIP: 192.168.213.65!

iKubernetes demoapp v1.0 !! ClientIP: 10.0.0.20, ServerName: demoapp-78b49597cf-brn5h, ServerIP: 192.168.100.2!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.100.0, ServerName: demoapp-78b49597cf-h8kvb, ServerIP: 192.168.213.65!

iKubernetes demoapp v1.0 !! ClientIP: 192.168.100.0, ServerName: demoapp-78b49597cf-w6dqj, ServerIP: 192.168.135.193!
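The loop output shows requests to the Service's ClusterIP being spread across the three Pods. To quantify that distribution, the responses can be tallied by their ServerName field. The following is a minimal sketch; count_by_server is a hypothetical helper that reads response lines on standard input and prints one "pod-name count" pair per line.

```shell
#!/bin/sh
# Tally demoapp responses per backend Pod; reads response lines on stdin.
# count_by_server is a hypothetical helper name.
count_by_server() {
    sed -n 's/.*ServerName: \([^,]*\),.*/\1/p' \
        | sort | uniq -c \
        | awk '{ print $2, $1 }'
}

# Three sample lines copied from the output above.
count_by_server <<'EOF'
iKubernetes demoapp v1.0 !! ClientIP: 192.168.100.0, ServerName: demoapp-78b49597cf-w6dqj, ServerIP: 192.168.135.193!
iKubernetes demoapp v1.0 !! ClientIP: 10.0.0.20, ServerName: demoapp-78b49597cf-brn5h, ServerIP: 192.168.100.2!
iKubernetes demoapp v1.0 !! ClientIP: 192.168.100.0, ServerName: demoapp-78b49597cf-w6dqj, ServerIP: 192.168.135.193!
EOF
```

On a node, the same function could be fed from a bounded loop, for example by piping the output of twenty curl -s 10.111.152.251 calls into count_by_server.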

#Scale the application out and in (Pod replicas)

[root@master01 ~]#kubectl scale deployments demoapp --replicas=5

[root@master01 ~]#kubectl get pods -o wide

Calico is a pure Layer 3 SDN implementation.