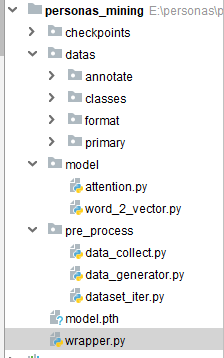

1. File structure

2. attention

```python
import torch
import torch.nn.functional as F
from torch import nn


class BiLSTM_Attention(nn.Module):
    def __init__(self, embedding_dim, num_hiddens, num_layers):
        super(BiLSTM_Attention, self).__init__()
        # bidirectional=True turns this into a bidirectional recurrent network
        self.encoder = nn.LSTM(input_size=embedding_dim,
                               hidden_size=num_hiddens,
                               num_layers=num_layers,
                               batch_first=True,
                               bidirectional=True)
        # Attention parameters: w_omega projects every BiLSTM output,
        # u_omega is the context vector that scores each time step
        self.w_omega = nn.Parameter(torch.Tensor(num_hiddens * 2, num_hiddens * 2))
        self.u_omega = nn.Parameter(torch.Tensor(num_hiddens * 2, 1))
        # Fully connected classifier on the attention-pooled feature (4 classes)
        self.decoder = nn.Linear(2 * num_hiddens, 4)
        nn.init.uniform_(self.w_omega, -0.1, 0.1)
        nn.init.uniform_(self.u_omega, -0.1, 0.1)

    def forward(self, embeddings):
        # embeddings: (batch_size, seq_len, embedding_size)
        # nn.LSTM returns only the last layer's hidden state at every time step
        outputs, _ = self.encoder(embeddings)  # outputs, (h, c)
        # outputs: (batch_size, seq_len, 2 * num_hiddens)

        # Attention
        u = torch.tanh(torch.matmul(outputs, self.w_omega))
        # u: (batch_size, seq_len, 2 * num_hiddens)
        att = torch.matmul(u, self.u_omega)
        # att: (batch_size, seq_len, 1)
        att_score = F.softmax(att, dim=1)
        # att_score: still (batch_size, seq_len, 1), normalized over seq_len
        scored_x = outputs * att_score
        # scored_x: (batch_size, seq_len, 2 * num_hiddens)
        # End of attention

        feat = torch.sum(scored_x, dim=1)  # weighted sum over time steps
        # feat: (batch_size, 2 * num_hiddens)
        outs = self.decoder(feat)
        # outs: (batch_size, 4)
        return outs
```
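
To make the shape algebra of the attention step easier to follow, here is a NumPy-only sketch of the same pooling (tanh projection, scoring with a context vector, softmax over time steps, weighted sum). It is an illustration under assumptions, not the article's code: the function names `softmax` and `attention_pool` are hypothetical, and random arrays stand in for real BiLSTM outputs.

```python
import numpy as np


def softmax(x, axis):
    # Numerically stable softmax along the given axis
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def attention_pool(outputs, w_omega, u_omega):
    """Hypothetical NumPy mirror of the attention in BiLSTM_Attention.forward.

    outputs: (batch, seq_len, d) with d = 2 * num_hiddens
    w_omega: (d, d), u_omega: (d, 1)
    Returns feat (batch, d) and att_score (batch, seq_len, 1).
    """
    u = np.tanh(outputs @ w_omega)           # (batch, seq_len, d)
    att = u @ u_omega                         # (batch, seq_len, 1)
    att_score = softmax(att, axis=1)          # weights over time steps
    feat = (outputs * att_score).sum(axis=1)  # weighted sum -> (batch, d)
    return feat, att_score


rng = np.random.default_rng(0)
batch, seq_len, d = 2, 5, 8  # d plays the role of 2 * num_hiddens
outputs = rng.standard_normal((batch, seq_len, d))
w_omega = rng.uniform(-0.1, 0.1, (d, d))
u_omega = rng.uniform(-0.1, 0.1, (d, 1))
feat, att_score = attention_pool(outputs, w_omega, u_omega)
print(feat.shape, att_score.shape)  # (2, 8) (2, 5, 1)
```

Because the softmax runs over `dim=1` (time steps), each sample's weights sum to 1. No mask is applied, so zero-padded positions also receive some weight.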

3. word_2_vec

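```python
import jieba
import torch
import gensim
import numpy as np


class WordEmbedding(object):
    def __init__(self):
        # Load pretrained word2vec vectors stored in binary format
        self.model = gensim.models.KeyedVectors.load_word2vec_format(
            'checkpoints/word2vec.bin', binary=True)

    def sentenceTupleToEmbedding(self, data1):
        # Pad every sentence in the batch to the longest tokenized sentence
        maxLen = max([len(list(jieba.cut(sentence_a))) for sentence_a in data1])
        seq_len = maxLen
        a = self.sqence_vec(data1, seq_len)  # (batch_size, seq_len, embedding_dim)
        return torch.tensor(a, dtype=torch.float32)

    def sqence_vec(self, data, seq_len):
        dim = self.model.vector_size  # 128 for the vectors used here
        data_a_vec = []
        for sequence_a in data:
            sequence_vec = []  # up to seq_len vectors of length dim
            for word_a in jieba.cut(sequence_a):
                # Out-of-vocabulary words are skipped
                if word_a in self.model:
                    sequence_vec.append(self.model[word_a])
            # reshape keeps shape (0, dim) when no word is in the vocabulary,
            # so vstack below does not fail on such a sentence
            sequence_vec = np.array(sequence_vec).reshape(-1, dim)
            # Zero-pad at the end up to seq_len
            add = np.zeros((seq_len - sequence_vec.shape[0], dim))
            sequenceVec = np.vstack((sequence_vec, add))
            data_a_vec.append(sequenceVec)
        a_vec = np.array(data_a_vec)
        return a_vec


if __name__ == '__main__':
    word = WordEmbedding()
    a = word.sentenceTupleToEmbedding(["我爱北京天安门"])  # "I love Beijing Tiananmen"
    print(a)
```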
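
The padding step is the fragile part of this class: when none of a sentence's words are in the vocabulary, `np.array([])` has shape `(0,)` and `np.vstack` cannot stack it on a `(n, 128)` zero block, so the whole batch raises. The sketch below shows empty-safe zero padding with NumPy alone. It is a hypothetical helper (`pad_batch` is not from the article), it uses a 4-dimensional toy vector size, and unlike the article it takes the padded length from the vectors actually found rather than from the jieba token count.

```python
import numpy as np


def pad_batch(seqs, dim):
    """Hypothetical helper: zero-pad lists of word vectors into one array.

    seqs: list of lists of 1-D vectors of length dim (a list may be empty
          when no word of the sentence was found in the vocabulary).
    Returns an array of shape (batch, max_len, dim).
    """
    max_len = max(len(s) for s in seqs)
    batch = []
    for s in seqs:
        # reshape(-1, dim) turns an empty list into shape (0, dim), not (0,)
        vecs = np.array(s, dtype=np.float32).reshape(-1, dim)
        pad = np.zeros((max_len - vecs.shape[0], dim), dtype=np.float32)
        batch.append(np.vstack((vecs, pad)))
    return np.stack(batch)


dim = 4  # 128 in the article; 4 keeps the example small
seqs = [[np.ones(dim), np.ones(dim)], [np.ones(dim)], []]
x = pad_batch(seqs, dim)
print(x.shape)  # (3, 2, 4)
```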

4. data_generator

```python
# -*- coding: UTF-8 -*-
import os
import json
from tqdm import tqdm

"""Build one JSON file that covers all dialogue files"""


def get_all_path():
    """
    Return [file_name, path] for every entry in datas/primary
    """
    # parent_path = os.path.dirname(os.path.realpath(__file__))
    parent_path = os.path.split(os.path.realpath(__file__))[0]
    # Project root: everything before the pre_process directory
    root = parent_path[:parent_path.find("pre_proces")]
    f_in = os.path.join(root, "datas", "primary")
    primiry_file_paths = []
    dirs = os.listdir(f_in)
    # Collect all files and folders
    for fileName in dirs:
        path = os.path.join(f_in, fileName)
        primiry_file_paths.append([fileName, path])
    return primiry_file_paths


def organize_data(file_name, file_path):
    """
    Parse one dialogue file into {"file_name": ..., "datas": {...}}
    """
    item = {}
    item["file_name"] = file_name
    with open(file_path, "r", encoding="utf8") as f:
        kefu = 0  # customer-service turn counter
        kehu = 0  # customer turn counter
        item_object = {}
        for line in f.readlines():
            # Split on the first colon only, so colons inside the text survive
            line_data = line.split(":", 1)
            if len(line_data) < 2:
                continue  # skip lines without a "speaker:text" prefix
            if "服" in line_data[0]:
                # This line is spoken by customer service (客服)
                kefu = kefu + 1
                key = "bank_" + str(kefu)
                item_object[key] = line_data[1].replace("\n", "")
            else:
                kehu = kehu + 1
                key = "user_" + str(kehu)
                item_object[key] = line_data[1].replace("\n", "")
        item["datas"] = item_object
    return item


def main():
    # Relative to the working directory, i.e. run from inside pre_process/
    file_out = "../datas/format/primary.json"
    with open(file_out, "w", encoding="utf-8") as f:
        # One JSON object per line
        for path in tqdm(get_all_path()):
            file_name, file_path = path[0], path[1]
            item = organize_data(file_name, file_path)
            text = json.dumps(item, ensure_ascii=False) + "\n"
            f.write(text)


if __name__ == '__main__':
    main()
```
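
The core of `organize_data` is turning `speaker:text` lines into numbered `bank_n` / `user_n` keys. The sketch below isolates that logic on an in-memory list so it can be checked without files. The function name `parse_dialogue` and the three-line sample transcript are hypothetical; it assumes an ASCII colon as in the article's code (a transcript using the full-width `：` would need a different split character).

```python
def parse_dialogue(lines):
    """Hypothetical helper: map "speaker:text" lines to bank_n / user_n keys.

    A speaker label containing 服 (as in 客服, customer service) counts as
    the bank side; any other speaker is the user side.
    """
    kefu = kehu = 0
    turns = {}
    for line in lines:
        parts = line.split(":", 1)  # split on the first colon only
        if len(parts) < 2:
            continue  # no speaker prefix: skip the line
        speaker, text = parts
        if "服" in speaker:
            kefu += 1
            turns["bank_" + str(kefu)] = text.replace("\n", "")
        else:
            kehu += 1
            turns["user_" + str(kehu)] = text.replace("\n", "")
    return turns


# Hypothetical three-line transcript
sample = ["客服:您好\n", "客户:我想问一下:结婚\n", "客服:好的\n"]
result = parse_dialogue(sample)
print(result)
# {'bank_1': '您好', 'user_1': '我想问一下:结婚', 'bank_2': '好的'}
```

Since Python dicts keep insertion order, the interleaving of bank and user turns is preserved in the JSON output.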

5. data_collect

```python
# -*- coding: UTF-8 -*-
import json
import pandas as pd

"""Collect every utterance into one table"""


def get_all_text():
    file_path = "../datas/format/primary.json"
    names = []
    roles = []
    texts = []
    with open(file_path, "r", encoding="utf8") as f:
        for data_line in f.readlines():
            json_data = json.loads(data_line)
            file_name = json_data["file_name"]
            file_data = json_data["datas"]
            # One row per utterance: file name, role key (bank_n / user_n), text
            for k, v in file_data.items():
                names.append(file_name)
                roles.append(k)
                texts.append(v)
    file_out = "../datas/format/all_text.csv"
    dataframe = pd.DataFrame({'names': names, 'roles': roles, "texts": texts})
    dataframe.to_csv(file_out, index=False, sep='\t')


"""Search the CSV for utterances that contain a keyword"""


def search_text(key):
    file_out = "../datas/classes/" + key + ".csv"
    file_path = "../datas/format/all_text.csv"
    data = pd.read_csv(file_path, sep="\t")
    # Empty utterances are read back as NaN: na=False treats them as non-matches;
    # regex=False matches the keyword literally
    da = data[data["texts"].str.contains(key, na=False, regex=False)]
    da.to_csv(file_out, index=False, sep='\t')


"""Extract dialogues containing the character 婚 (as in 结婚, marriage)"""


def data_annotate():
    file_in = "../datas/format/primary.json"
    file_out = "../datas/annotate/label.json"
    with open(file_out, "w", encoding="utf8") as fo:
        with open(file_in, "r", encoding="utf8") as f:
            for line in f.readlines():
                item = {}
                label = 0
                json_data = json.loads(line)
                for k, v in json_data["datas"].items():
                    if "婚" in v:
                        label = 1
                if label == 1:
                    item["name"] = json_data["file_name"]
                    item["label"] = ""  # left empty for manual annotation
                    item["datas"] = json_data["datas"]
                    fo.write(json.dumps(item, ensure_ascii=False) + "\n")
    return "success"


"""Split the annotation file into labeled and unlabeled records"""


def annotate():
    file_in = "../datas/annotate/label.json"
    file_labeled = "../datas/annotate/labeled.json"
    file_unlabeled = "../datas/annotate/unlabel.json"
    with open(file_in, "r", encoding="utf8") as f_in:
        with open(file_labeled, "w", encoding="utf8") as f_labeled:
            with open(file_unlabeled, "w", encoding="utf8") as f_unlabeled:
                for line in f_in.readlines():
                    json_data = json.loads(line)
                    # A non-empty "label" means the record has been annotated
                    if json_data["label"]:
                        f_labeled.write(json.dumps(json_data, ensure_ascii=False) + "\n")
                    else:
                        f_unlabeled.write(json.dumps(json_data, ensure_ascii=False) + "\n")
    return "success"


"""Convert the labeled records into a tab-separated training table"""


def label_to_csv():
    file_path = "../datas/annotate/labeled.json"
    labels = []
    datas = []
    data_dict = []
    with open(file_path, "r", encoding="utf8") as f:
        for data_line in f.readlines():
            json_data = json.loads(data_line)
            _label = json_data["label"]
            # Join every turn of the dialogue with "|" to form the model input
            _data = "|".join(json_data["datas"].values())
            labels.append(_label)
            datas.append(_data)
            data_dict.append(data_line.replace("\n", ""))  # keep the raw record
    file_out = "../datas/annotate/labeled.csv"
    dataframe = pd.DataFrame({'labels': labels, 'datas': datas, "data_dict": data_dict})
    dataframe.to_csv(file_out, index=False, sep='\t')


if __name__ == '__main__':
    label_to_csv()
```
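
The annotation flow above has three steps: keep dialogues where any turn contains a keyword (`data_annotate`), treat records with a non-empty `label` as annotated (`annotate`), and flatten each dialogue into `"turn1|turn2|..."` (`label_to_csv`). The sketch runs the same three steps on in-memory records instead of files. The helper names, the two sample records and the label value `"1"` are all hypothetical.

```python
def select_by_keyword(records, keyword):
    """Hypothetical helper mirroring data_annotate: keep dialogues where any
    turn contains keyword, with an empty label slot for manual annotation."""
    selected = []
    for rec in records:
        if any(keyword in text for text in rec["datas"].values()):
            selected.append({"name": rec["file_name"], "label": "", "datas": rec["datas"]})
    return selected


def to_training_row(item):
    """Hypothetical helper mirroring label_to_csv: (label, "turn1|turn2|...")."""
    return item["label"], "|".join(item["datas"].values())


records = [
    {"file_name": "a.txt", "datas": {"bank_1": "您好", "user_1": "我刚结婚"}},
    {"file_name": "b.txt", "datas": {"bank_1": "您好", "user_1": "查余额"}},
]
picked = select_by_keyword(records, "婚")
picked[0]["label"] = "1"  # pretend an annotator filled in the label
labeled = [p for p in picked if p["label"]]  # same test as annotate()
rows = [to_training_row(p) for p in labeled]
print(rows)  # [('1', '您好|我刚结婚')]
```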

6. dataset_iter

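```python
import torch.utils.data as data


class DatasetIter(data.Dataset):
    """Map-style dataset that pairs each text with its label."""

    def __init__(self, text, label):
        # Convert to lists so indexing is positional, even if a pandas
        # Series with a non-default index is passed in
        self.text = list(text)
        self.label = list(label)

    def __getitem__(self, item):
        text = self.text[item]
        label = self.label[item]
        return text, label

    def __len__(self):
        return len(self.text)
```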
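
A map-style dataset only needs `__len__` and `__getitem__`; the wrapper then splits it with `torch.utils.data.Subset`, which simply remaps positions `0..len(indices)-1` onto the given indices of the parent dataset. The plain-Python stand-in below (hypothetical classes `ListDataset` and `IndexSubset`, toy data of 10 rows) shows why the 90/10 split in the wrapper takes rows in file order without shuffling.

```python
class ListDataset:
    """Hypothetical map-style dataset: only __len__ and __getitem__ are needed."""

    def __init__(self, text, label):
        self.text, self.label = list(text), list(label)

    def __getitem__(self, i):
        return self.text[i], self.label[i]

    def __len__(self):
        return len(self.text)


class IndexSubset:
    """Plain-Python stand-in for the index remapping done by Subset."""

    def __init__(self, dataset, indices):
        self.dataset, self.indices = dataset, indices

    def __getitem__(self, i):
        return self.dataset[self.indices[i]]

    def __len__(self):
        return len(self.indices)


ds = ListDataset([f"text{i}" for i in range(10)], [i % 4 for i in range(10)])
train_size = int(0.9 * len(ds))
train_set = IndexSubset(ds, range(train_size))
test_set = IndexSubset(ds, range(train_size, len(ds)))
print(len(train_set), len(test_set), test_set[0])  # 9 1 ('text9', 1)
```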

7. wrapper

```python
import numpy as np
import pandas as pd
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, Subset

from model.attention import BiLSTM_Attention
from model.word_2_vector import WordEmbedding
from pre_process.dataset_iter import DatasetIter

# Shared word2vec lookup used to embed each batch of raw sentences
word = WordEmbedding()


class MainProcess(object):
    def __init__(self):
        self.lr = 0.001
        data_frame = pd.read_csv("datas/annotate/labeled.csv", sep="\t")
        label = data_frame["labels"]
        text = data_frame["datas"]
        data_set = DatasetIter(text, label)
        # Split by row order: first 90% for training, last 10% for testing
        dataSet_length = len(data_set)
        train_size = int(0.9 * dataSet_length)
        train_set = Subset(data_set, range(train_size))
        test_set = Subset(data_set, range(train_size, dataSet_length))
        self.train_iter = DataLoader(dataset=train_set, batch_size=32, shuffle=True)
        self.test_iter = DataLoader(dataset=test_set, batch_size=32, shuffle=True)
        embedding_dim = 128  # must equal the word2vec vector size
        num_hiddens = 64
        num_layers = 4
        self.num_epochs = 100  # not used: main() runs a fixed 50 epochs
        self.net = BiLSTM_Attention(embedding_dim, num_hiddens, num_layers)
        self.criterion = nn.CrossEntropyLoss()
        self.optimizer = torch.optim.Adam(self.net.parameters(), lr=self.lr)

    def binary_acc(self, preds, y):
        # Accuracy: share of samples whose argmax class equals the label
        # (works for any number of classes, despite the name)
        pred = torch.argmax(preds, dim=1)
        correct = torch.eq(pred, y).float()
        acc = correct.sum() / len(correct)
        return acc

    def train(self, mynet, train_iter, optimizer, criterion, epoch):
        avg_acc = []
        avg_loss = []
        mynet.train()
        for batch_id, (datas, label) in enumerate(train_iter):
            try:
                # datas is a list of raw strings; embed them on the fly
                X = word.sentenceTupleToEmbedding(datas)
            except Exception:
                continue  # skip batches that cannot be embedded
            y_hat = mynet(X)
            loss = criterion(y_hat, label)
            acc = self.binary_acc(y_hat, label).item()
            avg_acc.append(acc)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            if batch_id % 100 == 0:
                print("epoch:", epoch, "batch:", batch_id, "train loss:", loss.item())
            avg_loss.append(loss.item())
        avg_acc = np.array(avg_acc).mean()
        avg_loss = np.array(avg_loss).mean()
        print('train acc:', avg_acc)
        print("train loss:", avg_loss)

    def eval(self, mynet, test_iter, criteon):
        mynet.eval()
        avg_acc = []
        avg_loss = []
        with torch.no_grad():
            for batch_id, (datas, label) in enumerate(test_iter):
                try:
                    X = word.sentenceTupleToEmbedding(datas)
                except Exception:
                    continue
                y_hat = mynet(X)
                loss = criteon(y_hat, label)
                acc = self.binary_acc(y_hat, label).item()
                avg_acc.append(acc)
                avg_loss.append(loss.item())
        avg_acc = np.array(avg_acc).mean()
        avg_loss = np.array(avg_loss).mean()
        print('>>test acc:', avg_acc)
        print(">>test loss:", avg_loss)
        return (avg_acc, avg_loss)

    def main(self):
        min_loss = 100000
        for epoch in range(50):
            self.train(self.net, self.train_iter, self.optimizer, self.criterion, epoch)
            eval_acc, eval_loss = self.eval(self.net, self.test_iter, self.criterion)
            # Keep the weights with the lowest test loss seen so far
            if eval_loss < min_loss:
                min_loss = eval_loss
                print("save model")
                torch.save(self.net.state_dict(), 'model.pth')


if __name__ == '__main__':
    MainProcess().main()
```
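
`binary_acc` is a plain multi-class accuracy: take the argmax of the 4 logits per sample and compare it with the label. The NumPy sketch below reproduces that computation on three hand-written logit rows (the function name `accuracy` and the numbers are hypothetical), which makes it easy to check by hand.

```python
import numpy as np


def accuracy(logits, labels):
    """Hypothetical NumPy mirror of binary_acc: fraction of rows whose
    argmax class equals the label (any number of classes)."""
    pred = np.argmax(logits, axis=1)
    return float((pred == labels).mean())


logits = np.array([[2.0, 0.1, 0.0, -1.0],   # predicts class 0
                   [0.0, 0.5, 3.0, 0.2],    # predicts class 2
                   [1.0, 0.9, 0.0, 0.0]])   # predicts class 0
labels = np.array([0, 2, 1])
print(accuracy(logits, labels))  # 0.6666666666666666
```

Note that `train` and `eval` average these per-batch values, so a smaller final batch counts as much as a full batch of 32; the result can differ slightly from accuracy over all samples.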
