File monitoring with Flume on a CDH 5.3.6 cluster

Collecting the Hive log

Hive's runtime log:

/home/hadoop/CDH5.3.6/hive-0.13.1-cdh5.3.6/log/hive.log

* Channel: memory

* Sink: hdfs, writing to:

/user/flume/hive-log

1. Four JARs are required:

    commons-configuration-1.6.jar
    hadoop-auth-2.5.0-cdh5.3.6.jar
    hadoop-common-2.5.0-cdh5.3.6.jar
    hadoop-hdfs-2.5.0-cdh5.3.6.jar

Copy them into /home/hadoop/CDH5.3.6/flume-1.5.0-cdh5.3.6/lib.
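Before starting the agent it can help to confirm that all four JARs actually landed in Flume's lib directory. The following is a minimal sketch, not part of the original setup: the function name `missing_flume_jars` is hypothetical, and it only checks that the files exist, not that they are the right builds.

```shell
# Print the name of every required JAR that is missing from the given
# lib directory. Returns 0 when all four are present, 1 otherwise.
# (missing_flume_jars is a hypothetical helper name.)
missing_flume_jars() {
  lib_dir="$1"
  missing=0
  for jar in \
    commons-configuration-1.6.jar \
    hadoop-auth-2.5.0-cdh5.3.6.jar \
    hadoop-common-2.5.0-cdh5.3.6.jar \
    hadoop-hdfs-2.5.0-cdh5.3.6.jar
  do
    if [ ! -f "$lib_dir/$jar" ]; then
      echo "$jar"
      missing=1
    fi
  done
  return "$missing"
}
```

Calling `missing_flume_jars /home/hadoop/CDH5.3.6/flume-1.5.0-cdh5.3.6/lib` should print nothing once the copy is complete.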

2. Write the configuration file

vi flume_logfile_tail.conf

    # The configuration file needs to define the sources,
    # the channels and the sinks.
    # Sources, channels and sinks are defined per agent,
    # in this case called 'a2'

    ### define agent
    a2.sources = r2
    a2.channels = c2
    a2.sinks = k2

    ### define sources
    a2.sources.r2.type = exec
    a2.sources.r2.command = tail -f /home/hadoop/CDH5.3.6/hive-0.13.1-cdh5.3.6/log/hive.log
    a2.sources.r2.shell = /bin/bash -c

    ### define channel
    a2.channels.c2.type = memory
    a2.channels.c2.capacity = 1000
    a2.channels.c2.transactionCapacity = 100

    ### define sink
    a2.sinks.k2.type = hdfs
    a2.sinks.k2.hdfs.path = hdfs://192.168.1.30:9000/user/flume/hive-log
    a2.sinks.k2.hdfs.fileType = DataStream
    a2.sinks.k2.hdfs.writeFormat = Text
    a2.sinks.k2.hdfs.batchSize = 10

    ### bind the source and sinks to the channel
    a2.sources.r2.channels = c2
    a2.sinks.k2.channel = c2

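Reference documentation for the HDFS sink (this link points to the 1.9.0 user guide, while the cluster here runs Flume 1.5.0): http://flume.apache.org/releases/content/1.9.0/FlumeUserGuide.html#hdfs-sink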

Create the HDFS directory (add the -p flag if the parent /user/flume does not exist yet):

    hdfs dfs -mkdir /user/flume/hive-log

Run the agent:

    bin/flume-ng agent \
      -c conf \
      -n a2 \
      -f conf/flume_logfile_tail.conf \
      -Dflume.root.logger=DEBUG,console
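The name passed with -n has to match the agent prefix used in the configuration file (a2 here). A small sketch for checking that before launching is shown below; the helper names `flume_agent_names` and `flume_has_agent` are hypothetical, and the parsing assumes the agent is declared with a line of the form `<agent>.sources = ...`, as in the configuration above.

```shell
# List the distinct agent names declared in a Flume properties file,
# based on lines of the form "<agent>.sources = ...".
# (Both function names are hypothetical helpers, not Flume commands.)
flume_agent_names() {
  sed -n 's/^[[:space:]]*\([A-Za-z0-9_-]*\)\.sources[[:space:]]*=.*/\1/p' "$1" | sort -u
}

# Succeed only if the given agent name is declared in the file.
flume_has_agent() {
  flume_agent_names "$1" | grep -qxF "$2"
}
```

For example, `flume_has_agent conf/flume_logfile_tail.conf a2` should succeed for the configuration in this post.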

Open Hive in a second terminal window:

    [hadoop@master bin]$ hive

    Logging initialized using configuration in file:/home/hadoop/CDH5.3.6/hive-0.13.1-cdh5.3.6/conf/hive-log4j.properties
    hive (default)> show databases;
    OK
    database_name
    default
    Time taken: 0.354 seconds, Fetched: 1 row(s)
    hive (default)> show tables;
    OK
    tab_name
    dept
    Time taken: 0.037 seconds, Fetched: 1 row(s)
    hive (default)> select * from dept;
    OK
    dept.deptno    dept.dname    dept.loc
    10             ACCOUNTING    NEW YORK
    20             RESEARCH      DALLAS
    30             SALES         CHICAGO
    40             OPERATIONS    BOSTON
    Time taken: 0.43 seconds, Fetched: 4 row(s)

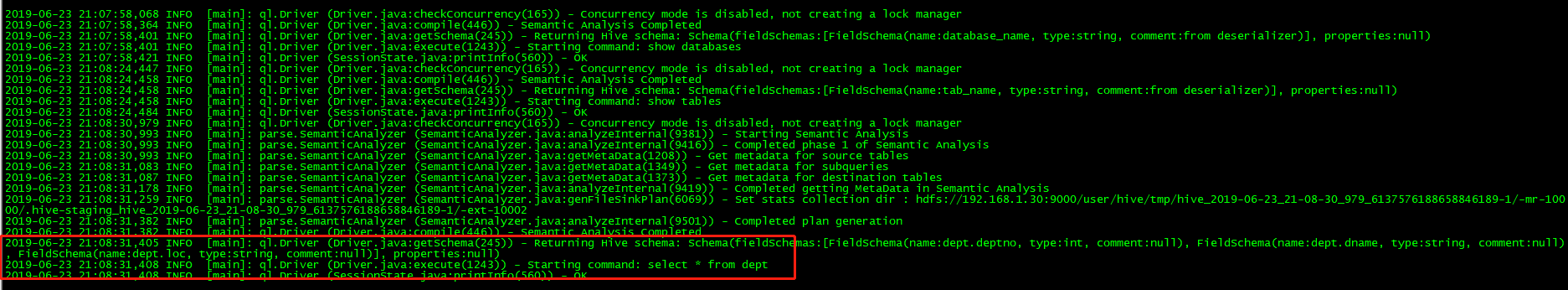

The entries written to hive.log:

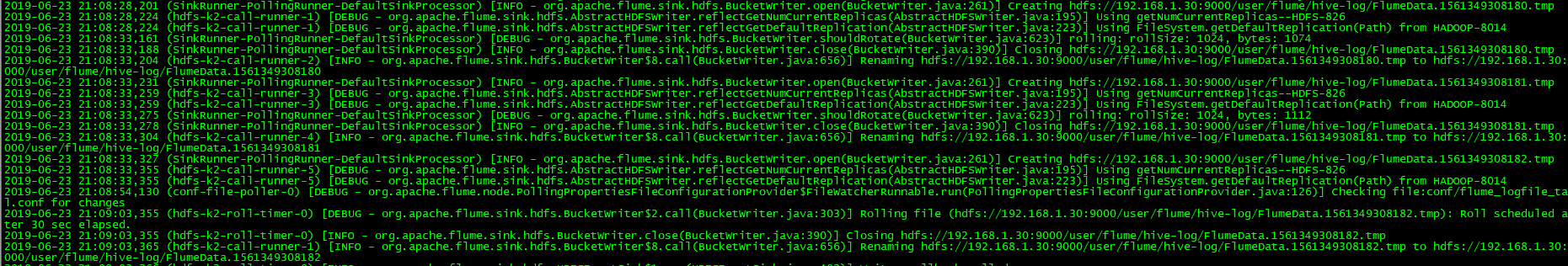

The Flume monitoring window prints the following:

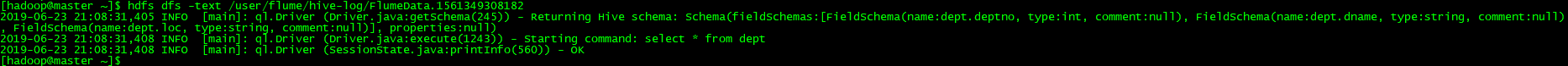

Inspect the file generated on HDFS:

hdfs dfs -text /user/flume/hive-log/FlumeData.1561349308182

Its contents match those of the Hive log file hive.log.

Hive's log is now being monitored by Flume and delivered to HDFS.
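The comparison can also be done mechanically rather than by eye. Since `tail -f` starts from the end of the log and the HDFS sink rolls files under its default settings, a single FlumeData file will usually hold only a portion of hive.log, so the sketch below checks that every line of the HDFS copy appears in the reference log rather than testing for full equality. The function name `lines_not_in_reference` and the local file /tmp/flume_copy.txt are assumptions for illustration.

```shell
# Print every line of the candidate file that does not appear verbatim
# in the reference file. Empty output means the candidate's lines are
# all present in the reference.
# (lines_not_in_reference is a hypothetical helper name.)
lines_not_in_reference() {
  reference="$1"
  candidate="$2"
  # -F fixed strings, -x whole-line match, -v invert, -f patterns from file
  grep -vxFf "$reference" "$candidate" || true
}
```

One way to use it, assuming the HDFS file is first dumped locally with `hdfs dfs -text /user/flume/hive-log/FlumeData.1561349308182 > /tmp/flume_copy.txt`, is `lines_not_in_reference /home/hadoop/CDH5.3.6/hive-0.13.1-cdh5.3.6/log/hive.log /tmp/flume_copy.txt`; no output suggests nothing was altered in transit.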

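Difficult things in the world must be tackled while they are still easy; great things must be built up from small details.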