sqoop 安装

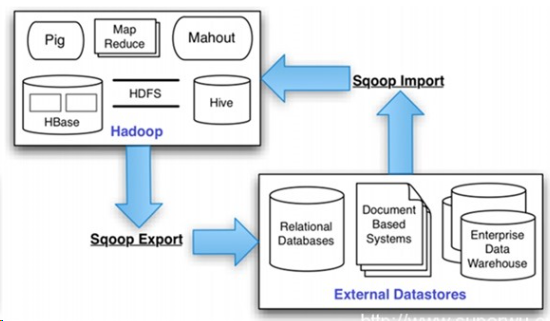

Sqoop是一款开源的工具,主要用于在Hadoop(Hive)与传统的数据库(mysql、postgresql...)间进行数据的传递,可以将一个关系型数据库(例如 : MySQL ,Oracle ,Postgres等)中的数据导进到Hadoop的HDFS中,也可以将HDFS的数据导进到关系型数据库中。Sqoop项目开始于2009年,最早是作为Hadoop的一个第三方模块存在,后来为了让使用者能够快速部署,也为了让开发人员能够更快速的迭代开发,Sqoop独立成为一个Apache项目。

总之Sqoop是一个转换工具,用于在关系型数据库与HDFS之间进行数据转换。

sqoop 安装步骤如下:

1.下载,指定到目录下

下载路径:https://mirrors.tuna.tsinghua.edu.cn/apache/sqoop/1.4.7/

选择版本:sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

安装在master主节点上。

解压:gunzip -d sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

tar -xvf sqoop-1.4.7.bin__hadoop-2.6.0.tar

mv sqoop-1.4.7.bin__hadoop-2.6.0 sqoop-1.4.7

cd sqoop-1.4.7/

cp sqoop-env-template.sh sqoop-env.sh

vi sqoop-env.sh --根据具体内容填写

# Set Hadoop-specific environment variables here. #Set path to where bin/hadoop is available #export HADOOP_COMMON_HOME=/home/hadoop/hadoop-2.7.3 #Set path to where hadoop-*-core.jar is available #export HADOOP_MAPRED_HOME=/home/hadoop/hadoop-2.7.3 #set the path to where bin/hbase is available #export HBASE_HOME=/home/hadoop/hbase #Set the path to where bin/hive is available #export HIVE_HOME=/home/hadoop/hive #Set the path for where zookeper config dir is #export ZOOCFGDIR=/home/hadoop/zookeeper

2.添加环境变量:

vi .bash_profile

export SQOOP_HOME=/home/hadoop/sqoop-1.4.7 export PATH=$PATH:${SQOOP_HOME}/bin export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar export CLASSPATH=$CLASSPATH:${SQOOP_HOME}/lib

使文件生效:

source .bash_profile

3.复制相关依赖包$SQOOP_HOME/lib

下载MySQL的依赖包

mysql-connector-java-5.1.46-bin.jar 点击打开链接

上传解压后,把mysql-connector-java-5.1.46-bin.jar 移动到/home/hadoop/sqoop-1.4.7/lib 下

cd /home/hadoop/hadoop-2.7.3/share/hadoop/common

cp hadoop-common-2.7.3.jar /home/hadoop/sqoop-1.4.7/lib/

4.修改$SQOOP_HOME/bin/configure-sqoop

注释掉HCatalog,Accumulo检查(除非你准备使用HCatalog,Accumulo等HADOOP上的组件)

## Moved to be a runtime check in sqoop. #if [ ! -d "${HCAT_HOME}" ]; then # echo "Warning: $HCAT_HOME does not exist! HCatalog jobs will fail." # echo 'Please set $HCAT_HOME to the root of your HCatalog installation.' #fi #if[ ! -d "${ACCUMULO_HOME}" ]; then # echo "Warning: $ACCUMULO_HOME does notexist! Accumulo imports will fail." # echo 'Please set $ACCUMULO_HOME to the rootof your Accumulo installation.' #fi #Add HCatalog to dependency list #if[ -e "${HCAT_HOME}/bin/hcat" ]; then # TMP_SQOOP_CLASSPATH=${SQOOP_CLASSPATH}:`${HCAT_HOME}/bin/hcat-classpath` # if [ -z "${HIVE_CONF_DIR}" ]; then # TMP_SQOOP_CLASSPATH=${TMP_SQOOP_CLASSPATH}:${HIVE_CONF_DIR} # fi # SQOOP_CLASSPATH=${TMP_SQOOP_CLASSPATH} #fi #Add Accumulo to dependency list #if[ -e "$ACCUMULO_HOME/bin/accumulo" ]; then # for jn in `$ACCUMULO_HOME/bin/accumuloclasspath | grep file:.*accumulo.*jar |cut -d':' -f2`; do # SQOOP_CLASSPATH=$SQOOP_CLASSPATH:$jn # done # for jn in `$ACCUMULO_HOME/bin/accumuloclasspath | grep file:.*zookeeper.*jar |cut -d':' -f2`; do # SQOOP_CLASSPATH=$SQOOP_CLASSPATH:$jn # done #fi

测试与mysql的连接

首先确保mysqld在运行:

[root@master ~]# service mysqld status

mysqld (pid 3052) is running...

然后测试是否连通:

[hadoop@master ~]$ sqoop list-databases --connect jdbc:mysql://127.0.0.1:3306/?useSSL=false --username root -P

19/02/18 17:38:32 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Enter password:

19/02/18 17:38:45 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

hive

mysql

performance_schema

sys

输入密码后如果能显示你mysql上的数据库则表示已经连通。

<完>