Installing zookeeper-3.4.12 on Hadoop

Installing HBase requires ZooKeeper. HBase can manage its own bundled ZooKeeper instance, but here we install zookeeper-3.4.12 separately.

Download: https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.4.12/

Upload zookeeper-3.4.12.tar.gz to the /home/hadoop directory on the master node.

Extract: tar -xvf zookeeper-3.4.12.tar.gz

Rename: mv zookeeper-3.4.12/ zookeeper

In the zookeeper home directory, create two directories, data and logs, to store data and logs:

[hadoop@master zookeeper]$ mkdir data

[hadoop@master zookeeper]$ mkdir logs
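The two mkdir commands above can be folded into one repeatable step. This is a minimal sketch: prepare_zk_dirs is a hypothetical helper name, and the demo runs in a throwaway directory from mktemp so it is safe to try anywhere. On the real node you would pass /home/hadoop/zookeeper instead.

```shell
# prepare_zk_dirs is a hypothetical helper name, not part of ZooKeeper.
# It creates the data and logs directories under the given ZooKeeper home.
prepare_zk_dirs() {
    zk_home="$1"
    # -p makes the call safe to repeat: existing directories are not an error
    mkdir -p "$zk_home/data" "$zk_home/logs"
}

# Demo in a throwaway directory; on the master node you would instead run:
#   prepare_zk_dirs /home/hadoop/zookeeper
demo_home="$(mktemp -d)/zookeeper"
prepare_zk_dirs "$demo_home"
ls "$demo_home"
```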

Edit the configuration file:

cp zoo_sample.cfg zoo.cfg

vi zoo.cfg # the changed settings are dataDir, dataLogDir and the server.N lines

    [hadoop@master conf]$ more zoo.cfg
    # The number of milliseconds of each tick
    tickTime=2000
    # The number of ticks that the initial
    # synchronization phase can take
    initLimit=10
    # The number of ticks that can pass between
    # sending a request and getting an acknowledgement
    syncLimit=5
    # the directory where the snapshot is stored.
    # do not use /tmp for storage, /tmp here is just
    # example sakes.
    dataDir=/home/hadoop/zookeeper/data
    dataLogDir=/home/hadoop/zookeeper/logs
    # the port at which the clients will connect
    clientPort=2181
    # the maximum number of client connections.
    # increase this if you need to handle more clients
    #maxClientCnxns=60
    #
    # Be sure to read the maintenance section of the
    # administrator guide before turning on autopurge.
    #
    # http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
    #
    # The number of snapshots to retain in dataDir
    #autopurge.snapRetainCount=3
    # Purge task interval in hours
    # Set to "0" to disable auto purge feature
    #autopurge.purgeInterval=1
    server.1=master:2888:3888
    server.2=saver1:2888:3888
    server.3=saver2:2888:3888
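Most of zoo.cfg is comments, so it can help to print only the lines that actually take effect. The sketch below does this with grep. To stay self-contained it writes a shortened copy of the settings above into a temporary file (that file is an assumption of the demo); on a node you would point grep at /home/hadoop/zookeeper/conf/zoo.cfg.

```shell
# Demo copy of the settings from this post, written to a temporary file
demo_cfg="$(mktemp)"
cat > "$demo_cfg" <<'EOF'
# The number of milliseconds of each tick
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/home/hadoop/zookeeper/data
dataLogDir=/home/hadoop/zookeeper/logs
# the port at which the clients will connect
clientPort=2181
#maxClientCnxns=60
server.1=master:2888:3888
server.2=saver1:2888:3888
server.3=saver2:2888:3888
EOF

# Drop comment lines and blank lines, leaving only the active settings
effective="$(grep -Ev '^[[:space:]]*(#|$)' "$demo_cfg")"
printf '%s\n' "$effective"
```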

A brief explanation of the configuration file

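============ zookeeper configuration file notes ============

By design, the zoo.cfg file should be identical on every machine in a ZooKeeper ensemble.

tickTime: the basic time unit, in milliseconds, for interaction between servers and clients.

initLimit: the time allowed for a follower to connect to the leader and complete its initial synchronization, expressed as a multiple of tickTime. If that many ticks elapse first, the connection fails.

syncLimit: the maximum time allowed for synchronization between the leader and a follower, also expressed as a multiple of tickTime. If this interval is exceeded, the follower is disconnected from the leader.

dataDir: the path where ZooKeeper stores its data (snapshots).

dataLogDir: the path where ZooKeeper stores its transaction logs. When this setting is absent, it defaults to the same path as dataDir.

clientPort (2181): the port clients use to connect to ZooKeeper.

server.id=host:port:port

id is the number of this server. In each server's data directory (the one specified by dataDir), create a file named myid containing a single line with that server's own id. For example, server "1" writes "1" into its myid file. The id must be unique within the ensemble and lie between 1 and 255.

host is the server's hostname or IP address.

The first port (2888) is used by followers to exchange information with the leader.

The second port (3888) is used by the servers to communicate with each other during leader election, for example after the leader fails.

maxClientCnxns: limits the number of concurrent connections that a single client, identified by IP address, may make to one ZooKeeper server.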
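Because initLimit and syncLimit are counted in ticks, the real timeouts come from multiplying them by tickTime. A small worked calculation with the values from the zoo.cfg in this post:

```shell
# Values copied from the zoo.cfg in this post
tickTime=2000   # milliseconds per tick
initLimit=10    # ticks allowed for a follower's initial connect and sync
syncLimit=5     # ticks allowed between a request and its acknowledgement

init_timeout_ms=$((tickTime * initLimit))
sync_timeout_ms=$((tickTime * syncLimit))

echo "initial sync timeout: ${init_timeout_ms} ms"
echo "sync timeout: ${sync_timeout_ms} ms"
```

With these settings a follower has 20 seconds for its initial synchronization and 10 seconds for each later exchange with the leader.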

Edit /etc/profile

export ZOOKEEPER_HOME=/home/hadoop/zookeeper

export PATH=$PATH:$ZOOKEEPER_HOME/bin:$ZOOKEEPER_HOME/conf

Apply the changes:

source /etc/profile
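If this setup is scripted, appending the two export lines blindly would duplicate them on every run. The sketch below appends them only when they are missing. add_zk_env is a hypothetical helper, and the demo targets a temporary file rather than /etc/profile, since editing the real file usually needs root.

```shell
# add_zk_env is a hypothetical helper, not a ZooKeeper tool.
# It appends the ZooKeeper environment lines to a profile file only once.
add_zk_env() {
    profile="$1"
    # Skip if ZOOKEEPER_HOME is already exported in this file
    if ! grep -q '^export ZOOKEEPER_HOME=' "$profile" 2>/dev/null; then
        cat >> "$profile" <<'EOF'
export ZOOKEEPER_HOME=/home/hadoop/zookeeper
export PATH=$PATH:$ZOOKEEPER_HOME/bin:$ZOOKEEPER_HOME/conf
EOF
    fi
}

# Demo against a temporary file; calling twice still leaves a single entry
demo_profile="$(mktemp)"
add_zk_env "$demo_profile"
add_zk_env "$demo_profile"
cat "$demo_profile"
```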

On the master machine, create a file named myid in the /home/hadoop/zookeeper/data directory:

[hadoop@master data]$ more myid

1

On the saver1 machine, create the myid file:

[hadoop@saver1 data]$ more myid

2

On the saver2 machine, create the myid file:

[hadoop@saver2 data]$ more myid

3

The contents of these three myid files correspond to the numbers after "server." in the last lines of zoo.cfg.
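Since each myid must match a server.N line, the id can be derived from zoo.cfg rather than typed by hand. The sketch below assumes a hypothetical helper, myid_for_host, and runs against a temporary copy of the three server lines. On a real node you might redirect its output into /home/hadoop/zookeeper/data/myid, assuming the machine's hostname matches the name used in zoo.cfg.

```shell
# myid_for_host is a hypothetical helper, not part of ZooKeeper.
# It prints the N of the "server.N=host:port:port" line whose host matches.
myid_for_host() {
    cfg_file="$1"
    wanted_host="$2"
    awk -v h="$wanted_host" '
        /^server\.[0-9]+=/ {
            split($0, kv, "=")        # kv[1] = "server.N", kv[2] = "host:port:port"
            split(kv[2], addr, ":")   # addr[1] = host
            if (addr[1] == h) {
                sub(/^server\./, "", kv[1])
                print kv[1]
            }
        }' "$cfg_file"
}

# Demo with the three server lines from this post in a temporary file
demo_servers="$(mktemp)"
cat > "$demo_servers" <<'EOF'
server.1=master:2888:3888
server.2=saver1:2888:3888
server.3=saver2:2888:3888
EOF

for h in master saver1 saver2; do
    echo "$h -> $(myid_for_host "$demo_servers" "$h")"
done
```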

Then start ZooKeeper on all three servers:

zkServer.sh start

[hadoop@master ~]$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

You can then observe:

master

[hadoop@master ~]$ jps

17888 NameNode

18022 DataNode

18454 NodeManager

18807 Jps

18345 ResourceManager

18782 QuorumPeerMain

18191 SecondaryNameNode

[hadoop@master ~]$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper/bin/../conf/zoo.cfg

Mode: follower

saver1

[hadoop@saver1 ~]$ jps

9010 Jps

8979 QuorumPeerMain

8727 DataNode

8839 NodeManager

[hadoop@saver1 ~]$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper/bin/../conf/zoo.cfg

Mode: leader

saver2

[hadoop@saver2 ~]$ jps

8738 NodeManager

8626 DataNode

8903 Jps

8878 QuorumPeerMain

[hadoop@saver2 ~]$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper/bin/../conf/zoo.cfg

Mode: follower
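When checking several nodes, only the Mode line of the status output matters. The sketch below extracts it with sed. zk_mode is a hypothetical helper, and the demo feeds it sample text in the shape shown in this post, so no live server is contacted. On a node you could pipe the output of zkServer.sh status into it.

```shell
# zk_mode is a hypothetical helper: it reads "zkServer.sh status" output
# on stdin and prints only the value that follows "Mode:".
zk_mode() {
    sed -n 's/^Mode:[[:space:]]*//p'
}

# Sample output in the shape shown in this post (no live server is contacted)
sample_status='ZooKeeper JMX enabled by default
Using config: /home/hadoop/zookeeper/bin/../conf/zoo.cfg
Mode: follower'

mode="$(printf '%s\n' "$sample_status" | zk_mode)"
echo "mode=$mode"
```

As in the output above, a healthy three-node ensemble reports exactly one leader, and the other two nodes report follower.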

Then start HBase:

[hadoop@master ~]$ start-hbase.sh

running master, logging to /home/hadoop/hbase/logs/hbase-hadoop-master-master.out

saver1: running regionserver, logging to /home/hadoop/hbase/bin/../logs/hbase-hadoop-regionserver-saver1.out

saver2: running regionserver, logging to /home/hadoop/hbase/bin/../logs/hbase-hadoop-regionserver-saver2.out

[hadoop@master ~]$ jps

19168 Jps

17888 NameNode

18022 DataNode

18454 NodeManager

18983 HMaster

18345 ResourceManager

18782 QuorumPeerMain

18191 SecondaryNameNode

Starting and stopping

zkServer.sh start

zkServer.sh stop

zkServer.sh restart

zkServer.sh status
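These commands act on one node at a time, so a small loop can save logging in to each machine. The following is only a sketch under assumptions not stated in this post: zk_all and the ZK_RUN switch are hypothetical names, passwordless SSH to every node is assumed, and so is a remote environment in which Java can be found. By default it performs a dry run and only prints the commands.

```shell
# zk_all is a hypothetical wrapper, not part of ZooKeeper.
# Assumes passwordless SSH to every node listed in ZK_HOSTS.
ZK_HOSTS="master saver1 saver2"
ZK_SCRIPT="/home/hadoop/zookeeper/bin/zkServer.sh"

zk_all() {
    action="$1"   # start, stop, restart or status
    for host in $ZK_HOSTS; do
        if [ "${ZK_RUN:-0}" = "1" ]; then
            ssh "$host" "$ZK_SCRIPT $action"
        else
            # Dry run (default): only print the command that would be executed
            echo "ssh $host $ZK_SCRIPT $action"
        fi
    done
}

# Dry run: prints one line per node without contacting any server
zk_all status
```

Setting ZK_RUN=1 before calling zk_all would execute the commands over SSH instead of printing them.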

Front-end view:

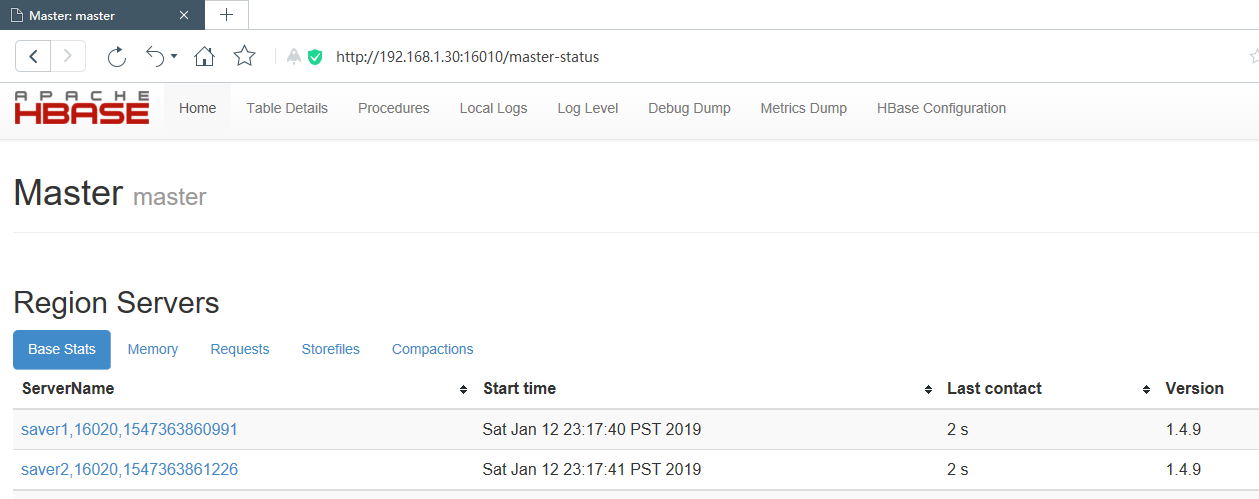

Master node:

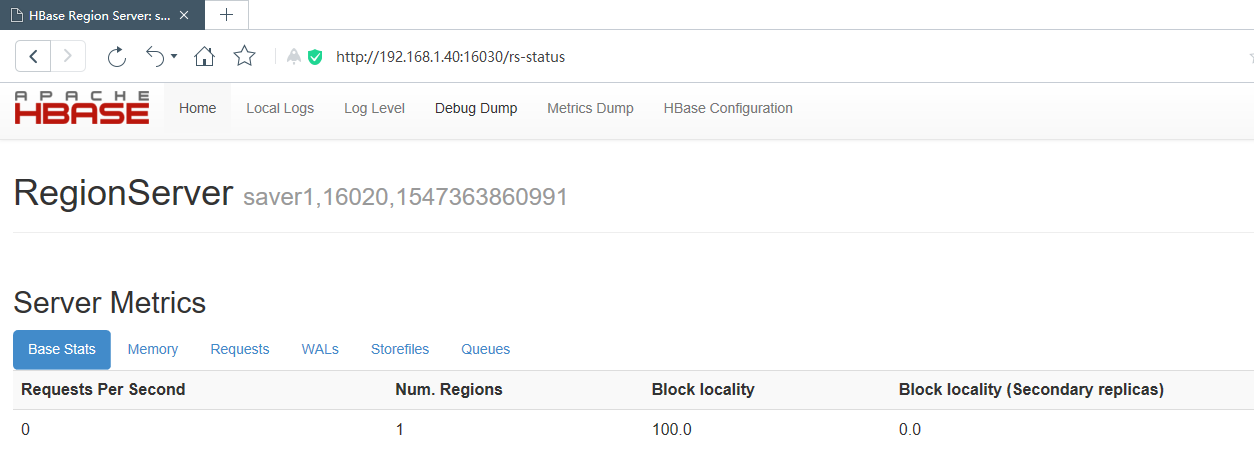

saver nodes

End.