Kafka error: Caused by: java.lang.OutOfMemoryError: Map failed

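Notes on a Kafka OOM error.

Here is the situation: I installed ZooKeeper and Kafka on my own Windows 10 machine for debugging.

The first start was fine, and both the consumer and the producer ran normally.

Then I tried producing data in a loop from code, and Kafka crashed.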

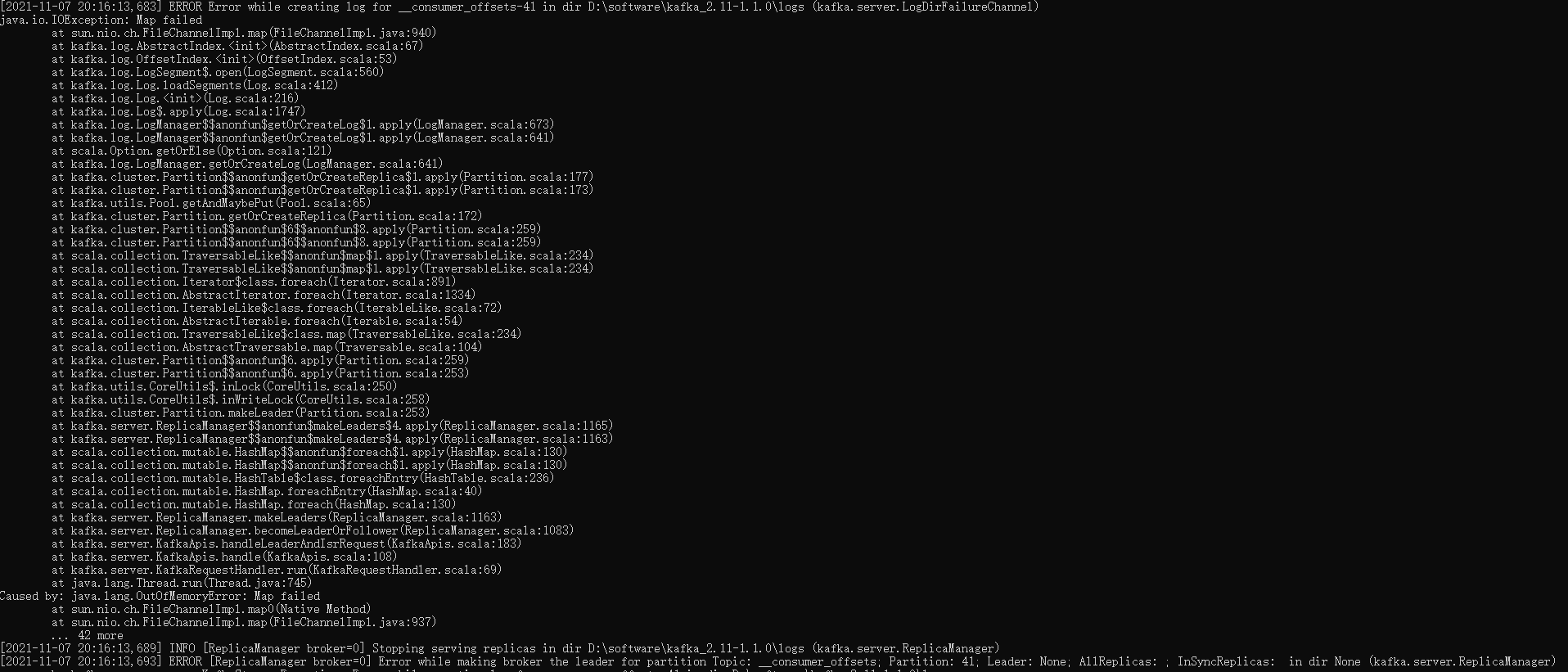

After that I restarted Kafka, and it would no longer start at all. The startup log showed an OOM. The error output was:

[2021-11-07 20:16:13,683] ERROR Error while creating log for __consumer_offsets-41 in dir D:\software\kafka_2.11-1.1.0\logs (kafka.server.LogDirFailureChannel)
java.io.IOException: Map failed
    at sun.nio.ch.FileChannelImpl.map(FileChannelImpl.java:940)
    at kafka.log.AbstractIndex.<init>(AbstractIndex.scala:67)
    at kafka.log.OffsetIndex.<init>(OffsetIndex.scala:53)
    at kafka.log.LogSegment$.open(LogSegment.scala:560)
    at kafka.log.Log.loadSegments(Log.scala:412)
    at kafka.log.Log.<init>(Log.scala:216)
    at kafka.log.Log$.apply(Log.scala:1747)
    at kafka.log.LogManager$$anonfun$getOrCreateLog$1.apply(LogManager.scala:673)
    at kafka.log.LogManager$$anonfun$getOrCreateLog$1.apply(LogManager.scala:641)
    at scala.Option.getOrElse(Option.scala:121)
    at kafka.log.LogManager.getOrCreateLog(LogManager.scala:641)
    at kafka.cluster.Partition$$anonfun$getOrCreateReplica$1.apply(Partition.scala:177)
    at kafka.cluster.Partition$$anonfun$getOrCreateReplica$1.apply(Partition.scala:173)
    at kafka.utils.Pool.getAndMaybePut(Pool.scala:65)
    at kafka.cluster.Partition.getOrCreateReplica(Partition.scala:172)
    at kafka.cluster.Partition$$anonfun$6$$anonfun$8.apply(Partition.scala:259)
    at kafka.cluster.Partition$$anonfun$6$$anonfun$8.apply(Partition.scala:259)
    at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
    at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
    at scala.collection.Iterator$class.foreach(Iterator.scala:891)
    at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)
    at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)
    at scala.collection.AbstractIterable.foreach(Iterable.scala:54)
    at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)
    at scala.collection.AbstractTraversable.map(Traversable.scala:104)
    at kafka.cluster.Partition$$anonfun$6.apply(Partition.scala:259)
    at kafka.cluster.Partition$$anonfun$6.apply(Partition.scala:253)
    at kafka.utils.CoreUtils$.inLock(CoreUtils.scala:250)
    at kafka.utils.CoreUtils$.inWriteLock(CoreUtils.scala:258)
    at kafka.cluster.Partition.makeLeader(Partition.scala:253)
    at kafka.server.ReplicaManager$$anonfun$makeLeaders$4.apply(ReplicaManager.scala:1165)
    at kafka.server.ReplicaManager$$anonfun$makeLeaders$4.apply(ReplicaManager.scala:1163)
    at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
    at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
    at scala.collection.mutable.HashTable$class.foreachEntry(HashTable.scala:236)
    at scala.collection.mutable.HashMap.foreachEntry(HashMap.scala:40)
    at scala.collection.mutable.HashMap.foreach(HashMap.scala:130)
    at kafka.server.ReplicaManager.makeLeaders(ReplicaManager.scala:1163)
    at kafka.server.ReplicaManager.becomeLeaderOrFollower(ReplicaManager.scala:1083)
    at kafka.server.KafkaApis.handleLeaderAndIsrRequest(KafkaApis.scala:183)
    at kafka.server.KafkaApis.handle(KafkaApis.scala:108)
    at kafka.server.KafkaRequestHandler.run(KafkaRequestHandler.scala:69)
    at java.lang.Thread.run(Thread.java:745)
Caused by: java.lang.OutOfMemoryError: Map failed
    at sun.nio.ch.FileChannelImpl.map0(Native Method)
    at sun.nio.ch.FileChannelImpl.map(FileChannelImpl.java:937)
    ... 42 more
[2021-11-07 20:16:13,689] INFO [ReplicaManager broker=0] Stopping serving replicas in dir D:\software\kafka_2.11-1.1.0\logs (kafka.server.ReplicaManager)
[2021-11-07 20:16:13,693] ERROR [ReplicaManager broker=0] Error while making broker the leader for partition Topic: __consumer_offsets; Partition: 41; Leader: None; AllReplicas: ; InSyncReplicas: in dir None (kafka.server.ReplicaManager)
org.apache.kafka.common.errors.KafkaStorageException: Error while creating log for __consumer_offsets-41 in dir D:\software\kafka_2.11-1.1.0\logs
Caused by: java.io.IOException: Map failed
    at sun.nio.ch.FileChannelImpl.map(FileChannelImpl.java:940)
    at kafka.log.AbstractIndex.<init>(AbstractIndex.scala:67)
    at kafka.log.OffsetIndex.<init>(OffsetIndex.scala:53)
    at kafka.log.LogSegment$.open(LogSegment.scala:560)
    at kafka.log.Log.loadSegments(Log.scala:412)
    at kafka.log.Log.<init>(Log.scala:216)
    at kafka.log.Log$.apply(Log.scala:1747)
    at kafka.log.LogManager$$anonfun$getOrCreateLog$1.apply(LogManager.scala:673)
    at kafka.log.LogManager$$anonfun$getOrCreateLog$1.apply(LogManager.scala:641)
    at scala.Option.getOrElse(Option.scala:121)
    at kafka.log.LogManager.getOrCreateLog(LogManager.scala:641)
    at kafka.cluster.Partition$$anonfun$getOrCreateReplica$1.apply(Partition.scala:177)
    at kafka.cluster.Partition$$anonfun$getOrCreateReplica$1.apply(Partition.scala:173)
    at kafka.utils.Pool.getAndMaybePut(Pool.scala:65)
    at kafka.cluster.Partition.getOrCreateReplica(Partition.scala:172)
    at kafka.cluster.Partition$$anonfun$6$$anonfun$8.apply(Partition.scala:259)
    at kafka.cluster.Partition$$anonfun$6$$anonfun$8.apply(Partition.scala:259)
    at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
    at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
    at scala.collection.Iterator$class.foreach(Iterator.scala:891)
    at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)
    at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)
    at scala.collection.AbstractIterable.foreach(Iterable.scala:54)
    at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)
    at scala.collection.AbstractTraversable.map(Traversable.scala:104)
    at kafka.cluster.Partition$$anonfun$6.apply(Partition.scala:259)
    at kafka.cluster.Partition$$anonfun$6.apply(Partition.scala:253)
    at kafka.utils.CoreUtils$.inLock(CoreUtils.scala:250)
    at kafka.utils.CoreUtils$.inWriteLock(CoreUtils.scala:258)
    at kafka.cluster.Partition.makeLeader(Partition.scala:253)
    at kafka.server.ReplicaManager$$anonfun$makeLeaders$4.apply(ReplicaManager.scala:1165)
    at kafka.server.ReplicaManager$$anonfun$makeLeaders$4.apply(ReplicaManager.scala:1163)
    at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
    at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:130)
    at scala.collection.mutable.HashTable$class.foreachEntry(HashTable.scala:236)
    at scala.collection.mutable.HashMap.foreachEntry(HashMap.scala:40)
    at scala.collection.mutable.HashMap.foreach(HashMap.scala:130)
    at kafka.server.ReplicaManager.makeLeaders(ReplicaManager.scala:1163)
    at kafka.server.ReplicaManager.becomeLeaderOrFollower(ReplicaManager.scala:1083)
    at kafka.server.KafkaApis.handleLeaderAndIsrRequest(KafkaApis.scala:183)
    at kafka.server.KafkaApis.handle(KafkaApis.scala:108)
    at kafka.server.KafkaRequestHandler.run(KafkaRequestHandler.scala:69)
    at java.lang.Thread.run(Thread.java:745)
Caused by: java.lang.OutOfMemoryError: Map failed
    at sun.nio.ch.FileChannelImpl.map0(Native Method)
    at sun.nio.ch.FileChannelImpl.map(FileChannelImpl.java:937)
    ... 42 more
[2021-11-07 20:16:13,836] ERROR Error while creating log for __consumer_offsets-32 in dir D:\software\kafka_2.11-1.1.0\logs (kafka.server.LogDirFailureChannel)
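
The trace shows the OutOfMemoryError coming from FileChannelImpl.map, called while kafka.log.AbstractIndex opens an index file, so the failure is in memory-mapping a file rather than in the Java heap filling up. Below is a rough back-of-the-envelope sketch of how much address space those mappings might ask for. Every input is an assumed Kafka default (50 partitions for __consumer_offsets, one offset index plus one time index per segment, each index sized up to 10 MiB), not a value read from the setup in this post, and the helper name mapped_index_bytes is made up for illustration. Treat it as an upper-bound estimate, not a diagnosis.

```python
# Upper-bound estimate of the address space requested for memory-mapped
# index files. All three constants are ASSUMED Kafka defaults, not values
# taken from the author's server.properties.

OFFSETS_TOPIC_PARTITIONS = 50        # assumed default offsets.topic.num.partitions
INDEX_FILES_PER_SEGMENT = 2          # assumed: offset index + time index
INDEX_MAX_BYTES = 10 * 1024 * 1024   # assumed default log.index.size.max.bytes


def mapped_index_bytes(partitions,
                       index_files=INDEX_FILES_PER_SEGMENT,
                       index_bytes=INDEX_MAX_BYTES):
    """Bytes mapped if each partition has one active segment whose
    index files are each sized to the maximum index size."""
    return partitions * index_files * index_bytes


if __name__ == "__main__":
    total = mapped_index_bytes(OFFSETS_TOPIC_PARTITIONS)
    print(f"{total / 1024 ** 2:.0f} MiB")  # prints "1000 MiB"
```

Under these assumptions the internal offsets topic alone accounts for roughly 1000 MiB of mappings, on top of the heap and any other topics.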

How I solved it:

First I tried restarting Kafka, deleting Kafka's logs, restarting ZooKeeper, and even rebooting Windows. None of it helped.

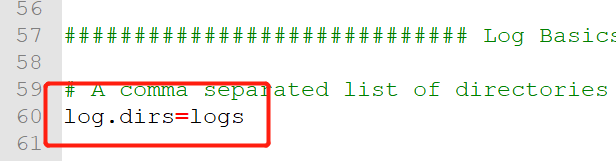

Kafka's log path: the log.dirs=logs setting in %KAFKA_HOME%\config\server.properties

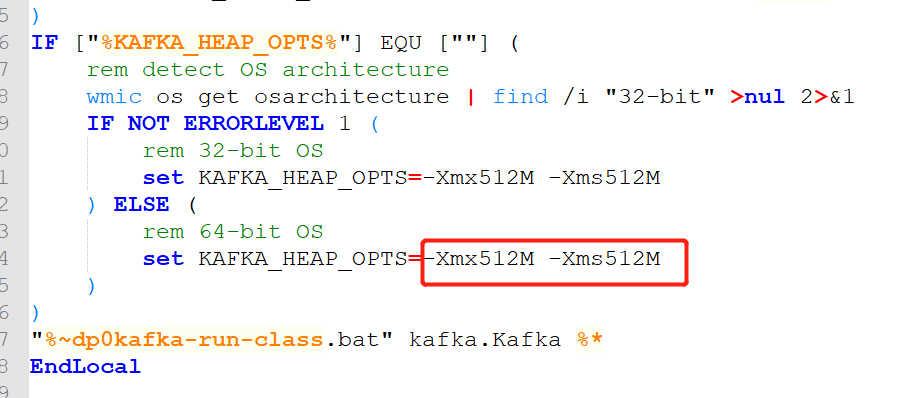
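
To double-check which directory a config file actually points at, a small helper can pull a single key out of it. This is a minimal sketch: read_property is a hypothetical name, the parser only understands plain key=value lines and comments (no line continuations or ":" separators), and the sample text is made up apart from the log.dirs=logs entry quoted above.

```python
def read_property(text, key):
    """Return the value of `key` from simple key=value text, or None.

    Simplified on purpose: blank lines and lines starting with '#' or '!'
    are skipped, and only '=' is treated as the separator.
    """
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        name, sep, value = line.partition("=")
        if sep and name.strip() == key:
            return value.strip()
    return None


if __name__ == "__main__":
    # Made-up excerpt; only the log.dirs line comes from this post.
    sample = "# A comma separated list of directories\nlog.dirs=logs\n"
    print(read_property(sample, "log.dirs"))  # prints "logs"
```

A relative value such as logs is resolved against the directory Kafka is started from, which matches the D:\software\kafka_2.11-1.1.0\logs path in the error output.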

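Next I searched online for a fix and changed the JVM parameters in kafka-server-start.bat, lowering the two 1G values (the -Xmx and -Xms heap sizes) to 512M. With that change Kafka would start and run briefly, then die again almost immediately.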

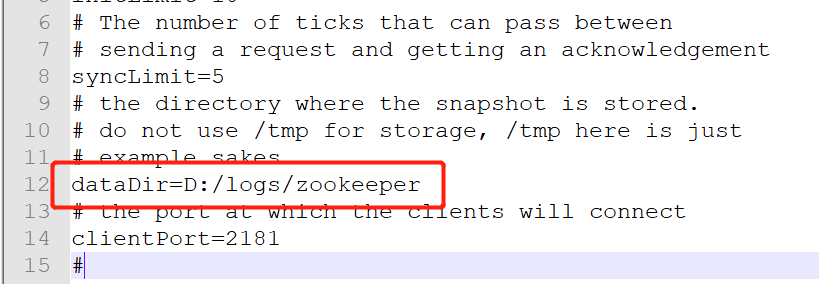

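Finally, I noticed that every time Kafka restarted, a pile of files appeared under logs again, even though I had confirmed that I deleted them by hand before each start. That was strange, and I could not tell where this data was being cached. In the end I deleted all the log files under ZooKeeper's logs directory as well, and Kafka started fine.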

ZooKeeper's log path: the dataDir=D:/logs/zookeeper setting in %ZOOKEEPER%conf\zoo.cfg
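
The cleanup that finally worked can be sketched as a small script. This is a minimal sketch, assuming both Kafka and ZooKeeper are stopped and that this is a throwaway local setup: emptying ZooKeeper's data directory discards all topic metadata. The function name clear_directory is hypothetical, and the two paths are the ones quoted in this post, so adjust them to your own install.

```python
import shutil
from pathlib import Path


def clear_directory(directory):
    """Delete everything inside `directory` but keep the directory itself.

    Returns the names of the removed entries; returns an empty list if
    the directory does not exist.
    """
    root = Path(directory)
    removed = []
    if not root.is_dir():
        return removed
    for child in sorted(root.iterdir()):
        if child.is_dir() and not child.is_symlink():
            shutil.rmtree(child)   # partition folders such as __consumer_offsets-41
        else:
            child.unlink()         # plain files and symlinks
        removed.append(child.name)
    return removed


if __name__ == "__main__":
    # Stop Kafka and ZooKeeper first. Paths as they appear in this post.
    for path in (r"D:\software\kafka_2.11-1.1.0\logs", "D:/logs/zookeeper"):
        print(path, clear_directory(path))
```

Clearing both directories in the same pass avoids the situation described above, where only Kafka's side was emptied and the files came back on the next start.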

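Conclusion: the OOM at Kafka startup was mainly caused by data held in ZooKeeper, which Kafka depends on. As long as that data was still there, the files under Kafka's logs directory came back on every start, so deleting Kafka's logs alone was never enough. Surprised?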

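Note: the problem that tormented me for two days is finally solved. Some screenshots are still missing and will be added later. It is Friday today, so let me get some proper rest first.

This article is from cnblogs (博客园), author: zhangpba. Please cite the original link when reposting: https://www.cnblogs.com/zhangpb/p/15515600.html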
