Building a K8s Cluster

https://www.cnblogs.com/xhyan/p/6655731.html

http://www.voidcn.com/article/p-ufrldnfn-bsd.html

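Kubernetes is a distributed cluster system built by Google on top of Docker. It consists of the following components:

etcd: a highly available store for shared configuration and service discovery. Here it works together with flannel on the minion machines so that Docker on each minion gets a different IP range. The end goal is that every running Docker container has an IP address distinct from that of any container running on another minion.

flannel: network fabric support

kube-apiserver: all control goes through the apiserver, whether it comes from kubectl or from direct calls to the remote API

kube-controller-manager: runs the control loops for the replication controller, endpoints controller, namespace controller, and serviceaccounts controller, and interacts with kube-apiserver to keep these controllers working

kube-scheduler: the Kubernetes scheduler assigns Pods to worker nodes (minions) according to a scheduling algorithm; this step is also called binding (bind)

kubelet: the kubelet runs on each Kubernetes minion node. It is the logical successor of the Container Agent

kube-proxy: kube-proxy is a component that runs on the minion nodes and acts as a service proxy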

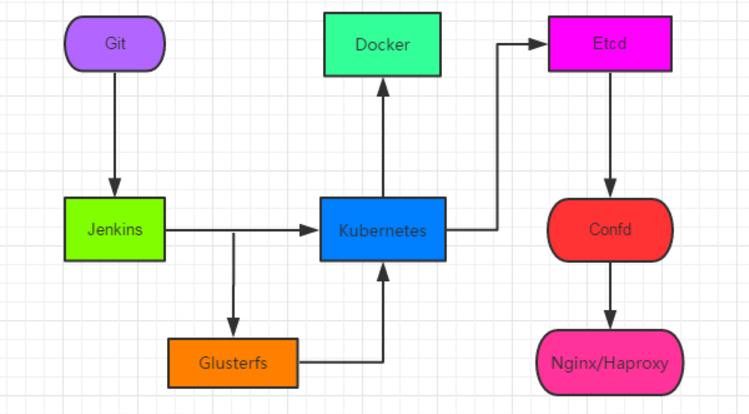

The figure above shows a GIT + Jenkins + Kubernetes + Docker + Etcd + confd + Nginx + Glusterfs architecture.

Environment:

Three CentOS 7 machines; the two used in this walkthrough are:

192.168.1.165: Kubernetes master

192.168.1.247: Kubernetes minion (minion1)

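I. Disable the firewall and SELinux

1. If the firewall is running, stop and disable it as follows (on all machines)

# systemctl stop firewalld

# systemctl disable firewalld

2. Disable SELinux

# setenforce 0

# sed -i '/^SELINUX=/cSELINUX=disabled' /etc/selinux/config

Note: on CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config. Running sed -i on the symlink replaces the link with a regular file and leaves the real configuration unchanged, so the command above edits the target file directly.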
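The effect of that substitution can be checked safely before touching the real file. The sketch below is only an illustration: the function name disable_selinux_in and the sample file content are made up for this example, and it assumes GNU sed (as shipped with CentOS 7). It uses an s/// substitution, which should be equivalent to the c command used above.

```shell
#!/bin/sh
# disable_selinux_in FILE (hypothetical helper): rewrite the SELINUX= line
# of FILE to SELINUX=disabled, leaving every other line untouched.
disable_selinux_in() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Try it on a scratch file with made-up sample content, not on /etc.
tmp=$(mktemp)
printf '%s\n' '# sample config' 'SELINUX=enforcing' 'SELINUXTYPE=targeted' > "$tmp"
disable_selinux_in "$tmp"
cat "$tmp"
rm -f "$tmp"
```

Note that the pattern ^SELINUX= does not match the SELINUXTYPE= line, so only the mode setting changes.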

II. Master installation and configuration

1. Install and configure the Kubernetes master (via yum)

yum -y install etcd kubernetes

Configure etcd. Make sure the entries listed here are set correctly and are not commented out; the same applies to every configuration file below.

# vim /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_NAME="default"

ETCD_ADVERTISE_CLIENT_URLS="http://0.0.0.0:2379"

Configure the Kubernetes apiserver

vim /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--address=0.0.0.0"

KUBE_API_PORT="--port=8080"

KUBELET_PORT="--kubelet_port=10250"

KUBE_ETCD_SERVERS="--etcd_servers=http://192.168.1.165:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

KUBE_ADMISSION_CONTROL="--admission_control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

KUBE_API_ARGS=""

2. Start the etcd, kube-apiserver, kube-controller-manager and kube-scheduler services

for SERVICES in etcd kube-apiserver kube-controller-manager kube-scheduler; do systemctl restart $SERVICES ;systemctl enable $SERVICES ;systemctl status $SERVICES ; done;

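3. Set the flannel network configuration in etcd

# etcdctl -C 192.168.1.165:2379 set /atomic.io/network/config '{"Network":"10.1.0.0/16"}'

This fails with the following error:

parse 192.168.1.165:2379: first path segment in URL cannot contain colon

Fix: the endpoint must be a full URL that includes the http:// scheme: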

etcdctl -C http://127.0.0.1:2379 set /atomic.io/network/config '{"Network":"10.1.0.0/16"}'

{"Network":"10.1.0.0/16"}
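The error above comes from passing an endpoint without a URL scheme. As a minimal sketch, a small helper can add the scheme when it is missing; the function name normalize_endpoint is hypothetical and not part of etcdctl.

```shell
#!/bin/sh
# normalize_endpoint ADDR (hypothetical helper): print ADDR unchanged if it
# already starts with http:// or https://, otherwise prepend http://.
normalize_endpoint() {
    case "$1" in
        http://*|https://*) printf '%s\n' "$1" ;;
        *)                  printf 'http://%s\n' "$1" ;;
    esac
}

normalize_endpoint 192.168.1.165:2379      # prints http://192.168.1.165:2379
normalize_endpoint http://127.0.0.1:2379   # prints http://127.0.0.1:2379
```

It could then be used as etcdctl -C "$(normalize_endpoint 192.168.1.165:2379)" set /atomic.io/network/config '{"Network":"10.1.0.0/16"}'.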

4. The master is now configured. Running kubectl get nodes shows how many minions are running and their status. Since no minion has been installed or configured yet, the result is empty.

kubectl get nodes

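III. Minion installation and configuration (repeat on every minion machine)

1. Install and configure the environment

yum -y install flannel kubernetes

Configure the server IP that Kubernetes connects to

vim /etc/kubernetes/config and change the following two settings

KUBE_MASTER="--master=http://192.168.1.165:8080"

KUBE_ETCD_SERVERS="--etcd_servers=http://192.168.1.165:2379"

Configure the kubelet (use each minion's own IP address, 192.168.1.247 in this example, as the --hostname-override value)

# vim /etc/kubernetes/kubelet (the settings highlighted in red in the original post need adjusting; see the later steps, otherwise Pod creation will fail)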

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_HOSTNAME="--hostname-override=192.168.1.247"

KUBELET_API_SERVER="--api-servers=http://192.168.1.165:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS=""
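Because only the --hostname-override value differs between minions, the kubelet settings above can be generated from two IP addresses. This is a sketch under that assumption; render_kubelet_conf is a hypothetical helper name, and the output would still need to be merged into /etc/kubernetes/kubelet by hand.

```shell
#!/bin/sh
# render_kubelet_conf LOCALIP MASTERIP (hypothetical helper): print the
# kubelet settings shown above for one minion.
render_kubelet_conf() {
    local_ip=$1
    master_ip=$2
    cat <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_HOSTNAME="--hostname-override=${local_ip}"
KUBELET_API_SERVER="--api-servers=http://${master_ip}:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
EOF
}

# Settings for the minion used in this walkthrough.
render_kubelet_conf 192.168.1.247 192.168.1.165
```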

2. Prepare to start the services (only needed if Docker has already been run on this machine; otherwise skip this step)

Run ifconfig and check whether the network configuration contains a docker0 interface

    # ifconfig
    docker0   Link encap:Ethernet  HWaddr 02:42:B2:75:2E:67
              inet addr:172.17.0.1  Bcast:0.0.0.0  Mask:255.255.0.0
              UP BROADCAST MULTICAST  MTU:1500  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

Warning: on a machine that has run Docker before, docker0 is present. Delete the docker0 interface before starting the services by running: sudo ip link delete docker0

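3. Configure the flannel network

# vim /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.1.165:2379"

FLANNEL_ETCD_PREFIX="/atomic.io/network"

Note: the prefix /atomic.io/network must match the etcd key under which the Network setting was stored earlier (/atomic.io/network/config).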
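flannel reads its network configuration from the key formed by appending /config to the configured prefix, which is why the prefix and the key written with etcdctl have to agree. The sketch below derives that key from a prefix; flannel_config_key is a hypothetical helper name.

```shell
#!/bin/sh
# flannel_config_key PREFIX (hypothetical helper): print the etcd key that
# holds the flannel network configuration for PREFIX. One trailing slash
# on PREFIX is dropped so the result has no double slash.
flannel_config_key() {
    printf '%s/config\n' "${1%/}"
}

flannel_config_key /atomic.io/network   # prints /atomic.io/network/config
```

Comparing this output with the key used in the etcdctl set command is a quick way to catch a mismatched prefix.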

4. Start the services

for SERVICES in flanneld kube-proxy kubelet docker; do systemctl restart $SERVICES ;systemctl enable $SERVICES ;systemctl status $SERVICES; done

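Confirm that the minion (192.168.1.247) and the master (192.168.1.165) are both installed and configured and that their services have started.

Switch to the master and run kubectl get nodes

kubectl get nodes

NAME STATUS AGE

192.168.1.247 Ready 6h

The configured minion now appears in the master's node list. To add more nodes, configure more machines in the same way as this minion.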
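When more minions are added, counting the Ready rows by eye becomes tedious. A minimal sketch, assuming the three-column NAME STATUS AGE output shown above; count_ready_nodes is a hypothetical helper that reads that output on standard input.

```shell
#!/bin/sh
# count_ready_nodes (hypothetical helper): read `kubectl get nodes` output
# on stdin, skip the header row, and print how many rows have a STATUS
# column of exactly "Ready".
count_ready_nodes() {
    awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Sample input copied from the output above; prints 1.
printf '%s\n' 'NAME STATUS AGE' '192.168.1.247 Ready 6h' | count_ready_nodes
```

On the master it would be used as kubectl get nodes | count_ready_nodes.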

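III. A Closer Look at Pods

2. Basic Pod usage:

With Docker, we can create and start a container with the docker run command. In Kubernetes, a long-running container has one requirement: its main program must keep running in the foreground. Suppose the start command of our Docker image runs a program in the background, for example this Linux script:

nohup ./startup.sh &

After the kubelet creates the Pod containing this container, the command returns immediately and the Pod is considered finished. The RC then creates a new Pod to satisfy the replicas count defined for it, and the new Pod ends the same way as soon as its command returns, so the cycle repeats endlessly. This is why Kubernetes requires the Docker images we build to use a foreground command as their start command.

For applications that cannot be changed to run in the foreground, the open-source tool supervisor can be used to provide a foreground process.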
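One common way to satisfy the foreground requirement described above is to end the image's start script with exec, so the application replaces the shell and remains the container's main process. The following is a sketch only: run_in_foreground is a hypothetical helper, and echo stands in for the real application.

```shell
#!/bin/sh
# run_in_foreground CMD [ARGS...] (hypothetical helper): replace the
# current shell with CMD, so CMD itself stays in the foreground instead
# of being detached with nohup ... &.
run_in_foreground() {
    exec "$@"
}

# Run in a subshell here so that exec does not replace the calling shell.
( run_in_foreground echo "application running in foreground" )
```

In a real start script the last line would be something like exec ./startup.sh, provided startup.sh itself does not send its work to the background.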

A Pod can consist of one or more containers

For example, when a frontend application container and a redis container are tightly coupled and should provide a service as a single unit, package the two into one Pod.

The configuration file frontend-localredis-pod.yaml is shown below. I typed it by hand at first instead of copying it, and YAML is strict about formatting, so it helps to run kubectl create -f frontend-localredis-pod.yaml --dry-run --validate=true to find out where the file is wrong.

    apiVersion: v1
    kind: Pod
    metadata:
      name: redis-php
      labels:
        name: redis-php
    spec:
      containers:
      - name: frontend
        image: kubeguide/guestbook-php-frontend:localredis
        ports:
        - containerPort: 80
      - name: redis-php
        image: kubeguide/redis-master
        ports:
        - containerPort: 6379

After Kubernetes started the Pod, its status never became healthy. Inspecting the Pod showed:

image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)

The fix suggested online: yum install *rhsm* -y

Failed to create pod infra container: ImagePullBackOff; Skipping pod "redis-master-jj6jw_default(fec25a87-cdbe-11e7-ba32-525400cae48b)": Back-off pulling image "registry.access.redhat.com/rhel7/pod-infrastructure:latest

Workaround: try pulling the image manually

docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

docker pull still fails:

open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory

The file redhat-ca.crt indeed does not exist, and registry.access.redhat.com/rhel7/pod-infrastructure:latest is pulled over HTTPS by default.

Final solution:

1. Search for pod-infrastructure images

docker search pod-infrastructure

INDEX NAME DESCRIPTION STARS OFFICIAL AUTOMATED

docker.io docker.io/openshift/origin-pod The pod infrastructure image for OpenShift 3 8

docker.io docker.io/davinkevin/podcast-server Container around the Podcast-Server Applic... 5

docker.io docker.io/infrastructureascode/aws-cli Containerized AWS CLI on alpine to avoid r... 4 [OK]

docker.io docker.io/newrelic/infrastructure Public image for New Relic Infrastructure. 4

docker.io docker.io/infrastructureascode/uwsgi uWSGI application server 2 [OK]

docker.io docker.io/infrastructureascode/serf A tiny Docker image with HashiCorp Serf us... 1 [OK]

docker.io docker.io/mosquitood/k8s-rhel7-pod-infrastructure 1

docker.io docker.io/podigg/podigg-lc-hobbit A HOBBIT dataset generator wrapper for PoDiGG 1 [OK]

docker.io docker.io/stefanprodan/podinfo Kubernetes multi-arch pod info 1

docker.io docker.io/tianyebj/pod-infrastructure registry.access.redhat.com/rhel7/pod-infra... 1

docker.io docker.io/w564791/pod-infrastructure latest 1

docker.io docker.io/infrastructureascode/hello-world A tiny "Hello World" web server with a hea... 0 [OK]

docker.io docker.io/jqka/pod-infrastructure redhat pod 0 [OK]

docker.io docker.io/ocpqe/hello-pod Copy form docker.io/deshuai/hello-pod:latest 0

docker.io docker.io/oudi/pod-infrastructure pod-infrastructure 0 [OK]

docker.io docker.io/sebastianhutter/podcaster python script to download podcasts https:/... 0 [OK]

docker.io docker.io/shadowalker911/pod-infrastructure 0

docker.io docker.io/statemood/pod-infrastructure Automated build from registry.access.redha... 0 [OK]

docker.io docker.io/tfgco/podium Podium is a blazing-fast player ranking se... 0

docker.io docker.io/trancong/pod2consul register pod with consul 0

docker.io docker.io/tundradotcom/podyn dockerized Podyn 0

docker.io docker.io/vistalba/podget Podget Docker with rename included. 0 [OK]

docker.io docker.io/wedeploy/infrastructure 0

docker.io docker.io/xplenty/rhel7-pod-infrastructure registry.access.redhat.com/rhel7/pod-infra... 0

docker.io docker.io/zengshaoyong/pod-infrastructure pod-infrastructure 0 [OK]

2. vi /etc/kubernetes/kubelet

Replace the image with the first result above. (Deven's note: I later changed it back and the error did not return, so this step may not be necessary.)

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=docker.io/openshift/origin-pod"

3. Restart the services

systemctl restart kube-apiserver

systemctl restart kube-controller-manager

systemctl restart kube-scheduler

systemctl restart kubelet

systemctl restart kube-proxy

4. Run kubectl get pods; the earlier Pods are now in the Running state.
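The edit in step 2 above can also be scripted, which helps when several minions need the same change. This is a sketch assuming GNU sed; set_pod_infra_image is a hypothetical helper, and the demonstration runs on a scratch file rather than on /etc/kubernetes/kubelet.

```shell
#!/bin/sh
# set_pod_infra_image FILE IMAGE (hypothetical helper): rewrite the
# KUBELET_POD_INFRA_CONTAINER line of FILE to use IMAGE. The image name
# must not contain the | character, which is the sed delimiter here.
set_pod_infra_image() {
    sed -i "s|^KUBELET_POD_INFRA_CONTAINER=.*|KUBELET_POD_INFRA_CONTAINER=\"--pod-infra-container-image=$2\"|" "$1"
}

# Demonstration on a scratch copy holding the original setting.
tmp=$(mktemp)
printf '%s\n' 'KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"' > "$tmp"
set_pod_infra_image "$tmp" docker.io/openshift/origin-pod
cat "$tmp"
rm -f "$tmp"
```

After changing the real file, the kubelet still has to be restarted as in step 3.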

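Containers that belong to the same Pod can reach each other simply through localhost, because the whole group of containers is bound into one shared environment.

After creating the Pod with kubectl create, kubectl get pods shows the following:

# kubectl get pods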

NAME READY STATUS RESTARTS AGE

myweb-1rr24 1/1 Running 1 27m

redis-php 2/2 Running 0 16s

View the Pod's details to see the definitions of both containers and how they were created.

# kubectl describe pods redis-php

Name: redis-php

Namespace: default

Node: 192.168.1.247/192.168.1.247

Start Time: Sat, 04 Aug 2018 17:37:53 +0800

Labels: name=redis-php

Status: Running

IP: 10.1.49.3

Controllers: <none>

Containers:

frontend:

Container ID: docker://1c109acce5c81f57f7c02619c489855ae67ece114fdfa104189521e1f2fc052b

Image: kubeguide/guestbook-php-frontend:localredis

Image ID: docker-pullable://docker.io/kubeguide/guestbook-php-frontend@sha256:37c2c1dcfcf0a51bf9531430fe057bcb1d4b94c64048be40ff091f01e384f81e

Port: 80/TCP

State: Running

Started: Sat, 04 Aug 2018 17:37:54 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables: <none>

redis-php:

Container ID: docker://6edf7724a548f178975eb9abcbee675788720a5d804867124f1dc454e7e3b058

Image: kubeguide/redis-master

Image ID: docker-pullable://docker.io/kubeguide/redis-master@sha256:e11eae36476b02a195693689f88a325b30540f5c15adbf531caaecceb65f5b4d

Port: 6379/TCP

State: Running

Started: Sat, 04 Aug 2018 17:38:00 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables: <none>

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

2m 2m 1 {default-scheduler } Normal Scheduled Successfully assigned redis-php to 192.168.1.247

2m 2m 1 {kubelet 192.168.1.247} spec.containers{frontend} Normal Pulled Container image "kubeguide/guestbook-php-frontend:localredis" already present on machine

2m 2m 1 {kubelet 192.168.1.247} spec.containers{frontend} Normal Created Created container with docker id 1c109acce5c8; Security:[seccomp=unconfined]

2m 2m 1 {kubelet 192.168.1.247} spec.containers{frontend} Normal Started Started container with docker id 1c109acce5c8

2m 2m 1 {kubelet 192.168.1.247} spec.containers{redis-php} Normal Pulling pulling image "kubeguide/redis-master"

2m 2m 3 {kubelet 192.168.1.247} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

2m 2m 1 {kubelet 192.168.1.247} spec.containers{redis-php} Normal Pulled Successfully pulled image "kubeguide/redis-master"

2m 2m 1 {kubelet 192.168.1.247} spec.containers{redis-php} Normal Created Created container with docker id 6edf7724a548; Security:[seccomp=unconfined]

2m 2m 1 {kubelet 192.168.1.247} spec.containers{redis-php} Normal Started Started container with docker id 6edf7724a548

IV. Creating an RC (Replication Controller)

vim myweb-rc.yaml

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: myweb
    spec:
      replicas: 2
      selector:
        name: myweb
      template:
        metadata:
          labels:
            name: myweb
        spec:
          containers:
          - name: myweb
            image: kubeguide/tomcat-app:v1
            ports:
            - containerPort: 8080

Create the Pods: kubectl create -f myweb-rc.yaml

Check the result: kubectl get po

NAME READY STATUS RESTARTS AGE

command-demo 0/1 CrashLoopBackOff 38 2h

myweb-46x8r 1/1 Running 0 7m

redis-php 2/2 Running 0 2h

Posted on 2019-04-14 21:54 by zhangmingda
