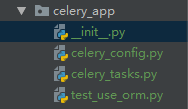

Celery, an asynchronous, parallel, distributed task framework: errors hit during a simple deployment

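```python
# -*- coding: utf-8 -*-
# celery_app/__init__.py (presumably; the other modules import it as `from celery_app import app`)
import os

from celery import Celery

# Needed for the prefork pool on Windows (see error 1 below)
os.environ.setdefault('FORKED_BY_MULTIPROCESSING', '1')

app = Celery('demo')
app.config_from_object('celery_app.celery_config')
```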

```python
# -*- coding:utf-8 -*-
from __future__ import absolute_import
# celery_config.py

CELERY_RESULT_BACKEND = 'redis://192.168.36.201/2'
BROKER_URL = 'redis://192.168.36.201/1'

# UTC
CELERY_ENABLE_UTC = True
CELERY_TIMEZONE = 'Asia/Shanghai'

# Import the specified task modules
CELERY_IMPORTS = (
    'celery_app.celery_tasks',
)

# Serializer for the arguments sent with a task. Fixes the error raised when passing
# class-instance data: kombu.exceptions.EncodeError: Object of type 'Session' is not JSON serializable
CELERY_TASK_SERIALIZER = 'pickle'
CELERY_ACCEPT_CONTENT = ['pickle', 'json']
```
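Why JSON fails while pickle works for these arguments can be seen with the standard library alone. A minimal sketch: `Cpu` below is a hypothetical plain class standing in for the ORM model from `app.models.models`, not the real model, and `try_json` is a made-up helper.

```python
import json
import pickle


class Cpu:
    """Hypothetical stand-in for the ORM model class Cpu (not the real model)."""

    def __init__(self, percent, create_dt):
        self.percent = percent
        self.create_dt = create_dt


def try_json(obj):
    """Return the JSON error message, or None if obj can be serialized."""
    try:
        json.dumps(obj)
    except TypeError as exc:
        return str(exc)
    return None


cpu = Cpu(percent=12.5, create_dt="2019-10-14 16:55:12")

# JSON only knows dicts, lists, strings, numbers, booleans and None,
# so an arbitrary class instance is rejected with a TypeError.
json_error = try_json(cpu)
print(json_error)  # exact wording depends on the Python version

# pickle can serialize instances of importable classes and rebuild them.
restored = pickle.loads(pickle.dumps(cpu))
print(restored.percent, restored.create_dt)
```

Unpickling data from an untrusted source can execute arbitrary code, which is why Celery 4 no longer accepts pickle unless it is explicitly allowed.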

```python
# celery_app/celery_tasks.py
import time

from celery_app import app
from app.tools.orm import ORM  # database connection helper: creates session objects


@app.task
def session_commit_data(cpu_data, mem_data, swap_data, _date_time):
    # Keep retrying until the three records are committed
    while True:
        session = ORM.create_session()
        try:
            session.add(cpu_data)
            session.add(mem_data)
            session.add(swap_data)
            session.commit()
        except Exception as e:
            session.rollback()
            print(e)
            print('\033[31;1m Failed to commit the data for {_date_time}, retrying in 1s\033[0m'.format(_date_time=_date_time))
            time.sleep(1)
        else:
            print('\033[32;1m Data for {_date_time} saved successfully\033[0m'.format(_date_time=_date_time))
            # break
            return '{_date_time} monitor_data save success'.format(_date_time=_date_time)
        finally:
            # Runs after every attempt, including the successful return
            session.close()


@app.task
def test_str_add(str1, str2, str3):
    strall = str1 + str2 + str3 + 'success'
    time.sleep(10)
    # print(strall)
    return strall
```
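`session_commit_data` retries forever, so a database that stays down keeps a worker process busy indefinitely. A minimal sketch of a bounded variant in plain Python; `commit_with_retry` and `FakeSession` are hypothetical names, and `FakeSession` only imitates the `add`/`commit`/`rollback`/`close` calls the task makes on `ORM.create_session()`. Inside a real task, Celery's own retry support (`@app.task(bind=True, max_retries=...)` with `raise self.retry(exc=exc, countdown=1)`) would be the more idiomatic choice; the sketch avoids depending on Celery.

```python
import time


def commit_with_retry(create_session, objects, max_attempts=3, delay=1.0):
    """Add and commit objects, trying at most max_attempts times.

    Returns the attempt number that succeeded; re-raises the last error otherwise.
    """
    for attempt in range(1, max_attempts + 1):
        session = create_session()  # fresh session per attempt, as in the task
        try:
            for obj in objects:
                session.add(obj)
            session.commit()
            return attempt
        except Exception:
            session.rollback()
            if attempt == max_attempts:
                raise  # give up instead of looping forever
            time.sleep(delay)
        finally:
            session.close()  # runs on success, retry and give-up alike


class FakeSession:
    """Hypothetical session: commit() fails while outage['remaining'] > 0."""

    def __init__(self, outage):
        self.outage = outage
        self.pending = []
        self.closed = False

    def add(self, obj):
        self.pending.append(obj)

    def commit(self):
        if self.outage["remaining"] > 0:
            self.outage["remaining"] -= 1
            raise RuntimeError("database unavailable")
        self.outage["saved"].extend(self.pending)

    def rollback(self):
        self.pending.clear()

    def close(self):
        self.closed = True


# Two failures, then success on the third attempt.
outage = {"remaining": 2, "saved": []}
sessions = []


def create_session():
    s = FakeSession(outage)
    sessions.append(s)
    return s


attempt = commit_with_retry(create_session, ["cpu", "mem", "swap"], max_attempts=3, delay=0)
print(attempt, outage["saved"])

# A longer outage: give up after two attempts.
outage2 = {"remaining": 5, "saved": []}
gave_up = None
try:
    commit_with_retry(lambda: FakeSession(outage2), ["cpu"], max_attempts=2, delay=0)
except RuntimeError as exc:
    gave_up = str(exc)
print(gave_up)
```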

```python
# -*- coding: utf-8 -*-
# test_use_orm.py
import datetime
import time

from app.tools.monitor import Monitor  # psutil wrapper that collects the current system's monitoring data
from app.models.models import Cpu, Mem, Swap  # model classes for the data to be stored
# Import the asynchronous data-commit task
from celery_app.celery_tasks import session_commit_data as async_commit_data


def dt():
    """Return the current date-time, date and time as strings."""
    now = datetime.datetime.now()
    _date_time = now.strftime("%Y-%m-%d %H:%M:%S")
    _date = now.strftime("%Y-%m-%d")
    _time = now.strftime("%H:%M:%S")
    return _date_time, _date, _time


m = Monitor()  # monitoring-data collector instance


def get_monitor_data():
    """Collect CPU, memory and swap data and wrap them in model instances."""
    cpu_info, mem_info, swap_info = m.cpu(), m.mem(), m.swap()
    # Get the timestamp
    _date_time, _date, _time = dt()
    cpu = Cpu(
        percent=cpu_info.get('percent_avg'),
        create_date=_date,
        create_time=_time,
        create_dt=_date_time,
    )
    mem = Mem(
        percent=mem_info.get('percent'),
        total=mem_info.get('total'),
        used=mem_info.get('used'),
        free=mem_info.get('free'),
        create_dt=_date_time,
        create_time=_time,
        create_date=_date,
    )
    swap = Swap(
        percent=swap_info.get('percent'),
        total=swap_info.get('total'),
        used=swap_info.get('used'),
        free=swap_info.get('free'),
        create_dt=_date_time,
        create_time=_time,
        create_date=_date,
    )
    return cpu, mem, swap, _date_time


while True:
    cpu_data, mem_data, swap_data, _date_time = get_monitor_data()
    print('\033[32;1mSubmitting data for {_date_time}\033[0m'.format(_date_time=_date_time))
    # .delay() sends the task to the broker and returns an AsyncResult immediately
    r = async_commit_data.delay(cpu_data, mem_data, swap_data, _date_time)
    time.sleep(0.3)
    print('r.ready()', r.ready())
    # print('r.result', r.result)
    # print('r.get()', r.get())
    time.sleep(5)

# (needs: from celery_app.celery_tasks import test_str_add)
# while True:
#     r = test_str_add.delay('a', 'b', 'c')
#     time.sleep(0.2)
#     print('r.ready()', r.ready())
#     print('r.result', r.result)
#     # print('r.get()', r.get())
#     time.sleep(1)
```

Starting the Celery app:

From the directory one level above celery_app, in the Windows 10 PyCharm Terminal (called the "celeryapp Terminal" below), run `celery -A celery_app worker -l info`; the worker starts successfully.

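Appendix:

Running the worker in the background on Linux. Start: `celery multi start worker1 -B -A celery_app -l info --logfile=celerylog.log --pidfile=celerypid.pid` (`-B` also runs the beat scheduler inside the worker). Stop: `celery multi stop worker1 --pidfile=celerypid.pid`.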

```
[root@vm192-168-3-2 monitor]# celery multi start worker1 -B -A celery_app -l info --logfile=celerylog.log --pidfile=celerypid.pid
celery multi v4.3.0 (rhubarb)
> Starting nodes...
    > worker1@vm192-168-3-2.ksc.com: OK
[root@vm192-168-3-2 monitor]# celery multi stop worker1 --pidfile=celerypid.pid
celery multi v4.3.0 (rhubarb)
> Stopping nodes...
    > worker1@vm192-168-3-2.ksc.com: TERM -> 15787
```

Worker startup output in the Windows 10 PyCharm Terminal:

```
D:\Python3_study\monitor\celery_app>celery -A celery_app worker -l info

 -------------- celery@BZD24963 v4.3.0 (rhubarb)
---- **** -----
--- * ***  * -- Windows-10-10.0.16299-SP0 2019-10-14 16:55:12
-- * - **** ---
- ** ---------- [config]
- ** ---------- .> app:         demo:0x1f61681bb38
- ** ---------- .> transport:   redis://192.168.36.201:6379/1
- ** ---------- .> results:     redis://192.168.36.201/2
- *** --- * --- .> concurrency: 8 (prefork)
-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)
--- ***** -----
 -------------- [queues]
                .> celery           exchange=celery(direct) key=celery


[tasks]
  . celery_app.celery_tasks.session_commit_data
  . celery_app.celery_tasks.test_str_add

[2019-10-14 16:55:12,426: INFO/MainProcess] Connected to redis://192.168.36.201:6379/1
[2019-10-14 16:55:12,441: INFO/MainProcess] mingle: searching for neighbors
[2019-10-14 16:55:13,298: INFO/SpawnPoolWorker-1] child process 32792 calling self.run()
[2019-10-14 16:55:13,308: INFO/SpawnPoolWorker-4] child process 18896 calling self.run()
[2019-10-14 16:55:13,344: INFO/SpawnPoolWorker-2] child process 22512 calling self.run()
[2019-10-14 16:55:13,381: INFO/SpawnPoolWorker-3] child process 40720 calling self.run()
[2019-10-14 16:55:13,389: INFO/SpawnPoolWorker-5] child process 38592 calling self.run()
[2019-10-14 16:55:13,474: INFO/SpawnPoolWorker-8] child process 20656 calling self.run()
[2019-10-14 16:55:13,499: INFO/SpawnPoolWorker-6] child process 26908 calling self.run()
[2019-10-14 16:55:13,516: INFO/MainProcess] mingle: all alone
[2019-10-14 16:55:13,620: INFO/MainProcess] celery@BZD24963 ready.
[2019-10-14 16:55:13,634: INFO/SpawnPoolWorker-7] child process 41408 calling self.run()
```

In another terminal (called the "task-submitting Terminal" below), run `python test_use_orm.py`.

Error 1: the celeryapp Terminal reports `ValueError: not enough values to unpack (expected 3, got 0)`.

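Fix: this error shows up when running the Celery 4 worker with its default prefork pool on Windows. Set an environment variable in the file that defines the app, as already done in the app file above:

`os.environ.setdefault('FORKED_BY_MULTIPROCESSING', '1')`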
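Two properties of that line matter: `setdefault` only writes the variable when it is not already set, and variables placed in `os.environ` are inherited by child processes, which is how the worker's pool processes get to see it. A minimal sketch of both, using only the standard library (the child here is a plain Python subprocess, not a Celery pool process):

```python
import os
import subprocess
import sys

VAR = 'FORKED_BY_MULTIPROCESSING'
os.environ.pop(VAR, None)          # start from a clean state for the demo

os.environ.setdefault(VAR, '1')    # sets it, because it was unset
first = os.environ[VAR]

# A child process started now inherits the variable.
child = subprocess.run(
    [sys.executable, '-c', "import os; print(os.environ.get('FORKED_BY_MULTIPROCESSING'))"],
    capture_output=True, text=True, check=True,
).stdout.strip()

os.environ[VAR] = '0'              # e.g. a value already exported in the shell
os.environ.setdefault(VAR, '1')    # does NOT overwrite an existing value
second = os.environ[VAR]

print(first, child, second)  # 1 1 0
```

So a value already exported in the shell wins over the one in the app file. Another workaround often used on Windows is to start the worker with the solo pool (`celery -A celery_app worker -l info -P solo`), at the cost of running tasks one at a time.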

Reference: https://www.jb51.net/article/151611.htm

Error 2:

The celeryapp Terminal reports: `kombu.exceptions.ContentDisallowed: Refusing to deserialize untrusted content of type pickle (application/x-python-serialize)`

Cause: by default, Celery 4 accepts only JSON-serialized messages, so the worker refuses the pickled task message. Fix:

Set `CELERY_ACCEPT_CONTENT = ['pickle']`.

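Error 3:

In test_use_orm.py, `print('r.ready()', r.ready())` raises: `kombu.exceptions.ContentDisallowed: Refusing to deserialize untrusted content of type json (application/json)`. This is most likely because task results are still stored as JSON (Celery 4's default result serializer), while the client now accepts only pickle.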

Adding `'json'` to the setting above fixes it: `CELERY_ACCEPT_CONTENT = ['pickle', 'json']`
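Errors 2 and 3 are the same whitelist check applied on two sides: the worker decodes the pickled task message, and the client decodes the result the worker stored in Redis. A simplified, hypothetical model of that check shows why each accept list fails and why `['pickle', 'json']` works. It is not kombu's actual implementation: `encode`, `decode`, `check` and the local `ContentDisallowed` class are made up for illustration, and results are assumed to be serialized as JSON (Celery 4's default).

```python
import json
import pickle

CONTENT_TYPES = {
    'json': 'application/json',
    'pickle': 'application/x-python-serialize',
}
ENCODERS = {'json': lambda obj: json.dumps(obj).encode('utf-8'), 'pickle': pickle.dumps}
DECODERS = {'json': lambda data: json.loads(data.decode('utf-8')), 'pickle': pickle.loads}


class ContentDisallowed(Exception):
    """Hypothetical stand-in for kombu.exceptions.ContentDisallowed."""


def encode(obj, serializer):
    """Return a (serializer name, payload) pair, like a message with a content type."""
    return serializer, ENCODERS[serializer](obj)


def decode(message, accept):
    """Refuse to decode payloads whose serializer is not in the accept list."""
    serializer, body = message
    if serializer not in accept:
        raise ContentDisallowed(
            'Refusing to deserialize untrusted content of type %s (%s)'
            % (serializer, CONTENT_TYPES[serializer]))
    return DECODERS[serializer](body)


def check(message, accept):
    try:
        decode(message, accept)
        return 'ok'
    except ContentDisallowed as exc:
        return str(exc)


# Task message: CELERY_TASK_SERIALIZER = 'pickle'
task_msg = encode({'args': [1, 2]}, 'pickle')
# Result stored by the worker: result serializer left at the JSON default
result_msg = encode({'status': 'SUCCESS', 'result': 'ok'}, 'json')

error2 = check(task_msg, accept=['json'])        # Celery 4 default accept list (worker side)
error3 = check(result_msg, accept=['pickle'])    # after the first fix (client side)
fixed = (check(task_msg, ['pickle', 'json']), check(result_msg, ['pickle', 'json']))

print(error2)
print(error3)
print(fixed)  # ('ok', 'ok')
```

Setting `CELERY_RESULT_SERIALIZER = 'pickle'` should be another way out, at the cost of pickling results as well; adding `'json'` to the accept list, as above, is the smaller change.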

Reference for errors 2 and 3: https://blog.csdn.net/libing_thinking/article/details/78622943

Posted on 2019-10-14 17:37 by zhangmingda