Collecting Tomcat logs and MySQL error logs with Logstash

```
input {
  file {
    path => "/opt/Tomcat7.0.28/logs/*.txt"
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}
filter {
  grok {
    match => ["message", "%{COMMONAPACHELOG}"]
  }
  date {
    match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"]
    target => "@timestamp"
  }
}
output {
  if "/BIZPortalServer" in [request] {
    stdout { codec => rubydebug }
    elasticsearch {
      hosts => ["10.75.8.167:9200"]
      index => "logstash-lcfwzx-%{+YYYY.MM.dd}"
    }
  }
}
```

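The above is a typical Logstash configuration for collecting Tomcat logs. However, the `@timestamp` field is in UTC, while the request times in the Tomcat log (e.g. `10.19.42.226 - - [18/Oct/2017:13:58:00 +0800] "GET /static/ace/js/grid.locale-en.js HTTP/1.1" 200 4033`) are in the server's local time, which we usually set to Shanghai time. Because the two time zones don't match, filtering data in Elasticsearch by time range returns incorrect results. The configuration below addresses the time-zone problem: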

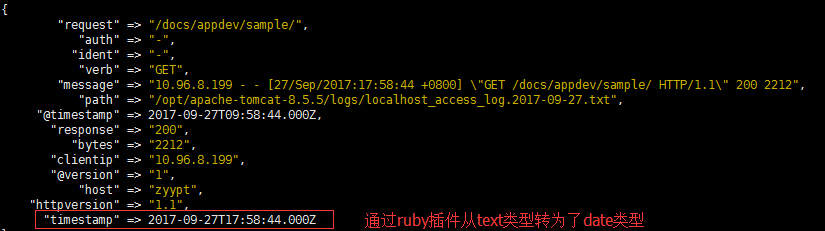

```
input {
  file {
    path => "/opt/apache-tomcat-8.5.5/logs/*.txt"
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}
filter {
  grok {
    match => ["message", "%{COMMONAPACHELOG}"]
  }
  date {
    match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"]
    target => "@timestamp"
  }
  ruby {
    code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*60*60)"
  }
}
output {
  stdout { codec => rubydebug }
  elasticsearch {
    hosts => ["10.75.8.167:9200"]
    index => "logstash-lcfwzx-%{+YYYY.MM}"
  }
}
```

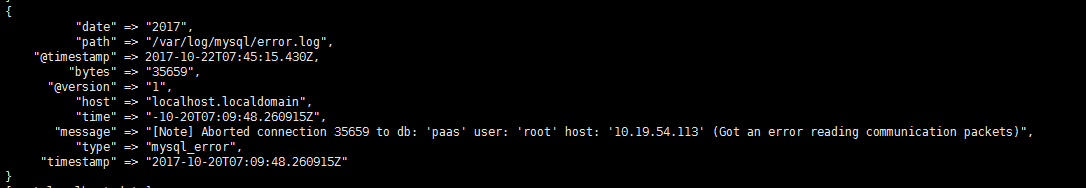
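To make the time arithmetic in the `date` and `ruby` filters of the configuration above concrete, here is a small Python sketch (it mimics the filters, it is not Logstash itself) applied to the sample Tomcat timestamp. The `date` filter parses `dd/MMM/yyyy:HH:mm:ss Z` and stores the instant in `@timestamp` as UTC. In the `ruby` filter, `.localtime` only changes how the Ruby `Time` is displayed, not the instant, so the net effect is the `@timestamp` instant plus eight hours. The function names `date_filter` and `ruby_shift` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Sample request time taken from the Tomcat access-log line quoted earlier.
RAW = "18/Oct/2017:13:58:00 +0800"


def date_filter(raw: str) -> datetime:
    """Mimic the date filter: parse dd/MMM/yyyy:HH:mm:ss Z and normalise to UTC."""
    # %b expects English month abbreviations ("Oct") under Python's default C locale.
    parsed = datetime.strptime(raw, "%d/%b/%Y:%H:%M:%S %z")
    return parsed.astimezone(timezone.utc)


def ruby_shift(ts: datetime) -> datetime:
    """Mimic the ruby filter: add 8*60*60 seconds to the @timestamp instant."""
    return ts + timedelta(seconds=8 * 60 * 60)


at_timestamp = date_filter(RAW)
shifted = ruby_shift(at_timestamp)

print(at_timestamp.isoformat())  # 2017-10-18T05:58:00+00:00
print(shifted.isoformat())       # 2017-10-18T13:58:00+00:00
```

Only the `timestamp` field receives the shifted value; `@timestamp` still holds the real instant in UTC, and the shifted `timestamp` reads as Shanghai wall-clock time labelled as UTC.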

Logstash configuration for collecting the MySQL error log (line format: `2017-10-20T02:08:34.642330Z 34586 [Note] Aborted connection 34586 to db: 'paas' user: 'root' host: '10.19.54.113'(Got an error reading communication packets)`):

```
input {
  file {
    path => "/var/log/mysql/error.log"
    type => mysql_error
    start_position => "beginning"
  }
}
filter {
  grok {
    match => [ 'message', "(?m)^%{NUMBER:date} *%{NOTSPACE:time} %{NUMBER:bytes} %{GREEDYDATA:message}" ]
    overwrite => [ 'message' ]
    add_field => { "timestamp" => "%{date}%{time}" }
  }
}
output {
  elasticsearch {
    hosts => ["10.75.8.167:9200"]
    index => "logstash-errorsql"
    document_type => "mysql_logs"
  }
  stdout { codec => rubydebug }
}
```
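The grok expression above is easier to follow once you see how it splits the sample line. The Python sketch below approximates it with the `re` module, assuming simplified stand-ins for grok's `NUMBER`, `NOTSPACE` and `GREEDYDATA` (the real grok definitions are more elaborate). In grok's Ruby-style regex engine, `(?m)` lets `.` match newlines, which corresponds to `re.DOTALL` in Python. Because `NUMBER` only consumes `2017`, the `time` field picks up the rest of the ISO timestamp, and `add_field` glues the two back together.

```python
import re

# Simplified stand-ins for grok patterns (assumption: close enough for this line).
NUMBER = r"[+-]?(?:\d+(?:\.\d+)?|\.\d+)"
NOTSPACE = r"\S+"
GREEDYDATA = r".*"

# Mirrors: (?m)^%{NUMBER:date} *%{NOTSPACE:time} %{NUMBER:bytes} %{GREEDYDATA:message}
MYSQL_ERROR = re.compile(
    rf"^(?P<date>{NUMBER}) *(?P<time>{NOTSPACE}) (?P<bytes>{NUMBER}) (?P<message>{GREEDYDATA})",
    re.DOTALL,  # grok's (?m) makes "." match newlines, like re.DOTALL
)

LINE = ("2017-10-20T02:08:34.642330Z 34586 [Note] Aborted connection 34586 to db: "
        "'paas' user: 'root' host: '10.19.54.113'(Got an error reading communication packets)")


def parse_mysql_error(line: str) -> dict:
    """Return the grok fields plus the add_field value "%{date}%{time}"."""
    match = MYSQL_ERROR.match(line)
    if match is None:
        # Logstash tags events that grok cannot parse with _grokparsefailure.
        return {"tags": ["_grokparsefailure"]}
    fields = match.groupdict()
    fields["timestamp"] = fields["date"] + fields["time"]
    return fields


event = parse_mysql_error(LINE)
print(event["date"], event["time"], event["bytes"])
print(event["timestamp"])  # 2017-10-20T02:08:34.642330Z
```

Despite its name, the `bytes` field here holds the thread id that MySQL writes after the timestamp (`34586`).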

Collecting log4j logs:

Log format: `[INFO ][2017/10/30 11:00:03473][com.imserver.apnsPushServer.service.ApnsPushService$1.run(ApnsPushService.java:171)] end feedback`

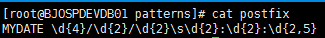

```
input {
  file {
    path => "/opt/tomcat/logs/log4j.log.20*"
    codec => multiline {
      pattern => "^\s\[INFO"
      negate => true
      what => "previous"
    }
    start_position => "beginning"
  }
}
filter {
  grok {
    patterns_dir => ["./patterns"]
    match => [ 'message', "%{MYDATE:timestamp}" ]
  }
}
output {
  stdout { codec => rubydebug }
  elasticsearch {
    hosts => ["10.75.8.167:9200"]
    index => "logstash-imserver-%{+YYYY.MM}"
  }
}
```
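The `multiline` codec with `negate => true` and `what => "previous"` appends every line that does *not* match `pattern` to the preceding event, which is how Java stack traces stay attached to their log entry. Below is a minimal Python sketch of that grouping rule (not the codec's implementation; `group_multiline` is a hypothetical name). As written, `^\s\[INFO` requires a whitespace character before `[INFO`; if entries start directly with `[INFO`, as in the sample format above, no line matches and each line is treated as a continuation of the previous event. The sketch therefore takes the pattern as a parameter and its example uses `^\[`, assuming every new entry starts with `[`.

```python
import re
from typing import Iterable, List


def group_multiline(lines: Iterable[str], pattern: str, negate: bool = True) -> List[str]:
    """Sketch of multiline { pattern => ..., negate => ..., what => "previous" }."""
    regex = re.compile(pattern)
    events: List[str] = []
    for line in lines:
        matched = regex.search(line) is not None
        # With negate => true, a line that does NOT match belongs to the previous event.
        continuation = (not matched) if negate else matched
        if continuation and events:
            events[-1] += "\n" + line
        else:
            events.append(line)
    return events


sample = [
    "[INFO ][2017/10/30 11:00:03473][com.imserver.apnsPushServer.service.ApnsPushService$1.run(ApnsPushService.java:171)] end feedback",
    "[ERROR][2017/10/30 11:00:04001][com.example.Foo.bar(Foo.java:10)] failed",  # hypothetical line
    "java.lang.IllegalStateException: boom",                                      # hypothetical line
    "\tat com.example.Foo.bar(Foo.java:10)",                                      # hypothetical line
]

for event in group_multiline(sample, r"^\["):
    print(repr(event))
```

With `^\[` the sample yields two events, the second carrying the exception and its stack frame.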

```
date {
  locale => "en_US"
  timezone => "Asia/Shanghai"
  match => [ "timestamp", "yyyy-MM-dd HH:mm:ss" ]
  target => "@timestamp"
}
```
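The `timezone` option tells the `date` filter which zone to assume when the timestamp string carries no offset of its own; the parsed instant then lands in `@timestamp` as UTC. The Python sketch below reproduces that for a `yyyy-MM-dd HH:mm:ss` string. It assumes Asia/Shanghai can be treated as a fixed UTC+8 offset (true since China dropped daylight saving time in 1991); `parse_local` is a hypothetical name and the sample value is made up.

```python
from datetime import datetime, timedelta, timezone

# Assumption: Asia/Shanghai treated as a fixed UTC+8 offset (no DST since 1991).
# zoneinfo.ZoneInfo("Asia/Shanghai") gives the full rules if tz data is installed.
SHANGHAI = timezone(timedelta(hours=8), "Asia/Shanghai")


def parse_local(raw: str, tz: timezone = SHANGHAI) -> datetime:
    """Read 'yyyy-MM-dd HH:mm:ss' as wall-clock time in tz and return the UTC instant."""
    naive = datetime.strptime(raw, "%Y-%m-%d %H:%M:%S")
    # Attach the assumed zone (the role of timezone =>), then normalise to UTC like @timestamp.
    return naive.replace(tzinfo=tz).astimezone(timezone.utc)


print(parse_local("2017-10-30 11:00:03").isoformat())  # 2017-10-30T03:00:03+00:00
```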

==============================

```
input {
  file {
    path => "/home/data/domains/domain1/logs/access/zmc"
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}
filter {
  grok {
    patterns_dir => ["./patterns"]
    match => ["message", "\"%{MYIP:clientip}\" \"%{MYUSER:myuser}\" \"%{HTTPDATE:timestamp}\" \"%{WORD:verb} %{NOTSPACE:request} %{HTTPV:http}\" %{NUMBER:response} %{NUMBER:res}"]
  }
}
output {
  stdout { codec => rubydebug }
  elasticsearch {
    hosts => ["10.75.8.167:9200"]
    index => "logstash-zmch52"
  }
}
```

===============

```
# custom grok patterns, in a file under the patterns_dir directory (./patterns)
MYUSER NULL-AUTH-USER
MYIP \d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}
HTTPV HTTP/\d{1}\.\d{1}
```
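The custom patterns can be checked outside Logstash by expanding them into a plain regular expression. The Python sketch below does a naive `%{NAME:field}` substitution (an assumption: it ignores grok features such as nested pattern references and type conversion) and uses simplified stand-ins for the built-in `HTTPDATE`, `WORD`, `NOTSPACE` and `NUMBER`. The access-log line is hypothetical, shaped to fit the quoted format in the grok expression. It also shows why the dots in `MYIP` are better escaped: an unescaped `.` matches any character.

```python
import re

# Custom patterns from ./patterns (dots escaped) plus simplified stand-ins for
# grok built-ins HTTPDATE, WORD, NOTSPACE and NUMBER (assumption, not grok's definitions).
PATTERNS = {
    "MYUSER": r"NULL-AUTH-USER",
    "MYIP": r"\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}",
    "HTTPV": r"HTTP/\d{1}\.\d{1}",
    "HTTPDATE": r"\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4}",
    "WORD": r"\b\w+\b",
    "NOTSPACE": r"\S+",
    "NUMBER": r"[+-]?(?:\d+(?:\.\d+)?|\.\d+)",
}

# The grok match expression, with the config-file \" escapes removed.
GROK = ('"%{MYIP:clientip}" "%{MYUSER:myuser}" "%{HTTPDATE:timestamp}" '
        '"%{WORD:verb} %{NOTSPACE:request} %{HTTPV:http}" %{NUMBER:response} %{NUMBER:res}')


def expand(grok: str) -> "re.Pattern[str]":
    """Naively replace each %{NAME:field} with a named group (no nesting, no type casts)."""
    def to_group(m: "re.Match[str]") -> str:
        return f"(?P<{m.group(2)}>{PATTERNS[m.group(1)]})"
    return re.compile(re.sub(r"%\{(\w+):(\w+)\}", to_group, grok))


ACCESS = expand(GROK)
# Hypothetical access-log line shaped to fit the quoted format.
LINE = '"10.19.42.226" "NULL-AUTH-USER" "18/Oct/2017:13:58:00 +0800" "GET /static/ace/js/grid.locale-en.js HTTP/1.1" 200 4033'

match = ACCESS.search(LINE)
print(match.groupdict() if match else "_grokparsefailure")

# An unescaped "." matches any character, so the loose MYIP accepts a non-IP string.
loose = re.compile(r"\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3}")
strict = re.compile(PATTERNS["MYIP"])
print(bool(loose.fullmatch("10a19b42c226")), bool(strict.fullmatch("10a19b42c226")))  # True False
```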

-----------------

```
input {
  file {
    path => "/usr/local/apache-tomcat-7.0.73/logs/localhost_access_log.*.txt"
    start_position => "beginning"
  }
}
filter {
  grok {
    patterns_dir => ["./patterns"]
    match => ["message", "\"%{MYIP:clientip}\" \"%{MYUSER:myuser}\" \"%{HTTPDATE:timestamp}\" \"%{WORD:verb} %{NOTSPACE:request} %{HTTPV:http}\" %{NUMBER:response} %{NUMBER:res}"]
  }
  date {
    match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"]
    target => "@timestamp"
  }
  ruby {
    code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*60*60)"
  }
}
output {
  stdout { codec => rubydebug }
  elasticsearch {
    hosts => ["10.75.8.167:9200"]
    index => "logstash-dbxt-%{+YYYY.MM}"
  }
}
```
