Resolving a kube-apiserver restart loop in a Kubernetes cluster after a data center power-up

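1. Background

Last year I helped a department deploy a Kubernetes cluster (1 master, 4 workers) in their test environment. At the time, the cluster was configured to use the company's internal image registry. In the first half of this year, a change to the company's data center network left the department's servers unable to reach that registry, so a Harbor registry was set up temporarily in their test environment to receive the container images built by their applications, and the images used to deploy the Kubernetes cluster were pushed to the new Harbor registry as well. Because several add-on components (logging, monitoring, microservice governance, and so on) had been deployed together with the cluster, the number of workloads was large, and I did not go through them one by one to update their image addresses. As a result, some workloads still referenced images in the company registry. The test environment saw little use, the add-ons were stable, and none of their Pods had been rescheduled in the past six months, so the cluster and its components kept running smoothly.

Last weekend the department's data center was powered down to reinforce its cabling. When power came back on Monday morning, the Kubernetes cluster did not recover on its own, and their operations staff asked me to help restore it.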

2. Troubleshooting and resolution

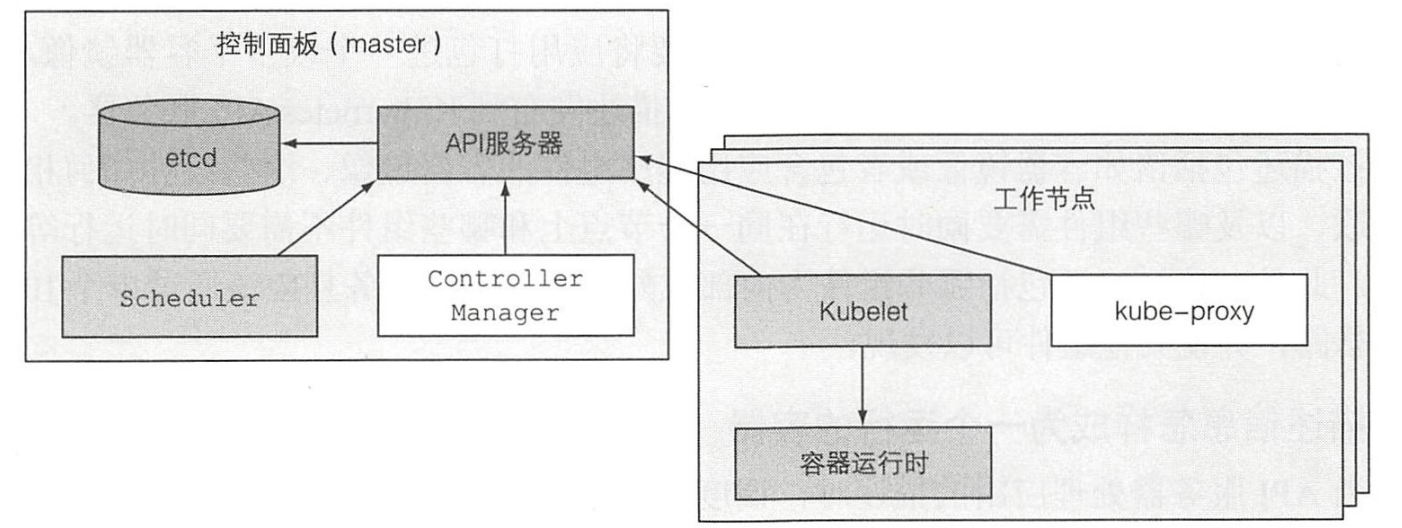

After logging in to the cluster, I found kube-apiserver restarting continuously, which in turn kept the other Kubernetes control plane components restarting. The kube-apiserver log showed repeated failed attempts to connect to etcd on port 2379:

    W0420 06:27:37.750969 1 clientconn.go:1208] grpc: addrConn.createTransport failed to connect to {https://192.111.1.134:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: Error while dialing dial tcp 192.111.1.134:2379: connect: connection refused". Reconnecting...

Next, I checked the etcd service log, which showed the following error:

    embed: rejected connection from (error "tls: oversized record received with length 64774", ServerName "")

Checking etcd's status showed that the etcd service itself was running normally:

    [root@master-sg-134 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/ssl/etcd/ssl/ca.pem --cert=/etc/ssl/etcd/ssl/member-master-sg-134.pem --key=/etc/ssl/etcd/ssl/member-master-sg-134-key.pem --endpoints="https://192.111.1.134:2379" endpoint status --write-out=table
    +----------------------------+------------------+---------+---------+-----------+-----------+------------+
    |          ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |
    +----------------------------+------------------+---------+---------+-----------+-----------+------------+
    | https://192.111.1.134:2379 | 98184cb9ad9cec26 |  3.3.12 |   41 MB |      true |        21 |  195592703 |
    +----------------------------+------------------+---------+---------+-----------+-----------+------------+
    [root@master-sg-134 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/ssl/etcd/ssl/ca.pem --cert=/etc/ssl/etcd/ssl/member-master-sg-134.pem --key=/etc/ssl/etcd/ssl/member-master-sg-134-key.pem --endpoints="https://192.111.1.134:2379" endpoint health --write-out=table
    https://192.111.1.134:2379 is healthy: successfully committed proposal: took = 844.331µs
    [root@master-sg-134 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/ssl/etcd/ssl/ca.pem --cert=/etc/ssl/etcd/ssl/member-master-sg-134.pem --key=/etc/ssl/etcd/ssl/member-master-sg-134-key.pem --endpoints="https://192.111.1.134:2379" member list --write-out=table
    +------------------+---------+-------+----------------------------+----------------------------+
    |        ID        | STATUS  | NAME  |         PEER ADDRS         |        CLIENT ADDRS        |
    +------------------+---------+-------+----------------------------+----------------------------+
    | 98184cb9ad9cec26 | started | etcd1 | https://192.111.1.134:2380 | https://192.111.1.134:2379 |
    +------------------+---------+-------+----------------------------+----------------------------+
    [root@master-sg-134 ~]#
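The three checks above repeat the same TLS flags each time. As a minimal sketch (not part of the original procedure), they could be wrapped in a small shell function. The function name `etcd` and the `ETCD_*` variables are hypothetical names chosen for illustration; the paths and endpoint are the ones shown in the transcript. The `ETCDCTL` override is also an assumption, included only so the assembled command line can be previewed with `echo`.

```shell
#!/bin/sh
# Hypothetical helper: wraps the TLS flags used in the etcdctl calls above.
# Paths and endpoint are copied from the transcript; the names are illustrative.
ETCD_SSL_DIR=/etc/ssl/etcd/ssl
ETCD_MEMBER=member-master-sg-134
ETCD_ENDPOINT=https://192.111.1.134:2379

etcd() {
    # ETCDCTL may be overridden (for example with "echo") to preview the
    # command line instead of running it.
    ETCDCTL_API=3 "${ETCDCTL:-etcdctl}" \
        --cacert="${ETCD_SSL_DIR}/ca.pem" \
        --cert="${ETCD_SSL_DIR}/${ETCD_MEMBER}.pem" \
        --key="${ETCD_SSL_DIR}/${ETCD_MEMBER}-key.pem" \
        --endpoints="${ETCD_ENDPOINT}" \
        "$@"
}

# Usage on the master node:
#   etcd endpoint status --write-out=table
#   etcd endpoint health
#   etcd member list --write-out=table
```

Keeping the certificate paths in one place makes it harder to mistype a flag while switching between subcommands during an outage.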

I exported the etcd data to the file etcd_data.json; inspecting the exported file confirmed that the Kubernetes cluster data was intact.

    ETCDCTL_API=3 etcdctl --cacert=/etc/ssl/etcd/ssl/ca.pem --cert=/etc/ssl/etcd/ssl/member-master-sg-134.pem --key=/etc/ssl/etcd/ssl/member-master-sg-134-key.pem --endpoints="https://192.111.1.134:2379" get / --prefix > etcd_data.json

Back up the Kubernetes cluster data directory (the etcd data directory):

    tar -zcvf etcd-202201205.tar.gz /var/lib/etcd
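An archive of /var/lib/etcd taken while etcd is running may not be fully consistent. As a complementary sketch, and not something the original procedure did, etcdctl's `snapshot save` subcommand can write a point-in-time snapshot through the API. The function name `etcd_snapshot` and the default output file name are hypothetical; the certificate paths and endpoint are the ones used earlier in this post.

```shell
#!/bin/sh
# Hypothetical helper: takes an etcd snapshot through the v3 API.
# The default file name "etcd-snapshot.db" is an assumption for illustration.
etcd_snapshot() {
    out=${1:-etcd-snapshot.db}
    # ETCDCTL may be overridden (for example with "echo") for a dry run.
    ETCDCTL_API=3 "${ETCDCTL:-etcdctl}" \
        --cacert=/etc/ssl/etcd/ssl/ca.pem \
        --cert=/etc/ssl/etcd/ssl/member-master-sg-134.pem \
        --key=/etc/ssl/etcd/ssl/member-master-sg-134-key.pem \
        --endpoints=https://192.111.1.134:2379 \
        snapshot save "$out"
}

# Usage on the master node:
#   etcd_snapshot /root/etcd-backup.db
```

Having both the tar archive and a snapshot costs little and gives two independent ways back if a later repair step goes wrong.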

The checks above show that etcd was running normally and that the etcdctl client could connect to it, so etcd itself was not the problem. Yet the kube-apiserver Pod kept restarting with errors about failing to connect to etcd, even though the etcd-related settings in /etc/kubernetes/manifests/kube-apiserver.yaml were all correct. I therefore decided to restart the kube-apiserver static Pod.

    - --etcd-cafile=/etc/ssl/etcd/ssl/ca.pem
    - --etcd-certfile=/etc/ssl/etcd/ssl/node-master-sg-134.pem
    - --etcd-keyfile=/etc/ssl/etcd/ssl/node-master-sg-134-key.pem
    - --etcd-servers=https://192.111.1.134:2379

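Because kube-apiserver runs as a static Pod, stopping and removing its containers directly with docker (the pause container and the container running the kube-apiserver process) should cause kubelet to recreate the Pod automatically. After removing them, however, kubelet did not recreate the kube-apiserver containers.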
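The post does not show the exact docker commands used for this step. One way it could be done is sketched below, assuming the Docker runtime and kubelet's usual container naming, in which both the pause container and the main container of this Pod carry `kube-apiserver` in their names. The function names and the `DOCKER` override are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers; assumes Docker is the container runtime and that the
# containers of the static Pod have "kube-apiserver" in their names.

# Print the IDs of all containers (running or exited) that belong to the Pod.
apiserver_containers() {
    "${DOCKER:-docker}" ps -a -q --filter "name=kube-apiserver"
}

# Stop and remove each of those containers so kubelet has to recreate them.
remove_apiserver_containers() {
    for id in $(apiserver_containers); do
        "${DOCKER:-docker}" stop "$id" && "${DOCKER:-docker}" rm "$id"
    done
}

# Usage on the master node:
#   apiserver_containers          # review the list first
#   remove_apiserver_containers
```

Listing the containers before removing them is a cheap safeguard, since the name filter matches by substring.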

I then checked the kubelet and Docker engine logs. The Docker engine log showed that the image registry had no library/pause image:

    Dec 05 16:58:20 master-sg-134 dockerd[1403]: time="2022-12-05T16:58:20.610370733+08:00" level=warning msg="Error getting v2 registry: Get https://192.111.1.137:80/v2/: http: server gave HTTP response to HTTPS client"
    Dec 05 16:58:20 master-sg-134 dockerd[1403]: time="2022-12-05T16:58:20.650481522+08:00" level=error msg="Not continuing with pull after error: unknown: repository library/pause not found"

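On a healthy node this image is already present locally and does not need to be pulled from a registry; possibly the operations staff cleaned up the server's disk after power was restored. Further investigation showed that kubelet was configured to use the company registry, so I changed the kubelet configuration to point to the Harbor registry deployed in their test environment and restarted the kubelet service.

    [root@master-sg-134 ~]# cat /var/lib/kubelet/kubeadm-flags.env
    KUBELET_KUBEADM_ARGS="--cgroup-driver=cgroupfs --network-plugin=cni --pod-infra-container-image=<new-test-registry-address>/xxxxx/pause:3.2"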
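To check this condition before restarting anything, the configured pause image can be read from the flags file and compared with the local image cache. This is a rough sketch only: the function name `pause_image` is hypothetical, and it assumes the `--pod-infra-container-image` flag is set in the file shown above.

```shell
#!/bin/sh
# Hypothetical helper: prints the value of --pod-infra-container-image from a
# kubelet flags file (defaults to the path shown above).
pause_image() {
    sed -n 's/.*--pod-infra-container-image=\([^" ]*\).*/\1/p' \
        "${1:-/var/lib/kubelet/kubeadm-flags.env}"
}

# Usage on the node:
#   img=$(pause_image)
#   docker image inspect "$img" >/dev/null 2>&1 \
#       || echo "pause image $img is not cached locally"
#   systemctl restart kubelet    # after editing the flags file
```

If the image is neither cached locally nor pullable from the configured registry, kubelet cannot create the Pod sandbox, and so no Pod on that node can start.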

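After kubelet restarted, the kube-apiserver static Pod started successfully, and the remaining Kubernetes control plane components and the installed add-ons came up after it. I then went through every component still reporting errors and changed its image address to the Harbor registry in their test environment, after which the whole Kubernetes cluster was back in service.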
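To find workloads that still reference the old registry instead of waiting for them to fail, the Pod images can be listed with kubectl and filtered. The following is a sketch under assumptions: the function name `pods_using_registry` and the `KUBECTL` override are hypothetical, and the registry host in the usage line is a placeholder, since the post does not give the old registry's address. Only regular containers are listed, not init containers.

```shell
#!/bin/sh
# Hypothetical helper: prints "namespace<TAB>pod<TAB>images" for every Pod
# whose output line contains the given registry string.
pods_using_registry() {
    registry=$1
    "${KUBECTL:-kubectl}" get pods --all-namespaces \
        -o jsonpath='{range .items[*]}{.metadata.namespace}{"\t"}{.metadata.name}{"\t"}{range .spec.containers[*]}{.image}{" "}{end}{"\n"}{end}' |
        grep -F -- "$registry"
}

# Usage (the registry host is a placeholder, not taken from the post):
#   pods_using_registry old-registry.example.com
```

The filter is a plain substring match on the whole line, so a registry string that also appears in a namespace or Pod name would produce false positives.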

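3. Summary

When a component fails to start, troubleshoot along the dependencies between components, focus on each component's logs, and analyze and resolve the problem from what the logs show.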
