Deploying Hyperledger Fabric 1.4 in a Production Environment on CentOS 7

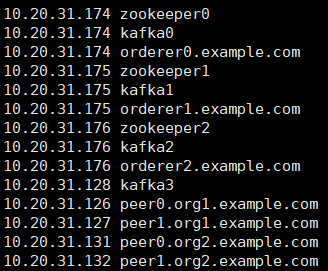

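The Kafka-based production deployment in this example consists of three ordering (orderer) services, four Kafka brokers, three ZooKeeper nodes, and four peers. Eight servers are used in total; the services hosted on each server are shown below: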

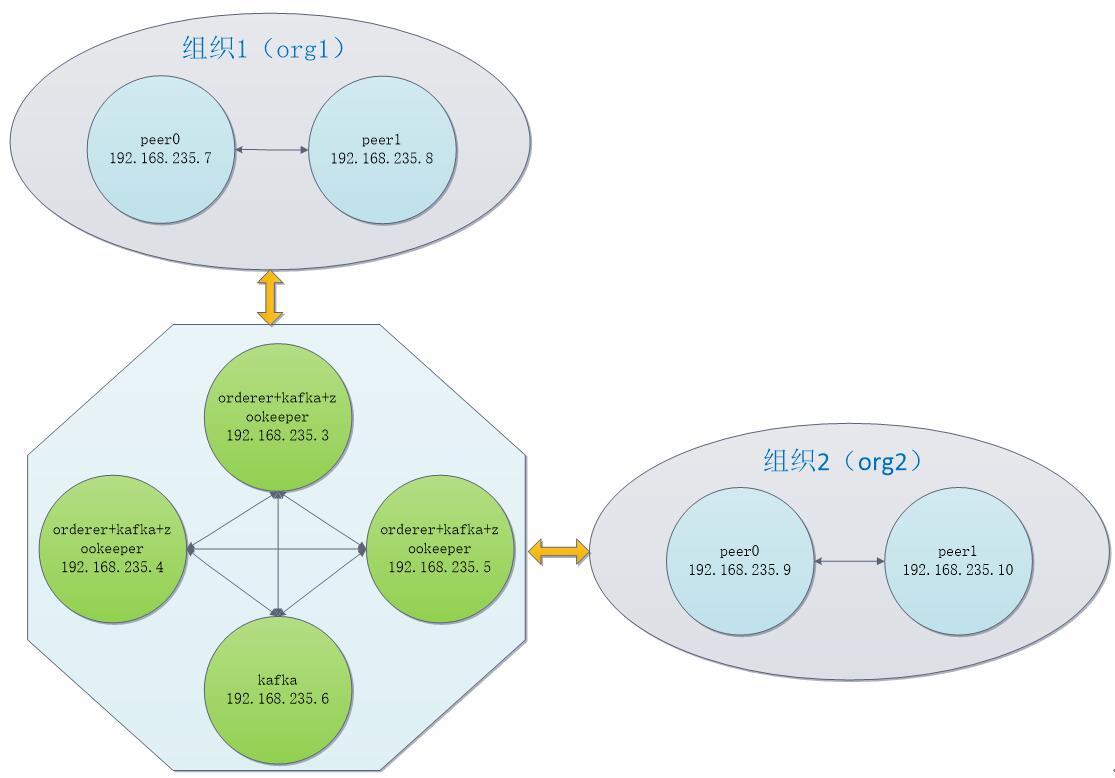

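The network topology of the Kafka example is as follows:

I. Basic environment setup: disable the firewall (or open the required ports), disable SELinux, install and configure Docker (17.06.2-ce or later), docker-compose (1.14.0 or later), git, and Go (version 1.11.x), and map hostnames to IP addresses in /etc/hosts.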
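Before building anything, it can help to confirm that the required tools are actually on the PATH. The following is a minimal sketch, not part of the original procedure: the helper name `check_tools` is hypothetical, and the script only checks that each command exists. It does not verify the minimum versions listed above.

```shell
#!/usr/bin/env bash
# Report which of the given command-line tools are on PATH.
# Prints one line per tool and returns non-zero if any tool is missing.
# (check_tools is a hypothetical helper name used for illustration.)
check_tools() {
    local missing=0 tool
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# The tools named in the prerequisites list.
check_tools docker docker-compose git go || echo "install the missing tools before continuing"
```

Versions can then be inspected by hand with `docker --version`, `docker-compose --version`, and `go version`.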

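II. Building and installing Fabric

1. Create the directory (the GOPATH variable was already configured when Go was installed)

    mkdir -p $GOPATH/src/github.com/hyperledger

2. Download the Fabric source code

Change into the directory created above, then clone the source:

    git clone https://github.com/hyperledger/fabric.git

3. Install the required dependencies

    go get github.com/golang/protobuf/protoc-gen-go

Note: `go get` places the binaries it builds in the directory named by $GOBIN; if GOBIN is not set, they are placed in $GOPATH/bin.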
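The lookup rule in the note above can be sketched as a small shell function. This is an illustration only: `go_bin_dir` is a hypothetical helper name, and it assumes GOPATH holds a single directory. The fallback to `$HOME/go` reflects Go's default GOPATH when the variable is unset.

```shell
# Print the directory where `go get` installs binaries:
# $GOBIN when it is set and non-empty, otherwise $GOPATH/bin
# (falling back to Go's default GOPATH, $HOME/go, when GOPATH is unset).
go_bin_dir() {
    echo "${GOBIN:-${GOPATH:-$HOME/go}/bin}"
}

echo "protoc-gen-go is expected at: $(go_bin_dir)/protoc-gen-go"
```

If GOBIN is set on your machine, use this location instead of `$GOPATH/bin` when copying protoc-gen-go in the next step.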

Create the directory:

    mkdir -p $GOPATH/src/github.com/hyperledger/fabric/.build/docker/gotools/bin

Note: the directory name `.build` starts with a dot ("."). If the dot is left out, `make docker` fails because it cannot find protoc-gen-go.

Copy the downloaded binary into the directory created in the previous step:

    cp $GOPATH/bin/protoc-gen-go $GOPATH/src/github.com/hyperledger/fabric/.build/docker/gotools/bin

4. Build the Fabric modules

First change into the Fabric source directory ($GOPATH/src/github.com/hyperledger/fabric).

Then run `make release`. If the following error appears, gcc is not installed and must be installed first with `yum install gcc`:

    [root@master1 fabric]# make release
    Building release/linux-amd64/bin/configtxgen for linux-amd64
    mkdir -p release/linux-amd64/bin
    CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/configtxgen -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/configtxgen/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/configtxgen
    # runtime/cgo
    exec: "gcc": executable file not found in $PATH
    make: *** [release/linux-amd64/bin/configtxgen] Error 2

A successful `make release` run looks like this:

    [root@master1 fabric]# make release
    Building release/linux-amd64/bin/configtxgen for linux-amd64
    mkdir -p release/linux-amd64/bin
    CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/configtxgen -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/configtxgen/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/configtxgen
    Building release/linux-amd64/bin/cryptogen for linux-amd64
    mkdir -p release/linux-amd64/bin
    CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/cryptogen -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/cryptogen/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/cryptogen
    Building release/linux-amd64/bin/idemixgen for linux-amd64
    mkdir -p release/linux-amd64/bin
    CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/idemixgen -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/idemixgen/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/idemixgen
    Building release/linux-amd64/bin/discover for linux-amd64
    mkdir -p release/linux-amd64/bin
    CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/discover -tags "" -ldflags "-X github.com/hyperledger/fabric/cmd/discover/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/cmd/discover
    Building release/linux-amd64/bin/configtxlator for linux-amd64
    mkdir -p release/linux-amd64/bin
    CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/configtxlator -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/configtxlator/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/configtxlator
    Building release/linux-amd64/bin/peer for linux-amd64
    mkdir -p release/linux-amd64/bin
    CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/peer -tags "" -ldflags "-X github.com/hyperledger/fabric/common/metadata.Version=1.4.1 -X github.com/hyperledger/fabric/common/metadata.CommitSHA=e91c57c -X github.com/hyperledger/fabric/common/metadata.BaseVersion=0.4.14 -X github.com/hyperledger/fabric/common/metadata.BaseDockerLabel=org.hyperledger.fabric -X github.com/hyperledger/fabric/common/metadata.DockerNamespace=hyperledger -X github.com/hyperledger/fabric/common/metadata.BaseDockerNamespace=hyperledger" github.com/hyperledger/fabric/peer
    Building release/linux-amd64/bin/orderer for linux-amd64
    mkdir -p release/linux-amd64/bin
    CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/orderer -tags "" -ldflags "-X github.com/hyperledger/fabric/common/metadata.Version=1.4.1 -X github.com/hyperledger/fabric/common/metadata.CommitSHA=e91c57c -X github.com/hyperledger/fabric/common/metadata.BaseVersion=0.4.14 -X github.com/hyperledger/fabric/common/metadata.BaseDockerLabel=org.hyperledger.fabric -X github.com/hyperledger/fabric/common/metadata.DockerNamespace=hyperledger -X github.com/hyperledger/fabric/common/metadata.BaseDockerNamespace=hyperledger" github.com/hyperledger/fabric/orderer
    mkdir -p release/linux-amd64/bin

After `make release`, run `make docker`. Output like the following:

    Successfully built f45ddffeb1be
    Successfully tagged hyperledger/fabric-tools:latest
    docker tag hyperledger/fabric-tools hyperledger/fabric-tools:amd64-1.4.0-snapshot-
    docker tag hyperledger/fabric-tools hyperledger/fabric-tools:amd64-latest

means the build succeeded. `make docker` has to download the parent images and build new images, so this step takes some time. It may also fail repeatedly along the way; each time it does, simply rerun `make docker` until the success messages above appear.
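The "rerun until it succeeds" advice can be automated with a small retry wrapper. This is a sketch under stated assumptions rather than part of the original procedure: the function name `retry` and the `RETRY_DELAY` variable are hypothetical, and the sketch assumes the failures are transient (for example, interrupted image downloads). A real build error will simply fail every attempt.

```shell
# Run a command up to MAX times, stopping at the first success.
# Usage: retry MAX command [args...]
# RETRY_DELAY (seconds between attempts, default 1) is a hypothetical knob.
retry() {
    local max=$1 attempt=1
    shift
    until "$@"; do
        if [ "$attempt" -ge "$max" ]; then
            echo "giving up after $attempt attempts: $*" >&2
            return 1
        fi
        attempt=$((attempt + 1))
        echo "retrying (attempt $attempt of $max): $*" >&2
        sleep "${RETRY_DELAY:-1}"
    done
}

# Example, run from the Fabric source directory:
#   retry 5 make docker
```

Capping the number of attempts keeps a genuine build failure from looping forever.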

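Once `make release` and `make docker` have finished, the compiled binaries produced by `make release` are located in the following path (`make docker` produces the Docker images):

    $GOPATH/src/github.com/hyperledger/fabric/release/linux-amd64/bin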

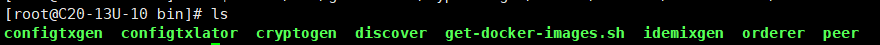

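The directory contains the following:

5. Install the Fabric modules

After the build, the modules can be run, but only from the directory that holds the compiled files, which is inconvenient. To make them runnable from any path on the system, copy the executables to a system directory with the following command:

    cp $GOPATH/src/github.com/hyperledger/fabric/release/linux-amd64/bin/* /usr/local/bin/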

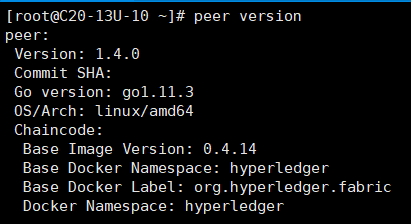

6. Verify the Fabric module installation

peer module

orderer module

cryptogen module

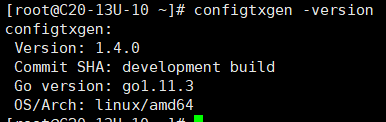

configtxgen module

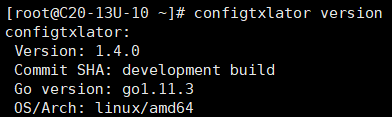

configtxlator module

If every module reports correctly, Fabric has been installed successfully.
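One way to run all five checks in one pass is to ask each binary for its version. The sketch below is an illustration, not the author's exact commands (the original shows the results only as screenshots). The function name `check_fabric_binaries` is hypothetical. It relies on configtxgen taking a `-version` flag while the other tools take a `version` subcommand, as in Fabric 1.4.

```shell
# Print the version of each Fabric binary, or note that it is not on PATH.
# (check_fabric_binaries is a hypothetical helper name.)
check_fabric_binaries() {
    local spec tool
    for spec in "peer version" "orderer version" "cryptogen version" \
                "configtxgen -version" "configtxlator version"; do
        tool=${spec%% *}          # first word: the binary name
        if command -v "$tool" >/dev/null 2>&1; then
            echo "== $spec =="
            $spec                 # intentionally unquoted: splits into command + argument
        else
            echo "== $tool: not found on PATH =="
        fi
    done
}

check_fabric_binaries
```

A "not found on PATH" line usually means the copy to /usr/local/bin was skipped or that directory is not on the PATH.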

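III. Hyperledger Fabric 1.4 production environment configuration (TLS not enabled)

1. Deployment configuration for server 10.20.31.174

1) Create the kafkapeer directory

Run the following to create it under the Fabric source directory:

    cd $GOPATH/src/github.com/hyperledger/fabric
    mkdir kafkapeer
    cd kafkapeer

2) Prepare the configuration files used to generate the certificates and blocks

Configure the crypto-config.yaml and configtx.yaml files.

crypto-config.yaml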

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    # ---------------------------------------------------------------------------
    # "OrdererOrgs" - Definition of organizations managing orderer nodes
    # ---------------------------------------------------------------------------
    OrdererOrgs:
      # ---------------------------------------------------------------------------
      # Orderer
      # ---------------------------------------------------------------------------
      - Name: Orderer
        Domain: example.com
        CA:
            Country: US
            Province: California
            Locality: San Francisco
        # ---------------------------------------------------------------------------
        # "Specs" - See PeerOrgs below for complete description
        # ---------------------------------------------------------------------------
        Specs:
          - Hostname: orderer0
          - Hostname: orderer1
          - Hostname: orderer2

    # ---------------------------------------------------------------------------
    # "PeerOrgs" - Definition of organizations managing peer nodes
    # ---------------------------------------------------------------------------
    PeerOrgs:
      # ---------------------------------------------------------------------------
      # Org1
      # ---------------------------------------------------------------------------
      - Name: Org1
        Domain: org1.example.com
        EnableNodeOUs: true
        CA:
            Country: US
            Province: California
            Locality: San Francisco
        # ---------------------------------------------------------------------------
        # "Specs"
        # ---------------------------------------------------------------------------
        # Uncomment this section to enable the explicit definition of hosts in your
        # configuration.  Most users will want to use Template, below
        #
        # Specs is an array of Spec entries.  Each Spec entry consists of two fields:
        #   - Hostname:   (Required) The desired hostname, sans the domain.
        #   - CommonName: (Optional) Specifies the template or explicit override for
        #                 the CN.  By default, this is the template:
        #
        #                              "{{.Hostname}}.{{.Domain}}"
        #
        #                 which obtains its values from the Spec.Hostname and
        #                 Org.Domain, respectively.
        # ---------------------------------------------------------------------------
        # Specs:
        #   - Hostname: foo # implicitly "foo.org1.example.com"
        #     CommonName: foo27.org5.example.com # overrides Hostname-based FQDN set above
        #   - Hostname: bar
        #   - Hostname: baz
        # ---------------------------------------------------------------------------
        # "Template"
        # ---------------------------------------------------------------------------
        # Allows for the definition of 1 or more hosts that are created sequentially
        # from a template. By default, this looks like "peer%d" from 0 to Count-1.
        # You may override the number of nodes (Count), the starting index (Start)
        # or the template used to construct the name (Hostname).
        #
        # Note: Template and Specs are not mutually exclusive.  You may define both
        # sections and the aggregate nodes will be created for you.  Take care with
        # name collisions
        # ---------------------------------------------------------------------------
        Template:
          Count: 2
          # Start: 5
          # Hostname: {{.Prefix}}{{.Index}} # default
        # ---------------------------------------------------------------------------
        # "Users"
        # ---------------------------------------------------------------------------
        # Count: The number of user accounts _in addition_ to Admin
        # ---------------------------------------------------------------------------
        Users:
          Count: 1
      # ---------------------------------------------------------------------------
      # Org2: See "Org1" for full specification
      # ---------------------------------------------------------------------------
      - Name: Org2
        Domain: org2.example.com
        EnableNodeOUs: true
        CA:
            Country: US
            Province: California
            Locality: San Francisco
        Template:
          Count: 2
        Users:
          Count: 1

configtx.yaml

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    ---
    ################################################################################
    #
    #   Section: Organizations
    #
    #   - This section defines the different organizational identities which will
    #   be referenced later in the configuration.
    #
    ################################################################################
    Organizations:

        # SampleOrg defines an MSP using the sampleconfig.  It should never be used
        # in production but may be used as a template for other definitions
        - &OrdererOrg
            # DefaultOrg defines the organization which is used in the sampleconfig
            # of the fabric.git development environment
            Name: OrdererOrg

            # ID to load the MSP definition as
            ID: OrdererMSP

            # MSPDir is the filesystem path which contains the MSP configuration
            MSPDir: crypto-config/ordererOrganizations/example.com/msp

            # Policies defines the set of policies at this level of the config tree
            # For organization policies, their canonical path is usually
            #   /Channel/<Application|Orderer>/<OrgName>/<PolicyName>
            Policies:
                Readers:
                    Type: Signature
                    Rule: "OR('OrdererMSP.member')"
                Writers:
                    Type: Signature
                    Rule: "OR('OrdererMSP.member')"
                Admins:
                    Type: Signature
                    Rule: "OR('OrdererMSP.admin')"

        - &Org1
            # DefaultOrg defines the organization which is used in the sampleconfig
            # of the fabric.git development environment
            Name: Org1MSP

            # ID to load the MSP definition as
            ID: Org1MSP

            MSPDir: crypto-config/peerOrganizations/org1.example.com/msp

            # Policies defines the set of policies at this level of the config tree
            # For organization policies, their canonical path is usually
            #   /Channel/<Application|Orderer>/<OrgName>/<PolicyName>
            Policies:
                Readers:
                    Type: Signature
                    Rule: "OR('Org1MSP.admin', 'Org1MSP.peer', 'Org1MSP.client')"
                Writers:
                    Type: Signature
                    Rule: "OR('Org1MSP.admin', 'Org1MSP.client')"
                Admins:
                    Type: Signature
                    Rule: "OR('Org1MSP.admin')"

            AnchorPeers:
                # AnchorPeers defines the location of peers which can be used
                # for cross org gossip communication.  Note, this value is only
                # encoded in the genesis block in the Application section context
                - Host: peer0.org1.example.com
                  Port: 7051

        - &Org2
            # DefaultOrg defines the organization which is used in the sampleconfig
            # of the fabric.git development environment
            Name: Org2MSP

            # ID to load the MSP definition as
            ID: Org2MSP

            MSPDir: crypto-config/peerOrganizations/org2.example.com/msp

            # Policies defines the set of policies at this level of the config tree
            # For organization policies, their canonical path is usually
            #   /Channel/<Application|Orderer>/<OrgName>/<PolicyName>
            Policies:
                Readers:
                    Type: Signature
                    Rule: "OR('Org2MSP.admin', 'Org2MSP.peer', 'Org2MSP.client')"
                Writers:
                    Type: Signature
                    Rule: "OR('Org2MSP.admin', 'Org2MSP.client')"
                Admins:
                    Type: Signature
                    Rule: "OR('Org2MSP.admin')"

            AnchorPeers:
                # AnchorPeers defines the location of peers which can be used
                # for cross org gossip communication.  Note, this value is only
                # encoded in the genesis block in the Application section context
                - Host: peer0.org2.example.com
                  Port: 7051

    ################################################################################
    #
    #   SECTION: Capabilities
    #
    #   - This section defines the capabilities of fabric network. This is a new
    #   concept as of v1.1.0 and should not be utilized in mixed networks with
    #   v1.0.x peers and orderers.  Capabilities define features which must be
    #   present in a fabric binary for that binary to safely participate in the
    #   fabric network.  For instance, if a new MSP type is added, newer binaries
    #   might recognize and validate the signatures from this type, while older
    #   binaries without this support would be unable to validate those
    #   transactions.  This could lead to different versions of the fabric binaries
    #   having different world states.  Instead, defining a capability for a channel
    #   informs those binaries without this capability that they must cease
    #   processing transactions until they have been upgraded.  For v1.0.x if any
    #   capabilities are defined (including a map with all capabilities turned off)
    #   then the v1.0.x peer will deliberately crash.
    #
    ################################################################################
    Capabilities:
        # Channel capabilities apply to both the orderers and the peers and must be
        # supported by both.  Set the value of the capability to true to require it.
        Global: &ChannelCapabilities
            # V1.1 for Global is a catchall flag for behavior which has been
            # determined to be desired for all orderers and peers running v1.0.x,
            # but the modification of which would cause incompatibilities.  Users
            # should leave this flag set to true.
            V1_1: true

        # Orderer capabilities apply only to the orderers, and may be safely
        # manipulated without concern for upgrading peers.  Set the value of the
        # capability to true to require it.
        Orderer: &OrdererCapabilities
            # V1.1 for Order is a catchall flag for behavior which has been
            # determined to be desired for all orderers running v1.0.x, but the
            # modification of which would cause incompatibilities.  Users should
            # leave this flag set to true.
            V1_1: true

        # Application capabilities apply only to the peer network, and may be safely
        # manipulated without concern for upgrading orderers.  Set the value of the
        # capability to true to require it.
        Application: &ApplicationCapabilities
            # V1.1 for Application is a catchall flag for behavior which has been
            # determined to be desired for all peers running v1.0.x, but the
            # modification of which would cause incompatibilities.  Users should
            # leave this flag set to true.
            V1_2: true

    ################################################################################
    #
    #   SECTION: Application
    #
    #   - This section defines the values to encode into a config transaction or
    #   genesis block for application related parameters
    #
    ################################################################################
    Application: &ApplicationDefaults

        # Organizations is the list of orgs which are defined as participants on
        # the application side of the network
        Organizations:

        # Policies defines the set of policies at this level of the config tree
        # For Application policies, their canonical path is
        #   /Channel/Application/<PolicyName>
        Policies:
            Readers:
                Type: ImplicitMeta
                Rule: "ANY Readers"
            Writers:
                Type: ImplicitMeta
                Rule: "ANY Writers"
            Admins:
                Type: ImplicitMeta
                Rule: "MAJORITY Admins"

        # Capabilities describes the application level capabilities, see the
        # dedicated Capabilities section elsewhere in this file for a full
        # description
        Capabilities:
            <<: *ApplicationCapabilities

    ################################################################################
    #
    #   SECTION: Orderer
    #
    #   - This section defines the values to encode into a config transaction or
    #   genesis block for orderer related parameters
    #
    ################################################################################
    Orderer: &OrdererDefaults

        # Orderer Type: The orderer implementation to start
        # Available types are "solo" and "kafka"
        OrdererType: kafka

        Addresses:
            - orderer0.example.com:7050
            - orderer1.example.com:7050
            - orderer2.example.com:7050

        # Batch Timeout: The amount of time to wait before creating a batch
        BatchTimeout: 2s

        # Batch Size: Controls the number of messages batched into a block
        BatchSize:

            # Max Message Count: The maximum number of messages to permit in a batch
            MaxMessageCount: 10

            # Absolute Max Bytes: The absolute maximum number of bytes allowed for
            # the serialized messages in a batch.
            AbsoluteMaxBytes: 98 MB

            # Preferred Max Bytes: The preferred maximum number of bytes allowed for
            # the serialized messages in a batch. A message larger than the preferred
            # max bytes will result in a batch larger than preferred max bytes.
            PreferredMaxBytes: 512 KB

        Kafka:
            # Brokers: A list of Kafka brokers to which the orderer connects. Edit
            # this list to identify the brokers of the ordering service.
            # NOTE: Use IP:port notation.
            Brokers:
                - kafka0:9092
                - kafka1:9092
                - kafka2:9092
                - kafka3:9092

        # Organizations is the list of orgs which are defined as participants on
        # the orderer side of the network
        Organizations:

        # Policies defines the set of policies at this level of the config tree
        # For Orderer policies, their canonical path is
        #   /Channel/Orderer/<PolicyName>
        Policies:
            Readers:
                Type: ImplicitMeta
                Rule: "ANY Readers"
            Writers:
                Type: ImplicitMeta
                Rule: "ANY Writers"
            Admins:
                Type: ImplicitMeta
                Rule: "MAJORITY Admins"
            # BlockValidation specifies what signatures must be included in the block
            # from the orderer for the peer to validate it.
            BlockValidation:
                Type: ImplicitMeta
                Rule: "ANY Writers"

        # Capabilities describes the orderer level capabilities, see the
        # dedicated Capabilities section elsewhere in this file for a full
        # description
        Capabilities:
            <<: *OrdererCapabilities

    ################################################################################
    #
    #   CHANNEL
    #
    #   This section defines the values to encode into a config transaction or
    #   genesis block for channel related parameters.
    #
    ################################################################################
    Channel: &ChannelDefaults
        # Policies defines the set of policies at this level of the config tree
        # For Channel policies, their canonical path is
        #   /Channel/<PolicyName>
        Policies:
            # Who may invoke the 'Deliver' API
            Readers:
                Type: ImplicitMeta
                Rule: "ANY Readers"
            # Who may invoke the 'Broadcast' API
            Writers:
                Type: ImplicitMeta
                Rule: "ANY Writers"
            # By default, who may modify elements at this config level
            Admins:
                Type: ImplicitMeta
                Rule: "MAJORITY Admins"

        # Capabilities describes the channel level capabilities, see the
        # dedicated Capabilities section elsewhere in this file for a full
        # description
        Capabilities:
            <<: *ChannelCapabilities

    ################################################################################
    #
    #   Profile
    #
    #   - Different configuration profiles may be encoded here to be specified
    #   as parameters to the configtxgen tool
    #
    ################################################################################
    Profiles:

        TwoOrgsOrdererGenesis:
            <<: *ChannelDefaults
            Orderer:
                <<: *OrdererDefaults
                Organizations:
                    - *OrdererOrg
            Consortiums:
                SampleConsortium:
                    Organizations:
                        - *Org1
                        - *Org2

        TwoOrgsChannel:
            Consortium: SampleConsortium
            Application:
                <<: *ApplicationDefaults
                Organizations:
                    - *Org1
                    - *Org2

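3) Generate the key pairs and certificates

    cryptogen generate --config=./crypto-config.yaml

All generated files are saved in the crypto-config folder. To see which files were generated, inspect that folder with `tree crypto-config`.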
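As a quick sanity check on the generated tree, the sketch below lists the MSP directories that the configuration files in this walkthrough reference (three orderers, two peers in each of two organizations) and reports any that are absent. The function names `expected_msp_dirs` and `check_crypto_config` are hypothetical. The paths are derived from crypto-config.yaml and configtx.yaml above, so they need adjusting if those files change.

```shell
# List the MSP directories this walkthrough relies on, relative to a base dir.
# (Both function names are hypothetical helpers for illustration.)
expected_msp_dirs() {
    local base=${1:-crypto-config} i org
    echo "$base/ordererOrganizations/example.com/msp"
    for i in 0 1 2; do
        echo "$base/ordererOrganizations/example.com/orderers/orderer$i.example.com/msp"
    done
    for org in org1 org2; do
        echo "$base/peerOrganizations/$org.example.com/msp"
        for i in 0 1; do
            echo "$base/peerOrganizations/$org.example.com/peers/peer$i.$org.example.com/msp"
        done
    done
}

# Report every expected directory that is absent; non-zero status if any is missing.
check_crypto_config() {
    local dir rc=0
    while IFS= read -r dir; do
        [ -d "$dir" ] || { echo "missing: $dir"; rc=1; }
    done <<EOF
$(expected_msp_dirs "${1:-}")
EOF
    return "$rc"
}

# Example, run from the kafkapeer directory:
#   check_crypto_config crypto-config && echo "crypto material looks complete"
```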

4) Generate the genesis block

Create the output directory first, then generate the block:

    mkdir channel-artifacts
    configtxgen -profile TwoOrgsOrdererGenesis -outputBlock ./channel-artifacts/genesis.block

5) Generate the channel creation transaction

    configtxgen -profile TwoOrgsChannel -outputCreateChannelTx ./channel-artifacts/mychannel.tx -channelID mychannel

The same tool is also used to generate the files for the anchor peer updates:

    configtxgen -profile TwoOrgsChannel -outputAnchorPeersUpdate ./channel-artifacts/Org1MSPanchors.tx -channelID mychannel -asOrg Org1MSP
    configtxgen -profile TwoOrgsChannel -outputAnchorPeersUpdate ./channel-artifacts/Org2MSPanchors.tx -channelID mychannel -asOrg Org2MSP

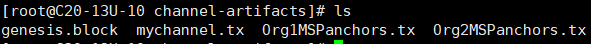

When this is done, the channel-artifacts folder should contain four files.
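The four files are the outputs named in the configtxgen commands above: genesis.block, mychannel.tx, Org1MSPanchors.tx, and Org2MSPanchors.tx. A minimal sketch for checking that each one exists and is non-empty (the function name `check_channel_artifacts` is a hypothetical helper):

```shell
# Verify that the four files produced by configtxgen exist and are non-empty.
# (check_channel_artifacts is a hypothetical helper name.)
check_channel_artifacts() {
    local dir=${1:-channel-artifacts} f rc=0
    for f in genesis.block mychannel.tx Org1MSPanchors.tx Org2MSPanchors.tx; do
        if [ -s "$dir/$f" ]; then
            echo "ok:      $dir/$f"
        else
            echo "missing: $dir/$f"
            rc=1
        fi
    done
    return "$rc"
}

# Example, run from the kafkapeer directory:
#   check_channel_artifacts channel-artifacts
```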

6) Copy the generated files to the other seven servers

Run the following from the parent directory of kafkapeer:

    # cd ..
    # scp -r kafkapeer root@10.20.31.175:/opt/gopath/src/github.com/hyperledger/fabric
    # scp -r kafkapeer root@10.20.31.176:/opt/gopath/src/github.com/hyperledger/fabric
    # scp -r kafkapeer root@10.20.31.128:/opt/gopath/src/github.com/hyperledger/fabric
    # scp -r kafkapeer root@10.20.31.126:/opt/gopath/src/github.com/hyperledger/fabric
    # scp -r kafkapeer root@10.20.31.127:/opt/gopath/src/github.com/hyperledger/fabric
    # scp -r kafkapeer root@10.20.31.131:/opt/gopath/src/github.com/hyperledger/fabric
    # scp -r kafkapeer root@10.20.31.132:/opt/gopath/src/github.com/hyperledger/fabric
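The seven scp commands differ only in the target address, so they can be expressed as a loop. The sketch below is one possible form. The host list and destination path are taken from the commands above, while the function name `copy_kafkapeer` and the `DRY_RUN` switch are assumptions introduced for illustration.

```shell
# The seven other servers and the destination path, as used in the scp commands.
HOSTS="10.20.31.175 10.20.31.176 10.20.31.128 10.20.31.126 10.20.31.127 10.20.31.131 10.20.31.132"
DEST=/opt/gopath/src/github.com/hyperledger/fabric

# Copy kafkapeer to every host. With DRY_RUN=1 the commands are only printed.
# (copy_kafkapeer and DRY_RUN are hypothetical names.)
copy_kafkapeer() {
    local host
    for host in $HOSTS; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp -r kafkapeer root@$host:$DEST"
        else
            scp -r kafkapeer "root@$host:$DEST" || return 1
        fi
    done
}

# Preview the commands; drop DRY_RUN=1 to perform the copy.
DRY_RUN=1 copy_kafkapeer
```

Stopping at the first failed copy makes it obvious which server still lacks the files.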

7) Prepare the ZooKeeper configuration file

Configure the docker-compose-zookeeper.yaml file and copy it into the kafkapeer directory.

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    version: '2'

    services:

      zookeeper0:
        container_name: zookeeper0
        hostname: zookeeper0
        image: hyperledger/fabric-zookeeper
        restart: always
        environment:
          - ZOO_MY_ID=1
          - ZOO_SERVERS=server.1=zookeeper0:2888:3888 server.2=zookeeper1:2888:3888 server.3=zookeeper2:2888:3888
        ports:
          - 2181:2181
          - 2888:2888
          - 3888:3888
        extra_hosts:
          - "zookeeper0:10.20.31.174"
          - "zookeeper1:10.20.31.175"
          - "zookeeper2:10.20.31.176"
          - "kafka0:10.20.31.174"
          - "kafka1:10.20.31.175"
          - "kafka2:10.20.31.176"
          - "kafka3:10.20.31.128"
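Each ZooKeeper node's ZOO_MY_ID has to match the number in its own `server.N` entry of ZOO_SERVERS, which is why the value changes from server to server while ZOO_SERVERS stays identical. The sketch below derives the ID for a given hostname from that string; the function name `zoo_id_for` is a hypothetical helper.

```shell
# The ensemble definition shared by all three ZooKeeper compose files.
ZOO_SERVERS="server.1=zookeeper0:2888:3888 server.2=zookeeper1:2888:3888 server.3=zookeeper2:2888:3888"

# Print the ZOO_MY_ID that belongs to a hostname, based on its server.N entry.
# Returns non-zero when the hostname is not part of the ensemble.
# (zoo_id_for is a hypothetical helper name.)
zoo_id_for() {
    local entry id host
    for entry in $ZOO_SERVERS; do
        id=${entry#server.}      # "1=zookeeper0:2888:3888"
        id=${id%%=*}             # "1"
        host=${entry#*=}         # "zookeeper0:2888:3888"
        host=${host%%:*}         # "zookeeper0"
        if [ "$host" = "$1" ]; then
            echo "$id"
            return 0
        fi
    done
    return 1
}

echo "zookeeper0 -> ZOO_MY_ID=$(zoo_id_for zookeeper0)"
```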

8) Prepare the Kafka configuration file

Configure the docker-compose-kafka.yaml file and copy it into the kafkapeer directory.

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    version: '2'

    services:

      kafka0:
        container_name: kafka0
        hostname: kafka0
        image: hyperledger/fabric-kafka
        restart: always
        # All broker settings live in a single list: a YAML mapping cannot
        # repeat the "environment" key.
        environment:
          - KAFKA_MESSAGE_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          - KAFKA_REPLICA_FETCH_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          - KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false
          - KAFKA_BROKER_ID=1
          - KAFKA_MIN_INSYNC_REPLICAS=2
          - KAFKA_DEFAULT_REPLICATION_FACTOR=3
          - KAFKA_ZOOKEEPER_CONNECT=zookeeper0:2181,zookeeper1:2181,zookeeper2:2181
        volumes:
          - /var/hyperledger/kafka/kafka-logs:/tmp/kafka-logs
        ports:
          - 9092:9092
        extra_hosts:
          - "zookeeper0:10.20.31.174"
          - "zookeeper1:10.20.31.175"
          - "zookeeper2:10.20.31.176"
          - "kafka0:10.20.31.174"
          - "kafka1:10.20.31.175"
          - "kafka2:10.20.31.176"
          - "kafka3:10.20.31.128"
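The numeric settings in this file are tied to configtx.yaml and to the number of brokers. The sketch below recomputes them as a sanity check. It assumes that `AbsoluteMaxBytes: 98 MB` is interpreted as 98 * 1024 * 1024 bytes, and it follows the commonly cited guidance that the minimum in-sync replicas (M), the replication factor (N), and the broker count (K) should satisfy 1 < M < N < K. The variable names are illustrative only.

```shell
K=4   # Kafka brokers in this deployment (kafka0..kafka3)
N=3   # KAFKA_DEFAULT_REPLICATION_FACTOR
M=2   # KAFKA_MIN_INSYNC_REPLICAS

# AbsoluteMaxBytes from configtx.yaml, assuming "98 MB" means 98 MiB.
ABSOLUTE_MAX_BYTES=$((98 * 1024 * 1024))
# KAFKA_MESSAGE_MAX_BYTES / KAFKA_REPLICA_FETCH_MAX_BYTES from the compose file.
MESSAGE_MAX_BYTES=$((99 * 1024 * 1024))

echo "message.max.bytes = $MESSAGE_MAX_BYTES"

# With 1 < M < N < K, one broker can be down while channels stay writable.
if [ 1 -lt "$M" ] && [ "$M" -lt "$N" ] && [ "$N" -lt "$K" ]; then
    echo "replication settings are consistent"
fi

# The broker limit must exceed the largest batch the orderer may produce.
if [ "$ABSOLUTE_MAX_BYTES" -lt "$MESSAGE_MAX_BYTES" ]; then
    echo "headroom: $((MESSAGE_MAX_BYTES - ABSOLUTE_MAX_BYTES)) bytes"
fi
```

The 1 MiB of headroom leaves room for message headers on top of the largest allowed batch.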

9) Prepare the orderer configuration file

Configure the docker-compose-orderer.yaml file and copy it into the kafkapeer directory.

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    version: '2'

    services:

      orderer0.example.com:
        container_name: orderer0.example.com
        image: hyperledger/fabric-orderer
        environment:
          - ORDERER_GENERAL_LOGLEVEL=debug
          - ORDERER_GENERAL_LISTENADDRESS=0.0.0.0
          - ORDERER_GENERAL_GENESISMETHOD=file
          - ORDERER_GENERAL_GENESISFILE=/var/hyperledger/orderer/orderer.genesis.block
          - ORDERER_GENERAL_LOCALMSPID=OrdererMSP
          - ORDERER_GENERAL_LOCALMSPDIR=/var/hyperledger/orderer/msp
          # TLS settings (TLS is disabled in this deployment)
          - ORDERER_GENERAL_TLS_ENABLED=false
          - ORDERER_GENERAL_TLS_PRIVATEKEY=/var/hyperledger/orderer/tls/server.key
          - ORDERER_GENERAL_TLS_CERTIFICATE=/var/hyperledger/orderer/tls/server.crt
          - ORDERER_GENERAL_TLS_ROOTCAS=[/var/hyperledger/orderer/tls/ca.crt]
          - ORDERER_KAFKA_RETRY_LONGINTERVAL=10s
          - ORDERER_KAFKA_RETRY_LONGTOTAL=100s
          - ORDERER_KAFKA_RETRY_SHORTINTERVAL=1s
          - ORDERER_KAFKA_RETRY_SHORTTOTAL=30s
          - ORDERER_KAFKA_VERBOSE=true
        working_dir: /opt/gopath/src/github.com/hyperledger/fabric
        command: orderer
        volumes:
          - /var/hyperledger/order_data/:/var/hyperledger/production/
          - ./channel-artifacts/genesis.block:/var/hyperledger/orderer/orderer.genesis.block
          - ./crypto-config/ordererOrganizations/example.com/orderers/orderer0.example.com/msp:/var/hyperledger/orderer/msp
          - ./crypto-config/ordererOrganizations/example.com/orderers/orderer0.example.com/tls/:/var/hyperledger/orderer/tls
        ports:
          - 7050:7050
        extra_hosts:
          - "kafka0:10.20.31.174"
          - "kafka1:10.20.31.175"
          - "kafka2:10.20.31.176"
          - "kafka3:10.20.31.128"

2. Deployment configuration for server 10.20.31.175

1) Prepare the ZooKeeper configuration file

Configure the docker-compose-zookeeper.yaml file and copy it into the kafkapeer directory.

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    version: '2'

    services:

      zookeeper1:
        container_name: zookeeper1
        hostname: zookeeper1
        image: hyperledger/fabric-zookeeper
        restart: always
        environment:
          - ZOO_MY_ID=2
          - ZOO_SERVERS=server.1=zookeeper0:2888:3888 server.2=zookeeper1:2888:3888 server.3=zookeeper2:2888:3888
        ports:
          - 2181:2181
          - 2888:2888
          - 3888:3888
        extra_hosts:
          - "zookeeper0:10.20.31.174"
          - "zookeeper1:10.20.31.175"
          - "zookeeper2:10.20.31.176"
          - "kafka0:10.20.31.174"
          - "kafka1:10.20.31.175"
          - "kafka2:10.20.31.176"
          - "kafka3:10.20.31.128"

2) Prepare the Kafka configuration file

Configure the docker-compose-kafka.yaml file and copy it into the kafkapeer directory.

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    version: '2'

    services:

      kafka1:
        container_name: kafka1
        hostname: kafka1
        image: hyperledger/fabric-kafka
        restart: always
        # All broker settings live in a single list: a YAML mapping cannot
        # repeat the "environment" key.
        environment:
          - KAFKA_MESSAGE_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          - KAFKA_REPLICA_FETCH_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          - KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false
          - KAFKA_BROKER_ID=2
          - KAFKA_MIN_INSYNC_REPLICAS=2
          - KAFKA_DEFAULT_REPLICATION_FACTOR=3
          - KAFKA_ZOOKEEPER_CONNECT=zookeeper0:2181,zookeeper1:2181,zookeeper2:2181
        volumes:
          - /var/hyperledger/kafka/kafka-logs:/tmp/kafka-logs
        ports:
          - 9092:9092
        extra_hosts:
          - "zookeeper0:10.20.31.174"
          - "zookeeper1:10.20.31.175"
          - "zookeeper2:10.20.31.176"
          - "kafka0:10.20.31.174"
          - "kafka1:10.20.31.175"
          - "kafka2:10.20.31.176"
          - "kafka3:10.20.31.128"

3) Prepare the orderer configuration file

Configure the docker-compose-orderer.yaml file and copy it into the kafkapeer directory.

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    version: '2'

    services:

      orderer1.example.com:
        container_name: orderer1.example.com
        image: hyperledger/fabric-orderer
        environment:
          - ORDERER_GENERAL_LOGLEVEL=debug
          - ORDERER_GENERAL_LISTENADDRESS=0.0.0.0
          - ORDERER_GENERAL_GENESISMETHOD=file
          - ORDERER_GENERAL_GENESISFILE=/var/hyperledger/orderer/orderer.genesis.block
          - ORDERER_GENERAL_LOCALMSPID=OrdererMSP
          - ORDERER_GENERAL_LOCALMSPDIR=/var/hyperledger/orderer/msp
          # TLS settings (TLS is disabled in this deployment)
          - ORDERER_GENERAL_TLS_ENABLED=false
          - ORDERER_GENERAL_TLS_PRIVATEKEY=/var/hyperledger/orderer/tls/server.key
          - ORDERER_GENERAL_TLS_CERTIFICATE=/var/hyperledger/orderer/tls/server.crt
          - ORDERER_GENERAL_TLS_ROOTCAS=[/var/hyperledger/orderer/tls/ca.crt]
          - ORDERER_KAFKA_RETRY_LONGINTERVAL=10s
          - ORDERER_KAFKA_RETRY_LONGTOTAL=100s
          - ORDERER_KAFKA_RETRY_SHORTINTERVAL=1s
          - ORDERER_KAFKA_RETRY_SHORTTOTAL=30s
          - ORDERER_KAFKA_VERBOSE=true
        working_dir: /opt/gopath/src/github.com/hyperledger/fabric
        command: orderer
        volumes:
          - /var/hyperledger/order_data/:/var/hyperledger/production/
          - ./channel-artifacts/genesis.block:/var/hyperledger/orderer/orderer.genesis.block
          - ./crypto-config/ordererOrganizations/example.com/orderers/orderer1.example.com/msp:/var/hyperledger/orderer/msp
          - ./crypto-config/ordererOrganizations/example.com/orderers/orderer1.example.com/tls/:/var/hyperledger/orderer/tls
        ports:
          - 7050:7050
        extra_hosts:
          - "kafka0:10.20.31.174"
          - "kafka1:10.20.31.175"
          - "kafka2:10.20.31.176"
          - "kafka3:10.20.31.128"

3. Deployment configuration for server 10.20.31.176

1) Prepare the ZooKeeper configuration file

Configure the docker-compose-zookeeper.yaml file and copy it into the kafkapeer directory.

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    version: '2'

    services:

      zookeeper2:
        container_name: zookeeper2
        hostname: zookeeper2
        image: hyperledger/fabric-zookeeper
        restart: always
        environment:
          - ZOO_MY_ID=3
          - ZOO_SERVERS=server.1=zookeeper0:2888:3888 server.2=zookeeper1:2888:3888 server.3=zookeeper2:2888:3888
        ports:
          - 2181:2181
          - 2888:2888
          - 3888:3888
        extra_hosts:
          - "zookeeper0:10.20.31.174"
          - "zookeeper1:10.20.31.175"
          - "zookeeper2:10.20.31.176"
          - "kafka0:10.20.31.174"
          - "kafka1:10.20.31.175"
          - "kafka2:10.20.31.176"
          - "kafka3:10.20.31.128"

2) Prepare the Kafka configuration file

Configure the docker-compose-kafka.yaml file and copy it into the kafkapeer directory.

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    version: '2'

    services:

      kafka2:
        container_name: kafka2
        hostname: kafka2
        image: hyperledger/fabric-kafka
        restart: always
        # All broker settings live in a single list: a YAML mapping cannot
        # repeat the "environment" key.
        environment:
          - KAFKA_MESSAGE_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          - KAFKA_REPLICA_FETCH_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          - KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false
          - KAFKA_BROKER_ID=3
          - KAFKA_MIN_INSYNC_REPLICAS=2
          - KAFKA_DEFAULT_REPLICATION_FACTOR=3
          - KAFKA_ZOOKEEPER_CONNECT=zookeeper0:2181,zookeeper1:2181,zookeeper2:2181
        volumes:
          - /var/hyperledger/kafka/kafka-logs:/tmp/kafka-logs
        ports:
          - 9092:9092
        extra_hosts:
          - "zookeeper0:10.20.31.174"
          - "zookeeper1:10.20.31.175"
          - "zookeeper2:10.20.31.176"
          - "kafka0:10.20.31.174"
          - "kafka1:10.20.31.175"
          - "kafka2:10.20.31.176"
          - "kafka3:10.20.31.128"

3) Prepare the orderer configuration file

Configure the docker-compose-orderer.yaml file and copy it into the kafkapeer directory.

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #

    version: '2'

    services:

      orderer2.example.com:
        container_name: orderer2.example.com
        image: hyperledger/fabric-orderer
        environment:
          - ORDERER_GENERAL_LOGLEVEL=debug
          - ORDERER_GENERAL_LISTENADDRESS=0.0.0.0
          - ORDERER_GENERAL_GENESISMETHOD=file
          - ORDERER_GENERAL_GENESISFILE=/var/hyperledger/orderer/orderer.genesis.block
          - ORDERER_GENERAL_LOCALMSPID=OrdererMSP
          - ORDERER_GENERAL_LOCALMSPDIR=/var/hyperledger/orderer/msp
          # TLS settings (TLS is disabled in this deployment)
          - ORDERER_GENERAL_TLS_ENABLED=false
          - ORDERER_GENERAL_TLS_PRIVATEKEY=/var/hyperledger/orderer/tls/server.key
          - ORDERER_GENERAL_TLS_CERTIFICATE=/var/hyperledger/orderer/tls/server.crt
          - ORDERER_GENERAL_TLS_ROOTCAS=[/var/hyperledger/orderer/tls/ca.crt]
          - ORDERER_KAFKA_RETRY_LONGINTERVAL=10s
          - ORDERER_KAFKA_RETRY_LONGTOTAL=100s
          - ORDERER_KAFKA_RETRY_SHORTINTERVAL=1s
          - ORDERER_KAFKA_RETRY_SHORTTOTAL=30s
          - ORDERER_KAFKA_VERBOSE=true
        working_dir: /opt/gopath/src/github.com/hyperledger/fabric
        command: orderer
        volumes:
          - /var/hyperledger/order_data/:/var/hyperledger/production/
          - ./channel-artifacts/genesis.block:/var/hyperledger/orderer/orderer.genesis.block
          - ./crypto-config/ordererOrganizations/example.com/orderers/orderer2.example.com/msp:/var/hyperledger/orderer/msp
          - ./crypto-config/ordererOrganizations/example.com/orderers/orderer2.example.com/tls/:/var/hyperledger/orderer/tls
        ports:
          - 7050:7050
        extra_hosts:
          - "kafka0:10.20.31.174"
          - "kafka1:10.20.31.175"
          - "kafka2:10.20.31.176"
          - "kafka3:10.20.31.128"

4. Deployment configuration for server 10.20.31.128

1) Prepare the Kafka configuration file

Configure the docker-compose-kafka.yaml file and copy it to the kafkapeer directory.

```yaml
# Copyright IBM Corp. All Rights Reserved.
#
# SPDX-License-Identifier: Apache-2.0
#

version: '2'

services:

  kafka3:
    container_name: kafka3
    hostname: kafka3
    image: hyperledger/fabric-kafka
    restart: always
    environment:
      - KAFKA_MESSAGE_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
      - KAFKA_REPLICA_FETCH_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
      - KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false
      - KAFKA_BROKER_ID=4
      - KAFKA_MIN_INSYNC_REPLICAS=2
      - KAFKA_DEFAULT_REPLICATION_FACTOR=3
      - KAFKA_ZOOKEEPER_CONNECT=zookeeper0:2181,zookeeper1:2181,zookeeper2:2181
    volumes:
      - /var/hyperledger/kafka/kafka-logs:/tmp/kafka-logs
    ports:
      - 9092:9092
    extra_hosts:
      - "zookeeper0:10.20.31.174"
      - "zookeeper1:10.20.31.175"
      - "zookeeper2:10.20.31.176"
      - "kafka0:10.20.31.174"
      - "kafka1:10.20.31.175"
      - "kafka2:10.20.31.176"
      - "kafka3:10.20.31.128"
```

5. Deployment configuration for server 10.20.31.126

1) Prepare the peer configuration file

Configure the docker-compose-peer.yaml file and copy it to the kafkapeer directory.

```yaml
# All elements in this file should depend on the docker-compose-base.yaml
# Provided fabric peer node

version: '2'

services:

  peer0.org1.example.com:
    container_name: peer0.org1.example.com
    hostname: peer0.org1.example.com
    image: hyperledger/fabric-peer
    environment:
      - CORE_PEER_ID=peer0.org1.example.com
      - CORE_PEER_ADDRESS=peer0.org1.example.com:7051
      #- CORE_PEER_CHAINCODELISTENADDRESS=peer0.org1.example.com:7052
      - CORE_PEER_GOSSIP_EXTERNALENDPOINT=peer0.org1.example.com:7051
      - CORE_PEER_LOCALMSPID=Org1MSP
      - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
      # the following setting starts chaincode containers on the same
      # bridge network as the peers
      # https://docs.docker.com/compose/networking/
      #- CORE_LOGGING_LEVEL=ERROR
      - CORE_LOGGING_LEVEL=DEBUG
      - CORE_PEER_GOSSIP_USELEADERELECTION=true
      - CORE_PEER_GOSSIP_ORGLEADER=false
      - CORE_PEER_PROFILE_ENABLED=true
      - CORE_CHAINCODE_EXECUTETIMEOUT=1000s
      - CORE_CHAINCODE_DEPLOYTIMEOUT=1000s
      - CORE_PEER_TLS_ENABLED=false
      - CORE_PEER_TLS_CERT_FILE=/etc/hyperledger/fabric/tls/server.crt
      - CORE_PEER_TLS_KEY_FILE=/etc/hyperledger/fabric/tls/server.key
      - CORE_PEER_TLS_ROOTCERT_FILE=/etc/hyperledger/fabric/tls/ca.crt
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
    command: peer node start
    volumes:
      - /var/run/:/host/var/run/
      - /var/hyperledger/peer_data/:/var/hyperledger/production/
      - ../peer:/etc/hyperledger/fabric/
      - ./crypto-config/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/msp:/etc/hyperledger/fabric/msp
      - ./crypto-config/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls:/etc/hyperledger/fabric/tls
    ports:
      - 7051:7051
      - 7052:7052
      - 7053:7053
    extra_hosts:
      - "orderer0.example.com:10.20.31.174"
      - "orderer1.example.com:10.20.31.175"
      - "orderer2.example.com:10.20.31.176"

  cli:
    container_name: cli
    image: hyperledger/fabric-tools
    tty: true
    environment:
      - GOPATH=/opt/gopath
      - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
      # - CORE_LOGGING_LEVEL=ERROR
      - CORE_LOGGING_LEVEL=DEBUG
      - CORE_PEER_ID=cli
      - CORE_PEER_ADDRESS=peer0.org1.example.com:7051
      - CORE_PEER_LOCALMSPID=Org1MSP
      - CORE_PEER_TLS_ENABLED=false
      - CORE_PEER_TLS_CERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/server.crt
      - CORE_PEER_TLS_KEY_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/server.key
      - CORE_PEER_TLS_ROOTCERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/ca.crt
      - CORE_PEER_MSPCONFIGPATH=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/users/Admin@org1.example.com/msp
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
    volumes:
      - /var/run/:/host/var/run/
      #- ./chaincode/go/:/opt/gopath/src/github.com/hyperledger/fabric/kafkapeer/chaincode/go
      - /root/go/src/github.com/hyperledger/fabric:/opt/gopath/src/github.com/hyperledger/fabric
      - ./chaincode/go/:/opt/gopath/src/github.com/hyperledger/fabric/examples/chaincode/go
      - ./crypto-config:/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/
      - ./channel-artifacts:/opt/gopath/src/github.com/hyperledger/fabric/peer/channel-artifacts
    extra_hosts:
      - "orderer0.example.com:10.20.31.174"
      - "orderer1.example.com:10.20.31.175"
      - "orderer2.example.com:10.20.31.176"
      - "peer0.org1.example.com:10.20.31.126"
      - "peer1.org1.example.com:10.20.31.127"
      - "peer0.org2.example.com:10.20.31.131"
      - "peer1.org2.example.com:10.20.31.132"
```
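The peer service above sets `CORE_PEER_GOSSIP_USELEADERELECTION=true` and `CORE_PEER_GOSSIP_ORGLEADER=false`. According to the comments in core.yaml, the two options are mutually exclusive, and setting both to true terminates the peer. A minimal sketch of a check for that combination, using a made-up function name `check_gossip_leader`:

```shell
# Hypothetical helper: reject the one combination the core.yaml comment warns
# about, namely useLeaderElection=true together with orgLeader=true.
#   $1 = CORE_PEER_GOSSIP_USELEADERELECTION
#   $2 = CORE_PEER_GOSSIP_ORGLEADER
check_gossip_leader() {
  if [ "$1" = "true" ] && [ "$2" = "true" ]; then
    echo "invalid: both set to true"
    return 1
  fi
  echo "ok"
}

# The values used in this compose file.
check_gossip_leader true false
```

If leader election is turned off instead, the same core.yaml comment asks that at least one peer in the organization has `orgLeader` set to true; this sketch does not check that, because it depends on the other peers' settings.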

2) Prepare the core.yaml configuration file and copy it to the $GOPATH/src/github.com/hyperledger/fabric/peer directory.

```yaml
# Copyright IBM Corp. All Rights Reserved.
#
# SPDX-License-Identifier: Apache-2.0
#

###############################################################################
#
#    Peer section
#
###############################################################################
peer:

    # The Peer id is used for identifying this Peer instance.
    id: jdoe

    # The networkId allows for logical seperation of networks
    networkId: dev

    # The Address at local network interface this Peer will listen on.
    # By default, it will listen on all network interfaces
    listenAddress: 0.0.0.0:7051

    # The endpoint this peer uses to listen for inbound chaincode connections.
    # If this is commented-out, the listen address is selected to be
    # the peer's address (see below) with port 7052
    # chaincodeListenAddress: 0.0.0.0:7052

    # The endpoint the chaincode for this peer uses to connect to the peer.
    # If this is not specified, the chaincodeListenAddress address is selected.
    # And if chaincodeListenAddress is not specified, address is selected from
    # peer listenAddress.
    # chaincodeAddress: 0.0.0.0:7052

    # When used as peer config, this represents the endpoint to other peers
    # in the same organization. For peers in other organization, see
    # gossip.externalEndpoint for more info.
    # When used as CLI config, this means the peer's endpoint to interact with
    address: 0.0.0.0:7051

    # Whether the Peer should programmatically determine its address
    # This case is useful for docker containers.
    addressAutoDetect: false

    # Setting for runtime.GOMAXPROCS(n). If n < 1, it does not change the
    # current setting
    gomaxprocs: -1

    # Keepalive settings for peer server and clients
    keepalive:
        # MinInterval is the minimum permitted time between client pings.
        # If clients send pings more frequently, the peer server will
        # disconnect them
        minInterval: 60s
        # Client keepalive settings for communicating with other peer nodes
        client:
            # Interval is the time between pings to peer nodes.  This must
            # greater than or equal to the minInterval specified by peer
            # nodes
            interval: 60s
            # Timeout is the duration the client waits for a response from
            # peer nodes before closing the connection
            timeout: 20s
        # DeliveryClient keepalive settings for communication with ordering
        # nodes.
        deliveryClient:
            # Interval is the time between pings to ordering nodes.  This must
            # greater than or equal to the minInterval specified by ordering
            # nodes.
            interval: 60s
            # Timeout is the duration the client waits for a response from
            # ordering nodes before closing the connection
            timeout: 20s

    # Gossip related configuration
    gossip:
        # Bootstrap set to initialize gossip with.
        # This is a list of other peers that this peer reaches out to at startup.
        # Important: The endpoints here have to be endpoints of peers in the same
        # organization, because the peer would refuse connecting to these endpoints
        # unless they are in the same organization as the peer.
        bootstrap: 127.0.0.1:7051

        # NOTE: orgLeader and useLeaderElection parameters are mutual exclusive.
        # Setting both to true would result in the termination of the peer
        # since this is undefined state. If the peers are configured with
        # useLeaderElection=false, make sure there is at least 1 peer in the
        # organization that its orgLeader is set to true.

        # Defines whenever peer will initialize dynamic algorithm for
        # "leader" selection, where leader is the peer to establish
        # connection with ordering service and use delivery protocol
        # to pull ledger blocks from ordering service. It is recommended to
        # use leader election for large networks of peers.
        useLeaderElection: true
        # Statically defines peer to be an organization "leader",
        # where this means that current peer will maintain connection
        # with ordering service and disseminate block across peers in
        # its own organization
        orgLeader: false

        # Interval for membershipTracker polling
        membershipTrackerInterval: 5s

        # Overrides the endpoint that the peer publishes to peers
        # in its organization. For peers in foreign organizations
        # see 'externalEndpoint'
        endpoint:
        # Maximum count of blocks stored in memory
        maxBlockCountToStore: 100
        # Max time between consecutive message pushes(unit: millisecond)
        maxPropagationBurstLatency: 10ms
        # Max number of messages stored until a push is triggered to remote peers
        maxPropagationBurstSize: 10
        # Number of times a message is pushed to remote peers
        propagateIterations: 1
        # Number of peers selected to push messages to
        propagatePeerNum: 3
        # Determines frequency of pull phases(unit: second)
        # Must be greater than digestWaitTime + responseWaitTime
        pullInterval: 4s
        # Number of peers to pull from
        pullPeerNum: 3
        # Determines frequency of pulling state info messages from peers(unit: second)
        requestStateInfoInterval: 4s
        # Determines frequency of pushing state info messages to peers(unit: second)
        publishStateInfoInterval: 4s
        # Maximum time a stateInfo message is kept until expired
        stateInfoRetentionInterval:
        # Time from startup certificates are included in Alive messages(unit: second)
        publishCertPeriod: 10s
        # Should we skip verifying block messages or not (currently not in use)
        skipBlockVerification: false
        # Dial timeout(unit: second)
        dialTimeout: 3s
        # Connection timeout(unit: second)
        connTimeout: 2s
        # Buffer size of received messages
        recvBuffSize: 20
        # Buffer size of sending messages
        sendBuffSize: 200
        # Time to wait before pull engine processes incoming digests (unit: second)
        # Should be slightly smaller than requestWaitTime
        digestWaitTime: 1s
        # Time to wait before pull engine removes incoming nonce (unit: milliseconds)
        # Should be slightly bigger than digestWaitTime
        requestWaitTime: 1500ms
        # Time to wait before pull engine ends pull (unit: second)
        responseWaitTime: 2s
        # Alive check interval(unit: second)
        aliveTimeInterval: 5s
        # Alive expiration timeout(unit: second)
        aliveExpirationTimeout: 25s
        # Reconnect interval(unit: second)
        reconnectInterval: 25s
        # This is an endpoint that is published to peers outside of the organization.
        # If this isn't set, the peer will not be known to other organizations.
        externalEndpoint:
        # Leader election service configuration
        election:
            # Longest time peer waits for stable membership during leader election startup (unit: second)
            startupGracePeriod: 15s
            # Interval gossip membership samples to check its stability (unit: second)
            membershipSampleInterval: 1s
            # Time passes since last declaration message before peer decides to perform leader election (unit: second)
            leaderAliveThreshold: 10s
            # Time between peer sends propose message and declares itself as a leader (sends declaration message) (unit: second)
            leaderElectionDuration: 5s

        pvtData:
            # pullRetryThreshold determines the maximum duration of time private data corresponding for a given block
            # would be attempted to be pulled from peers until the block would be committed without the private data
            pullRetryThreshold: 60s
            # As private data enters the transient store, it is associated with the peer's ledger's height at that time.
            # transientstoreMaxBlockRetention defines the maximum difference between the current ledger's height upon commit,
            # and the private data residing inside the transient store that is guaranteed not to be purged.
            # Private data is purged from the transient store when blocks with sequences that are multiples
            # of transientstoreMaxBlockRetention are committed.
            transientstoreMaxBlockRetention: 1000
            # pushAckTimeout is the maximum time to wait for an acknowledgement from each peer
            # at private data push at endorsement time.
            pushAckTimeout: 3s
            # Block to live pulling margin, used as a buffer
            # to prevent peer from trying to pull private data
            # from peers that is soon to be purged in next N blocks.
            # This helps a newly joined peer catch up to current
            # blockchain height quicker.
            btlPullMargin: 10
            # the process of reconciliation is done in an endless loop, while in each iteration reconciler tries to
            # pull from the other peers the most recent missing blocks with a maximum batch size limitation.
            # reconcileBatchSize determines the maximum batch size of missing private data that will be reconciled in a
            # single iteration.
            reconcileBatchSize: 10
            # reconcileSleepInterval determines the time reconciler sleeps from end of an iteration until the beginning
            # of the next reconciliation iteration.
            reconcileSleepInterval: 1m
            # reconciliationEnabled is a flag that indicates whether private data reconciliation is enable or not.
            reconciliationEnabled: true

    # TLS Settings
    # Note that peer-chaincode connections through chaincodeListenAddress is
    # not mutual TLS auth. See comments on chaincodeListenAddress for more info
    tls:
        # Require server-side TLS
        enabled: false
        # Require client certificates / mutual TLS.
        # Note that clients that are not configured to use a certificate will
        # fail to connect to the peer.
        clientAuthRequired: false
        # X.509 certificate used for TLS server
        cert:
            file: tls/server.crt
        # Private key used for TLS server (and client if clientAuthEnabled
        # is set to true
        key:
            file: tls/server.key
        # Trusted root certificate chain for tls.cert
        rootcert:
            file: tls/ca.crt
        # Set of root certificate authorities used to verify client certificates
        clientRootCAs:
            files:
                - tls/ca.crt
        # Private key used for TLS when making client connections.  If
        # not set, peer.tls.key.file will be used instead
        clientKey:
            file:
        # X.509 certificate used for TLS when making client connections.
        # If not set, peer.tls.cert.file will be used instead
        clientCert:
            file:

    # Authentication contains configuration parameters related to authenticating
    # client messages
    authentication:
        # the acceptable difference between the current server time and the
        # client's time as specified in a client request message
        timewindow: 15m

    # Path on the file system where peer will store data (eg ledger). This
    # location must be access control protected to prevent unintended
    # modification that might corrupt the peer operations.
    fileSystemPath: /var/hyperledger/production

    # BCCSP (Blockchain crypto provider): Select which crypto implementation or
    # library to use
    BCCSP:
        Default: SW
        # Settings for the SW crypto provider (i.e. when DEFAULT: SW)
        SW:
            # TODO: The default Hash and Security level needs refactoring to be
            # fully configurable. Changing these defaults requires coordination
            # SHA2 is hardcoded in several places, not only BCCSP
            Hash: SHA2
            Security: 256
            # Location of Key Store
            FileKeyStore:
                # If "", defaults to 'mspConfigPath'/keystore
                KeyStore:
        # Settings for the PKCS#11 crypto provider (i.e. when DEFAULT: PKCS11)
        PKCS11:
            # Location of the PKCS11 module library
            Library:
            # Token Label
            Label:
            # User PIN
            Pin:
            Hash:
            Security:
            FileKeyStore:
                KeyStore:

    # Path on the file system where peer will find MSP local configurations
    mspConfigPath: msp

    # Identifier of the local MSP
    # ----!!!!IMPORTANT!!!-!!!IMPORTANT!!!-!!!IMPORTANT!!!!----
    # Deployers need to change the value of the localMspId string.
    # In particular, the name of the local MSP ID of a peer needs
    # to match the name of one of the MSPs in each of the channel
    # that this peer is a member of. Otherwise this peer's messages
    # will not be identified as valid by other nodes.
    localMspId: Org1MSP

    # CLI common client config options
    client:
        # connection timeout
        connTimeout: 3s

    # Delivery service related config
    deliveryclient:
        # It sets the total time the delivery service may spend in reconnection
        # attempts until its retry logic gives up and returns an error
        reconnectTotalTimeThreshold: 3600s

        # It sets the delivery service <-> ordering service node connection timeout
        connTimeout: 3s

        # It sets the delivery service maximal delay between consecutive retries
        reConnectBackoffThreshold: 3600s

    # Type for the local MSP - by default it's of type bccsp
    localMspType: bccsp

    # Used with Go profiling tools only in none production environment. In
    # production, it should be disabled (eg enabled: false)
    profile:
        enabled: false
        listenAddress: 0.0.0.0:6060

    # The admin service is used for administrative operations such as
    # control over logger levels, etc.
    # Only peer administrators can use the service.
    adminService:
        # The interface and port on which the admin server will listen on.
        # If this is commented out, or the port number is equal to the port
        # of the peer listen address - the admin service is attached to the
        # peer's service (defaults to 7051).
        #listenAddress: 0.0.0.0:7055

    # Handlers defines custom handlers that can filter and mutate
    # objects passing within the peer, such as:
    #   Auth filter - reject or forward proposals from clients
    #   Decorators  - append or mutate the chaincode input passed to the chaincode
    #   Endorsers   - Custom signing over proposal response payload and its mutation
    # Valid handler definition contains:
    #   - A name which is a factory method name defined in
    #     core/handlers/library/library.go for statically compiled handlers
    #   - library path to shared object binary for pluggable filters
    # Auth filters and decorators are chained and executed in the order that
    # they are defined. For example:
    # authFilters:
    #   -
    #     name: FilterOne
    #     library: /opt/lib/filter.so
    #   -
    #     name: FilterTwo
    # decorators:
    #   -
    #     name: DecoratorOne
    #   -
    #     name: DecoratorTwo
    #     library: /opt/lib/decorator.so
    # Endorsers are configured as a map that its keys are the endorsement system chaincodes that are being overridden.
    # Below is an example that overrides the default ESCC and uses an endorsement plugin that has the same functionality
    # as the default ESCC.
    # If the 'library' property is missing, the name is used as the constructor method in the builtin library similar
    # to auth filters and decorators.
    # endorsers:
    #   escc:
    #     name: DefaultESCC
    #     library: /etc/hyperledger/fabric/plugin/escc.so
    handlers:
        authFilters:
            -
                name: DefaultAuth
            -
                name: ExpirationCheck    # This filter checks identity x509 certificate expiration
        decorators:
            -
                name: DefaultDecorator
        endorsers:
            escc:
                name: DefaultEndorsement
                library:
        validators:
            vscc:
                name: DefaultValidation
                library:

    #    library: /etc/hyperledger/fabric/plugin/escc.so
    # Number of goroutines that will execute transaction validation in parallel.
    # By default, the peer chooses the number of CPUs on the machine. Set this
    # variable to override that choice.
    # NOTE: overriding this value might negatively influence the performance of
    # the peer so please change this value only if you know what you're doing
    validatorPoolSize:

    # The discovery service is used by clients to query information about peers,
    # such as - which peers have joined a certain channel, what is the latest
    # channel config, and most importantly - given a chaincode and a channel,
    # what possible sets of peers satisfy the endorsement policy.
    discovery:
        enabled: true
        # Whether the authentication cache is enabled or not.
        authCacheEnabled: true
        # The maximum size of the cache, after which a purge takes place
        authCacheMaxSize: 1000
        # The proportion (0 to 1) of entries that remain in the cache after the cache is purged due to overpopulation
        authCachePurgeRetentionRatio: 0.75
        # Whether to allow non-admins to perform non channel scoped queries.
        # When this is false, it means that only peer admins can perform non channel scoped queries.
        orgMembersAllowedAccess: false

###############################################################################
#
#    VM section
#
###############################################################################
vm:

    # Endpoint of the vm management system.  For docker can be one of the following in general
    # unix:///var/run/docker.sock
    # http://localhost:2375
    # https://localhost:2376
    endpoint: unix:///var/run/docker.sock

    # settings for docker vms
    docker:
        tls:
            enabled: false
            ca:
                file: docker/ca.crt
            cert:
                file: docker/tls.crt
            key:
                file: docker/tls.key

        # Enables/disables the standard out/err from chaincode containers for
        # debugging purposes
        attachStdout: false

        # Parameters on creating docker container.
        # Container may be efficiently created using ipam & dns-server for cluster
        # NetworkMode - sets the networking mode for the container. Supported
        # standard values are: `host`(default),`bridge`,`ipvlan`,`none`.
        # Dns - a list of DNS servers for the container to use.
        # Note:  `Privileged` `Binds` `Links` and `PortBindings` properties of
        # Docker Host Config are not supported and will not be used if set.
        # LogConfig - sets the logging driver (Type) and related options
        # (Config) for Docker. For more info,
        # https://docs.docker.com/engine/admin/logging/overview/
        # Note: Set LogConfig using Environment Variables is not supported.
        hostConfig:
            NetworkMode: host
            Dns:
                # - 192.168.0.1
            LogConfig:
                Type: json-file
                Config:
                    max-size: "50m"
                    max-file: "5"
            Memory: 2147483648

###############################################################################
#
#    Chaincode section
#
###############################################################################
chaincode:

    # The id is used by the Chaincode stub to register the executing Chaincode
    # ID with the Peer and is generally supplied through ENV variables
    # the `path` form of ID is provided when installing the chaincode.
    # The `name` is used for all other requests and can be any string.
    id:
        path:
        name:

    # Generic builder environment, suitable for most chaincode types
    builder: $(DOCKER_NS)/fabric-ccenv:latest

    # Enables/disables force pulling of the base docker images (listed below)
    # during user chaincode instantiation.
    # Useful when using moving image tags (such as :latest)
    pull: false

    golang:
        # golang will never need more than baseos
        runtime: $(BASE_DOCKER_NS)/fabric-baseos:$(ARCH)-$(BASE_VERSION)

        # whether or not golang chaincode should be linked dynamically
        dynamicLink: false

    car:
        # car may need more facilities (JVM, etc) in the future as the catalog
        # of platforms are expanded.  For now, we can just use baseos
        runtime: $(BASE_DOCKER_NS)/fabric-baseos:$(ARCH)-$(BASE_VERSION)

    java:
        # This is an image based on java:openjdk-8 with addition compiler
        # tools added for java shim layer packaging.
        # This image is packed with shim layer libraries that are necessary
        # for Java chaincode runtime.
        runtime: $(DOCKER_NS)/fabric-javaenv:$(ARCH)-$(PROJECT_VERSION)

    node:
        # need node.js engine at runtime, currently available in baseimage
        # but not in baseos
        runtime: $(BASE_DOCKER_NS)/fabric-baseimage:$(ARCH)-$(BASE_VERSION)

    # Timeout duration for starting up a container and waiting for Register
    # to come through. 1sec should be plenty for chaincode unit tests
    startuptimeout: 300s

    # Timeout duration for Invoke and Init calls to prevent runaway.
    # This timeout is used by all chaincodes in all the channels, including
    # system chaincodes.
    # Note that during Invoke, if the image is not available (e.g. being
    # cleaned up when in development environment), the peer will automatically
    # build the image, which might take more time. In production environment,
    # the chaincode image is unlikely to be deleted, so the timeout could be
    # reduced accordingly.
    executetimeout: 30s

    # There are 2 modes: "dev" and "net".
    # In dev mode, user runs the chaincode after starting peer from
    # command line on local machine.
    # In net mode, peer will run chaincode in a docker container.
    mode: net

    # keepalive in seconds. In situations where the communiction goes through a
    # proxy that does not support keep-alive, this parameter will maintain connection
    # between peer and chaincode.
    # A value <= 0 turns keepalive off
    keepalive: 1000m

    # system chaincodes whitelist. To add system chaincode "myscc" to the
    # whitelist, add "myscc: enable" to the list below, and register in
    # chaincode/importsysccs.go
    system:
        cscc: enable
        lscc: enable
        escc: enable
        vscc: enable
        qscc: enable

    # System chaincode plugins:
    # System chaincodes can be loaded as shared objects compiled as Go plugins.
    # See examples/plugins/scc for an example.
    # Plugins must be white listed in the chaincode.system section above.
    systemPlugins:
        # example configuration:
        # - enabled: true
        #   name: myscc
        #   path: /opt/lib/myscc.so
        #   invokableExternal: true
        #   invokableCC2CC: true

    # Logging section for the chaincode container
    logging:
        # Default level for all loggers within the chaincode container
        level: info
        # Override default level for the 'shim' logger
        shim: warning
        # Format for the chaincode container logs
        format: '%{color}%{time:2006-01-02 15:04:05.000 MST} [%{module}] %{shortfunc} -> %{level:.4s} %{id:03x}%{color:reset} %{message}'

###############################################################################
#
#    Ledger section - ledger configuration encompases both the blockchain
#    and the state
#
###############################################################################
ledger:

    blockchain:

    state:
        # stateDatabase - options are "goleveldb", "CouchDB"
        # goleveldb - default state database stored in goleveldb.
        # CouchDB - store state database in CouchDB
        stateDatabase: goleveldb
        # Limit on the number of records to return per query
        totalQueryLimit: 100000
        couchDBConfig:
            # It is recommended to run CouchDB on the same server as the peer, and
            # not map the CouchDB container port to a server port in docker-compose.
            # Otherwise proper security must be provided on the connection between
            # CouchDB client (on the peer) and server.
            couchDBAddress: 127.0.0.1:5984
            # This username must have read and write authority on CouchDB
            username:
            # The password is recommended to pass as an environment variable
            # during start up (eg LEDGER_COUCHDBCONFIG_PASSWORD).
            # If it is stored here, the file must be access control protected
            # to prevent unintended users from discovering the password.
            password:
            # Number of retries for CouchDB errors
            maxRetries: 3
            # Number of retries for CouchDB errors during peer startup
            maxRetriesOnStartup: 12
            # CouchDB request timeout (unit: duration, e.g. 20s)
            requestTimeout: 35s
            # Limit on the number of records per each CouchDB query
            # Note that chaincode queries are only bound by totalQueryLimit.
            # Internally the chaincode may execute multiple CouchDB queries,
            # each of size internalQueryLimit.
            internalQueryLimit: 1000
            # Limit on the number of records per CouchDB bulk update batch
            maxBatchUpdateSize: 1000
            # Warm indexes after every N blocks.
            # This option warms any indexes that have been
            # deployed to CouchDB after every N blocks.
            # A value of 1 will warm indexes after every block commit,
            # to ensure fast selector queries.
            # Increasing the value may improve write efficiency of peer and CouchDB,
            # but may degrade query response time.
            warmIndexesAfterNBlocks: 1
            # Create the _global_changes system database
            # This is optional.  Creating the global changes database will require
            # additional system resources to track changes and maintain the database
            createGlobalChangesDB: false

    history:
        # enableHistoryDatabase - options are true or false
        # Indicates if the history of key updates should be stored.
        # All history 'index' will be stored in goleveldb, regardless if using
        # CouchDB or alternate database for the state.
        enableHistoryDatabase: true

###############################################################################
#
#    Operations section
#
###############################################################################
operations:
    # host and port for the operations server
    listenAddress: 127.0.0.1:9443

    # TLS configuration for the operations endpoint
    tls:
        # TLS enabled
        enabled: false

        # path to PEM encoded server certificate for the operations server
        cert:
            file:

        # path to PEM encoded server key for the operations server
        key:
            file:

        # most operations service endpoints require client authentication when TLS
        # is enabled. clientAuthRequired requires client certificate authentication
        # at the TLS layer to access all resources.
        clientAuthRequired: false

        # paths to PEM encoded ca certificates to trust for client authentication
        clientRootCAs:
            files: []

###############################################################################
#
#    Metrics section
#
###############################################################################
metrics:
    # metrics provider is one of statsd, prometheus, or disabled
    provider: disabled

    # statsd configuration
    statsd:
        # network type: tcp or udp
        network: udp

        # statsd server address
        address: 127.0.0.1:8125

        # the interval at which locally cached counters and gauges are pushed
        # to statsd; timings are pushed immediately
        writeInterval: 10s

        # prefix is prepended to all emitted statsd metrics
        prefix:
```
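The `CORE_...` variables in docker-compose-peer.yaml override keys of this core.yaml. The peer reads its configuration through Viper with the `CORE` prefix, so a key path is upper-cased and its dots become underscores. A small sketch of that naming rule follows; the function name `core_env_name` is invented for illustration.

```shell
# Hypothetical helper: turn a core.yaml key path into the name of the
# environment variable that overrides it
# (prefix CORE, dots replaced by underscores, upper case).
core_env_name() {
  printf 'CORE_%s\n' "$(printf '%s' "$1" | tr '.' '_' | tr '[:lower:]' '[:upper:]')"
}

core_env_name peer.gossip.useLeaderElection   # CORE_PEER_GOSSIP_USELEADERELECTION
core_env_name peer.tls.enabled                # CORE_PEER_TLS_ENABLED
core_env_name vm.endpoint                     # CORE_VM_ENDPOINT
```

This is why `localMspId: Org1MSP` in the file and `CORE_PEER_LOCALMSPID=Org1MSP` in the compose file address the same setting; when both are present, the environment variable takes effect.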

6. Deployment configuration for server 10.20.31.127

1) Prepare the peer configuration file

Configure the docker-compose-peer.yaml file and copy it to the kafkapeer directory.

```yaml
# All elements in this file should depend on the docker-compose-base.yaml
# Provided fabric peer node

version: '2'

services:

  peer1.org1.example.com:
    container_name: peer1.org1.example.com
    hostname: peer1.org1.example.com
    image: hyperledger/fabric-peer
    environment:
      - CORE_PEER_ID=peer1.org1.example.com
      - CORE_PEER_ADDRESS=peer1.org1.example.com:7051
      #- CORE_PEER_CHAINCODELISTENADDRESS=peer1.org1.example.com:7052
      - CORE_PEER_GOSSIP_EXTERNALENDPOINT=peer1.org1.example.com:7051
      - CORE_PEER_LOCALMSPID=Org1MSP
      - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
      #- CORE_LOGGING_LEVEL=ERROR
      - CORE_LOGGING_LEVEL=DEBUG
      - CORE_PEER_GOSSIP_USELEADERELECTION=true
      - CORE_PEER_GOSSIP_ORGLEADER=false
      - CORE_PEER_PROFILE_ENABLED=true
      - CORE_CHAINCODE_EXECUTETIMEOUT=1000s
      - CORE_CHAINCODE_DEPLOYTIMEOUT=1000s
      - CORE_PEER_TLS_ENABLED=false
      - CORE_PEER_TLS_CERT_FILE=/etc/hyperledger/fabric/tls/server.crt
      - CORE_PEER_TLS_KEY_FILE=/etc/hyperledger/fabric/tls/server.key
      - CORE_PEER_TLS_ROOTCERT_FILE=/etc/hyperledger/fabric/tls/ca.crt
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
    command: peer node start
    volumes:
      - /var/run/:/host/var/run/
      - /var/hyperledger/peer_data/:/var/hyperledger/production/
      - ../peer:/etc/hyperledger/fabric/
      - ./crypto-config/peerOrganizations/org1.example.com/peers/peer1.org1.example.com/msp:/etc/hyperledger/fabric/msp
      - ./crypto-config/peerOrganizations/org1.example.com/peers/peer1.org1.example.com/tls:/etc/hyperledger/fabric/tls
    ports:
      - 7051:7051
      - 7052:7052
      - 7053:7053
    extra_hosts:
      - "orderer0.example.com:10.20.31.174"
      - "orderer1.example.com:10.20.31.175"
      - "orderer2.example.com:10.20.31.176"

  cli:
    container_name: cli
    image: hyperledger/fabric-tools
    tty: true
    environment:
      - GOPATH=/opt/gopath
      - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
      - CORE_LOGGING_LEVEL=DEBUG
      - CORE_PEER_ID=cli
      - CORE_PEER_ADDRESS=peer1.org1.example.com:7051
      - CORE_PEER_LOCALMSPID=Org1MSP
      - CORE_PEER_TLS_ENABLED=false
      - CORE_PEER_TLS_CERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer1.org1.example.com/tls/server.crt
      - CORE_PEER_TLS_KEY_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer1.org1.example.com/tls/server.key
      - CORE_PEER_TLS_ROOTCERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer1.org1.example.com/tls/ca.crt
      - CORE_PEER_MSPCONFIGPATH=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/users/Admin@org1.example.com/msp
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
    volumes:
      - /var/run/:/host/var/run/
      #- ./chaincode/go/:/opt/gopath/src/github.com/hyperledger/fabric/kafkapeer/chaincode/go
      - /root/go/src/github.com/hyperledger/fabric:/opt/gopath/src/github.com/hyperledger/fabric
      - ./chaincode/go/:/opt/gopath/src/github.com/hyperledger/fabric/examples/chaincode/go
      - ./crypto-config:/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/
      - ./channel-artifacts:/opt/gopath/src/github.com/hyperledger/fabric/peer/channel-artifacts
    extra_hosts:
      - "orderer0.example.com:10.20.31.174"
      - "orderer1.example.com:10.20.31.175"
      - "orderer2.example.com:10.20.31.176"
      - "peer0.org1.example.com:10.20.31.126"
      - "peer1.org1.example.com:10.20.31.127"
      - "peer0.org2.example.com:10.20.31.131"
      - "peer1.org2.example.com:10.20.31.132"
```
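As far as the two listings show, this file differs from the peer0 version on server 10.20.31.126 only in the peer host name (plus a few comment lines). One way to derive it, sketched here with a made-up function name, is a `sed` substitution that skips the `extra_hosts` mappings, recognised by their 10.20.31.x address, since those must keep listing every peer.

```shell
# Hypothetical helper: rewrite peer0.org1 to peer1.org1 on every line except
# the extra_hosts mappings, which are recognised by their 10.20.31.x address.
# Reads the peer0 compose file on stdin, writes the peer1 variant to stdout.
peer0_to_peer1() {
  sed '/10\.20\.31\./!s/peer0\.org1/peer1.org1/g'
}
```

For example, `peer0_to_peer1 < peer0/docker-compose-peer.yaml > peer1/docker-compose-peer.yaml`, where the two directory names are placeholders. The result is worth diffing against the listing above before use.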

2) Prepare the core.yaml configuration file and copy it to the $GOPATH/src/github.com/hyperledger/fabric/peer directory.