Kubernetes K8S: Helm Deployment, Usage, Common Operations, and Examples

Host configuration plan

| Hostname | OS version | Specs (CPU/memory/disk) | Internal IP | External IP (simulated) |
|---|---|---|---|---|
| k8s-master | CentOS7.7 | 2C/4G/20G | 172.16.1.110 | 10.0.0.110 |
| k8s-node01 | CentOS7.7 | 2C/4G/20G | 172.16.1.111 | 10.0.0.111 |
| k8s-node02 | CentOS7.7 | 2C/4G/20G | 172.16.1.112 | 10.0.0.112 |

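What is Helm

Without Helm, deploying an application on Kubernetes means creating its Deployment, Service, and other objects one by one, which is tedious. As more projects move to microservices, deploying and managing complex applications in containers becomes harder still.

Helm packages applications and supports versioned releases, which greatly simplifies deploying and managing applications on Kubernetes.

In essence, Helm makes the management of Kubernetes applications (Deployments, Services, and so on) configurable. It generates the Kubernetes resource manifests (deployment.yaml, service.yaml) dynamically from templates and then submits them to the Kubernetes API server. In Helm v2, the Tiller server does this, not kubectl.

Helm is the official Kubernetes package manager, similar to YUM, and it wraps the deployment workflow into a package. Helm has three important concepts: chart, release, and repository.

- A chart is the collection of information needed to create an application. It includes configuration templates for the Kubernetes objects, parameter definitions, dependencies, and documentation. Think of a chart as the equivalent of an apt or yum software package.

- A release is a running instance of a chart and represents one running application. Installing a chart into a Kubernetes cluster creates a release. The same chart can be installed into the same cluster many times, and each installation is a separate release. With different values, one chart can produce several distinct releases.

- A repository is where charts are published and stored.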
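The chart/release relationship can be made concrete with a small model. This is a minimal Python sketch that treats a chart as a name, a version, and default values, and each install as a separate release with its own merged values. The classes, the `install` helper, and the second release name `mytest-app02` are hypothetical. This is not how Helm stores anything internally.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Chart:
    """Hypothetical model: a chart is a name, a version and default values."""
    name: str
    version: str
    defaults: dict = field(default_factory=dict)


@dataclass
class Release:
    """Hypothetical model: a release is one installed instance of a chart."""
    name: str
    chart: Chart
    values: dict


def install(chart: Chart, release_name: str, overrides: dict) -> Release:
    # Shallow merge for brevity: user values replace chart defaults key by key.
    # (Helm itself merges nested values recursively.)
    return Release(release_name, chart, {**chart.defaults, **overrides})


app = Chart("my-test-app", "v1.0.0", {"replicaCount": 2})
r1 = install(app, "mytest-app01", {})
r2 = install(app, "mytest-app02", {"replicaCount": 3})
print(r1.name, r1.values["replicaCount"])  # mytest-app01 2
print(r2.name, r2.values["replicaCount"])  # mytest-app02 3
```

One chart object backs both releases; only the release name and the merged values differ.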

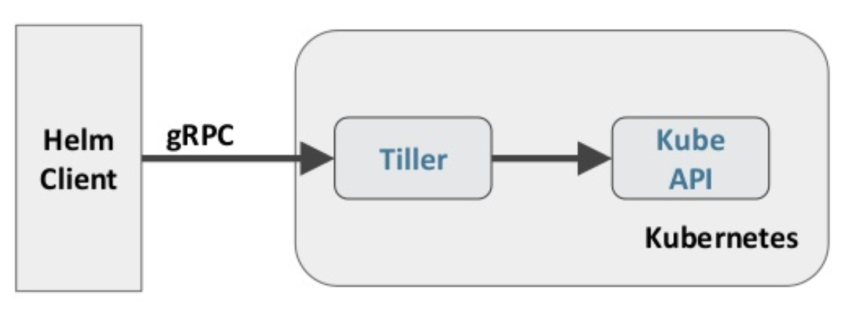

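Helm v2 consists of two components, the Helm client and the Tiller server, as shown in the figure above. Helm v3 removed Tiller; this article uses Helm v2.

The Helm client creates and manages charts and releases and talks to Tiller. The Tiller server runs inside the Kubernetes cluster, handles requests from the Helm client, and interacts with the Kubernetes API server.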

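Deploying Helm

More and more companies and teams are adopting Helm, the Kubernetes package manager, and we will also use Helm to install common Kubernetes components. Helm consists of the helm command-line client and the tiller server.

Helm on GitHub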

https://github.com/helm/helm

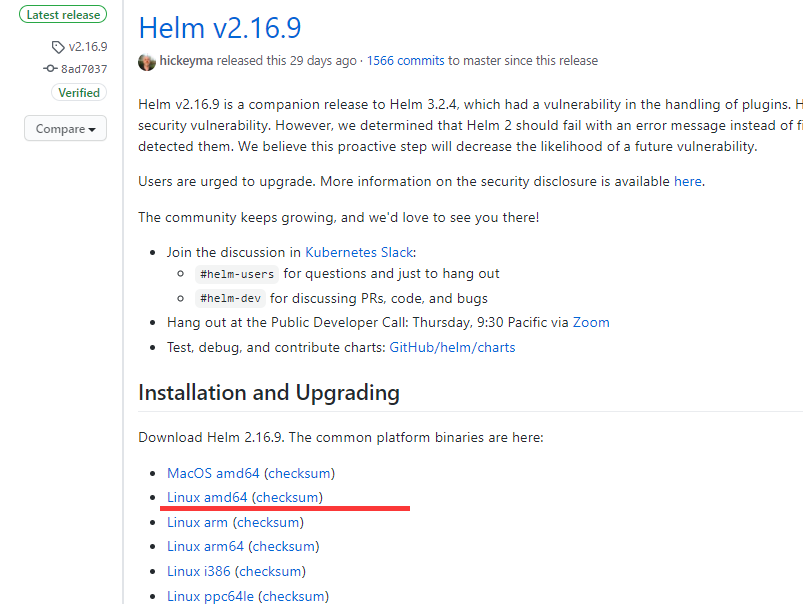

Version used in this deployment: Helm v2.16.9

Installing Helm

```shell
[root@k8s-master software]# pwd
/root/software
[root@k8s-master software]# wget https://get.helm.sh/helm-v2.16.9-linux-amd64.tar.gz
[root@k8s-master software]#
[root@k8s-master software]# tar xf helm-v2.16.9-linux-amd64.tar.gz
[root@k8s-master software]# ll
total 12624
-rw-r--r-- 1 root root 12926032 Jun 16 06:55 helm-v2.16.9-linux-amd64.tar.gz
drwxr-xr-x 2 3434 3434       50 Jun 16 06:55 linux-amd64
[root@k8s-master software]#
[root@k8s-master software]# cp -a linux-amd64/helm /usr/bin/helm
```

The Kubernetes API server has RBAC access control enabled. Tiller therefore needs its own service account, named tiller, with a suitable role granted to it. For simplicity, we bind it directly to cluster-admin, the cluster's built-in ClusterRole.

```shell
[root@k8s-master helm]# pwd
/root/k8s_practice/helm
[root@k8s-master helm]#
[root@k8s-master helm]# cat rbac-helm.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: tiller
  namespace: kube-system
[root@k8s-master helm]#
[root@k8s-master helm]# kubectl apply -f rbac-helm.yaml
serviceaccount/tiller created
clusterrolebinding.rbac.authorization.k8s.io/tiller created
```
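The same ServiceAccount + ClusterRoleBinding pair can be produced for another account name or namespace. This Python sketch emits the manifest shown above as YAML text. The function name and its parameters are hypothetical conveniences; the output is meant to be piped into `kubectl apply -f -`.

```python
def tiller_rbac_manifest(sa_name: str = "tiller",
                         namespace: str = "kube-system",
                         cluster_role: str = "cluster-admin") -> str:
    """Return a ServiceAccount + ClusterRoleBinding manifest as YAML text.

    Mirrors rbac-helm.yaml; the parameters are hypothetical knobs for reusing
    the same layout with a different account name, namespace or role.
    """
    return f"""apiVersion: v1
kind: ServiceAccount
metadata:
  name: {sa_name}
  namespace: {namespace}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: {sa_name}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: {cluster_role}
subjects:
- kind: ServiceAccount
  name: {sa_name}
  namespace: {namespace}
"""


if __name__ == "__main__":
    # Usage: python gen_rbac.py | kubectl apply -f -
    print(tiller_rbac_manifest(), end="")
```

Binding to cluster-admin keeps the lab simple. Passing a narrower ClusterRole name is the obvious knob if a tighter setup is needed.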

Initialize the Helm client and server

```shell
[root@k8s-master helm]# helm init --service-account tiller
………………
[root@k8s-master helm]# kubectl get pod -n kube-system -o wide | grep 'tiller'
tiller-deploy-8488d98b4c-j8txs   0/1     Pending   0          38m   <none>   <none>   <none>           <none>
[root@k8s-master helm]#
##### The pod was not scheduled successfully because the image pull failed; check which image it needs
[root@k8s-master helm]# kubectl describe pod tiller-deploy-8488d98b4c-j8txs -n kube-system
Name:           tiller-deploy-8488d98b4c-j8txs
Namespace:      kube-system
Priority:       0
Node:           <none>
Labels:         app=helm
                name=tiller
                pod-template-hash=8488d98b4c
Annotations:    <none>
Status:         Pending
IP:
IPs:            <none>
Controlled By:  ReplicaSet/tiller-deploy-8488d98b4c
Containers:
  tiller:
    Image:       gcr.io/kubernetes-helm/tiller:v2.16.9
    Ports:       44134/TCP, 44135/TCP
    Host Ports:  0/TCP, 0/TCP
    Liveness:    http-get http://:44135/liveness delay=1s timeout=1s period=10s #success=1 #failure=3
    Readiness:   http-get http://:44135/readiness delay=1s timeout=1s period=10s #success=1 #failure=3
    Environment:
      TILLER_NAMESPACE:    kube-system
      TILLER_HISTORY_MAX:  0
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from tiller-token-kjqb7 (ro)
Conditions:
………………
```

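As shown above, the image download failed. The image is hosted on gcr.io outside China and cannot be pulled from this cluster, so the Tiller image address has to be changed to a mirror.

```shell
[root@k8s-master helm]# helm init --upgrade --tiller-image registry.cn-beijing.aliyuncs.com/google_registry/tiller:v2.16.9
[root@k8s-master helm]#
### after waiting a while
[root@k8s-master helm]# kubectl get pod -o wide -A | grep 'till'
kube-system   tiller-deploy-7b7787d77-zln6t   1/1   Running   0   8m43s   10.244.4.123   k8s-node01   <none>   <none>
```

As shown above, the Helm server, Tiller, is now deployed successfully.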
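Instead of reading the pod list by eye, a script can decide readiness from one row of `kubectl get pod -o wide -A`. This Python sketch assumes that command's column order: NAMESPACE, NAME, READY, STATUS, and so on. The helper name `pod_is_ready` is hypothetical.

```python
def pod_is_ready(line: str) -> bool:
    """Parse one row of `kubectl get pod -o wide -A` and report readiness.

    Assumes the column order NAMESPACE NAME READY STATUS ... as shown above.
    """
    cols = line.split()
    ready, status = cols[2], cols[3]
    running, total = (int(x) for x in ready.split("/"))
    return status == "Running" and running == total


row = ("kube-system tiller-deploy-7b7787d77-zln6t 1/1 Running 0 8m43s "
       "10.244.4.123 k8s-node01 <none> <none>")
print(pod_is_ready(row))  # True
```

kubectl can also do this natively: `kubectl wait --for=condition=Ready pod -l name=tiller -n kube-system --timeout=120s` waits on the `name=tiller` label seen in the describe output. The parser above is only useful when you already have captured output to inspect.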

Check the Helm version

```shell
[root@k8s-master helm]# helm version
Client: &version.Version{SemVer:"v2.16.9", GitCommit:"8ad7037828e5a0fca1009dabe290130da6368e39", GitTreeState:"clean"}
Server: &version.Version{SemVer:"v2.16.9", GitCommit:"8ad7037828e5a0fca1009dabe290130da6368e39", GitTreeState:"dirty"}
```
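Keeping the helm client and Tiller on the same version avoids compatibility errors in Helm v2. This Python sketch pulls both SemVer strings out of `helm version` output so a script can compare them. The regular expression assumes the `SemVer:"..."` format shown above, and the helper name is hypothetical.

```python
from __future__ import annotations

import re

# Matches the SemVer field of each line, e.g. SemVer:"v2.16.9"
_SEMVER = re.compile(r'SemVer:"(v[^"]+)"')


def client_server_versions(output: str) -> tuple[str, str]:
    """Extract (client, server) versions from Helm v2 `helm version` output."""
    found = _SEMVER.findall(output)
    if len(found) != 2:
        raise ValueError("expected one Client line and one Server line")
    return found[0], found[1]


sample = '''Client: &version.Version{SemVer:"v2.16.9", GitCommit:"8ad7037828e5a0fca1009dabe290130da6368e39", GitTreeState:"clean"}
Server: &version.Version{SemVer:"v2.16.9", GitCommit:"8ad7037828e5a0fca1009dabe290130da6368e39", GitTreeState:"dirty"}'''

client, server = client_server_versions(sample)
print(client, server, client == server)  # v2.16.9 v2.16.9 True
```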

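Using Helm

Helm repositories

The chart repositories Helm uses by default

```shell
[root@k8s-master helm]# helm repo list
NAME    URL
stable  https://kubernetes-charts.storage.googleapis.com
local   http://127.0.0.1:8879/charts
```

Changing the Helm repository (whether to change it depends on your environment; usually it is not necessary)

```shell
# Remove the default stable repository
helm repo remove stable
# Add the Aliyun mirror under the name stable
helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
# Refresh the local index of every configured repository
helm repo update
# Confirm the change
helm repo list
```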
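To check from a script whether the mirror is already configured, `helm repo list` can be parsed into a NAME to URL map. This Python sketch assumes the two-column layout shown above with a single header line. `parse_repo_list` and `ALIYUN_MIRROR` are hypothetical names.

```python
from __future__ import annotations


def parse_repo_list(output: str) -> dict[str, str]:
    """Map repo NAME -> URL from `helm repo list` output (header line skipped)."""
    repos = {}
    for line in output.strip().splitlines()[1:]:
        name, url = line.split()[:2]
        repos[name] = url
    return repos


before = """NAME    URL
stable  https://kubernetes-charts.storage.googleapis.com
local   http://127.0.0.1:8879/charts"""

ALIYUN_MIRROR = "https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts"
repos = parse_repo_list(before)
# True here means "stable" does not point at the mirror yet
print(repos.get("stable") != ALIYUN_MIRROR)  # True
```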

Where Helm stores downloaded chart packages

/root/.helm/cache/archive
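In Helm v2 this path lives under the Helm home directory: `$HELM_HOME` if set, otherwise `~/.helm`, which is `/root/.helm` for root. This Python sketch lists the chart archives cached there. The helper names are hypothetical, and the sketch only looks for `*.tgz` files.

```python
from __future__ import annotations

import os
from pathlib import Path


def helm_home() -> Path:
    """Helm v2 home: $HELM_HOME if set, otherwise ~/.helm."""
    return Path(os.environ.get("HELM_HOME", str(Path.home() / ".helm")))


def cached_charts(home: Path | None = None) -> list[str]:
    """Return the names of chart archives (*.tgz) in <helm home>/cache/archive."""
    archive_dir = (home or helm_home()) / "cache" / "archive"
    if not archive_dir.is_dir():
        return []
    return sorted(p.name for p in archive_dir.glob("*.tgz"))


if __name__ == "__main__":
    for name in cached_charts():
        print(name)
```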

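Common Helm application operations

```shell
# List all applications available in the chart repositories
helm search
# Search for a specific application
helm search memcached
# Show details of a specific chart
helm inspect stable/memcached
# Install a package with helm; --name sets the release name
helm install --name memcached1 stable/memcached
# List installed releases
helm list
# Delete the specified release
helm delete memcached1
```

Common Helm commands

Chart management

- `create`: create a new chart with the given name
- `fetch`: download a chart from a repository and (optionally) unpack it into a local directory
- `inspect`: show chart details
- `package`: package a chart directory into a chart archive
- `lint`: check a chart for syntax problems
- `verify`: verify that the chart at the given path has been signed and is valid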
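`helm package` writes the archive as `<name>-<version>.tgz`, taking both fields from Chart.yaml. The example chart later in this article also keeps `name` equal to the chart's directory name. This Python sketch checks that rule and predicts the archive name. It reads only flat top-level `key: value` lines, skipping indented lines, list items, and comments, so it is not a YAML parser. Use a real one, such as PyYAML, for anything beyond this example. The function names are hypothetical.

```python
from __future__ import annotations

from pathlib import Path


def top_level_fields(chart_yaml: str) -> dict[str, str]:
    """Collect flat top-level `key: value` pairs from Chart.yaml text.

    Indented lines (e.g. a maintainer's `name:`), list items and comments
    are skipped, as are keys with no inline value (e.g. `keywords:`).
    """
    fields = {}
    for line in chart_yaml.splitlines():
        if not line or line[0] in " \t-#":
            continue
        key, sep, value = line.partition(":")
        if sep and value.strip():
            fields[key.strip()] = value.strip()
    return fields


def package_name(chart_dir: Path, chart_yaml: str) -> str:
    """Check that `name` matches the directory and return the archive name."""
    meta = top_level_fields(chart_yaml)
    if meta["name"] != chart_dir.name:
        raise ValueError(f"name {meta['name']!r} != directory {chart_dir.name!r}")
    return f"{meta['name']}-{meta['version']}.tgz"


chart_yaml = """apiVersion: v1
appVersion: v2.2
description: my test app
keywords:
- myapp
maintainers:
- email: zhang@test.com
  name: zhang
name: my-test-app
version: v1.0.0
"""
print(package_name(Path("my-test-app"), chart_yaml))  # my-test-app-v1.0.0.tgz
```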

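Release management

- `get`: download a named release (shows its details)
- `delete`: delete the release with the given name from Kubernetes
- `install`: install a chart
- `list`: list releases
- `upgrade`: upgrade a release
- `rollback`: roll a release back to a previous revision
- `status`: display the status of a release
- `history`: fetch the revision history of a release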
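`upgrade`, `rollback`, and `history` all work on revision numbers. Helm numbers the first install as revision 1 and adds a new revision for every upgrade. A rollback also adds a new revision that reuses the configuration of the older one; it does not rewind the counter. The following Python sketch is a toy model of that bookkeeping. `ReleaseHistory` is a hypothetical class and not Helm's storage format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ReleaseHistory:
    """Toy model of one release's revision history (hypothetical)."""
    revisions: list[dict] = field(default_factory=list)

    def deploy(self, values: dict) -> int:
        # install or upgrade: append a new revision; numbering starts at 1
        self.revisions.append(dict(values))
        return len(self.revisions)

    def rollback(self, revision: int) -> int:
        # rollback: re-deploy an older revision's values as a NEW revision
        return self.deploy(self.revisions[revision - 1])

    @property
    def current(self) -> dict:
        return self.revisions[-1]


h = ReleaseHistory()
h.deploy({"images.tag": "v2"})  # helm install      -> revision 1
h.deploy({"images.tag": "v3"})  # helm upgrade      -> revision 2
rev = h.rollback(1)             # helm rollback ... 1 -> revision 3
print(rev, h.current)  # 3 {'images.tag': 'v2'}
```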

Common Helm operations

```shell
# Add a repository
helm repo add NAME URL    # e.g. helm repo add incubator http://storage.googleapis.com/kubernetes-charts-incubator
##### Examples
helm repo add incubator http://storage.googleapis.com/kubernetes-charts-incubator
helm repo add elastic https://helm.elastic.co
# List the configured repositories
helm repo list
# Create a chart skeleton (useful as a reference; charts are usually written by hand)
helm create CHART_PATH
# Deploy a release from the given chart
helm install --name RELEASE_NAME CHART_PATH
# Simulate installing a release from the given chart and print debug output
helm install --dry-run --debug --name RELEASE_NAME CHART_PATH
# List deployed releases
helm list
# List all releases
helm list --all
# Show the status of a release
helm status RELEASE_NAME
# Roll a release back to the given revision (the Helm release revision, not the app version)
helm rollback RELEASE_NAME REVISION_NUM
# Show the revision history of a release
helm history RELEASE_NAME
# Package a chart, e.g. helm package my-test-app/
helm package CHART_PATH
# Lint a chart
helm lint CHART_PATH
# Show chart details
helm inspect CHART_PATH
# Delete the release's resources from Kubernetes (the release record is still visible in helm list --all)
helm delete RELEASE_NAME
# Delete the release's resources from Kubernetes and also remove the release record
helm delete --purge RELEASE_NAME
```
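The last two commands differ in what they leave behind. This Python sketch models Helm v2 release records to show the difference. After a plain `helm delete`, the record stays with status DELETED: `helm list` hides it, `helm list --all` shows it, and the name cannot be reused. After `--purge`, the record is gone. `ReleaseStore` is hypothetical and tracks only the DEPLOYED and DELETED states.

```python
from __future__ import annotations


class ReleaseStore:
    """Toy model of Helm v2 release records; not Helm's real storage.

    Only DEPLOYED and DELETED are modelled (Helm also has FAILED and others).
    """

    def __init__(self) -> None:
        self.records: dict[str, str] = {}  # release name -> status

    def install(self, name: str) -> None:
        # Helm v2 will not reuse a release name while any record of it
        # remains, even one marked DELETED, until it is purged.
        if name in self.records:
            raise ValueError(f"a release named {name} already exists")
        self.records[name] = "DEPLOYED"

    def delete(self, name: str, purge: bool = False) -> None:
        if purge:
            del self.records[name]          # helm delete --purge: drop the record
        else:
            self.records[name] = "DELETED"  # helm delete: keep the record

    def ls(self, all_releases: bool = False) -> list[str]:
        # helm list hides DELETED releases; helm list --all shows them
        return sorted(n for n, s in self.records.items()
                      if all_releases or s != "DELETED")


store = ReleaseStore()
store.install("memcached1")
store.delete("memcached1")
print(store.ls(), store.ls(all_releases=True))  # [] ['memcached1']
store.delete("memcached1", purge=True)
store.install("memcached1")  # the name is free again after --purge
print(store.ls())  # ['memcached1']
```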

Work through the example below alongside the operations above to see them in more detail.

Helm example

Chart files

```shell
[root@k8s-master helm]# pwd
/root/k8s_practice/helm
[root@k8s-master helm]#
[root@k8s-master helm]# mkdir my-test-app
[root@k8s-master helm]# cd my-test-app
[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# ll
total 8
-rw-r--r-- 1 root root 158 Jul 16 17:53 Chart.yaml
drwxr-xr-x 2 root root  49 Jul 16 21:04 templates
-rw-r--r-- 1 root root 129 Jul 16 21:04 values.yaml
[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# cat Chart.yaml
apiVersion: v1
appVersion: v2.2
description: my test app
keywords:
- myapp
maintainers:
- email: zhang@test.com
  name: zhang
# this name is the same as the chart's directory name
name: my-test-app
version: v1.0.0
[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# cat values.yaml
deployname: my-test-app02
replicaCount: 2
images:
  repository: registry.cn-beijing.aliyuncs.com/google_registry/myapp
  tag: v2
[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# ll templates/
total 8
-rw-r--r-- 1 root root 544 Jul 16 21:04 deployment.yaml
-rw-r--r-- 1 root root 222 Jul 16 20:41 service.yaml
[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# cat templates/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Values.deployname }}
  labels:
    app: mytestapp-deploy
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: mytestapp
      env: test
  template:
    metadata:
      labels:
        app: mytestapp
        env: test
        description: mytest
    spec:
      containers:
      - name: myapp-pod
        image: {{ .Values.images.repository }}:{{ .Values.images.tag }}
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# cat templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-test-app
  namespace: default
spec:
  type: NodePort
  selector:
    app: mytestapp
    env: test
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
```
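The `{{ .Values.x.y }}` placeholders in the templates are filled from values.yaml when the release is rendered. This Python sketch imitates only that lookup step, to show how `images.repository` and `images.tag` end up in the image line. It is a deliberately simplified stand-in, not Helm's Go template engine: there are no pipelines, functions, conditionals, or loops. `render` is a hypothetical helper. To see Helm's actual output, use `helm install --dry-run --debug` as listed earlier.

```python
import re

# Matches placeholders such as {{ .Values.images.tag }} (whitespace optional)
_PLACEHOLDER = re.compile(r"\{\{\s*\.Values\.([A-Za-z0-9_.]+)\s*\}\}")


def render(template: str, values: dict) -> str:
    """Replace {{ .Values.a.b }} placeholders with entries of a nested dict.

    Simplified: only plain lookups; a missing key raises KeyError here,
    whereas Go templates would behave differently.
    """
    def lookup(match):
        node = values
        for key in match.group(1).split("."):
            node = node[key]
        return str(node)

    return _PLACEHOLDER.sub(lookup, template)


values = {
    "deployname": "my-test-app02",
    "replicaCount": 2,
    "images": {
        "repository": "registry.cn-beijing.aliyuncs.com/google_registry/myapp",
        "tag": "v2",
    },
}
snippet = "image: {{ .Values.images.repository }}:{{ .Values.images.tag }}"
print(render(snippet, values))
# image: registry.cn-beijing.aliyuncs.com/google_registry/myapp:v2
```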

Create a release

```shell
[root@k8s-master my-test-app]# pwd
/root/k8s_practice/helm/my-test-app
[root@k8s-master my-test-app]# ll
total 8
-rw-r--r-- 1 root root 160 Jul 16 21:15 Chart.yaml
drwxr-xr-x 2 root root  49 Jul 16 21:04 templates
-rw-r--r-- 1 root root 129 Jul 16 21:04 values.yaml
[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# helm install --name mytest-app01 .    ### from the parent directory: helm install --name mytest-app01 my-test-app/
NAME:   mytest-app01
LAST DEPLOYED: Thu Jul 16 21:18:08 2020
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
==> v1/Deployment
NAME           READY  UP-TO-DATE  AVAILABLE  AGE
my-test-app02  0/2    2           0          0s

==> v1/Pod(related)
NAME                            READY  STATUS             RESTARTS  AGE
my-test-app02-58cb6b67fc-4ss4v  0/1    ContainerCreating  0         0s
my-test-app02-58cb6b67fc-w2nhc  0/1    ContainerCreating  0         0s

==> v1/Service
NAME         TYPE      CLUSTER-IP    EXTERNAL-IP  PORT(S)       AGE
my-test-app  NodePort  10.110.82.62  <none>       80:30965/TCP  0s


[root@k8s-master my-test-app]# helm list
NAME          REVISION  UPDATED                   STATUS    CHART               APP VERSION  NAMESPACE
mytest-app01  1         Thu Jul 16 21:18:08 2020  DEPLOYED  my-test-app-v1.0.0  v2.2         default
```
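The `PORT(S)` cell `80:30965/TCP` means Service port 80 is exposed on node port 30965. That node port is what the node-IP curl calls below use. This Python sketch turns such a cell into URLs for a given node IP. It assumes NodePort-style `port:nodePort/protocol` entries, comma-separated when there are several; a plain ClusterIP cell like `443/TCP` is not handled. The helper names are hypothetical.

```python
from __future__ import annotations


def parse_ports(ports: str) -> list[tuple[int, int, str]]:
    """Parse a PORT(S) cell like '80:30965/TCP' into (port, nodePort, protocol)."""
    result = []
    for item in ports.split(","):
        spec, _, proto = item.strip().partition("/")
        port, _, node_port = spec.partition(":")
        result.append((int(port), int(node_port), proto))
    return result


def node_urls(node_ip: str, ports: str) -> list[str]:
    """Build http://<node_ip>:<nodePort>/ for every NodePort entry."""
    return [f"http://{node_ip}:{node_port}/" for _, node_port, _ in parse_ports(ports)]


print(node_urls("172.16.1.110", "80:30965/TCP"))  # ['http://172.16.1.110:30965/']
```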

Access with curl

```shell
[root@k8s-master ~]# kubectl get pod -o wide
NAME                             READY   STATUS    RESTARTS   AGE    IP             NODE         NOMINATED NODE   READINESS GATES
my-test-app02-58cb6b67fc-4ss4v   1/1     Running   0          9m3s   10.244.2.187   k8s-node02   <none>           <none>
my-test-app02-58cb6b67fc-w2nhc   1/1     Running   0          9m3s   10.244.4.134   k8s-node01   <none>           <none>
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl get svc -o wide
NAME          TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE    SELECTOR
kubernetes    ClusterIP   10.96.0.1      <none>        443/TCP        65d    <none>
my-test-app   NodePort    10.110.82.62   <none>        80:30965/TCP   9m8s   app=mytestapp,env=test
[root@k8s-master ~]#
##### Access via the Service's cluster IP
[root@k8s-master ~]# curl 10.110.82.62
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
[root@k8s-master ~]#
[root@k8s-master ~]# curl 10.110.82.62/hostname.html
my-test-app02-58cb6b67fc-4ss4v
[root@k8s-master ~]#
[root@k8s-master ~]# curl 10.110.82.62/hostname.html
my-test-app02-58cb6b67fc-w2nhc
[root@k8s-master ~]#
##### Access via the node's own IP and the NodePort
[root@k8s-master ~]# curl 172.16.1.110:30965/hostname.html
my-test-app02-58cb6b67fc-w2nhc
[root@k8s-master ~]#
[root@k8s-master ~]# curl 172.16.1.110:30965/hostname.html
my-test-app02-58cb6b67fc-4ss4v
```
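Repeated `hostname.html` requests are answered by different pods because the Service spreads traffic across its endpoints. This Python sketch repeats the request and counts which pod answered each time. The split depends on the kube-proxy mode and is not guaranteed to be even. The fetch function is injectable so the counting logic can be tried without a cluster; `fetch` and `hit_counts` are hypothetical helpers built on `urllib`.

```python
from collections import Counter
from typing import Callable
from urllib.request import urlopen


def fetch(url: str, timeout: float = 3.0) -> str:
    """GET a URL and return the response body as stripped text."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode().strip()


def hit_counts(url: str, n: int, get: Callable[[str], str] = fetch) -> Counter:
    """Request `url` n times and count how often each pod name is returned."""
    return Counter(get(url) for _ in range(n))


# Against the cluster above (needs network access to the node):
# print(hit_counts("http://172.16.1.110:30965/hostname.html", 10))
```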

Updating the chart

Modify values.yaml

```shell
[root@k8s-master my-test-app]# pwd
/root/k8s_practice/helm/my-test-app
[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# cat values.yaml
deployname: my-test-app02
replicaCount: 2
images:
  repository: registry.cn-beijing.aliyuncs.com/google_registry/myapp
  # tag changed
  tag: v3
```
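Only one value changed here, `images.tag` from v2 to v3. That changes the image in the Deployment's pod template, so the upgrade starts a rolling update. This Python sketch diffs two values dictionaries by flattening them into dotted keys, so the change can be confirmed before running `helm upgrade`. The dictionaries are hand-written copies of the two values.yaml versions, and the helper names are hypothetical.

```python
def flatten(values: dict, prefix: str = "") -> dict:
    """Flatten nested values into dotted keys: {'images': {'tag': 'v3'}} -> {'images.tag': 'v3'}."""
    flat = {}
    for key, val in values.items():
        path = f"{prefix}{key}"
        if isinstance(val, dict):
            flat.update(flatten(val, path + "."))
        else:
            flat[path] = val
    return flat


def changed_values(old: dict, new: dict) -> dict:
    """Return {dotted_key: (old_value, new_value)} for every value that differs."""
    a, b = flatten(old), flatten(new)
    return {k: (a.get(k), b.get(k)) for k in sorted(a.keys() | b.keys())
            if a.get(k) != b.get(k)}


old = {"deployname": "my-test-app02", "replicaCount": 2,
       "images": {"repository": "registry.cn-beijing.aliyuncs.com/google_registry/myapp",
                  "tag": "v2"}}
new = {**old, "images": {**old["images"], "tag": "v3"}}
print(changed_values(old, new))  # {'images.tag': ('v2', 'v3')}
```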

Upgrade the release

```shell
[root@k8s-master my-test-app]# helm list
NAME          REVISION  UPDATED                   STATUS    CHART               APP VERSION  NAMESPACE
mytest-app01  1         Thu Jul 16 21:18:08 2020  DEPLOYED  my-test-app-v1.0.0  v2.2         default
[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# helm upgrade mytest-app01 .    ### from the parent directory: helm upgrade mytest-app01 my-test-app/
Release "mytest-app01" has been upgraded.
LAST DEPLOYED: Thu Jul 16 21:32:25 2020
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
==> v1/Deployment
NAME           READY  UP-TO-DATE  AVAILABLE  AGE
my-test-app02  2/2    1           2          14m

==> v1/Pod(related)
NAME                            READY  STATUS             RESTARTS  AGE
my-test-app02-58cb6b67fc-4ss4v  1/1    Running            0         14m
my-test-app02-58cb6b67fc-w2nhc  1/1    Running            0         14m
my-test-app02-6b84df49bb-lpww7  0/1    ContainerCreating  0         0s

==> v1/Service
NAME         TYPE      CLUSTER-IP    EXTERNAL-IP  PORT(S)       AGE
my-test-app  NodePort  10.110.82.62  <none>       80:30965/TCP  14m


[root@k8s-master my-test-app]#
[root@k8s-master my-test-app]# helm list
NAME          REVISION  UPDATED                   STATUS    CHART               APP VERSION  NAMESPACE
mytest-app01  2         Thu Jul 16 21:32:25 2020  DEPLOYED  my-test-app-v1.0.0  v2.2         default
```
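To confirm an upgrade from a script, compare the `helm list` row before and after. This Python sketch parses one Helm v2 `helm list` data row. It assumes the UPDATED column is a five-token date such as `Thu Jul 16 21:32:25 2020`, as in the output above. `ListRow` and `parse_list_row` are hypothetical names. The comparison shows REVISION going from 1 to 2 while APP VERSION stays v2.2, because APP VERSION comes from `appVersion` in Chart.yaml, not from the image tag.

```python
from typing import NamedTuple


class ListRow(NamedTuple):
    name: str
    revision: int
    updated: str
    status: str
    chart: str
    app_version: str
    namespace: str


def parse_list_row(line: str) -> ListRow:
    """Parse one data row of Helm v2 `helm list` output.

    Assumes UPDATED is a 5-token date like 'Thu Jul 16 21:32:25 2020'.
    """
    t = line.split()
    return ListRow(t[0], int(t[1]), " ".join(t[2:7]), t[7], t[8], t[9], t[10])


before = parse_list_row(
    "mytest-app01 1 Thu Jul 16 21:18:08 2020 DEPLOYED my-test-app-v1.0.0 v2.2 default")
after = parse_list_row(
    "mytest-app01 2 Thu Jul 16 21:32:25 2020 DEPLOYED my-test-app-v1.0.0 v2.2 default")
print(after.revision - before.revision, after.app_version == before.app_version)  # 1 True
```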

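Access the application with curl as shown earlier: its version has changed from v2 to v3, the image tag set in values.yaml. The APP VERSION column of `helm list` still shows v2.2 because it comes from `appVersion` in Chart.yaml, which was not changed. The upgrade shows up in helm list as REVISION going from 1 to 2.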

Related reading

1. Helm official website: https://helm.sh

Done!
