Crawling CSDN Articles with Spring Boot and VW-Crawler

I: Project overview

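The project uses Spring Boot as the application framework, Redis for data storage, and vw-crawler as the crawling module that fetches CSDN articles. The pom configuration is as follows: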

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>1.4.3.RELEASE</version>
        <relativePath/>
    </parent>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <java.version>1.8</java.version>
        <!-- Not referenced below: the vw-crawler dependency pins its version directly. -->
        <vw-crawler.version>0.0.4</vw-crawler.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
            <exclusions>
                <!-- The crawler runs as a command-line job, so the embedded Tomcat is excluded. -->
                <exclusion>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-starter-tomcat</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>com.github.vector4wang</groupId>
            <artifactId>vw-crawler</artifactId>
            <version>0.0.5</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-redis</artifactId>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.31</version>
        </dependency>
    </dependencies>

Redis configuration

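    # Redis database index (default 0)
    spring.redis.database=0
    # Redis server host
    spring.redis.host=localhost
    # Redis server port
    spring.redis.port=6379
    # Redis server password (empty by default)
    spring.redis.password=
    # Maximum number of connections in the pool (a negative value means no limit)
    spring.redis.pool.max-active=8
    # Maximum time to block waiting for a pooled connection (a negative value means no limit)
    spring.redis.pool.max-wait=-1
    # Maximum number of idle connections in the pool
    spring.redis.pool.max-idle=8
    # Minimum number of idle connections in the pool
    spring.redis.pool.min-idle=0
    # Connection timeout in milliseconds
    spring.redis.timeout=0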

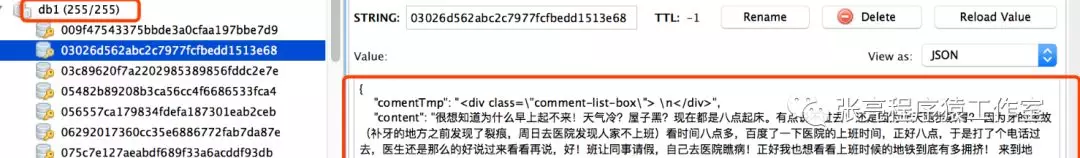

Redis operation wrapper

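    import com.alibaba.fastjson.JSON;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.data.redis.core.StringRedisTemplate;
    import org.springframework.stereotype.Component;
    import org.springframework.util.StringUtils;

    // Blog is the page model shown in the next section; Md5Util is a helper that this post does not show.
    @Component
    public class DataCache {

        @Autowired
        private StringRedisTemplate redisTemplate;

        /**
         * Saves the blog to Redis as JSON, keyed by the MD5 of its URL.
         */
        public void save(Blog blog) {
            redisTemplate.opsForValue().set(blog.getUrlMd5(), JSON.toJSONString(blog));
        }

        /**
         * Looks up a blog by URL. Returns an empty Blog when nothing is stored for it.
         */
        public Blog get(String url) {
            String md5Url = Md5Util.getMD5(url.getBytes());
            String blogStr = redisTemplate.opsForValue().get(md5Url);
            if (StringUtils.isEmpty(blogStr)) {
                return new Blog();
            }
            return JSON.parseObject(blogStr, Blog.class);
        }
    }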
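The post does not show `Md5Util`, so here is a minimal sketch of what such a helper might look like, built only on the JDK. The class name `Md5Sketch` is hypothetical, and the lowercase hex output is an assumption about what the real helper returns.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical stand-in for the Md5Util helper, which the post does not show. */
final class Md5Sketch {

    private Md5Sketch() {
    }

    /** Returns the MD5 digest of the given bytes as 32 lowercase hex characters. */
    static String getMD5(byte[] input) {
        try {
            MessageDigest md = MessageDigest.getInstance("MD5");
            byte[] digest = md.digest(input);
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                // Mask to an unsigned value so negative bytes format as two hex digits.
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // MD5 is a required algorithm on every Java platform.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        String url = "https://blog.csdn.net/qqHJQS";
        // An explicit charset keeps the key identical on machines with different defaults.
        System.out.println(getMD5(url.getBytes(StandardCharsets.UTF_8)));
    }
}
```

Hashing the URL gives every Redis key the same fixed length and avoids special characters in key names. Passing an explicit charset to `getBytes` is a small hardening step over the platform default.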

II: Crawler implementation

1: Page model, with CssSelector annotations locating the data

    import java.io.Serializable;
    import java.util.Date;
    import java.util.List;

    // CssSelector and SelectType are provided by vw-crawler.
    public class Blog implements Serializable {

        @CssSelector(selector = "#mainBox > main > div.blog-content-box > div.article-header-box > div > div.article-info-box > div > span.time", dateFormat = "yyyy年MM月dd日 HH:mm:ss")
        private Date publishDate;

        @CssSelector(selector = "main > div.blog-content-box > div.article-header-box > div.article-header>div.article-title-box > h1", resultType = SelectType.TEXT)
        private String title;

        @CssSelector(selector = "main > div.blog-content-box > div.article-header-box > div.article-header>div.article-info-box > div > div > span.read-count", resultType = SelectType.TEXT)
        private String readCountStr;

        // Filled in by the page parser from readCountStr.
        private int readCount;

        @CssSelector(selector = "#article_content", resultType = SelectType.TEXT)
        private String content;

        @CssSelector(selector = "body > div.tool-box > ul > li:nth-child(1) > button > p", resultType = SelectType.TEXT)
        private int likeCount;

        /**
         * Automatic parsing of lists is not supported yet, so the raw HTML is kept
         * in this intermediate field and needs a second parsing pass.
         */
        @CssSelector(selector = "#mainBox > main > div.comment-box > div.comment-list-container > div.comment-list-box", resultType = SelectType.HTML)
        private String comentTmp;

        private String url;

        private String urlMd5;

        private List<String> comment;

        // Getters and setters omitted.
    }
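To make the `dateFormat` attribute on `publishDate` concrete, the sketch below parses a date string with the same pattern using the JDK's `SimpleDateFormat`. That vw-crawler interprets the pattern in this way is an assumption, and both the class name `DateFormatSketch` and the sample date string are made up for illustration.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;

/** Hypothetical illustration of the dateFormat pattern used on Blog.publishDate. */
final class DateFormatSketch {

    /** The same pattern as in the annotation; the CJK characters are matched literally. */
    static final String PATTERN = "yyyy年MM月dd日 HH:mm:ss";

    private DateFormatSketch() {
    }

    /** Parses text such as "2019年05月14日 15:42:00" in the JVM's default time zone. */
    static Date parsePublishDate(String text) {
        SimpleDateFormat format = new SimpleDateFormat(PATTERN, Locale.ROOT);
        // Strict mode rejects impossible values such as month 13 instead of rolling them over.
        format.setLenient(false);
        try {
            return format.parse(text.trim());
        } catch (ParseException e) {
            throw new IllegalArgumentException("Unexpected date text: " + text, e);
        }
    }

    public static void main(String[] args) {
        // Sample input invented for this sketch, not taken from a real page.
        System.out.println(parsePublishDate("2019年05月14日 15:42:00"));
    }
}
```

If the site changes how it renders the publish time, the pattern has to change with it, so it is worth testing the pattern against a real page before a long crawl.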

2: Configure the request headers, crawler thread count, timeout, and so on, and implement the CrawlerService interface

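    import org.jsoup.nodes.Document;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.CommandLineRunner;
    import org.springframework.core.annotation.Order;
    import org.springframework.stereotype.Component;

    // VWCrawler and CrawlerService are provided by vw-crawler.
    @Component
    @Order
    public class Crawler implements CommandLineRunner {

        @Autowired
        private DataCache dataCache;

        @Override
        public void run(String... strs) {
            // Note: the seed URL and the target pattern name different CSDN accounts
            // (qqHJQS and zhang__l); adjust one of them if that is not intended.
            new VWCrawler.Builder()
                    .setUrl("https://blog.csdn.net/qqHJQS")
                    .setHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.108 Safari/537.36")
                    .setTargetUrlRex("https://blog.csdn.net/zhang__l/article/details/[0-9]+")
                    .setThreadCount(5)
                    .setTimeOut(5000)
                    .setPageParser(new CrawlerService<Blog>() {

                        @Override
                        public void parsePage(Document doc, Blog pageObj) {
                            // Strip the "阅读数:" label and fall back to 0 when no number is left.
                            String readCountText = pageObj.getReadCountStr();
                            String digits = readCountText == null ? "" : readCountText.replace("阅读数:", "").trim();
                            pageObj.setReadCount(digits.isEmpty() ? 0 : Integer.parseInt(digits));
                            pageObj.setUrl(doc.baseUri());
                            pageObj.setUrlMd5(Md5Util.getMD5(pageObj.getUrl().getBytes()));
                            // TODO: the comment list is not processed yet.
                        }

                        @Override
                        public void save(Blog pageObj) {
                            dataCache.save(pageObj);
                        }
                    })
                    .build()
                    .start();
        }
    }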
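Two pieces of the crawler configuration can be checked in isolation with plain JDK code: whether a URL matches the target pattern, and how the read-count text turns into a number. The sketch below is not part of vw-crawler. The class name `CrawlRulesSketch` is hypothetical, and it assumes the library matches the pattern against the whole URL, which this post does not confirm.

```java
import java.util.regex.Pattern;

/** Hypothetical helpers for trying out the crawl rules outside the crawler. */
final class CrawlRulesSketch {

    // Same pattern as in the crawler configuration, with the dots escaped.
    private static final Pattern TARGET =
            Pattern.compile("https://blog\\.csdn\\.net/zhang__l/article/details/[0-9]+");

    private CrawlRulesSketch() {
    }

    /** Returns true when the whole URL matches the article pattern. */
    static boolean isTargetUrl(String url) {
        return TARGET.matcher(url).matches();
    }

    /** Extracts the number from text such as "阅读数:207"; returns 0 when no digits are present. */
    static int parseReadCount(String raw) {
        if (raw == null) {
            return 0;
        }
        String digits = raw.replaceAll("[^0-9]", "");
        return digits.isEmpty() ? 0 : Integer.parseInt(digits);
    }

    public static void main(String[] args) {
        System.out.println(isTargetUrl("https://blog.csdn.net/zhang__l/article/details/12345"));
        System.out.println(isTargetUrl("https://blog.csdn.net/qqHJQS"));
        System.out.println(parseReadCount("阅读数:207"));
        System.out.println(parseReadCount(""));
    }
}
```

One Java detail is worth knowing when cleaning up scraped text: `String.replace` with an empty target inserts the replacement between every character, so `"123".replace("", "0")` yields `"0102030"` rather than a default value. An explicit empty check, as in `parseReadCount`, avoids that trap.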

3: Run the crawler

Right-click CrawlerApplication in the IDE and run it.


Posted on 2019-05-14 15:42 by 张亮13128600812