Bulk import with Phoenix 4.6 (HBase 1.0) on an Apache Hadoop 2.6 cluster, with automatic index creation

Environment:

1. Install Apache Hadoop 2.6.

2. Deploy HBase 1.0.0.

3. Download phoenix-4.6.0-HBase-1.0 from http://mirror.nus.edu.sg/apache/phoenix/phoenix-4.6.0-HBase-1.0/bin/phoenix-4.6.0-HBase-1.0-bin.tar.gz

4. Integrate Phoenix with HBase: copy phoenix-4.6.0-HBase-1.0-server.jar into the HBase lib directory on every RegionServer.

Configure hbase-site.xml

Add the following properties to hbase-site.xml:

<property>

<name>hbase.regionserver.wal.codec</name>

<value>org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec</value>

</property>

<property>

<name>hbase.region.server.rpc.scheduler.factory.class</name>

<value>org.apache.hadoop.hbase.ipc.PhoenixRpcSchedulerFactory</value>

<description>Factory to create the Phoenix RPC Scheduler that uses separate queues for index and metadata updates</description>

</property>

<property>

<name>hbase.rpc.controllerfactory.class</name>

<value>org.apache.hadoop.hbase.ipc.controller.ServerRpcControllerFactory</value>

<description>Factory to create the Phoenix RPC Scheduler that uses separate queues for index and metadata updates</description>

</property>

<property>

<name>hbase.coprocessor.regionserver.classes</name>

<value>org.apache.hadoop.hbase.regionserver.LocalIndexMerger</value>

</property>

<property>

<name>hbase.master.loadbalancer.class</name>

<value>org.apache.phoenix.hbase.index.balancer.IndexLoadBalancer</value>

</property>

<property>

<name>hbase.coprocessor.master.classes</name>

<value>org.apache.phoenix.hbase.index.master.IndexMasterObserver</value>

</property>

Without the hbase.regionserver.wal.codec setting, creating an index fails with the following error:

java.sql.SQLException: ERROR 1029 (42Y88): Mutable secondary indexes must have the hbase.regionserver.wal.codec property set to org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec in the hbase-sites.xml of every region server tableName=INDEX_CUSTOM
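Since this error comes from a missing or mistyped entry in hbase-site.xml, it can help to check the file before restarting the RegionServers. The following is a minimal sketch, not part of Phoenix or HBase: the helper names `read_properties` and `missing_settings` are hypothetical, and the sample XML is a trimmed stand-in for a real hbase-site.xml.

```python
import xml.etree.ElementTree as ET

# Property named in the ERROR 1029 message, with the value it must have.
REQUIRED = {
    "hbase.regionserver.wal.codec":
        "org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec",
}


def read_properties(xml_text):
    """Return {name: value} for every <property> element in the XML text."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def missing_settings(xml_text, required=REQUIRED):
    """List the required property names that are absent or have another value."""
    props = read_properties(xml_text)
    return [name for name, want in required.items() if props.get(name) != want]


# Trimmed sample standing in for a real hbase-site.xml (assumption).
SAMPLE = """<configuration>
  <property>
    <name>hbase.regionserver.wal.codec</name>
    <value>org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    print(missing_settings(SAMPLE))
```

To check a real file, read its contents and pass the text to `missing_settings`; an empty list means the codec entry is present with the expected value. The check has to pass on every RegionServer, as the error message states.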

5. Open the Phoenix shell.

6. Create the table and the index:

CREATE TABLE IF NOT EXISTS USPO (

state CHAR(2) NOT NULL,

city VARCHAR NOT NULL,

population BIGINT CONSTRAINT my_pk PRIMARY KEY (state,city));

CREATE INDEX index_population ON USPO (city, state);

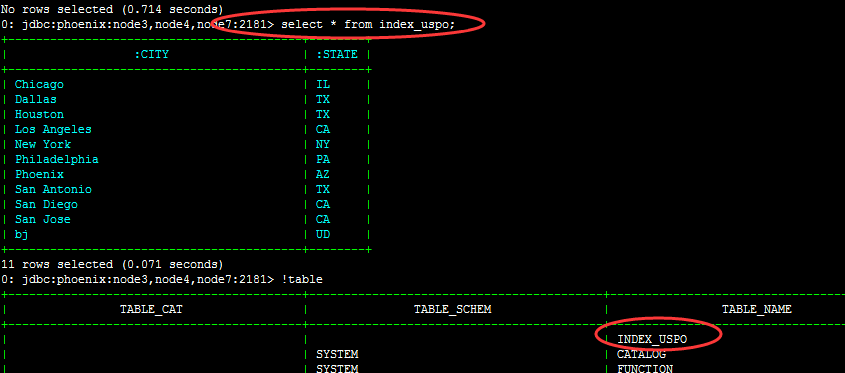

Check that the table was created:

7. Upload the test data to HDFS:

uopu.csv

NY,New York,8143197

CA,Los Angeles,3844829

IL,Chicago,2842518

TX,Houston,2016582

PA,Philadelphia,1463281

AZ,Phoenix,1461575

TX,San Antonio,1256509

CA,San Diego,1255540

TX,Dallas,1213825

CA,San Jose,912332
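Before uploading, the CSV can be checked against the USPO column definitions (state CHAR(2) NOT NULL, city VARCHAR NOT NULL, population BIGINT). The sketch below is only an illustration: `check_rows` is a hypothetical helper, and the rules it applies are a simplified reading of the table definition, not Phoenix's own validation.

```python
import csv
import io
import re


def check_rows(csv_text):
    """Return (line_number, reason) pairs for rows that do not fit the USPO columns."""
    problems = []
    for lineno, row in enumerate(csv.reader(io.StringIO(csv_text)), start=1):
        if len(row) != 3:
            problems.append((lineno, "expected 3 fields"))
            continue
        state, city, population = row
        # state is CHAR(2) NOT NULL: non-empty and at most two characters.
        if not 1 <= len(state) <= 2:
            problems.append((lineno, "state must be 1-2 characters"))
        # city is VARCHAR NOT NULL.
        if city == "":
            problems.append((lineno, "city must not be empty"))
        # population is a nullable BIGINT: empty or a plain integer.
        if population != "" and not re.fullmatch(r"-?[0-9]+", population):
            problems.append((lineno, "population must be an integer"))
    return problems


# First rows of uopu.csv from the listing above.
SAMPLE = "NY,New York,8143197\nCA,Los Angeles,3844829\nIL,Chicago,2842518\n"

if __name__ == "__main__":
    print(check_rows(SAMPLE))
```

An empty result means every row has three fields with values that fit the column types used in the CREATE TABLE statement.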

8. Run the bulk load command:

hadoop jar /home/hadoop/phoenix-4.6.0-HBase-1.0-bin/phoenix-4.6.0-HBase-1.0-client.jar org.apache.phoenix.mapreduce.CsvBulkLoadTool -t uspo -i /phoenix/uopu.csv -z node3,node4,node7:2181
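When the load is run repeatedly (for example for several tables or files), the command can be assembled from its parts instead of being retyped. This is a rough sketch: `bulk_load_command` is a hypothetical helper, and it uses only the options that appear in the command above (-t, -i, -z).

```python
import shlex


def bulk_load_command(client_jar, table, input_path, zookeeper_quorum):
    """Build the argument list for the CsvBulkLoadTool invocation shown above."""
    return [
        "hadoop", "jar", client_jar,
        "org.apache.phoenix.mapreduce.CsvBulkLoadTool",
        "-t", table,             # target Phoenix table
        "-i", input_path,        # CSV path on HDFS
        "-z", zookeeper_quorum,  # ZooKeeper quorum, as in the original command
    ]


if __name__ == "__main__":
    argv = bulk_load_command(
        "/home/hadoop/phoenix-4.6.0-HBase-1.0-bin/phoenix-4.6.0-HBase-1.0-client.jar",
        "uspo",
        "/phoenix/uopu.csv",
        "node3,node4,node7:2181",
    )
    # Print the shell-quoted command; on a cluster node it could be run
    # with subprocess.run(argv, check=True) instead.
    print(shlex.join(argv))
```

Passing the arguments as a list avoids shell quoting problems if a path or table name ever contains spaces.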

This launches a MapReduce job.

9. Query the data:

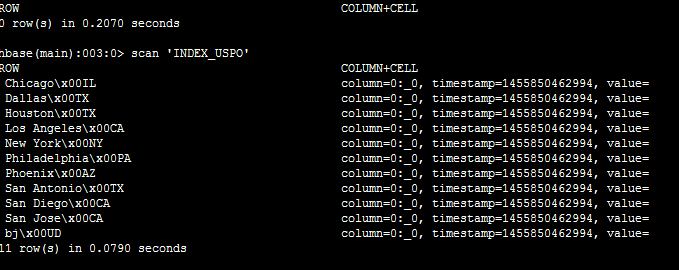

10. Query the data in HBase

Problem:

Querying the uspo table in Phoenix returned no rows.

Reference: https://phoenix.apache.org/secondary_indexing.html
