kubectl commands

kubectl get svc -n ingress-nginx

kubectl get ns

kubectl api-resources

kubectl get pod -n kube-system    # list the pods in the kube-system namespace

kubectl get pods --namespace=kube-system

kubectl get rs -o wide

kubectl get pod --show-labels -A -l app

kubectl get rs -o wide

kubectl get ds

kubectl apply -f filebeat-daemonset.yaml

kubectl get svc

kubectl get pods -n ingress-nginx

kubectl get all -n namespace

kubectl get ingress -n namespace

kubectl get pods --namespace=ingress-nginx

kubectl exec -it ingress-nginx-controller-xn85x -n ingress-nginx -- /bin/bash

kubectl get pod,service -n ingress-nginx -o wide

kubectl get pods -n ingress-nginx

kubectl describe pod ingress-nginx-controller-9f64489f5-7pvwf -n ingress-nginx

kubectl get pod -n dev -o wide

kubectl get endpoints

kubectl describe svc nginx

ipvsadm -Ln

kubectl logs

kubectl get pod -n namespace -o wide|grep service

kubectl get pod -n namespace podname -o yaml

apiVersion: v1
kind: Pod
metadata:
  annotations:
    artifact.spinnaker.io/location: jfm
    artifact.spinnaker.io/name: jfm-qyweb
    artifact.spinnaker.io/type: kubernetes/deployment
    artifact.spinnaker.io/version: ""
    moniker.spinnaker.io/application: jfm
    moniker.spinnaker.io/cluster: deployment jfm-qyweb
  creationTimestamp: "2022-12-14T09:19:39Z"
  generateName: jfm-qyweb-77675d44d5-
  labels:
    app: jfm-qyweb
    app.kubernetes.io/managed-by: spinnaker
    app.kubernetes.io/name: jfm
    pod-template-hash: 77675d44d5
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:artifact.spinnaker.io/location: {}
          f:artifact.spinnaker.io/name: {}
          f:artifact.spinnaker.io/type: {}
          f:artifact.spinnaker.io/version: {}
          f:moniker.spinnaker.io/application: {}
          f:moniker.spinnaker.io/cluster: {}
        f:generateName: {}
        f:labels:
          .: {}
          f:app: {}
          f:app.kubernetes.io/managed-by: {}
          f:app.kubernetes.io/name: {}
          f:pod-template-hash: {}
        f:ownerReferences:
          .: {}
          k:{"uid":"297f38ab-a04a-4a6a-85ed-ad420d7c5084"}: {}
      f:spec:
        f:containers:
          k:{"name":"nginx"}:
            .: {}
            f:image: {}
            f:imagePullPolicy: {}
            f:name: {}
            f:ports:
              .: {}
              k:{"containerPort":80,"protocol":"TCP"}:
                .: {}
                f:containerPort: {}
                f:name: {}
                f:protocol: {}
            f:resources: {}
            f:terminationMessagePath: {}
            f:terminationMessagePolicy: {}
            f:volumeMounts:
              .: {}
              k:{"mountPath":"/home/www/qingyun"}:
                .: {}
                f:mountPath: {}
                f:name: {}
              k:{"mountPath":"/home/www/qingyun/upload/"}:
                .: {}
                f:mountPath: {}
                f:name: {}
                f:subPath: {}
              k:{"mountPath":"/var/log/nginx/"}:
                .: {}
                f:mountPath: {}
                f:name: {}
                f:subPath: {}
          k:{"name":"php-fpm"}:
            .: {}
            f:image: {}
            f:imagePullPolicy: {}
            f:name: {}
            f:ports:
              .: {}
              k:{"containerPort":9000,"protocol":"TCP"}:
                .: {}
                f:containerPort: {}
                f:protocol: {}
            f:resources: {}
            f:terminationMessagePath: {}
            f:terminationMessagePolicy: {}
            f:volumeMounts:
              .: {}
              k:{"mountPath":"/home/www/qingyun"}:
                .: {}
                f:mountPath: {}
                f:name: {}
        f:dnsPolicy: {}
        f:enableServiceLinks: {}
        f:imagePullSecrets:
          .: {}
          k:{"name":"harbor1.r0wfppse.com"}: {}
        f:initContainers:
          .: {}
          k:{"name":"code"}:
            .: {}
            f:command: {}
            f:image: {}
            f:imagePullPolicy: {}
            f:name: {}
            f:resources: {}
            f:terminationMessagePath: {}
            f:terminationMessagePolicy: {}
            f:volumeMounts:
              .: {}
              k:{"mountPath":"/src-www/"}:
                .: {}
                f:mountPath: {}
                f:name: {}
        f:restartPolicy: {}
        f:schedulerName: {}
        f:securityContext: {}
        f:terminationGracePeriodSeconds: {}
        f:volumes:
          .: {}
          k:{"name":"persistent-vol"}:
            .: {}
            f:name: {}
            f:persistentVolumeClaim:
              .: {}
              f:claimName: {}
          k:{"name":"wwwroot"}:
            .: {}
            f:emptyDir: {}
            f:name: {}
    manager: kube-controller-manager
    operation: Update
    time: "2022-12-14T09:19:39Z"
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:status:
        f:conditions:
          k:{"type":"ContainersReady"}:
            .: {}
            f:lastProbeTime: {}
            f:lastTransitionTime: {}
            f:message: {}
            f:reason: {}
            f:status: {}
            f:type: {}
          k:{"type":"Initialized"}:
            .: {}
            f:lastProbeTime: {}
            f:lastTransitionTime: {}
            f:status: {}
            f:type: {}
          k:{"type":"Ready"}:
            .: {}
            f:lastProbeTime: {}
            f:lastTransitionTime: {}
            f:message: {}
            f:reason: {}
            f:status: {}
            f:type: {}
        f:containerStatuses: {}
        f:hostIP: {}
        f:initContainerStatuses: {}
        f:phase: {}
        f:podIP: {}
        f:podIPs:
          .: {}
          k:{"ip":"10.244.3.92"}:
            .: {}
            f:ip: {}
        f:startTime: {}
    manager: kubelet
    operation: Update
    subresource: status
    time: "2022-12-14T09:19:42Z"
  name: jfm-qyweb-77675d44d5-gmwcs
  namespace: jfm
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: jfm-qyweb-77675d44d5
    uid: 297f38ab-a04a-4a6a-85ed-ad420d7c5084
  resourceVersion: "25916315"
  uid: b2f5f0e2-2be0-439a-927d-2713594b5eea

spec:
  containers:
  - image: harbor1.R0WfPPse.com/jfmys/all/qingyun-web-nginx:develop-1.8.5.3-20221214153740
    imagePullPolicy: Always
    name: nginx
    ports:
    - containerPort: 80
      name: nginx-port-80
      protocol: TCP
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /home/www/qingyun
      name: wwwroot
    - mountPath: /var/log/nginx/
      name: persistent-vol
      subPath: JFM/nginx/
    - mountPath: /home/www/qingyun/upload/
      name: persistent-vol
      subPath: JFM/qingyun/upload/
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-2lw9v
      readOnly: true
  - image: harbor1.R0WfPPse.com/jfmys/all/qingyun-web-runenv:develop-1.8.5.3-20221214154532
    imagePullPolicy: Always
    name: php-fpm
    ports:
    - containerPort: 9000
      protocol: TCP
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /home/www/qingyun
      name: wwwroot
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-2lw9v
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  imagePullSecrets:
  - name: harbor1.r0wfppse.com
  initContainers:
  - command:
    - sh
    - -c
    - cp -rf /code/* /src-www/ && chmod -R 777 /src-www/
    image: harbor1.R0WfPPse.com/jfmys/all/qingyun-web-code:develop-1.8.5.3-20221214153751
    imagePullPolicy: Always
    name: code
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /src-www/
      name: wwwroot
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-2lw9v
      readOnly: true
  nodeName: k8s-uat-work1.148962587001
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - emptyDir: {}
    name: wwwroot
  - name: persistent-vol
    persistentVolumeClaim:
      claimName: jfm-nfs-pvc
  - name: kube-api-access-2lw9v
    projected:
      defaultMode: 420
      sources:
      - serviceAccountToken:
          expirationSeconds: 3607
          path: token
      - configMap:
          items:
          - key: ca.crt
            path: ca.crt
          name: kube-root-ca.crt
      - downwardAPI:
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
            path: namespace

status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2022-12-14T09:19:41Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2022-12-14T09:19:39Z"
    message: 'containers with unready status: [php-fpm]'
    reason: ContainersNotReady
    status: "False"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2022-12-14T09:19:39Z"
    message: 'containers with unready status: [php-fpm]'
    reason: ContainersNotReady
    status: "False"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2022-12-14T09:19:39Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: docker://130081879b6872f32f026461a2d519e8aaf865022604508ebcfffa2e35687e23
    image: harbor1.R0WfPPse.com/jfmys/all/qingyun-web-nginx:develop-1.8.5.3-20221214153740
    imageID: docker-pullable://harbor1.R0WfPPse.com/jfmys/all/qingyun-web-nginx@sha256:3b1d7fbc40730a95cdb859ec0b63d7f3bc3315fbf40412e73c0b613da792e425
    lastState: {}
    name: nginx
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2022-12-14T09:19:41Z"
  - containerID: docker://4a78ac198a32d2f6c391da2957e13885bc8070e3041e01bc401cc066a7bb93fb
    image: harbor1.R0WfPPse.com/jfmys/all/qingyun-web-runenv:develop-1.8.5.3-20221214154532
    imageID: docker-pullable://harbor1.R0WfPPse.com/jfmys/all/qingyun-web-runenv@sha256:67ba76e6fa9280d758021e99cbfdabeda80ab86c05cd9248f0387b9eae06db07
    lastState:
      terminated:
        containerID: docker://4a78ac198a32d2f6c391da2957e13885bc8070e3041e01bc401cc066a7bb93fb
        exitCode: 127
        finishedAt: "2022-12-14T09:22:39Z"
        message: 'failed to create shim task: OCI runtime create failed: runc create
          failed: unable to start container process: exec: "/docker-entrypoint.sh":
          stat /docker-entrypoint.sh: no such file or directory: unknown'
        reason: ContainerCannotRun
        startedAt: "2022-12-14T09:22:39Z"
    name: php-fpm
    ready: false
    restartCount: 5
    started: false
    state:
      waiting:
        message: back-off 2m40s restarting failed container=php-fpm pod=jfm-qyweb-77675d44d5-gmwcs_jfm(b2f5f0e2-2be0-439a-927d-2713594b5eea)
        reason: CrashLoopBackOff
  hostIP: 172.31.27.193
  initContainerStatuses:
  - containerID: docker://f75a55e5d6720b5f8e0c5f5a3bfbb977ef6136a7b33b617aa73ceb9048dcc9dd
    image: harbor1.R0WfPPse.com/jfmys/all/qingyun-web-code:develop-1.8.5.3-20221214153751
    imageID: docker-pullable://harbor1.R0WfPPse.com/jfmys/all/qingyun-web-code@sha256:5a25b6dfedf52e7b8304abeb2fb7fc3a9f9a51786e58f59a8ac82ef302a61db1
    lastState: {}
    name: code
    ready: true
    restartCount: 0
    state:
      terminated:
        containerID: docker://f75a55e5d6720b5f8e0c5f5a3bfbb977ef6136a7b33b617aa73ceb9048dcc9dd
        exitCode: 0
        finishedAt: "2022-12-14T09:19:40Z"
        reason: Completed
        startedAt: "2022-12-14T09:19:40Z"
  phase: Running
  podIP: 10.244.3.92
  podIPs:
  - ip: 10.244.3.92
  qosClass: BestEffort
  startTime: "2022-12-14T09:19:39Z"
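The part of this dump that matters for diagnosis is status.containerStatuses: the php-fpm container has restartCount: 5, is waiting with reason CrashLoopBackOff, and last exited with code 127 because /docker-entrypoint.sh does not exist in the image. As a rough sketch, the same fields can be pulled out with a jsonpath query instead of reading the whole YAML. The function name container_status and the KUBECTL override variable are made up for illustration and are not part of the original notes.

```shell
#!/bin/sh
# Hypothetical helper: print one tab-separated line per container with its
# name, restart count, current waiting reason and last exit code.
# Fields that are absent (for example no waiting state) print as empty.
# KUBECTL is an assumed override; it defaults to the kubectl binary on PATH.
container_status() {
    pod="$1"   # pod name
    ns="$2"    # namespace
    "${KUBECTL:-kubectl}" get pod "$pod" -n "$ns" \
        -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.restartCount}{"\t"}{.state.waiting.reason}{"\t"}{.lastState.terminated.exitCode}{"\n"}{end}'
}

# Example call for the pod shown above:
# container_status jfm-qyweb-77675d44d5-gmwcs jfm
```

An exit code of 127 usually means the command to run was not found, which matches the "no such file or directory" message for the entrypoint.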

kubectl create -f pod1.yaml

kubectl get rc,service

kubectl describe node name

kubectl delete pod corxxxx

kubectl get pod nginx-deploy-7db697dfbd-2qh7v -o yaml

kubectl get svc -n ingress-nginx

kubectl describe pod/ingress-nginx-controller-mngr9 -n ingress-nginx

kubectl get pods,svc -n ingress-nginx -o wide

kubectl exec -it pod/ingress-nginx-controller-8bwxb -n ingress-nginx -- bash

kubectl get pods ingress-nginx-controller-xn85x -n ingress-nginx -o yaml

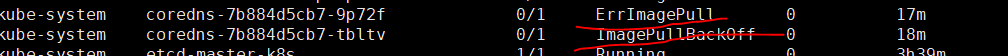

1. The coredns pod is stuck in the ImagePullBackOff state.

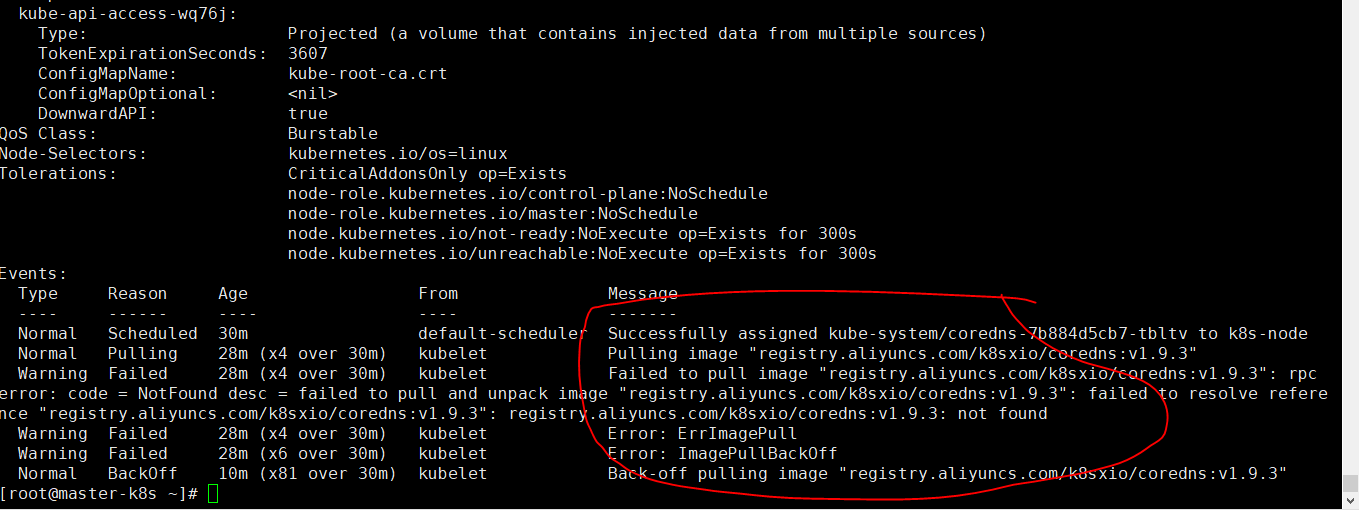

2. Describe the pod with kubectl describe pod coredns-7b884d5cb7-tbltv -n kube-system. The output confirms that the problem is caused by the image not having been pulled.

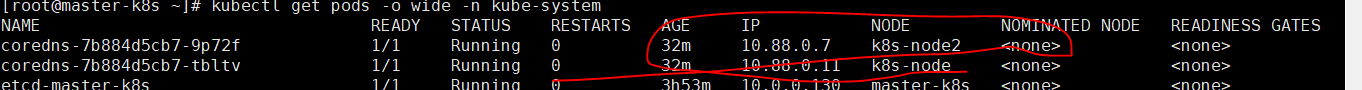

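3. Check which nodes the pod is scheduled on; those nodes presumably do not have the coredns image.

4. Pull coredns from the Docker registry with ctr -n k8s.io i pull docker.io/coredns/coredns:1.9.3, then retag it with ctr -n k8s.io i tag docker.io/coredns/coredns:1.9.3 registry.aliyuncs.com/k8sxio/coredns:v1.9.3

5. Finally, pull the image on both worker nodes. The pod then runs normally.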

6. The kubelet log shows the following error: Jun 9 22:15:56 master kubelet: E0609 22:15:56.367821 12257 node_container_manager_linux.go:61] "Failed to create cgroup". The initial suspicion was that this is related to the SystemdCgroup setting.

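7. On inspection that setting was fine, so it was not the cause. The actual problem was that the installed system package (presumably systemd) was too old. The fix was yum -y remove system followed by yum install system to install the latest version, after which kubelet started normally.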
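Step 4 above has to be repeated on every node that may run the pod, so it can help to wrap the two ctr commands in a small function. This is only a sketch: the name mirror_image and the DRY_RUN switch are hypothetical, and it assumes the nodes use containerd with the k8s.io namespace, as the ctr commands in the notes do.

```shell
#!/bin/sh
# Sketch of step 4: pull an image that is reachable, then retag it under the
# name the pod spec expects, inside containerd's k8s.io namespace.
# mirror_image and DRY_RUN are illustrative names, not from the original notes.
mirror_image() {
    src="$1"   # image that can actually be pulled
    dst="$2"   # image name referenced by the pod
    run=""
    # With DRY_RUN set, only print the commands instead of executing them.
    [ -n "${DRY_RUN:-}" ] && run="echo"
    $run ctr -n k8s.io i pull "$src" &&
    $run ctr -n k8s.io i tag "$src" "$dst"
}

# Run on each node, for example:
# mirror_image docker.io/coredns/coredns:1.9.3 registry.aliyuncs.com/k8sxio/coredns:v1.9.3
```

Setting DRY_RUN=1 first is a cheap way to check the image names before touching the node's image store.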
